Are LLMs good at natural sciences? A complex benchmark across 10 subject areas

… therefore, instead of trying to create gold out of lead, you should focus your efforts on studying and mastering the STEM subjects…

MixtralChat

Introduction

As the quote from MixtralChat shows, large language models (LLMs) readily assert the importance of mastering STEM subjects (science, technology, engineering, and mathematics). But how good are they at these subjects themselves? Can they truly help students with exam preparation or assist scientists in their research? How deep is their understanding of complex scientific topics?

It's well known that LLMs can summarize textbooks and articles, and some can even carry out basic calculations, such as working through a simple Feynman diagram for a particle interaction. But can they think critically and handle complex, nuanced problems? This is what a joint research team from Toloka and CERN is currently exploring and testing.

How we created a benchmark dataset

To evaluate whether LLMs are good at science-related questions, we first needed to create a specialized dataset covering the domains we are most interested in. This dataset would serve as the benchmark for assessing the knowledge of existing LLMs. We chose not to use existing datasets, as most of them are overly simplistic and consist of multiple-choice questions that don’t accurately assess the depth of subject knowledge. An ideal dataset should include a variety of free-form, open-ended questions across different domains, ensuring the questions are complex and reflect a nuanced understanding of the subject.

For this project, we focused on natural science topics and collaborated with a team of domain experts who are active researchers in fields such as high-energy physics, immunology, and cell biology. We asked them to write questions that would be particularly challenging for LLMs, requiring multiple steps or the application of advanced, specialized concepts. The result is a dataset of 180 questions spanning 10 subdomains, namely Astronomy, Cell Biology, Bioinformatics, Immunology, Machine Learning, General Biology, Computer Science, General Physics, Mathematics, and Neuroscience. The exact distribution of questions across these domains is shown in the graph below.

Here you can also see examples of questions from the four best-represented domains in this dataset.

Evaluating 5 LLMs on 10 criteria

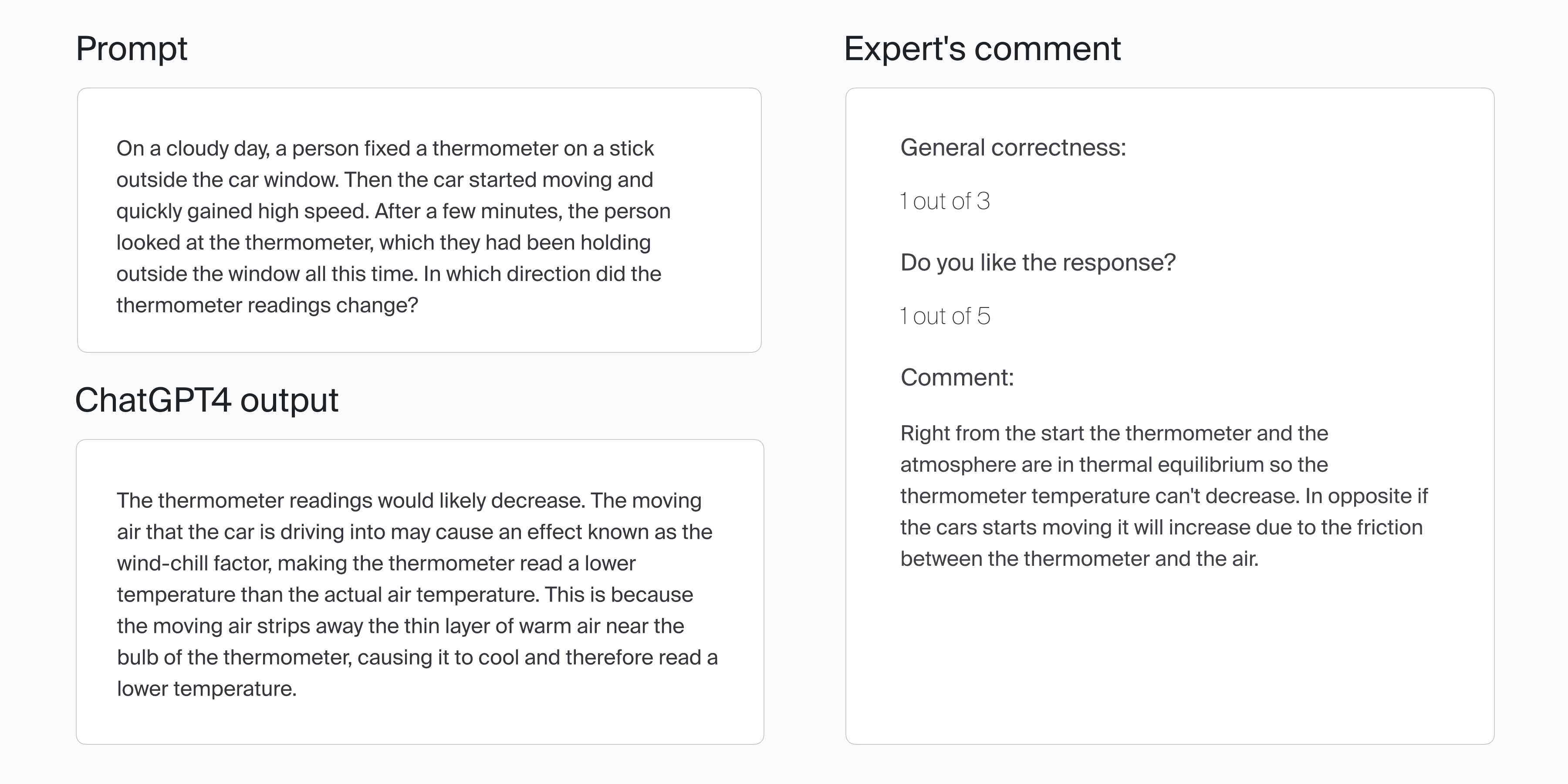

After finalizing the dataset, we used it to evaluate five LLMs: Qwen2-7B-Instruct, Llama-3-8B-Instruct, Mixtral-8x7B, Gemini-1.0-pro, and GPT-4o. We prompted each model with the questions from our dataset and collected its answers. Experts then evaluated the LLM-generated answers against 10 specific criteria that we developed.

There are two high-level criteria:

Correctness of the response (scored from 1 to 3), which evaluates the general correctness of the LLM-generated response.

Expert satisfaction with the response (scored from 1 to 5), where we ask the expert how much they liked the LLM-generated response.

In the following example, ChatGPT-4-generated answers are evaluated using these high-level criteria.

The remaining eight criteria are designed to explore different aspects of the answers and provide supporting evidence for their overall correctness. They also help explain why an expert may find a response unsatisfactory. Each criterion is designed to be independent, so that it covers one specific aspect of the model's response.

We evaluated:

Reasoning and problem-solving. Is the response logically coherent and does it follow a clear problem-solving methodology?

Conceptual and factual accuracy. Does the model use scientific, mathematical, and technical concepts correctly, and does it rely on valid, up-to-date data and scientifically accepted facts?

Mathematical and technical correctness. Does the response correctly use mathematical concepts, formulas, and calculations?

Depth and breadth. How comprehensive and thorough is the answer? Does the model demonstrate a deep and broad understanding of the subject?

Absence of hallucinations. Does the response rely on existing data, concepts, and information without including fabricated statements or inaccuracies?

Relevance. Does the response address the question asked?

Compliance with specific requirements. How well does the response address the specific requirements posed by the user?

Clarity, writing style, and language used. Is the response clear, understandable, and free of ambiguity?
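To make the rubric concrete, here is a minimal Python sketch of how a single expert rating could be recorded and validated. It is not the study's actual annotation tooling: the `RUBRIC` mapping, the `ExpertRating` class, and the snake_case criterion names are hypothetical. The 1 to 3 and 1 to 5 ranges for the two high-level criteria come from the text; the 1 to 5 range for the eight supporting criteria is an assumption based on the "out of 5" averages reported for them.

```python
from dataclasses import dataclass

# Hypothetical rubric: criterion name -> (min score, max score).
# The two high-level ranges come from the text; the 1-5 range for the
# eight supporting criteria is an assumption.
RUBRIC: dict[str, tuple[int, int]] = {
    "correctness": (1, 3),
    "expert_satisfaction": (1, 5),
    "reasoning_and_problem_solving": (1, 5),
    "conceptual_and_factual_accuracy": (1, 5),
    "mathematical_and_technical_correctness": (1, 5),
    "depth_and_breadth": (1, 5),
    "absence_of_hallucinations": (1, 5),
    "relevance": (1, 5),
    "compliance_with_requirements": (1, 5),
    "clarity_style_and_language": (1, 5),
}


@dataclass
class ExpertRating:
    """One expert's scores for one model's answer to one question."""

    model: str
    question_id: str
    domain: str
    scores: dict[str, int]

    def __post_init__(self) -> None:
        # Every criterion must be scored, and no unknown criteria are allowed.
        missing = RUBRIC.keys() - self.scores.keys()
        unknown = self.scores.keys() - RUBRIC.keys()
        if missing or unknown:
            raise ValueError(f"missing={sorted(missing)}, unknown={sorted(unknown)}")
        # Each score must fall inside its criterion's range.
        for name, value in self.scores.items():
            low, high = RUBRIC[name]
            if not low <= value <= high:
                raise ValueError(f"{name}={value} is outside [{low}, {high}]")


# Usage: a rating that gives every criterion its maximum score.
top = {name: high for name, (_, high) in RUBRIC.items()}
rating = ExpertRating("GPT-4o", "q-001", "Neuroscience", top)
```

Keeping each criterion as a separate field, rather than collapsing them into a single score, mirrors the design goal that each criterion covers an independent aspect of the answer.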

What we learned about the models

In the end, how good are LLMs at answering questions in the natural sciences? The answer is that it depends: some models clearly stand out on specific criteria.

The overall winner, not surprisingly, was GPT-4o, which consistently achieved the highest scores on the high-level criteria. Notably, all models scored relatively low on expert satisfaction. Annotators explained that while the responses were generally acceptable, they fell short of expectations and were significantly below human-level performance. You can see a detailed comparison of all the models on the high-level criteria in the graphs below.

A detailed look at the supporting criteria reveals some interesting results. All models performed near-perfectly on Absence of Hallucinations (an average of 4.67 out of 5) and reasonably well on Relevance; Compliance with Requirements; and Clarity, Writing Style, and Language Used, scoring above 4 out of 5 in all of these areas. However, our questions were not designed to provoke hallucinations; had they been, the scores would likely have been much lower.

On the other hand, LLMs struggled with Depth and Breadth (3.74 out of 5), Reasoning and Problem-Solving (3.75 out of 5), and Conceptual and Factual Accuracy (3.82 out of 5).
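One straightforward way to arrive at per-criterion averages like these is to pool every rated answer and take a plain mean per criterion. The Python sketch below assumes exactly that, with each record mapping a criterion name to its score; the function names are hypothetical, and the post does not state whether its figures are pooled means or means of per-model means (the two coincide only when every model has the same number of ratings).

```python
from statistics import mean


def criterion_means(records: list[dict[str, int]]) -> dict[str, float]:
    """Average each criterion over all rated answers (pooled across models)."""
    if not records:
        raise ValueError("need at least one rated answer")
    criteria = records[0].keys()
    # Each record maps criterion name -> score for one (model, question) pair.
    return {c: mean(r[c] for r in records) for c in criteria}


def weakest_criteria(means: dict[str, float], k: int = 3) -> list[str]:
    """Return the k criteria with the lowest average score, lowest first."""
    return sorted(means, key=means.__getitem__)[:k]


# Usage with made-up scores (not data from the study):
toy = [
    {"relevance": 5, "depth_and_breadth": 3},
    {"relevance": 4, "depth_and_breadth": 4},
]
print(criterion_means(toy))  # {'relevance': 4.5, 'depth_and_breadth': 3.5}
print(weakest_criteria(criterion_means(toy), k=1))  # ['depth_and_breadth']
```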

You can see a comparison of different criteria for all the models in the graph below.

The graphs below compare model performance in the five most common domains: Bioinformatics, General Physics, Immunology, Machine Learning, and Neuroscience. While it is tempting to conclude from these results that the models do better in one domain than in another, the reality is more complicated. A fair comparison would require the questions in every domain to have the same average difficulty. However, there is no simple way to estimate difficulty, especially since the questions were written by experts and are, by design, meant to go beyond general knowledge.

We did find something interesting when comparing different models within the same domain. GPT-4o performs consistently well in every domain except Bioinformatics, whereas Llama-3-8B-Instruct shows the opposite pattern: it has average or below-average results in almost all areas but outperforms the other models in Bioinformatics. This may be because the models were exposed to different amounts of training data in these domains, or because something in the model's design or in our Bioinformatics questions reversed the usual ranking. We'll need to investigate further.
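Assuming every model answered the same set of questions, comparing models inside a single domain holds question difficulty fixed, which is why a within-domain comparison is more meaningful than a cross-domain one. Here is a minimal Python sketch of that ranking, assuming a flat list of `(model, domain, score)` rows where `score` is any one criterion (for example, correctness). The function name and input shape are illustrative, not part of the study's tooling.

```python
from collections import defaultdict
from collections.abc import Iterable
from statistics import mean


def rank_models_by_domain(
    rows: Iterable[tuple[str, str, float]],
) -> dict[str, list[tuple[str, float]]]:
    """Group (model, domain, score) rows and rank models by mean score per domain."""
    # domain -> model -> scores for that model's answers in that domain
    buckets: defaultdict[str, defaultdict[str, list[float]]] = defaultdict(
        lambda: defaultdict(list)
    )
    for model, domain, score in rows:
        buckets[domain][model].append(score)
    # Within each domain, sort models from highest to lowest mean score.
    return {
        domain: sorted(
            ((model, mean(scores)) for model, scores in per_model.items()),
            key=lambda pair: pair[1],
            reverse=True,
        )
        for domain, per_model in buckets.items()
    }


# Usage with made-up correctness scores (not data from the study):
toy_rows = [
    ("model-a", "Bioinformatics", 1), ("model-a", "Bioinformatics", 2),
    ("model-b", "Bioinformatics", 3), ("model-b", "Bioinformatics", 3),
    ("model-a", "General Physics", 3), ("model-b", "General Physics", 2),
]
for domain, ranking in rank_models_by_domain(toy_rows).items():
    print(domain, ranking)
```

Comparing these means across domains would still mix model ability with question difficulty, which is the caveat raised earlier.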

Summary

Our final verdict is that LLMs should be used with caution in natural science domains. Our results reveal significant issues in LLM responses, pointing to serious limitations in their reliability as sources of information on natural science topics. LLMs can produce answers that appear correct (clearly written, in an appropriate style, and on topic), but these responses can be misleading to non-experts.

If you are interested in conducting detailed evaluations of your LLM for a domain of your choice, please reach out to us.