Balancing Power and Efficiency: The Rise of Small Language Models

Language models are essential for enabling machines to understand and generate human language. Large Language Models (LLMs) often receive the most attention, boasting billions of parameters and excelling across various tasks. However, a new trend is emerging with small language models (SLMs). These smaller models strike a balance between computational power and efficiency, making artificial intelligence (AI) more accessible and widely adopted.

What are Language Models?

A language model is an algorithm that calculates the probability of each word in a language occurring in a particular context. Because there are so many words in any language, the model is taught to compute probabilities only for words in a particular vocabulary, which is a relatively small set of words or parts of words in a language.
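Here is a minimal sketch of how a neural language model typically turns raw scores into a probability for every vocabulary entry. It produces one score (a logit) per entry and normalizes the scores with the softmax function. The tiny vocabulary and the scores below are made up for illustration. A real model's vocabulary has tens of thousands of entries or more, and its scores depend on the context.

```python
import math

def softmax(logits):
    """Convert raw scores into probabilities that sum to 1."""
    # Subtracting the largest score improves numerical stability without changing the result.
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]

# Hypothetical vocabulary mixing whole words and a word piece
# (the "##" prefix follows BERT's WordPiece convention for word suffixes).
vocab = ["the", "cat", "sat", "on", "##ing"]
# Hypothetical scores a model might assign to each entry in some context.
logits = [2.0, 0.5, 1.0, -1.0, 0.0]

probs = softmax(logits)
for token, p in zip(vocab, probs):
    print(f"{token:>6}: {p:.3f}")
```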

In simpler terms, language models can continue texts. The model calculates the probability of possible continuations of a text and suggests them. It assigns probabilities to sequences of words and predicts the next word in a sentence given the previous words. Its main goal is to understand the structure and patterns of language to generate coherent and contextually appropriate text.
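Modern language models use neural networks, but the core idea of estimating how likely each next word is, given the previous words, can be sketched with a toy bigram model that looks only at the single preceding word. This is a simplified illustration built on a made-up three-sentence corpus. It is not how modern models are trained. The `continue_text` helper greedily appends the most probable next word at each step.

```python
from collections import Counter, defaultdict

def train_bigram(corpus):
    """Count how often each word follows each other word."""
    counts = defaultdict(Counter)
    for sentence in corpus:
        words = sentence.lower().split()
        for prev, nxt in zip(words, words[1:]):
            counts[prev][nxt] += 1
    return counts

def next_word_probs(counts, prev):
    """Estimate P(next word | previous word) from relative frequencies."""
    followers = counts.get(prev)
    if not followers:
        return {}
    total = sum(followers.values())
    return {w: c / total for w, c in followers.items()}

def continue_text(counts, prompt, max_words=5):
    """Extend the prompt one word at a time, always picking the most probable word."""
    words = prompt.lower().split()
    for _ in range(max_words):
        probs = next_word_probs(counts, words[-1])
        if not probs:
            break  # the last word was never followed by anything in the corpus
        words.append(max(probs, key=probs.get))
    return " ".join(words)

corpus = [
    "the cat sat on the mat",
    "the cat ate the fish",
    "the dog sat on the rug",
]
model = train_bigram(corpus)
print(next_word_probs(model, "the"))  # "cat" gets 2/6, every other follower 1/6
print(continue_text(model, "the dog", max_words=3))  # the dog sat on the
```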

Transformers are a fundamental architecture in modern natural language processing that has radically reshaped how models work with sequential data. The main innovation of transformers is the self-attention mechanism, which allows the model to evaluate the importance of different words in a sentence relative to each other.
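The self-attention step can be sketched in a few lines. This minimal single-head version follows the scaled dot-product attention formulation introduced with the original Transformer architecture, written in pure Python for readability. The toy token vectors and identity projection matrices are assumptions chosen only so the example runs. Real models learn the projection matrices and stack many attention heads and layers.

```python
import math

def softmax(xs):
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    s = sum(exps)
    return [e / s for e in exps]

def matmul(a, b):
    """Multiply matrix a (n x m) by matrix b (m x p), both given as lists of rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]

def self_attention(x, wq, wk, wv):
    """Single-head scaled dot-product self-attention.

    x: n token vectors (n x d_model); wq, wk, wv: projections (d_model x d_k).
    Returns (outputs, weights); weights[i][j] is how much token i attends to token j.
    """
    q, k, v = matmul(x, wq), matmul(x, wk), matmul(x, wv)
    d_k = len(k[0])
    weights = []
    for qi in q:
        # Score every token j by the dot product of query i and key j, scaled by sqrt(d_k).
        scores = [sum(a * b for a, b in zip(qi, kj)) / math.sqrt(d_k) for kj in k]
        weights.append(softmax(scores))
    # Each output vector is the attention-weighted average of the value vectors.
    outputs = [[sum(w * vj[c] for w, vj in zip(wi, v)) for c in range(len(v[0]))]
               for wi in weights]
    return outputs, weights

# Three toy token vectors with d_model = 2, and identity projections (assumed).
x = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
identity = [[1.0, 0.0], [0.0, 1.0]]
outputs, weights = self_attention(x, identity, identity, identity)
for row in weights:
    print([round(w, 3) for w in row])
```

In this toy setup, the first token attends equally to itself and to the third token, since both share its direction, and less to the second token, which is orthogonal to it.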

Why is the development of modern AI models centered around language models? Because language models are at the core of modern natural language processing (NLP) and artificial intelligence. Their existence and rapid development are driven by the need to enable machines to understand, interpret, and generate human language.

Researchers started developing language models because human language is fundamental to intelligence and communication. Practical needs for better communication, information search, and automation, combined with the historical context of AI research and advances in machine learning and data availability, have made language models a priority.

Types of Language Models

Large Language Models

Large language models (LLMs), such as GPT-3 with 175 billion parameters or BERT-large with 340 million parameters, are designed to perform well across all kinds of natural language processing tasks. Parameters are the variables of a model that are adjusted during the learning process. A large language model is a neural network trained on extensive and diverse datasets, which allows it to capture complex language patterns and long-range dependencies.

Such highly versatile models can be fine-tuned to become domain-specific language models. LLMs are great for various complex tasks, from text generation and translation to summarization and advanced research tasks. However, LLMs require significant computational resources, memory, and storage, making them expensive to train and deploy. They also consume a lot of energy and have slower inference times, which can be a drawback for real-time applications.
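To see why size drives memory and storage costs, a rough back-of-the-envelope estimate helps. The memory needed just to hold a model's weights is its parameter count multiplied by the bytes used per parameter. The sketch below assumes 16-bit (2-byte) weights and uses parameter counts cited in this article. It ignores activations, optimizer state during training, and framework overhead, so real requirements are higher.

```python
def weight_memory_gb(num_params, bytes_per_param=2):
    """Approximate memory for the weights alone, in gigabytes (1 GB = 1e9 bytes).

    bytes_per_param: 4 for 32-bit floats, 2 for 16-bit floats (assumed default).
    Activations, optimizer state, and runtime overhead are not included.
    """
    return num_params * bytes_per_param / 1e9

models = {
    "GPT-3": 175e9,
    "BERT-large": 340e6,
    "DistilBERT": 66e6,
}
for name, n in models.items():
    print(f"{name}: ~{weight_memory_gb(n):.2f} GB of weights in 16-bit precision")
```

At 2 bytes per parameter, GPT-3's weights alone come to roughly 350 GB, far more than a single consumer device can hold, while DistilBERT's fit in a fraction of a gigabyte.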

Small Language Models

Small language models are neural networks designed to perform natural language processing tasks with a significantly reduced number of parameters and with fewer computational resources than larger language models.

Small models are trained on more limited datasets and often use techniques like knowledge distillation to retain the essential features of larger models while significantly reducing their size. Because they require less computational power and storage, they are much more cost-effective to train and deploy, and they can run even on mobile devices. Their faster inference times make them suitable for real-time applications like chatbots and mobile apps.
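Knowledge distillation trains a small "student" model to imitate a larger "teacher". One widely used formulation, the soft-target approach popularized by Hinton and colleagues, softens both models' output distributions with a temperature and penalizes the gap between them, alongside the usual loss on the true labels. The sketch below computes that loss for a single example in pure Python. It is not the exact recipe of any particular model mentioned here. The logits, `temperature`, and weighting `alpha` are illustrative assumptions. Training frameworks compute the same quantity over batches and use automatic differentiation to update the student.

```python
import math

def softmax(logits, temperature=1.0):
    scaled = [x / temperature for x in logits]
    m = max(scaled)
    exps = [math.exp(x - m) for x in scaled]
    s = sum(exps)
    return [e / s for e in exps]

def distillation_loss(student_logits, teacher_logits, true_label,
                      temperature=2.0, alpha=0.5):
    """Blend a soft-target loss (match the teacher) with a hard-label loss (match the data).

    Soft part: KL divergence from the temperature-softened teacher distribution to the
    student's, multiplied by temperature**2 to keep its scale comparable across temperatures.
    Hard part: ordinary cross-entropy of the student against the true label.
    """
    p_teacher = softmax(teacher_logits, temperature)
    p_student = softmax(student_logits, temperature)
    soft = sum(pt * math.log(pt / ps) for pt, ps in zip(p_teacher, p_student))
    soft *= temperature ** 2
    hard = -math.log(softmax(student_logits)[true_label])
    return alpha * soft + (1 - alpha) * hard

# Hypothetical logits over a 3-class output; class 0 is the correct label.
teacher = [4.0, 1.0, 0.5]
close_student = [3.8, 1.1, 0.4]
far_student = [0.5, 1.0, 4.0]
print(distillation_loss(close_student, teacher, true_label=0))
print(distillation_loss(far_student, teacher, true_label=0))
```

A student whose outputs resemble the teacher's gets a much lower loss than one that disagrees with it, which is the signal that pushes the smaller model toward the larger model's behavior.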

Small language models, such as DistilBERT with 66 million parameters or TinyBERT with approximately 15 million parameters, are optimized for efficiency. They are trained on more specific datasets or on subsets of larger corpora. Capable small language models are more accessible than their larger counterparts to organizations with limited resources, including smaller organizations and individual developers.

While LLMs might have hundreds of billions of parameters, SLMs typically have anywhere from a few million to a few billion. In contrast to all-purpose LLMs, small models are often designed for specialized tasks, which they can handle particularly well. Despite their smaller size, these models can be remarkably effective, especially for specific tasks or when optimized using advanced training techniques.

There is not yet a clearly defined distinction between LLMs and SLMs, and there is no agreed-upon parameter count that separates them. Some sources consider a model large once it has 100 million or more parameters, while others set the threshold at 100 billion or more. Likewise, small language models are described as having anywhere from 1 to 10 million parameters or, by other accounts, up to 10 billion.

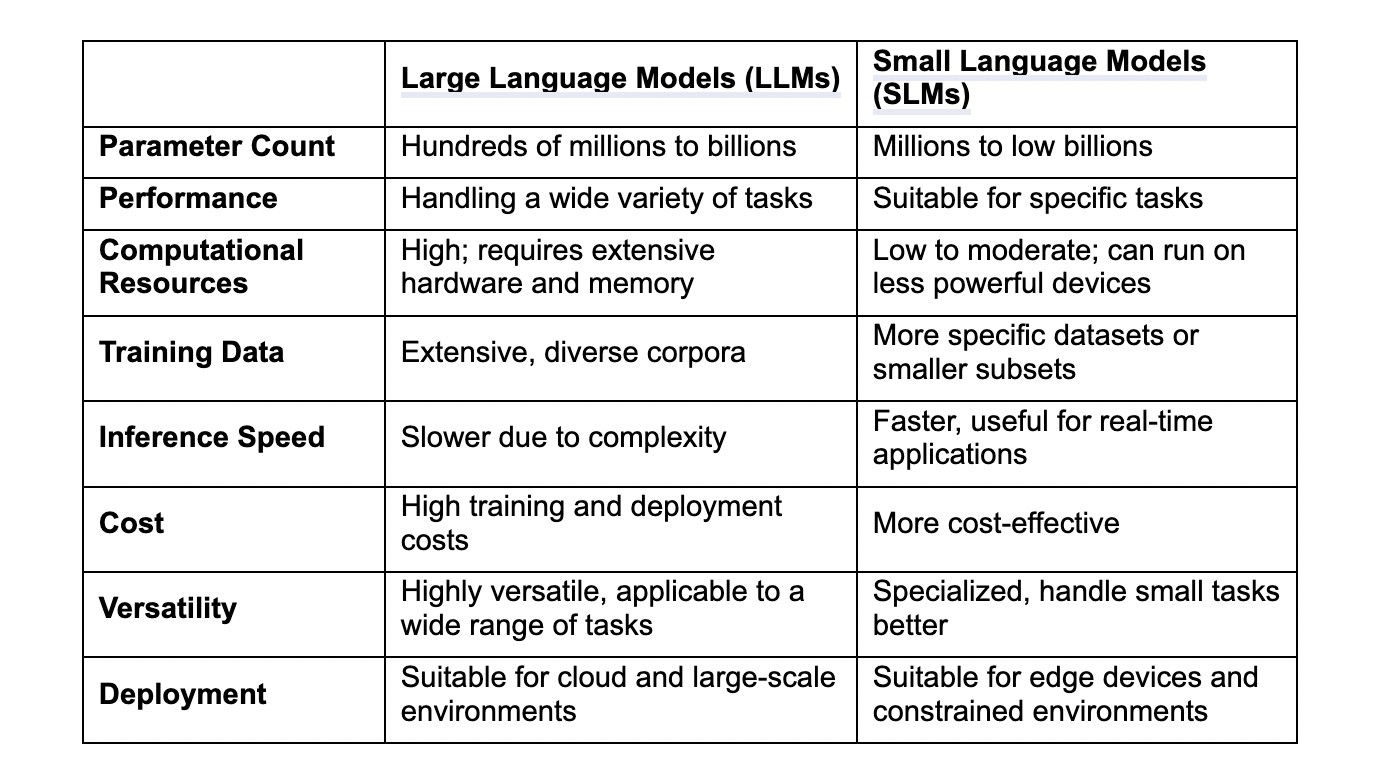

Large and small language models differ not only in the number of parameters but also in the amount of data they are trained on and process, the storage they require, and their neural architecture. A small language model requires significantly fewer resources both to train and to run.

Comparative Summary: Small Language Models Vs. Large Language Models

Why Are SLMs Necessary?

Small language models are significant for several reasons. Firstly, many devices we use daily (smartphones, tablets, and even smart home gadgets) don't possess much processing power. Small language models need relatively little processing power, memory, and storage, so they work well in these environments. They keep devices running smoothly without draining their resources.

Cost is another critical factor. Large language models are costly to train and use because they require a lot of computing power. Small models are much cheaper to run, meaning that cutting-edge NLP becomes affordable for more companies and developers, even with limited budgets. They also consume fewer resources, lowering operating costs and reducing environmental impact.

Benefits of Small Language Models

Lower computational requirements. Small language models require significantly less computational power and memory compared to large language models. This makes them more accessible for use on devices with limited resources, like smartphones, tablets, and edge devices.

Reduced training and inference costs. Due to their smaller size, these models are cheaper to train and deploy. This cost-effectiveness benefits organizations with limited budgets or those looking to deploy NLP solutions at scale without high infrastructure costs.

Speed. With fewer parameters, small language models have faster inference times. This is crucial for real-time applications such as chatbots, virtual assistants, and mobile applications, where quick responses are essential for a good user experience. Small models can also be fine-tuned more quickly with less data compared to larger models. This adaptability is helpful for domain-specific applications where training data might be limited, and rapid iteration is necessary.
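A common rule of thumb explains why fewer parameters mean faster inference. A forward pass through a transformer costs roughly 2 floating-point operations (FLOPs) per parameter for each token processed: one multiply and one add per weight. The sketch below applies this rule to parameter counts cited in this article. The device speed and utilization figures are hypothetical. Real inference is often limited by memory bandwidth rather than raw compute, so the numbers are best read as relative comparisons, not speed predictions.

```python
def flops_per_token(num_params):
    """Rule of thumb: ~2 FLOPs per parameter for each token processed."""
    return 2 * num_params

def tokens_per_second(num_params, device_flops, utilization=0.3):
    """Compute-bound estimate of tokens processed per second.

    device_flops: peak FLOP/s of the hardware (assumed).
    utilization: fraction of peak actually achieved (assumed; varies widely in practice).
    """
    return device_flops * utilization / flops_per_token(num_params)

DEVICE_FLOPS = 1e12  # hypothetical device with 1 TFLOP/s peak compute
for name, n in [("GPT-3", 175e9), ("BERT-large", 340e6), ("DistilBERT", 66e6)]:
    print(f"{name}: ~{tokens_per_second(n, DEVICE_FLOPS):,.1f} tokens/s")

ratio = flops_per_token(175e9) / flops_per_token(66e6)
print(f"GPT-3 needs ~{ratio:,.0f}x more compute per token than DistilBERT")
```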

Deployment in resource-constrained environments. Small language models can be easily deployed in environments with constrained computational resources, including IoT devices, embedded systems, and other edge deployments where large models would be impractical. Their reduced size and complexity also make them easier to port across platforms, which benefits applications that need to run on hardware with different capabilities.

Wider accessibility. Lower costs and reduced hardware requirements make small language models more accessible to small organizations, academic institutions, and even individual developers. This contributes to broader access to advanced NLP technologies, allowing a wider range of stakeholders to benefit from AI breakthroughs.

Small Language Models Use Cases

Customer Support and Chatbots

SLMs are used to develop AI-powered chatbots and virtual assistants that handle customer inquiries, provide personalized responses, and automate routine tasks. Their ability to understand and generate natural language efficiently makes them well suited to enhancing customer service experiences.

Sentiment Analysis and Social Media Monitoring

SLMs analyze text data from social media platforms and online forums to perform sentiment analysis, identifying trends, public opinion, and potential issues. This capability helps businesses and organizations monitor their online presence and customer sentiment.

Language Translation and Localization

SLMs contribute to language translation services by accurately translating text between languages, improving accessibility to information across global audiences. They can handle nuances in language and context, facilitating effective communication in multilingual environments.

Content Generation and Summarization

In media and publishing, SLMs are employed for content-generation tasks such as writing articles, generating product descriptions, and creating summaries of long documents or reports. They can produce coherent and contextually relevant content quickly and efficiently.

Best Small Language Models

LLaMA 3

LLaMA 3 is openly available, which means its model weights and architecture are accessible to the public. Developed by Meta, the 8-billion-parameter version of LLaMA 3 is part of the company's strategy to empower the AI community with trustworthy tools. Meta aims to foster responsible AI usage by providing models that are not only powerful but also adaptable to various applications and scenarios. By making LLaMA 3 openly available, Meta encourages collaboration and contributions from researchers, developers, and enthusiasts worldwide.

Phi-3

Phi-3 represents Microsoft's commitment to advancing AI accessibility by offering powerful yet cost-effective solutions. Phi-3 models are openly available, reflecting an emphasis on transparency and accessibility: developers and researchers can integrate them into different environments. Despite being smaller than many other models in the field, Phi-3-mini, with 3.8 billion parameters, has performed competitively with considerably larger models in benchmark tests.

Gemma

Google's Gemma family of AI models represents a significant advancement in openly available AI technology. Gemma models are part of Google's initiative to provide open models that are accessible to developers worldwide, and the first release came in 2 billion and 7 billion parameter sizes. This openness allows developers to explore, modify, and integrate the models into their applications with greater freedom and control. Gemma models are engineered to be lightweight, making them suitable for deployment on various devices and platforms.

Small Language Models Challenges

SLMs are optimized for specific tasks or domains, which often allows them to use computational resources and memory more efficiently than larger models. By focusing on a narrow domain, efficient small language models can achieve higher accuracy and relevance within their specialized area.

However, SLMs may lack the broad knowledge base necessary to generalize well across diverse topics or tasks. This limitation can reduce performance or relevance when they are applied outside their trained domain. Moreover, organizations may need to deploy multiple SLMs, each specialized in a different domain or task, to cover a wide range of needs effectively. Managing and integrating these models into a cohesive AI infrastructure can be resource-intensive.

Although niche-focused SLMs offer efficiency advantages, their limited generalization capabilities require careful consideration. Striking a balance between these trade-offs is necessary to optimize AI infrastructure and make effective use of both small and large language models.
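One way to manage several specialized SLMs, while still falling back on a more general model, is a thin routing layer that decides which model answers each request. The sketch below is a deliberately simple, hypothetical keyword-based router. The model names are placeholders, and each "model" is a stub function rather than a real inference call. A production system might instead use a small classifier model to do the routing.

```python
from dataclasses import dataclass
from typing import Callable

@dataclass
class Route:
    name: str                      # hypothetical model identifier
    keywords: set                  # words that signal this model's domain
    handler: Callable[[str], str]  # stub standing in for a real model call

def make_router(routes, fallback):
    """Return a function that sends each query to the best-matching specialized model."""
    def route(query: str) -> str:
        words = {w.strip(".,!?").lower() for w in query.split()}
        # Choose the route whose keywords overlap most with the query's words.
        best = max(routes, key=lambda r: len(r.keywords & words))
        if not best.keywords & words:
            # No specialist matches: defer to a general-purpose (e.g. larger) model.
            return fallback(query)
        return best.handler(query)
    return route

routes = [
    Route("support-slm", {"refund", "order", "shipping"}, lambda q: f"support-slm: {q}"),
    Route("sentiment-slm", {"review", "feedback", "opinion"}, lambda q: f"sentiment-slm: {q}"),
]
router = make_router(routes, fallback=lambda q: f"general-llm: {q}")

print(router("Where is my order?"))          # support-slm: Where is my order?
print(router("Write a poem about the sea"))  # general-llm: Write a poem about the sea
```

Keeping the routing logic separate from the models means a specialist can be added or swapped without touching the others. The fallback route reflects the balance between small and large models described above.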

Future of Small Language Models

SLMs contribute to democratizing AI by making advanced technology more accessible to a broader audience. Their smaller size and efficient design lower barriers to entry for developers, researchers, startups, and communities that may have limited resources or expertise in deploying AI solutions.

Collaboration among researchers, stakeholders, and communities will drive further innovation in SLMs. Open dialogue, shared resources, and collective efforts are essential to maximizing AI's positive impact on society.

As the differences between SLMs and LLMs gradually diminish, new ways to apply AI in real-world applications will emerge. As research progresses, SLMs are expected to become more efficient in terms of computational requirements while maintaining or even improving their performance.

Small language models represent a pivotal advancement in democratizing AI, making it more accessible, adaptable, and beneficial to many users and applications. As technology evolves and barriers diminish, SLMs will continue to shape a future in which AI effectively enhances human capabilities.