Base LLM vs. instruction-tuned LLM

Not all LLMs are built the same. Base LLMs and instruction-tuned LLMs are designed for different purposes and suit different applications. This article explains how the two types differ in their training methods and use cases, to help clarify which one is best suited to a given need.

What base LLMs are

A base LLM is the core, or raw, version of a language model. It’s trained on tons of text data, from websites to books to articles. This training teaches it about language and a wide range of topics, but not how to follow instructions. So if you ask it a question, it might respond with something relevant, but it may not give you exactly what you wanted.

Base LLMs are often called foundation models because they form the foundation for more specialized or task-oriented models. These foundational models are typically the first step in developing an AI system: they’re built by training on large, general datasets to learn the basics of language, knowledge, and context. Once established, these foundation models can be fine-tuned for specific applications, like creating instruction-tuned or task-specific LLMs.

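These models are typically trained with unsupervised (more precisely, self-supervised) learning: they learn to predict the next word (in practice, the next token) in a sequence from the preceding words, so the training text supplies its own labels and no human annotation is needed. A base LLM works well for exploring ideas or asking a general question when you don’t need a specific or consistent answer, though it may need extra guidance to stay on track.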
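To make the next-word objective concrete, here is a toy Python sketch. It is not how real LLMs are built: they use neural networks over subword tokens and much longer contexts. It only shows the shape of the idea: raw text is turned into (context, next word) pairs, so the labels come from the text itself, and simple counts stand in for the network. The function names and the two-word context window are illustrative assumptions.

```python
from collections import Counter, defaultdict

def next_word_pairs(text, context_size=2):
    """Turn raw text into (context, next_word) training examples.

    No human labeling is needed: the "label" is simply the word that
    actually follows the context in the text.
    """
    words = text.split()
    return [
        (tuple(words[i - context_size:i]), words[i])
        for i in range(context_size, len(words))
    ]

def train_counts(pairs):
    """Toy stand-in for a neural network: count which word follows each context."""
    table = defaultdict(Counter)
    for context, nxt in pairs:
        table[context][nxt] += 1
    return table

def predict_next(table, context):
    """Return the most frequent word seen after `context`, or None if the context is unseen."""
    followers = table.get(tuple(context))
    return followers.most_common(1)[0][0] if followers else None

corpus = "the cat sat on the mat and the cat sat on the sofa"
pairs = next_word_pairs(corpus)
model = train_counts(pairs)
print(pairs[0])                             # → (('the', 'cat'), 'sat')
print(predict_next(model, ["cat", "sat"]))  # → on
```

A real base LLM replaces the count table with a network that assigns a probability to every possible next token and is trained to make the observed next token as likely as possible.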

Key characteristics of base LLMs

Training. Base LLMs are trained on vast amounts of unlabeled data, such as public internet sources, books, and research papers, to recognize and predict language patterns from the statistical relationships in that data. This makes them versatile and lets them draw on a broad range of knowledge across many topics.

General knowledge. Base LLMs cover a broad range of topics because they are trained on such diverse data. Strictly speaking, this is not understanding, since the models do not possess consciousness. It’s more accurate to say that LLMs learn patterns in data and use them to predict and generate responses that resemble human understanding.

Flexible but not instruction-focused. Base LLMs are not specifically trained to follow instructions, so users may need to phrase a request differently, for example as the start of a text for the model to continue or with a few worked examples, to get the answer they want. These models are designed for general use and can handle a wide range of prompts, but they have not learned to carry out specific tasks on command, so they need additional prompting or guidance.
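One common way to "ask differently" with a base model is few-shot prompting: instead of issuing a command, you write the beginning of a document whose natural continuation is the answer. The Python sketch below only builds prompt strings and does not call any model; the "English:/French:" layout and the function name are arbitrary illustrative choices.

```python
def few_shot_prompt(examples, query):
    """Frame a task as a document for a base model to continue.

    Each (source, target) pair demonstrates the pattern, and the prompt
    stops exactly where the model's continuation should begin.
    """
    blocks = [f"English: {src}\nFrench: {tgt}\n" for src, tgt in examples]
    blocks.append(f"English: {query}\nFrench:")
    return "\n".join(blocks)

# A direct command: an instruction-tuned model usually handles this as-is,
# while a base model may just continue it as ordinary text.
instruction = "Translate 'Where is the station?' into French."

# The same request reframed as a pattern for a base model to complete.
examples = [("Good morning.", "Bonjour."), ("Thank you very much.", "Merci beaucoup.")]
print(few_shot_prompt(examples, "Where is the station?"))
```

Given the bare instruction, a base model might continue with more text that resembles the instruction (for example, further exercises), whereas the few-shot prompt makes a French sentence the most likely continuation.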

Foundation for fine-tuning. Base LLMs are often used as the starting point for building more specialized models. Developers can fine-tune these foundation models on specific datasets or on examples of specialized tasks, adapting a base LLM for applications such as answering questions, summarizing text, or translating languages. Fine-tuning for such natural language processing (NLP) tasks turns a base model into an instruction-tuned or task-oriented LLM.

Base LLM applications

General knowledge responses. Base models can answer questions on broad topics. Since they’re trained on large amounts of text covering a wide range of topics, they can provide quick answers on almost anything, from history to science to pop culture. However, they might not accurately answer very detailed or specialized questions.

Translations and summaries. Base LLMs can produce rough translations between languages and summaries of long documents. Although they are not as accurate as models specifically tuned for translation or summarization, they can provide quick, general overviews useful for a preliminary understanding.

Basic chatbots. While they’re not specialized for in-depth conversation, base models can handle general queries and casual conversation. They can answer basic questions, give general advice, or chat in a friendly way.

Base language models can function without fine-tuning: thanks to their extensive training on large, general datasets, they’re capable in many applications right out of the box and flexible enough to handle a lot of tasks on their own.

Fine-tuning makes a big difference when a model needs to be precise, for example when answering complex, domain-specific questions or performing highly specialized tasks. It helps the model become more consistent and accurate in the areas where a user needs it to be dependable.

Instruction-tuned LLMs

An instruction-tuned LLM is a base model that has been further fine-tuned to understand and follow specific instructions. It learns from examples that show how to respond to clear prompts: instruction tuning is supervised fine-tuning on instruction-response pairs. This helps the model better understand human commands and interact in a conversational or task-oriented way.

Instruction-response pairs often come from real user interactions or from examples written or reviewed by humans, showing the model how to respond to specific instructions. This fine-tuning improves the model’s ability to answer specific questions, stay on task, and understand requests more accurately.
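To make this concrete, here is a minimal Python sketch of how one instruction-response pair might be turned into a supervised training example. It assumes a hypothetical whitespace tokenizer and an Alpaca-style "### Instruction / ### Response" template, both illustrative; real pipelines use the model’s own tokenizer and prompt format. A common convention, shown here, is to mask the prompt tokens in the labels so that the model is trained only on producing the response.

```python
# Label value that PyTorch's cross-entropy loss ignores by default (ignore_index=-100).
IGNORE_INDEX = -100

def make_toy_tokenizer():
    """Hypothetical whitespace tokenizer that assigns a new integer ID to each new word."""
    vocab = {}
    def tokenize(text):
        return [vocab.setdefault(tok, len(vocab)) for tok in text.split()]
    return tokenize

def build_sft_example(instruction, response, tokenize):
    """Turn one instruction-response pair into (input_ids, labels).

    Training is still next-token prediction over the whole sequence, but
    prompt positions are masked out of the labels, so the loss only
    rewards producing the response.
    """
    # Illustrative template; each instruction-tuned model family defines its own.
    prompt = f"### Instruction:\n{instruction}\n\n### Response:\n"
    prompt_ids = tokenize(prompt)
    response_ids = tokenize(response)
    input_ids = prompt_ids + response_ids
    labels = [IGNORE_INDEX] * len(prompt_ids) + response_ids
    return input_ids, labels

tokenize = make_toy_tokenizer()
ids, labels = build_sft_example(
    "Summarize this: the meeting moved to Friday.",
    "Meeting moved to Friday.",
    tokenize,
)
print(sum(label == IGNORE_INDEX for label in labels), "of", len(labels), "labels masked")  # → 11 of 15 labels masked
```

Here the labels line up position by position with `input_ids`; in frameworks such as Hugging Face Transformers, the one-position shift needed for next-token prediction is typically applied inside the model’s loss computation.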

Instruction-tuned models are trained not just on raw text but also on instruction-based examples. So instead of just knowing facts, they’ve been taught how to respond when a user gives them a clear prompt like “Summarize this,” “List the steps to do that,” or “Explain this topic at a beginner level.” This added layer makes them much better at:

Following complex, multi-step instructions without going off track

Giving consistent answers if you ask similar questions multiple times

Responding in a specific style or structure, like creating bulleted lists or writing in a formal tone

Key characteristics of instruction-tuned LLMs

Improved instruction following. Instruction-tuned models are much better at interpreting complex prompts and following step-by-step requests. After instruction fine-tuning, a model produces more structured content and clearer summaries and can answer questions at a requested level of detail.

Better at handling complex requests. Because they are trained on examples of multi-step tasks, instruction-tuned LLMs handle detailed prompts more effectively by working through complex instructions part by part. For instance, a user can ask for a step-by-step guide, and the model is likely to produce precise, sequential instructions.

Task specialization. Instruction-tuned models can perform specific, detailed tasks like summarizing, translating, creating outlines, or giving advice in a structured format. They’re ideal when a particular style or structure is needed.

Responsive to tone and style. Because instruction-tuned models are trained on a mix of prompt styles, they can also respond in different tones or levels of formality, depending on what’s asked. This flexibility allows them to adapt to various contexts, like giving a friendly, engaging answer for a social media post or a professional response for a business report.

Better understanding of user intent. Perhaps one of the most impactful features is that instruction-tuned LLMs are likelier to pick up on the intent behind a user’s question or command. They’re generally more attuned to what a person might be asking, even if it’s not explicitly spelled out. This makes interactions smoother and reduces the need for clarification, as the instruction-tuned LLM is more likely to interpret what the user meant on the first try.

Instruction-tuned LLM applications

Creative writing and content ideas. Instruction-tuned LLMs are good at writing stories, articles, and other types of creative content. Since they follow instructions closely, these models can adapt to different writing styles and formats, for example, formal reports, casual blog posts, or marketing materials. They’re also useful for brainstorming, such as coming up with unique perspectives or fresh takes on various topics.

Business and productivity tools. Instruction-tuned models enhance productivity by taking on routine tasks. For example, they can draft business emails or generate reports from raw data, allowing professionals to focus on more strategic, higher-value tasks. These models can also automate document formatting, write summaries for meeting notes, or generate templates for project proposals. Because they can follow precise instructions, they’re helpful for tasks that require a specific structure or format.

Customer support and virtual assistance. Instruction-tuned models can answer a wide range of questions about troubleshooting, product information, or account issues clearly and consistently. Because these models can follow detailed instructions, they can provide step-by-step solutions, like guiding a customer through resetting a password or troubleshooting a device. They can handle repetitive questions reliably, which frees human agents to focus on more complex customer needs. The models can also adjust their tone to the brand’s style or the customer’s mood: friendly, empathetic, or professional, as required.

Instruction-tuning techniques

Low-rank adaptation (LoRA). LoRA is a way to fine-tune large language models for specific tasks without needing massive computing power. Instead of updating all of the model’s parameters, LoRA freezes the original weights and trains a small pair of low-rank matrices alongside selected weight matrices; the product of each pair is added to the frozen weights to specialize the model for a particular job. This method is particularly valuable for instruction tuning because it allows the model to be adapted with much lower computational and memory resources than traditional full fine-tuning.
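The core idea can be sketched in plain Python. The weight matrix W stays frozen; LoRA learns two small matrices, A (r × d_in) and B (d_out × r), and adds their product, scaled by alpha / r, to the layer’s output. Initializing B to zero, as the original LoRA paper does, means training starts from the unmodified model. This is a toy illustration: the class name, sizes, and values are assumptions, and the gradient-descent training of A and B is omitted. Real implementations, such as the Hugging Face PEFT library, apply the same idea inside neural network layers.

```python
import random

def matvec(m, v):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(w * x for w, x in zip(row, v)) for row in m]

def matmul(a, b):
    """Multiply two matrices stored as lists of rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]

class LoRALinear:
    """Hypothetical toy layer computing y = W x + (alpha / r) * B (A x)."""

    def __init__(self, weight, rank=2, alpha=4, seed=0):
        rng = random.Random(seed)
        d_out, d_in = len(weight), len(weight[0])
        self.weight = weight  # frozen pretrained weights, d_out x d_in
        # A: small random values (r x d_in); B: zeros (d_out x r), so B A starts at 0.
        self.A = [[rng.gauss(0.0, 0.01) for _ in range(d_in)] for _ in range(rank)]
        self.B = [[0.0] * rank for _ in range(d_out)]
        self.scale = alpha / rank

    def forward(self, x):
        base = matvec(self.weight, x)               # frozen path
        update = matvec(self.B, matvec(self.A, x))  # low-rank trainable path
        return [b + self.scale * u for b, u in zip(base, update)]

    def trainable_params(self):
        """Only A and B are trained; W is never updated."""
        return len(self.A) * len(self.A[0]) + len(self.B) * len(self.B[0])

    def merged_weight(self):
        """Fold the update into W for inference: W + (alpha / r) * B A."""
        delta = matmul(self.B, self.A)
        return [[w + self.scale * d for w, d in zip(w_row, d_row)]
                for w_row, d_row in zip(self.weight, delta)]

W = [[1.0, 0.0, 2.0], [0.0, 1.0, -1.0]]  # toy frozen 2 x 3 weight
layer = LoRALinear(W, rank=1, alpha=2)
x = [1.0, 2.0, 3.0]
print(layer.forward(x))  # → [7.0, -1.0]: B starts at zero, so the output equals W x
```

The savings come from the rank: for a 4096 × 4096 weight matrix and r = 8, A and B together hold 8 × (4096 + 4096) = 65,536 trainable values instead of 16,777,216, roughly 0.4%.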

Multi-task learning (MTL). MTL is a machine learning approach in which a model is trained on multiple tasks simultaneously. Rather than developing a separate model for each task, MTL allows a single model to learn and generalize across tasks. Because many tasks share similar language patterns and structures, the model can pick up on these overlaps, which can improve its generalization and make it more flexible.
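In instruction tuning, one common way to set this up is to mix examples from several task datasets into a single training stream, so one shared model sees every task. The Python sketch below assumes proportional sampling (larger datasets are drawn more often) and uses made-up task names and examples; it only builds the mixed stream, and the training step itself is omitted.

```python
import random

def mix_tasks(task_datasets, num_examples, seed=0):
    """Build one training stream from several tasks.

    Tasks are sampled in proportion to their dataset size, and each example
    keeps its own instruction, so a single shared model can learn all of
    the tasks at once.
    """
    rng = random.Random(seed)
    names = list(task_datasets)
    weights = [len(task_datasets[name]) for name in names]
    stream = []
    for _ in range(num_examples):
        task = rng.choices(names, weights=weights, k=1)[0]
        instruction, response = rng.choice(task_datasets[task])
        stream.append({"task": task, "instruction": instruction, "response": response})
    return stream

# Hypothetical task datasets of (instruction, response) pairs.
tasks = {
    "summarize": [("Summarize: The meeting moved from Monday to Friday.", "Meeting moved to Friday.")],
    "translate": [("Translate to French: Thank you.", "Merci."),
                  ("Translate to French: Good night.", "Bonne nuit.")],
    "classify": [("Is this review positive or negative? 'Great battery life.'", "Positive")],
}

for example in mix_tasks(tasks, 5):
    print(example["task"], "|", example["instruction"])
```

Proportional sampling is only one choice; mixtures are often reweighted so that very large datasets do not crowd out smaller tasks.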

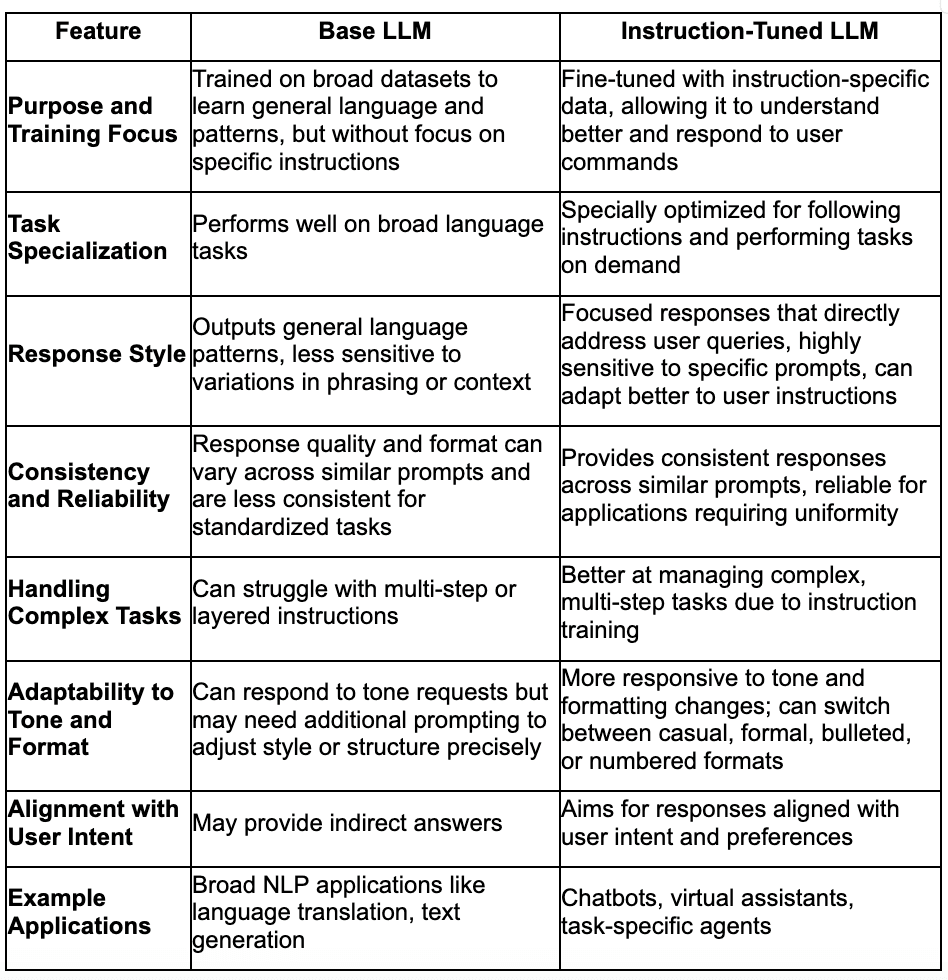

Key differences between base LLM and instruction-tuned LLM

Both base LLMs and instruction-tuned LLMs can often complete the same kinds of tasks. Still, instruction-tuned models are more consistent, reliable, and aligned to specific tasks due to their training on instruction-following data.

Base LLMs can respond to a wide range of prompts, but they may interpret instructions loosely or provide generalized answers because they haven't explicitly been trained to follow detailed commands.

Instruction-tuned LLMs have been fine-tuned on instruction-specific data, which helps them better understand and act on directive prompts that often start with verbs like "List," "Summarize," "Explain," or "Write." This extra layer of training reduces ambiguity and makes them more precise in meeting the requested format, tone, or depth.

While a base LLM can technically generate similar content or answer the same kinds of questions, instruction-tuned LLMs offer a level of polish that makes them better suited to roles requiring a high degree of customization. Simply put, instruction-tuned models perform more reliably in specific scenarios, which in real applications can improve user satisfaction and efficiency and reduce error rates.