BigCode project: Code-generating LLMs boosted by Toloka's crowd

Why BigCode is a big deal

Toloka recently labeled training data to support BigCode, an open scientific collaboration jointly led by Hugging Face and ServiceNow.

BigCode's mission is the responsible development and use of large language models for code, which aligns closely with Toloka's values. We believe that a high-tech approach to data labeling requires a combination of science and high-quality human input obtained in a responsible and scalable way. That's why the BigCode project was such a natural fit for Toloka, and why the collaboration benefited all the teams involved.

The PII challenge

Code LLMs are making developers more productive by taking over the mundane and error-prone parts of programming. However, current systems face regulatory challenges under privacy laws such as the GDPR, so the BigCode project turned to Toloka for help removing sensitive information from its dataset, The Stack. That meant annotating data such as usernames, passwords, and security tokens. With 6.4 TB of code in multiple programming languages in the dataset, this was not a trivial task.

The curators set out to prepare a training dataset for a PII model that would automatically detect and mask personal data in The Stack. They identified 14 categories of sensitive data that needed to be accurately recognized. The remaining challenge was to find enough experts to label the training data.

Crowd magic: 4349 hours in 4 days

Toloka teamed up with BigCode to take on the dataset preparation. The traditional approach would be to hire a team of programmers who could read the source code and accurately label all 14 categories of data. Given the size of the labeling set (12,000 code chunks), that would have required either a large team of programmers or many months of effort.

Fortunately, Toloka is anything but traditional. Our team got the data labeled in just 4 days through the combined efforts of 1399 Tolokers (some of them non-programmers) from 35 different countries. That added up to 4349 person-hours, more than two years of full-time work for a single programmer at roughly 2,000 working hours per year.
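To put these figures in perspective, here is the back-of-the-envelope arithmetic. How they translate into full-time work depends on what you count as a working year; this sketch assumes about 2,000 hours (40 hours a week for 50 weeks), and the variable names are ours.

```python
# Figures quoted in the text
person_hours = 4349
tolokers = 1399
days = 4

# Assumption: a full-time working year is about 2,000 hours (40 h/week x 50 weeks)
FULL_TIME_HOURS_PER_YEAR = 2000

avg_hours_per_toloker = person_hours / tolokers             # ~3.1 hours per person
person_hours_per_day = person_hours / days                  # ~1,087 person-hours per calendar day
full_time_years = person_hours / FULL_TIME_HOURS_PER_YEAR   # ~2.2 full-time years

print(f"{avg_hours_per_toloker:.1f} h per Toloker")
print(f"{person_hours_per_day:.0f} person-hours per day")
print(f"{full_time_years:.1f} full-time years")
```

The crowd's advantage is parallelism: each person contributed only about three hours, but over a thousand people worked at once.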

Cracking the magic trick

The secret to making the task doable was to break it down into smaller steps, a process that we call decomposition. Let's dissect the project to see how we pulled it off.

Labels and skills

The goal was to find and label 14 categories of personal data: 7 main categories (names, email addresses, passwords, usernames, SSH and API keys, cloud IDs, and IP addresses), 6 subcategories, and an “ambiguous” category. While names and email addresses are easy to understand, some of the categories are difficult for non-experts to recognize when looking at programming code.

We used a quiz to teach Tolokers about all the categories. The quiz gave detailed instructions for each category, followed by a test. After the quiz, each Toloker was assigned a skill level for each category and granted access to tasks based on those skills. For example, someone who did well at labeling names but poorly at IP addresses was only given tasks for labeling names.
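The per-category gating works roughly like this. Here is a minimal Python sketch, assuming quiz scores are normalized to the range 0 to 1 and a single pass mark applies to every category; the `Toloker` class, the `allowed_categories` function, and the 0.8 threshold are hypothetical illustrations, not Toloka's API.

```python
from dataclasses import dataclass, field

# Hypothetical pass mark; the threshold actually used in the project isn't stated.
PASS_THRESHOLD = 0.8

@dataclass
class Toloker:
    name: str
    # Per-category quiz scores in [0, 1], e.g. {"names": 0.95, "ip_addresses": 0.4}
    quiz_scores: dict[str, float] = field(default_factory=dict)

def allowed_categories(worker: Toloker, threshold: float = PASS_THRESHOLD) -> set[str]:
    """Return the categories whose labeling tasks this worker may access."""
    return {cat for cat, score in worker.quiz_scores.items() if score >= threshold}

worker = Toloker("alice", {"names": 0.95, "ip_addresses": 0.4, "emails": 0.85})
print(sorted(allowed_categories(worker)))  # ['emails', 'names']
```

Keeping a separate score per category, rather than one overall score, is what lets a person contribute in the categories they understand even if they struggle with others.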

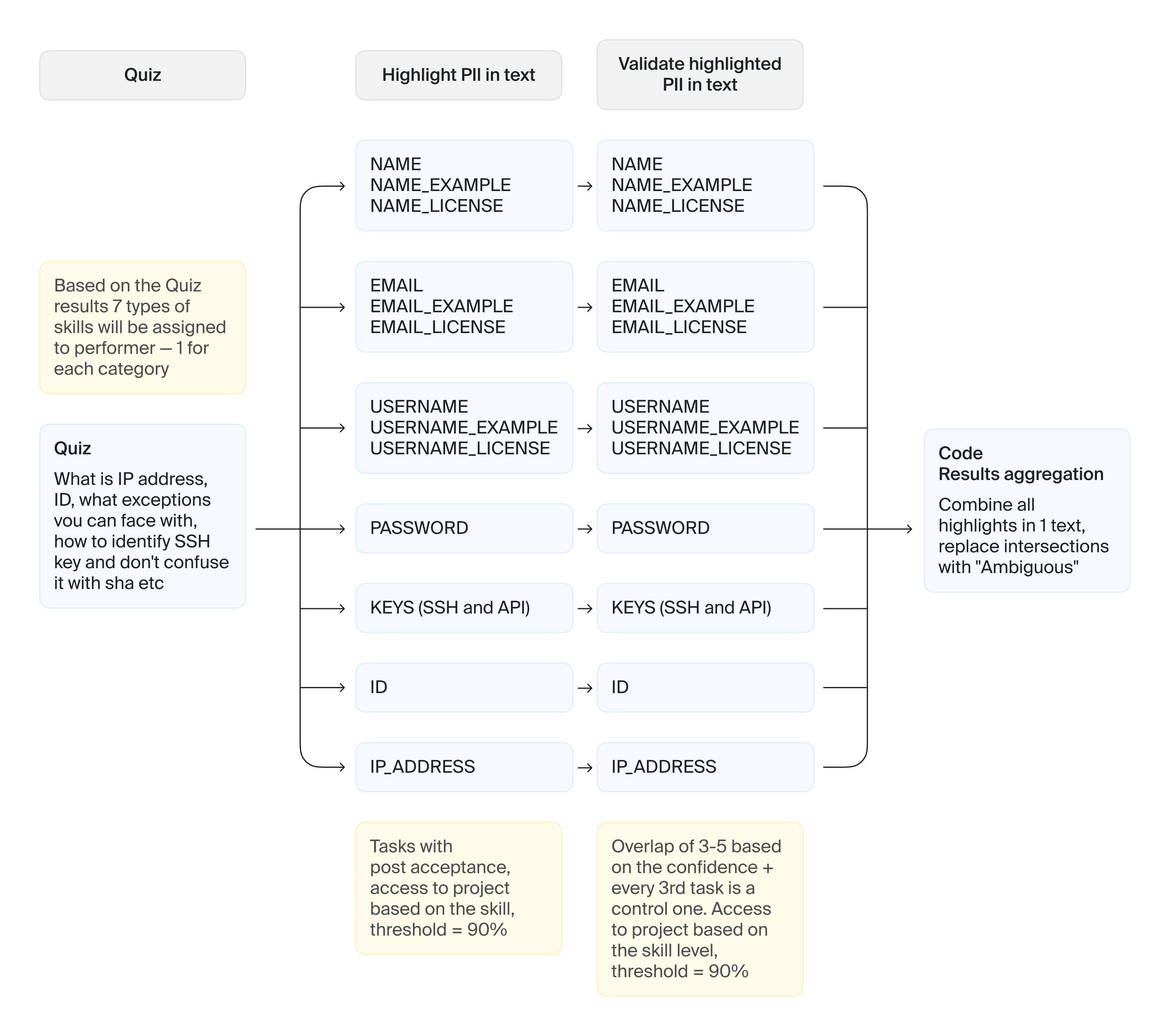

Project decomposition before we set it up in Toloka

The interface

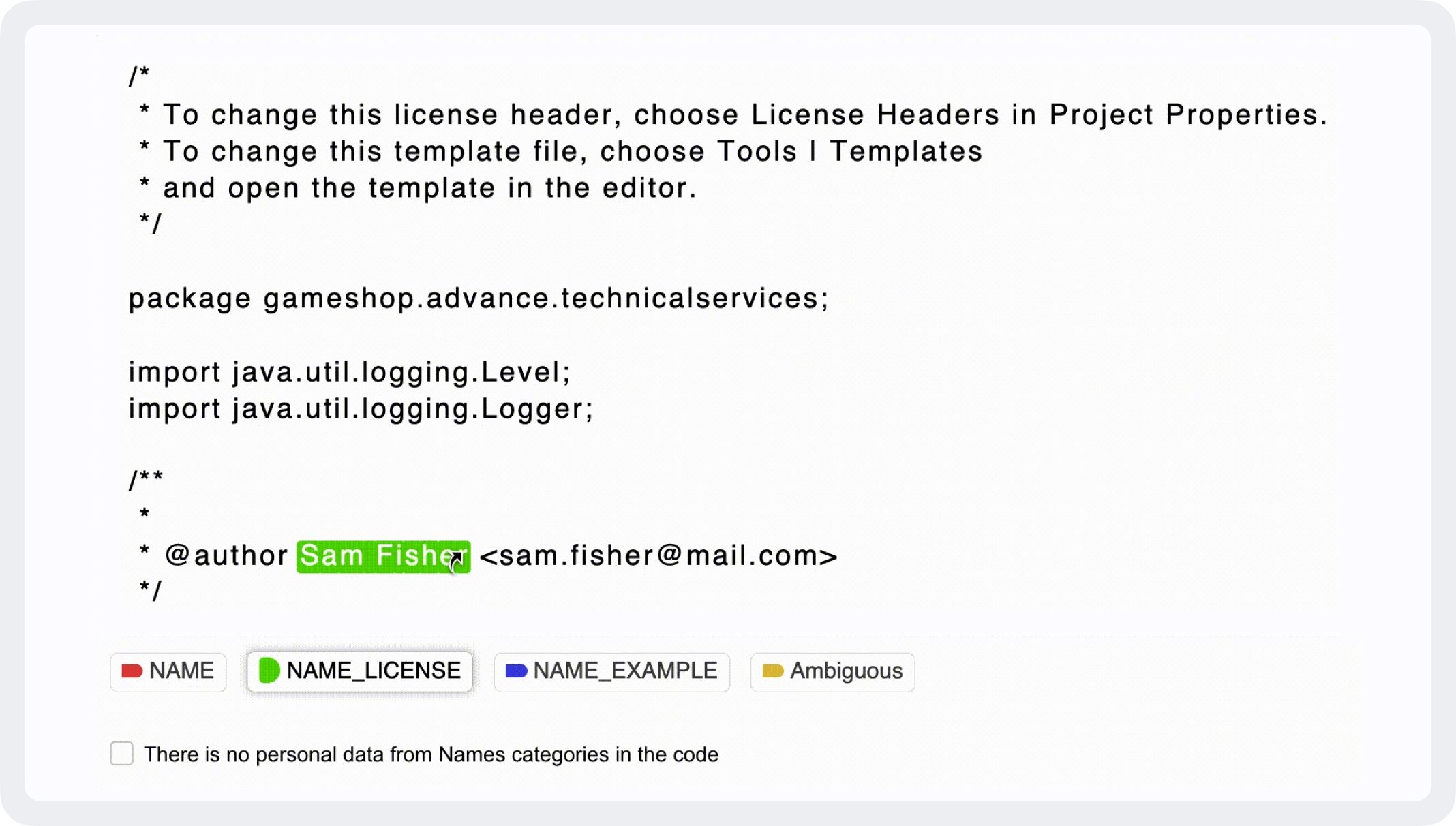

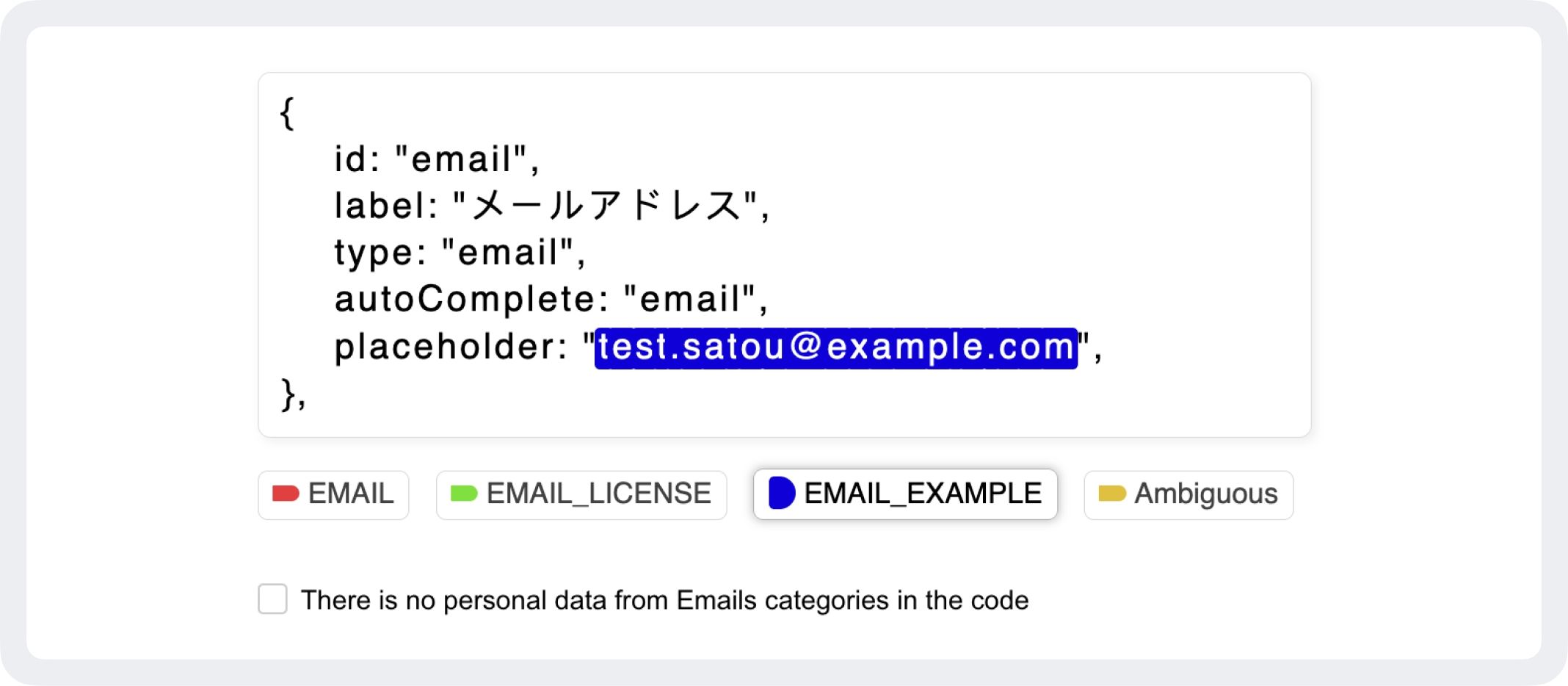

The 12,000 code samples were divided into chunks of 50 lines each. We decomposed the labeling into separate projects by category, which meant that each chunk was labeled in 7 different projects — once for each of the main categories. In any given task, the Toloker was asked to look for just one category of personal data at a time. This meant that more people could successfully contribute to the project with less expertise needed.
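The chunking and per-category fan-out can be sketched in a few lines of Python. This assumes samples are split on line boundaries (so the last chunk of a file may be shorter than 50 lines) and uses illustrative identifiers for the 7 main categories; `chunk_lines`, `make_tasks`, and the task dictionary layout are hypothetical.

```python
from itertools import product

CHUNK_LINES = 50  # chunk size stated in the text

# Illustrative identifiers for the 7 main categories (names are ours)
MAIN_CATEGORIES = [
    "names", "emails", "passwords", "usernames",
    "keys", "cloud_ids", "ip_addresses",
]

def chunk_lines(source: str, size: int = CHUNK_LINES) -> list[str]:
    """Split a code sample into consecutive chunks of at most `size` lines."""
    lines = source.splitlines()
    return ["\n".join(lines[i:i + size]) for i in range(0, len(lines), size)]

def make_tasks(samples: list[str]) -> list[dict]:
    """Create one task per (chunk, main category) pair."""
    chunks = [c for s in samples for c in chunk_lines(s)]
    return [
        {"chunk_id": i, "category": cat, "text": chunk}
        for (i, chunk), cat in product(enumerate(chunks), MAIN_CATEGORIES)
    ]

sample = "\n".join(f"line {n}" for n in range(120))  # 120 lines -> 3 chunks
tasks = make_tasks([sample])
print(len(tasks))  # 21 (3 chunks x 7 categories)
```

Each task carries a single category, so a labeler only ever has to keep one kind of personal data in mind.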

We designed a custom JavaScript interface to display code formatting correctly. Tolokers used color highlighting to select the personal data they found for each category. Each task had at most 4 subcategories to choose from, which made it easy to tell them apart with contrasting colors. This visual simplicity sped up labeling (imagine trying to label all 14 categories with different colors at once). The screenshots above show what it looked like.

The steps

The project was broken down into 4 steps:

Quiz. We showed Tolokers explanations and examples of how to find each category of personal data and how to handle exceptions. The quiz tested their skills in each category separately.

Labeling in 7 separate projects. Each code chunk was labeled separately for each main category.

Validation in 7 separate projects. Each labeled chunk was shown to other Tolokers, who decided whether the labels were correct. Validation was repeated 3 to 5 times, depending on the confidence and consistency of the answers.
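One way to implement "repeat 3 to 5 times depending on confidence" is to keep collecting votes until they agree strongly enough or the cap is reached. The exact stopping rule used in the project isn't described, so treat this as a sketch: the 80% agreement threshold and the `validate` function are assumptions, and a list of booleans stands in for answers arriving from validators.

```python
from collections import Counter
from typing import Iterable

MIN_VOTES, MAX_VOTES = 3, 5  # overlap range stated in the text
AGREEMENT = 0.8              # hypothetical: stop early once >= 80% of votes agree

def validate(votes: Iterable[bool]) -> tuple[bool, int]:
    """Consume validator votes (True = label is correct) until confident or capped.

    Returns the majority verdict and the number of votes used.
    """
    counts = Counter()
    for n, vote in enumerate(votes, start=1):
        counts[vote] += 1
        if n < MIN_VOTES:
            continue  # always collect at least MIN_VOTES answers
        verdict, top = counts.most_common(1)[0]
        if top / n >= AGREEMENT or n == MAX_VOTES:
            return verdict, n
    raise ValueError("ran out of votes before reaching a decision")

print(validate([True, True, True]))                # (True, 3): unanimous, stops early
print(validate([True, False, True, True, False]))  # (True, 5): split, goes to the cap
```

Stopping early on clear-cut chunks saves budget, which can then go to the contested chunks that need more opinions.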

Automatic aggregation. All of the labels were combined for each chunk. Intersecting labels were marked as “Ambiguous”.
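Aggregation can be illustrated with labels stored as character spans. This sketch assumes each label is a `(start, end, category)` triple with an exclusive end offset, and interprets "intersecting labels" as spans from different categories that overlap; the `Span` type and the `aggregate` function are hypothetical.

```python
from typing import NamedTuple

class Span(NamedTuple):
    start: int     # character offset in the chunk (inclusive)
    end: int       # character offset in the chunk (exclusive)
    category: str

def overlaps(a: Span, b: Span) -> bool:
    """True if the two half-open ranges share at least one character."""
    return a.start < b.end and b.start < a.end

def aggregate(labels_by_category: dict[str, list[Span]]) -> list[Span]:
    """Combine spans from every category project for one chunk.

    A span that intersects a span of a different category is relabeled
    "AMBIGUOUS"; identical ambiguous spans are then deduplicated.
    """
    spans = [s for group in labels_by_category.values() for s in group]
    merged = set()
    for s in spans:
        clash = any(o.category != s.category and overlaps(s, o) for o in spans)
        merged.add(s._replace(category="AMBIGUOUS") if clash else s)
    return sorted(merged)

labels = {
    "names": [Span(10, 15, "names"), Span(40, 52, "names")],
    "usernames": [Span(10, 15, "usernames")],
    "emails": [Span(60, 80, "emails")],
}
print(aggregate(labels))
# [Span(start=10, end=15, category='AMBIGUOUS'), Span(start=40, end=52, category='names'), Span(start=60, end=80, category='emails')]
```

Partially overlapping spans stay separate here; merging them into a single ambiguous region would be an equally reasonable design choice.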

Taking care of the crowd

The BigCode team and Toloka share a commitment to responsible data collection and fair treatment of crowd workers.

A main concern for the BigCode project was to pay Tolokers more than the minimum wage. Together, we analyzed minimum wage rates and purchasing power in different countries and set an hourly pay rate of $7.30. The project was open to Tolokers in countries where this pay rate would have at least the same purchasing power as the highest minimum wage in the US ($16.50 per hour).
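The eligibility rule boils down to one comparison. In this sketch, the local price level relative to the US (US = 1.0) is an assumed input, for example a PPP conversion factor divided by the market exchange rate. The country names and price levels are made up for illustration, not real statistics. With these inputs, a country qualifies when its price level is at most 7.30 / 16.50, or about 0.44.

```python
HOURLY_RATE_USD = 7.30
US_BENCHMARK_USD = 16.50  # highest US minimum wage, per the text

def us_equivalent(rate_usd: float, price_level_vs_us: float) -> float:
    """Value of `rate_usd` in US purchasing-power terms.

    price_level_vs_us: local price level relative to the US (US = 1.0).
    """
    return rate_usd / price_level_vs_us

def eligible(price_level_vs_us: float) -> bool:
    """True if the hourly rate buys at least as much as the US benchmark wage."""
    return us_equivalent(HOURLY_RATE_USD, price_level_vs_us) >= US_BENCHMARK_USD

# Hypothetical price levels, for illustration only
for country, level in {"Country A": 0.35, "Country B": 0.60}.items():
    print(country, round(us_equivalent(HOURLY_RATE_USD, level), 2), eligible(level))
```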

We wanted to reward Tolokers for their performance, so we started with a fair market price per task and then awarded bonuses to bring earnings up to the hourly rate. When the project finished under budget, we distributed all of the remaining funds as final bonuses.
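The bonus scheme can be expressed as a top-up to the hourly rate plus a final split of any unspent budget. The text doesn't say how the leftover funds were divided, so this sketch assumes a split proportional to hours worked; the function names and the example earnings are hypothetical.

```python
HOURLY_RATE_USD = 7.30

def top_up_bonus(task_earnings: float, hours_worked: float,
                 rate: float = HOURLY_RATE_USD) -> float:
    """Bonus that lifts a Toloker's effective pay to `rate` per hour (never negative)."""
    return max(0.0, rate * hours_worked - task_earnings)

def distribute_leftover(budget_left: float, hours: dict[str, float]) -> dict[str, float]:
    """Split unspent budget in proportion to hours worked (assumed rule)."""
    total = sum(hours.values())
    if total == 0:
        return {who: 0.0 for who in hours}
    return {who: budget_left * h / total for who, h in hours.items()}

# Hypothetical figures for two Tolokers
earnings = {"a": 15.00, "b": 30.00}
hours = {"a": 3.0, "b": 3.5}
bonuses = {w: round(top_up_bonus(earnings[w], hours[w]), 2) for w in hours}
print(bonuses)                           # {'a': 6.9, 'b': 0.0}
print(distribute_leftover(13.0, hours))  # {'a': 6.0, 'b': 7.0}
```

Because `top_up_bonus` is clamped at zero, a Toloker who already earned more than the hourly rate from task prices keeps those earnings and simply receives no top-up.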

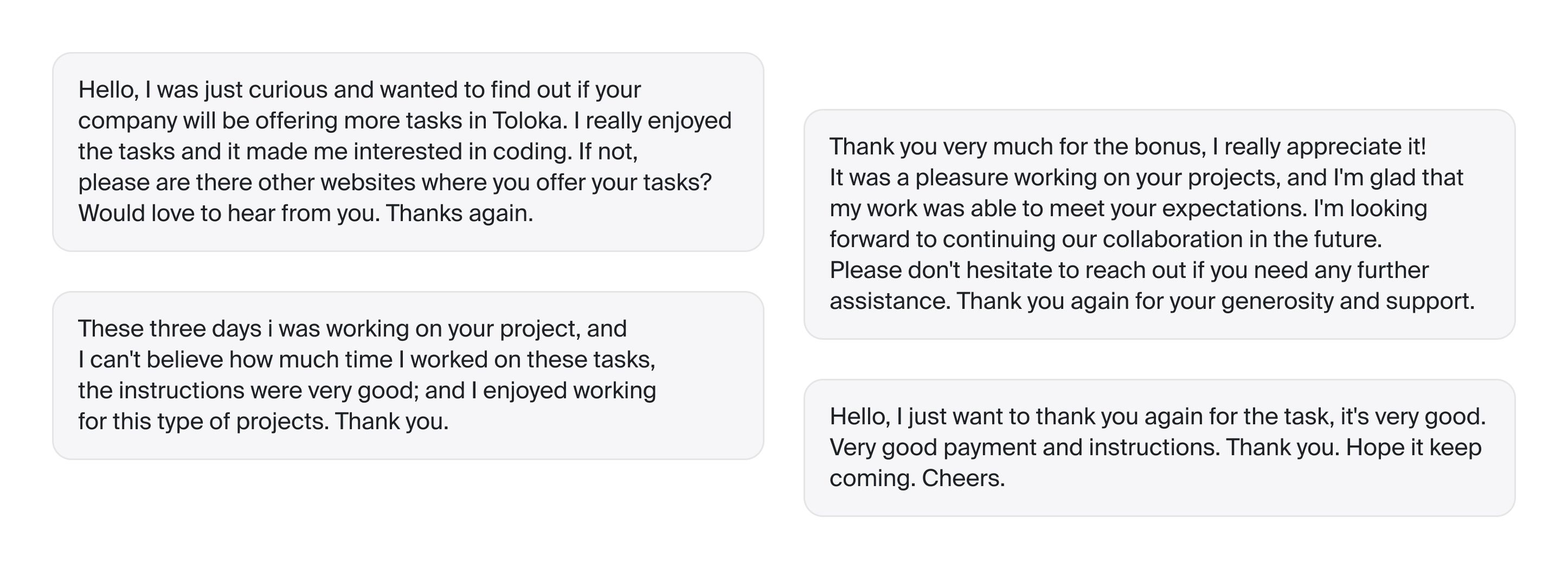

The project was a big hit with Tolokers. Many people welcomed the opportunity to work with real programming code and learn more about coding in the process. We received waves of positive feedback from people asking for more tasks.

Some feedback received from Tolokers

The ethics of BigCode

We did have concerns about the ethics of sharing personal data with the crowd. BigCode took pains to make sure that all the code samples came from permissively licensed open-source code that was already publicly available, and we made sure that Tolokers understood the dataset would be used to train a model to handle this task automatically.

As a company, Toloka supports BigCode's goals of transparency, openness, and responsible AI. Open datasets are only part of the equation: it's also essential that they are collected in a fair and transparent way, which is Toloka's number one priority.