Toloka's Quality-to-Price Ratio is Hard to Beat: A Case Study from Japan

When a Japanese startup approached Toloka's partner Roman Kucev with 34,000 images from various TV shows and the seemingly daunting task of labeling the human faces in every one of them, they asked for three things: that it be done well, done fast, and done cheap. To the clients' delight, the task was completed three weeks later at a fraction of the expected cost.

Why crowdsourcing?

Roman admits that even three years ago this task would have been tackled differently, without crowdsourcing, and it would have cost the clients 2.5 times as much. A former employee of Prisma, Roman explains that alternatives such as the Computer Vision Annotation Tool (CVAT), though open-source and free, require a dedicated team of trained developers to run. Such teams often aren't available, and their services are expensive.

Crowdsourcing has been a game changer: it lets companies recruit talent broadly without overpaying. Instead of having a small team of highly qualified and often expensive specialists do all of the work, crowdsourcing draws on a far larger pool of non-expert contributors, each one doing a relatively small share.

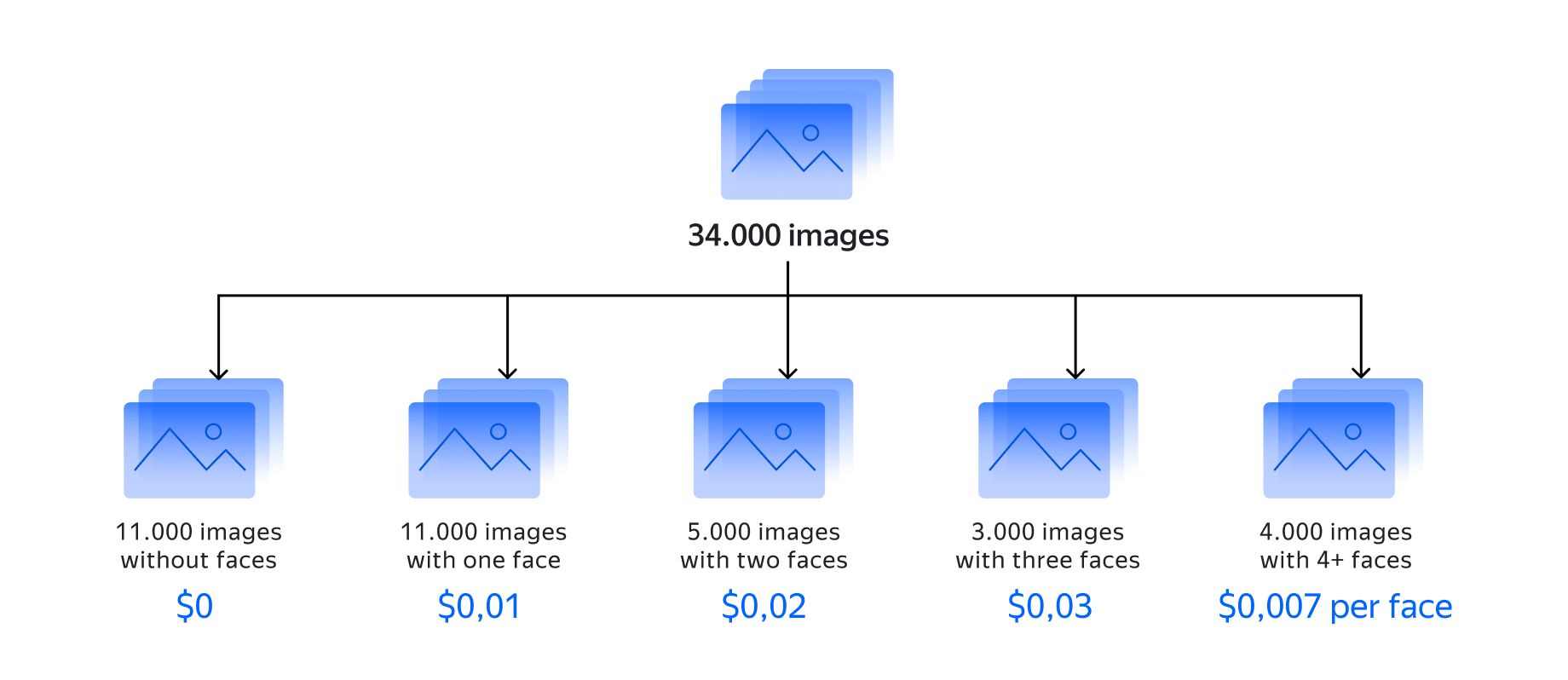

Prices

The task at hand and its challenges

Since none of the content provided by the startup contained any personal data, crowdsourcing was a no-brainer. It was the only cost-effective way to go about the task of labeling tens of thousands of faces without having to hire software-specific experts. Be that as it may, the task still wasn't without its challenges.

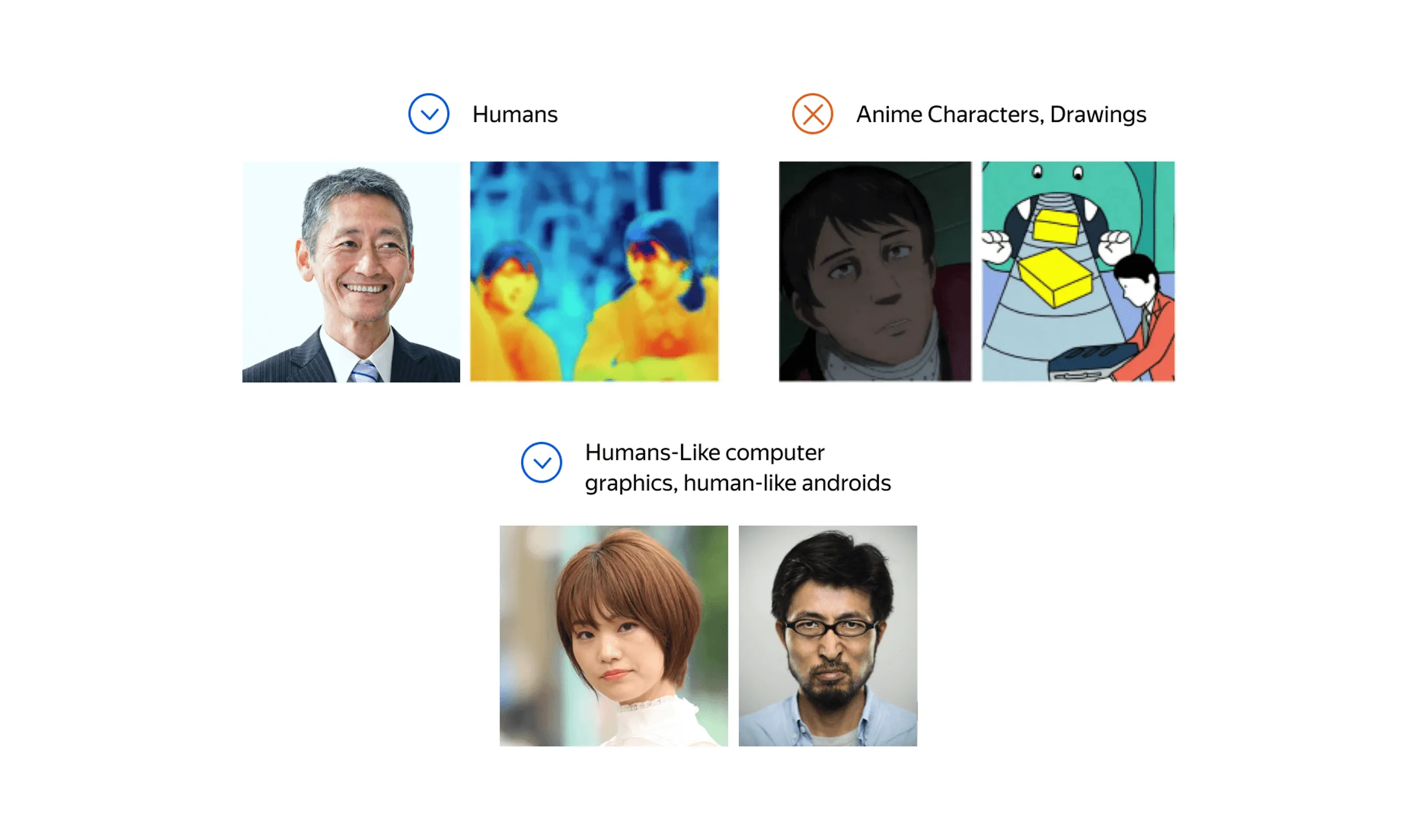

First, there was some disagreement as to what should be considered a human face. This may sound absurd at first, but the images, taken from a multitude of Japanese programs, showed not only men and women but also anime characters, various drawings, human-like computer-generated imagery, and humanoid androids. Eventually, it was decided that all but the animated characters and drawings were to be treated as human faces.
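The inclusion rule agreed on above can be written down as a small lookup. This is only an illustrative sketch: the `FaceKind` categories and the `should_label` helper are hypothetical names chosen here to mirror the face types listed in the article, not part of the actual project or of any Toloka API.

```python
from enum import Enum


class FaceKind(Enum):
    """Face types mentioned in the article (names are hypothetical)."""
    PERSON = "person"
    ANIME_CHARACTER = "anime_character"
    DRAWING = "drawing"
    CGI_HUMAN = "cgi_human"
    ANDROID = "android"


# Per the agreed rule, only animated characters and drawings are excluded.
EXCLUDED = {FaceKind.ANIME_CHARACTER, FaceKind.DRAWING}


def should_label(kind: FaceKind) -> bool:
    """Return True if this kind of face counts as a human face to label."""
    return kind not in EXCLUDED


for kind in FaceKind:
    print(f"{kind.value}: {'label' if should_label(kind) else 'skip'}")
```

Writing the rule as an explicit exclusion set keeps the edge cases (CGI humans, androids) visible, which is where the original disagreement arose.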

Face types

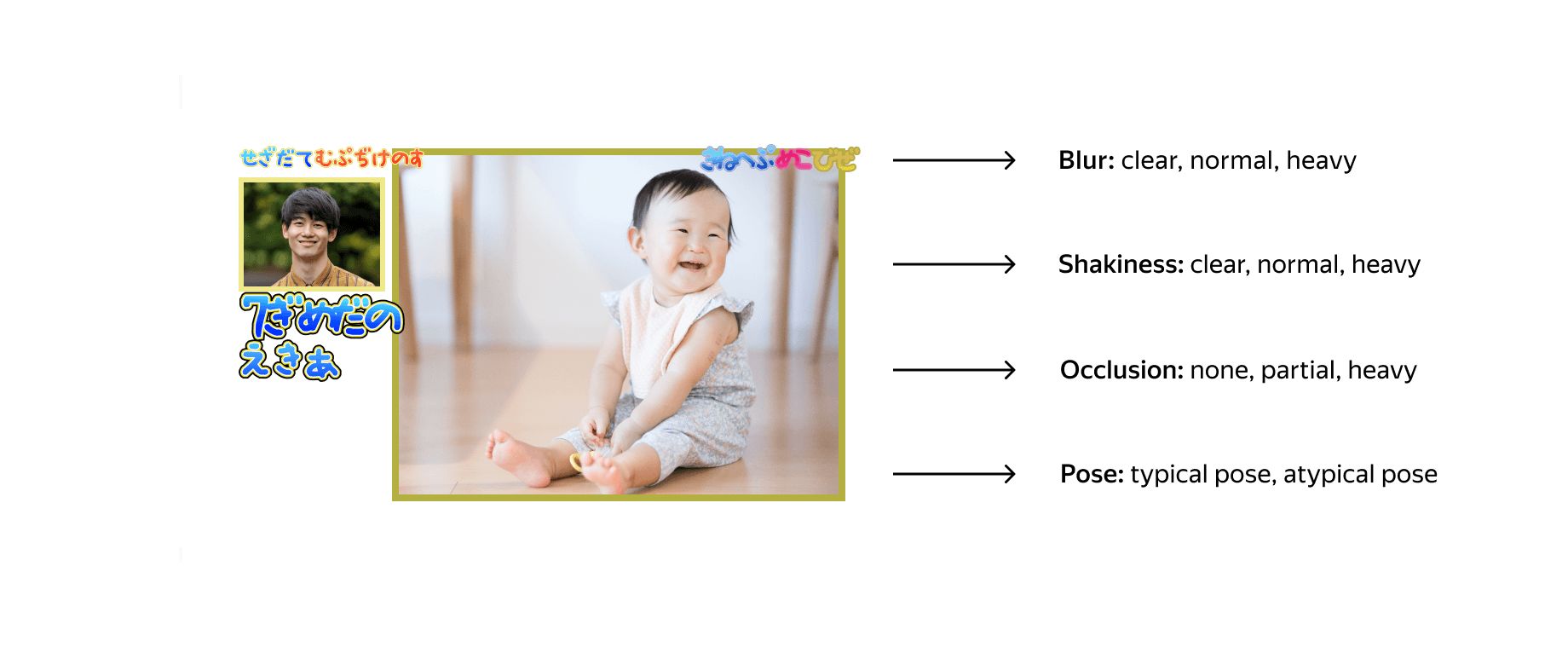

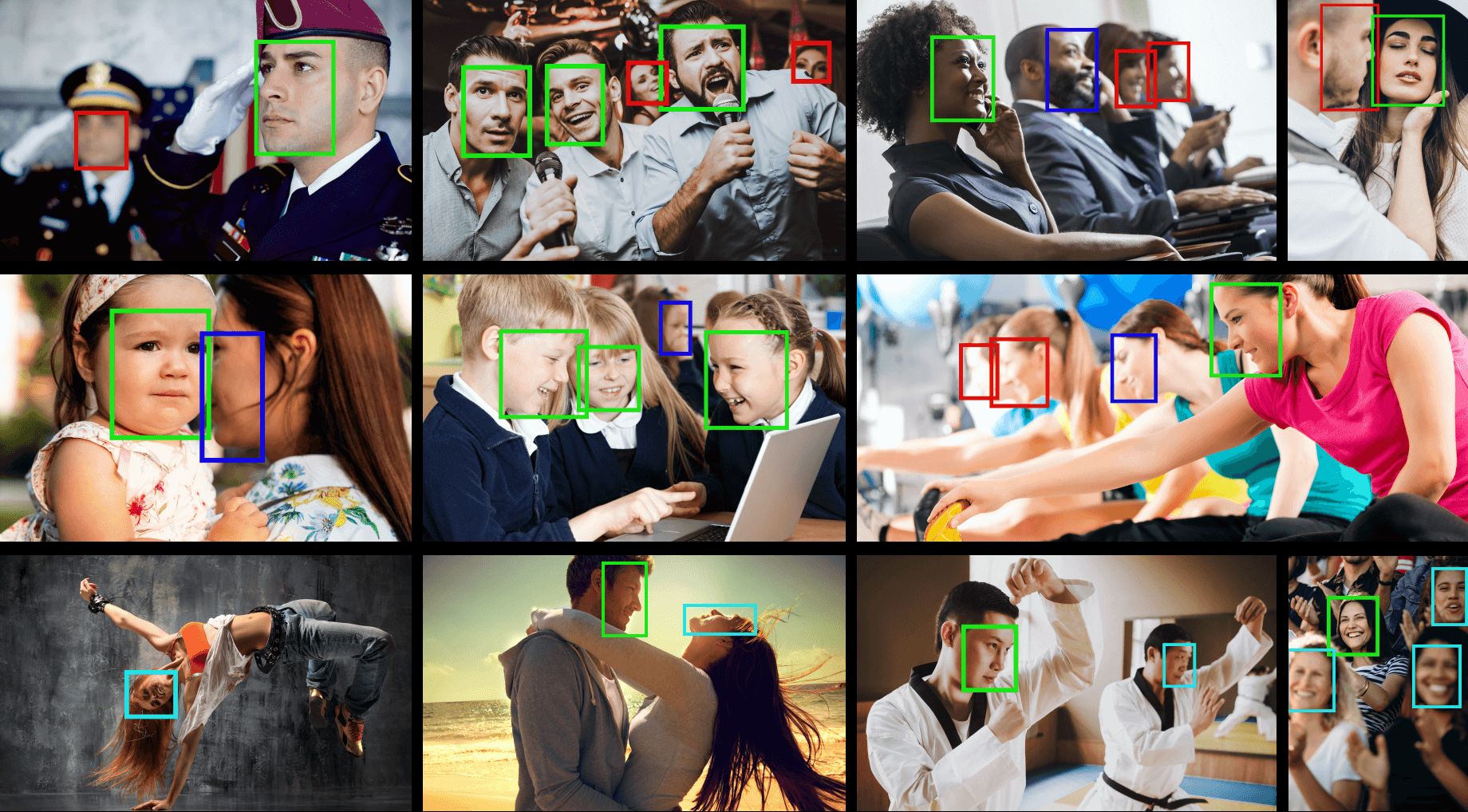

The next challenge was distinguishing between different levels of blur and shakiness, different degrees of occlusion, and different poses. Follow-up instructions that explained these parameters to Tolokers were key to accurate labeling.

Image parameters

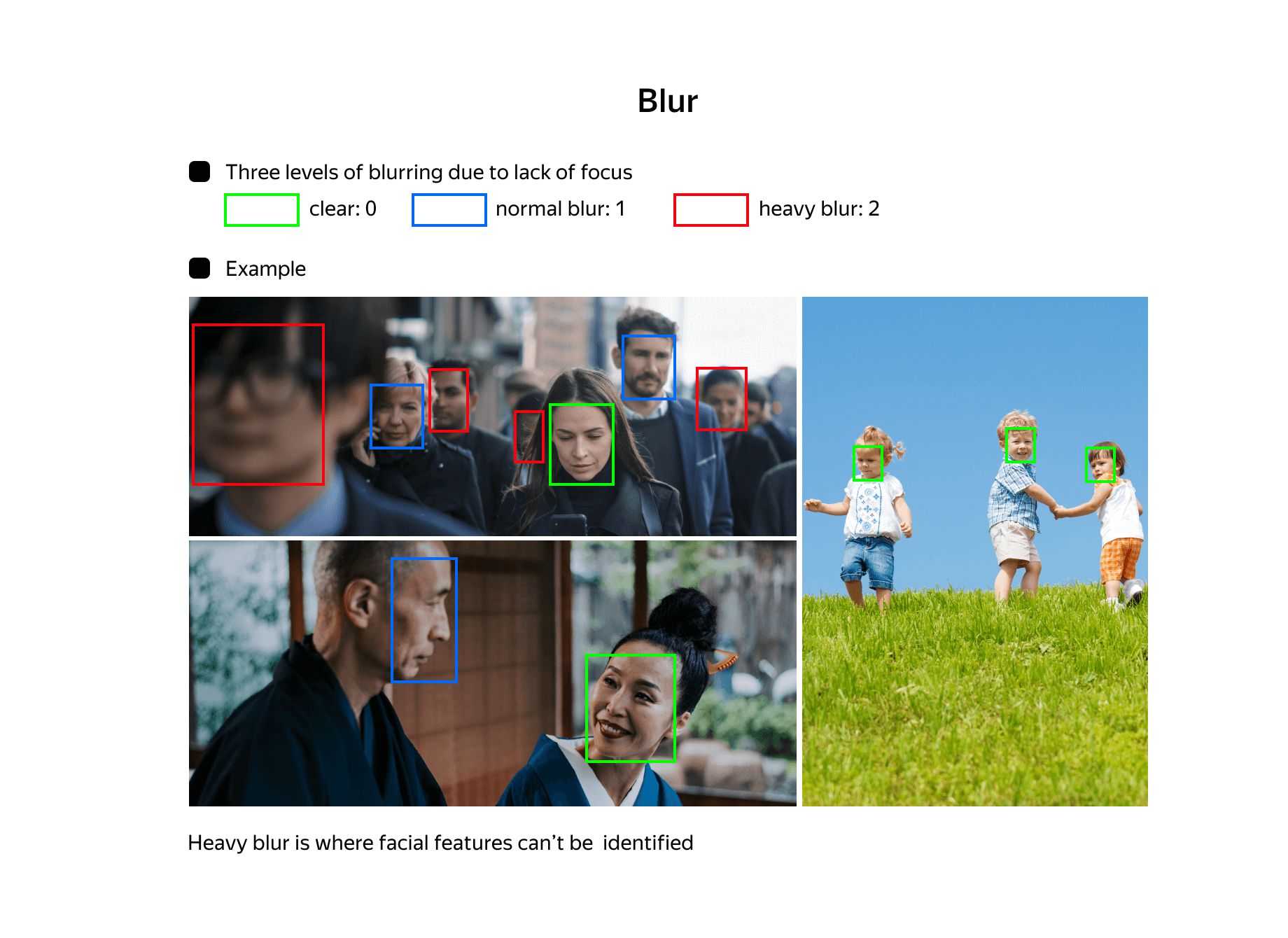

Three colors were used (green, blue, and red), each one indicating a different rate of visibility.

Rate of visibility

Solution

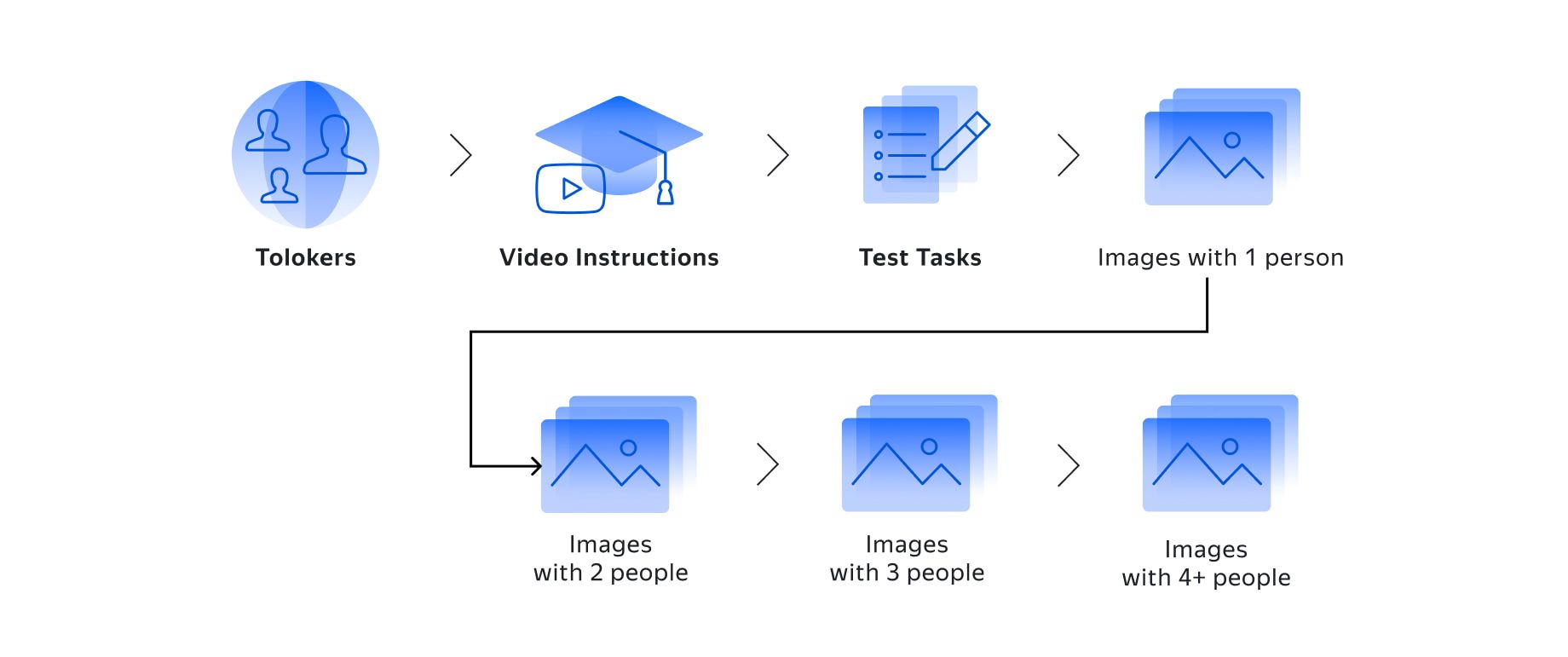

An image could contain anywhere from zero to fifty faces. As a result, it was important to set different pay rates for images of varying complexity and to train all of the contributors on the task. It was also necessary to assign a handful of moderators for quality control. The task was eventually solved in three stages:

Introduction. Before starting on the task, every interested Toloker watched video instructions and then labeled 3-4 images as a test. If they did a good job, they moved on to the actual task.

Learning and labeling. Each stage of the task required a higher level of labeling skills: the Tolokers started out with images that contained only one face and gradually moved all the way up to 4+ faces. With this smooth learning curve, the Tolokers were more likely to deliver high quality on the more complex images. Each image took them around 7-8 minutes to label.

Quality control. A moderator, who was a more experienced user, subsequently checked whether each image was labeled correctly, which took an additional 10-15 seconds per image. Each moderator oversaw a team of 30-40 Tolokers on average.
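To get a feel for the scale of the work, the per-image timings quoted above can be multiplied out. This is a rough back-of-the-envelope sketch, assuming every one of the 34,000 images was labeled once and reviewed once; the article does not state this, and simpler images may well have taken less time.

```python
# Figures quoted in the article
IMAGES = 34_000
LABEL_MINUTES = (7, 8)     # labeling time per image, low and high
REVIEW_SECONDS = (10, 15)  # moderator check per image, low and high

# Assumption: each image is labeled once and reviewed once.
label_hours = [IMAGES * m / 60 for m in LABEL_MINUTES]
review_hours = [IMAGES * s / 3600 for s in REVIEW_SECONDS]

print(f"labeling: {label_hours[0]:,.0f} to {label_hours[1]:,.0f} hours")
print(f"review:   {review_hours[0]:,.0f} to {review_hours[1]:,.0f} hours")
```

Under that assumption, labeling comes to roughly 4,000 to 4,500 person-hours, while moderation adds only about 94 to 142 hours, a few percent on top.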

Results

65,000 faces were labeled over a period of 3 weeks at a cost of approximately $0.015 per face. The cost is estimated to be about 2.5 times lower than that of non-crowdsourcing solutions currently available on the market, while the quality never fell below the market average.
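The headline numbers can be checked with simple arithmetic. The sketch below uses only figures from the article (65,000 faces, 34,000 images, about $0.015 per face, and the 2.5x multiplier cited earlier for a non-crowdsourced approach); the derived totals are estimates implied by those figures, not amounts reported by the client.

```python
from decimal import Decimal

# Figures quoted in the article
FACES = 65_000
IMAGES = 34_000
COST_PER_FACE = Decimal("0.015")         # USD, approximate
ALTERNATIVE_MULTIPLIER = Decimal("2.5")  # non-crowdsourced cost vs. crowdsourced

total_cost = FACES * COST_PER_FACE                     # implied total spend
cost_per_image = total_cost / IMAGES                   # implied average per image
alternative_cost = total_cost * ALTERNATIVE_MULTIPLIER
saving_fraction = 1 - 1 / ALTERNATIVE_MULTIPLIER       # share of the alternative saved

print(f"total cost:       ${total_cost:.2f}")
print(f"cost per image:   ${cost_per_image:.3f}")
print(f"alternative cost: ${alternative_cost:.2f}")
print(f"saving:           {saving_fraction:.0%}")
```

In other words, a cost 2.5 times lower corresponds to a saving of 60% relative to the alternative, with an implied total of about $975 for the whole dataset.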
