DeepHD Technology: Improving Video Quality with Computer Vision

When people search the internet for an image or video, they often narrow their query with a phrase about "high quality." Quality usually means resolution — users want a large image that looks sharp on a modern computer, smartphone, or TV display. But what if the source simply doesn't exist in good quality?

The development team in our Computer Vision and ML Applications department tackled this problem and created a technology called DeepHD. In short, Yandex has learned to increase the resolution of videos in real time using deep learning. Below, we share how the team developed this technology and how Toloka users helped along the way.

There's a lot of low-resolution video content on the internet, from movies made decades ago to TV programs. When users stretch a video like this to fit their screen, the image becomes pixelated. The ideal solution for old movies would be to find the original film, scan it with modern equipment, and restore it manually, but this isn't always possible. Streamed content is even trickier, since it has to be processed in real time. Given this constraint, computer vision proved to be the best way to increase resolution and clean up artifacts.

In the machine learning industry, the task of enlarging images and videos without losing quality is termed super-resolution. Many articles have already been written on this topic, but real-life application turned out to be much more complex and interesting than expected. Here are some of the challenges our team faced when developing the DeepHD technology:

Super-resolution methods can restore and fill in details that are missing from the original video because of its low resolution and quality. But there's also a downside: they sharpen compression artifacts just as readily as the image itself.

Collecting training samples is problematic. Training requires a large set of video pairs, each containing the same footage in both high and low resolution and quality. In real scenarios, low-quality content usually has no high-quality counterpart.

The solution has to work in real time.

In recent years, the use of neural networks has led to significant advances in almost all computer vision tasks, and the problem of super-resolution is no exception. Our development team found the most promising solutions to be based on GANs (Generative Adversarial Networks), which can produce high-definition photorealistic images and fill in missing details like hair and eyelashes on images of people.

Filling in missing details

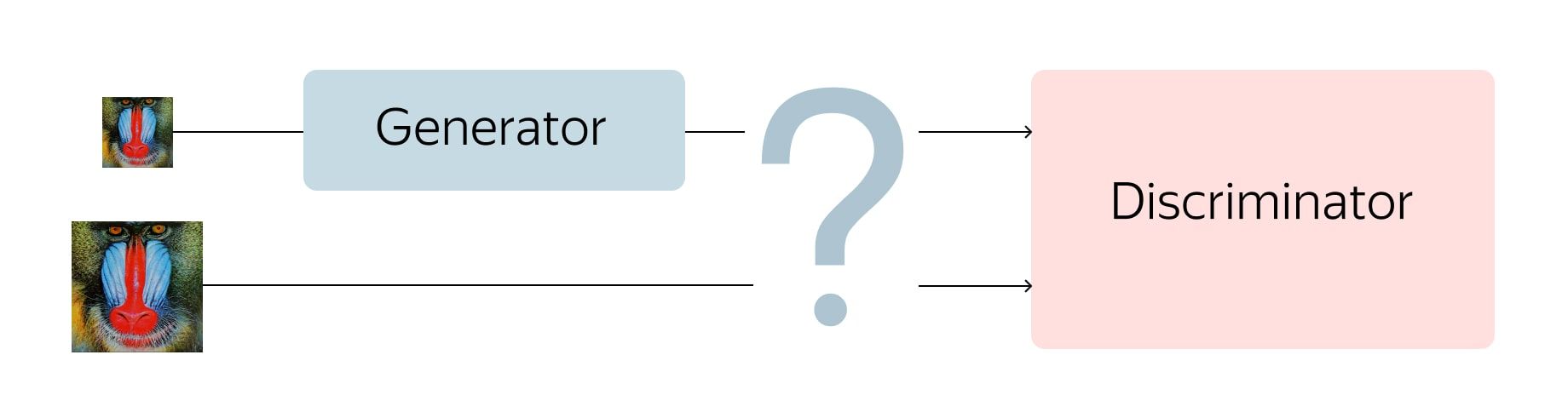

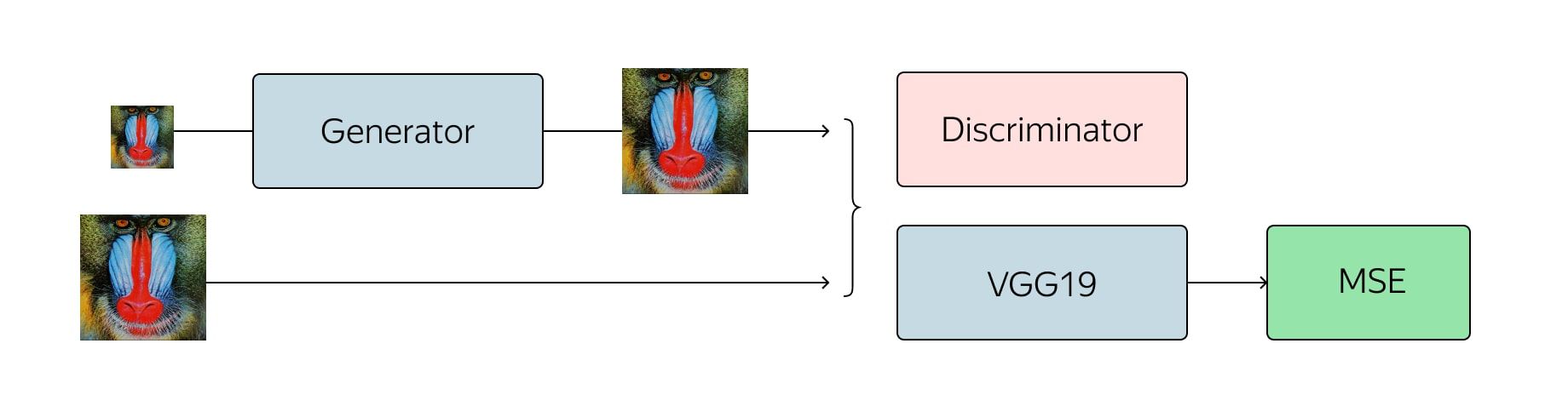

In its simplest form, a GAN consists of two parts: a generator and a discriminator. In the super-resolution setting, the generator takes an image and returns one twice as large. The discriminator receives both generated and "real" images and tries to tell them apart.
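To make the two roles concrete, here is a minimal sketch in plain Python. The nearest-neighbour `toy_generator` is a hypothetical placeholder that only honours the 2× size contract (a real generator is a trained convolutional network), and the losses are the standard binary cross-entropy GAN objectives, assumed here rather than taken from DeepHD.

```python
import math


def toy_generator(image):
    """Hypothetical stand-in generator: 2x nearest-neighbour upscaling.

    It only mimics the size contract (H x W in, 2H x 2W out); a real
    super-resolution generator is a trained convolutional network.
    """
    out = []
    for row in image:
        wide = [p for p in row for _ in range(2)]  # double the width
        out.append(wide)
        out.append(list(wide))  # double the height
    return out


def bce(p, target, eps=1e-7):
    """Binary cross-entropy of predicted probability p against a 0/1 target."""
    p = min(max(p, eps), 1 - eps)  # clamp to avoid log(0)
    return -(target * math.log(p) + (1 - target) * math.log(1 - p))


def discriminator_loss(d_real, d_fake):
    """The discriminator is rewarded for D(real) -> 1 and D(generated) -> 0."""
    real_term = sum(bce(p, 1) for p in d_real) / len(d_real)
    fake_term = sum(bce(p, 0) for p in d_fake) / len(d_fake)
    return real_term + fake_term


def generator_loss(d_fake):
    """Non-saturating generator loss: the generator wants D(generated) -> 1."""
    return sum(bce(p, 1) for p in d_fake) / len(d_fake)
```

For example, `toy_generator([[1, 2], [3, 4]])` returns a 4×4 image. Training alternates between the two objectives: the discriminator's loss falls as it separates real frames from generated ones, and the generator's loss falls as its outputs start to fool the discriminator.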

GAN (Generative Adversarial Networks)

To build a training set, the team collected several dozen Ultra HD videos and downscaled them to 1080p to serve as reference examples. They then halved the resolution again while compressing the videos at different bitrates, producing something resembling real low-quality footage. The resulting videos were split into frames and used to train the neural network.
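The data preparation could be scripted roughly as follows. This is a sketch under stated assumptions: the article doesn't name its tools, so the ffmpeg/libx264 commands, the 3840×2160 → 1920×1080 → 960×540 chain for 16:9 sources, the CRF value, and the bitrate list are all hypothetical choices.

```python
from pathlib import Path

# Hypothetical bitrate ladder for the degraded copies (not from the article).
BITRATES = ["500k", "1M", "2M"]


def reference_cmd(src, ref):
    """Downscale an Ultra HD source to a 1080p reference clip.

    A low CRF keeps the reference close to lossless; the value 10 is an
    illustrative assumption.
    """
    return ["ffmpeg", "-i", str(src), "-vf", "scale=1920:1080",
            "-c:v", "libx264", "-crf", "10", str(ref)]


def degraded_cmd(ref, out, bitrate):
    """Halve the reference resolution and re-encode at a constrained bitrate."""
    return ["ffmpeg", "-i", str(ref), "-vf", "scale=960:540",
            "-c:v", "libx264", "-b:v", bitrate, str(out)]


def frames_cmd(video, frame_dir):
    """Split a video into numbered PNG frames (the directory must already exist)."""
    return ["ffmpeg", "-i", str(video), str(Path(frame_dir) / "%06d.png")]


def build_pipeline(src, workdir):
    """Return the ffmpeg command lines for one source video, in execution order."""
    work = Path(workdir)
    ref = work / "ref_1080p.mp4"
    cmds = [reference_cmd(src, ref), frames_cmd(ref, work / "ref_frames")]
    for bitrate in BITRATES:
        low = work / f"low_540p_{bitrate}.mp4"
        cmds.append(degraded_cmd(ref, low, bitrate))
        cmds.append(frames_cmd(low, work / f"low_frames_{bitrate}"))
    return cmds
```

Each command can be run with `subprocess.run(cmd, check=True)` once the frame directories exist. Frames with the same index in `ref_frames` and in a `low_frames_*` directory should then form one (target, input) training pair.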

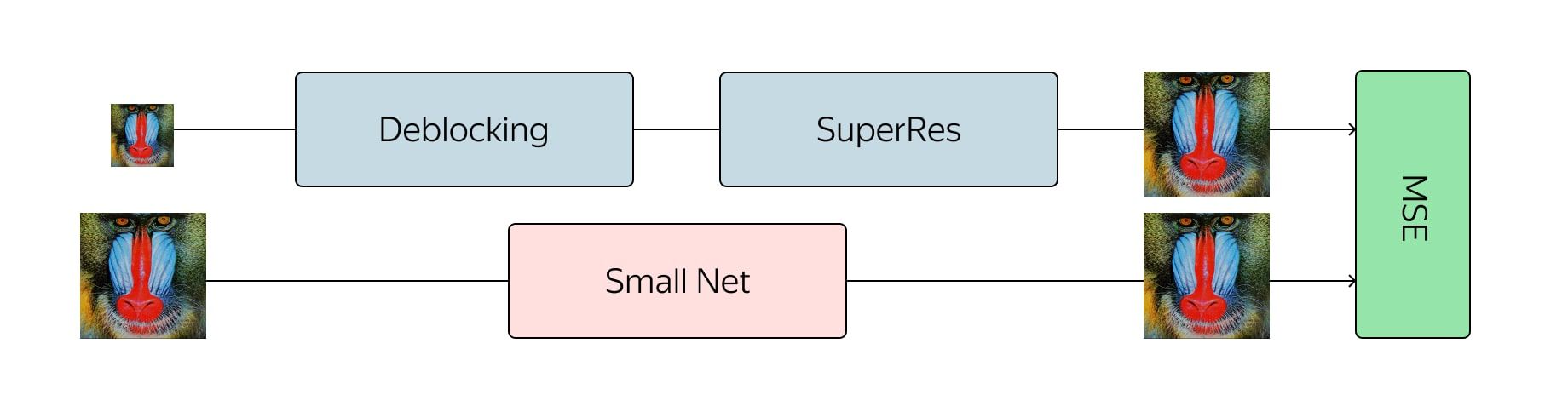

The team was aiming for an end-to-end solution: a neural network that would generate high-resolution, high-quality video straight from the original. However, the GANs proved unreliable and kept adding detail to compression artifacts rather than eliminating them, so the process had to be split into stages. The first stage removes video compression artifacts, a step also known as deblocking.
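The staged design can be illustrated on a single row of pixels. This sketch swaps in simple stand-ins for both neural stages: a classic non-neural deblocking filter smooths the two pixels on either side of each codec block boundary with a [1, 2, 1] kernel, and nearest-neighbour duplication stands in for the super-resolution network. The 8-pixel block size and the kernel are assumptions for illustration.

```python
BLOCK = 8  # assumed codec block size, in pixels


def deblock_row(row, block=BLOCK):
    """Smooth pixels adjacent to each block boundary with a [1, 2, 1] kernel.

    Only the two pixels touching a boundary change, and the new values are
    computed from the unfiltered input so the result does not depend on
    processing order. Assumes block >= 2.
    """
    out = list(row)
    for b in range(block, len(row), block):
        p1, p0, q0 = row[b - 2], row[b - 1], row[b]
        q1 = row[b + 1] if b + 1 < len(row) else q0
        out[b - 1] = (p1 + 2 * p0 + q0) / 4
        out[b] = (p0 + 2 * q0 + q1) / 4
    return out


def upscale_row(row):
    """Stand-in for the super-resolution stage: 2x nearest-neighbour."""
    return [p for p in row for _ in range(2)]


def enhance_row(row):
    """Stage 1 removes blocking; stage 2 then only has to add resolution."""
    return upscale_row(deblock_row(row))


blocky = [10] * 8 + [30] * 8      # a hard step on a block boundary, like a blocking artifact
print(deblock_row(blocky)[6:10])  # → [10, 15.0, 25.0, 30]
```

Running the upscaler on the unfiltered row would simply duplicate the hard step, which mirrors the problem the team hit when a single network tried to do both jobs. Real codec deblocking filters are adaptive and leave genuine edges alone; this fixed kernel does not.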

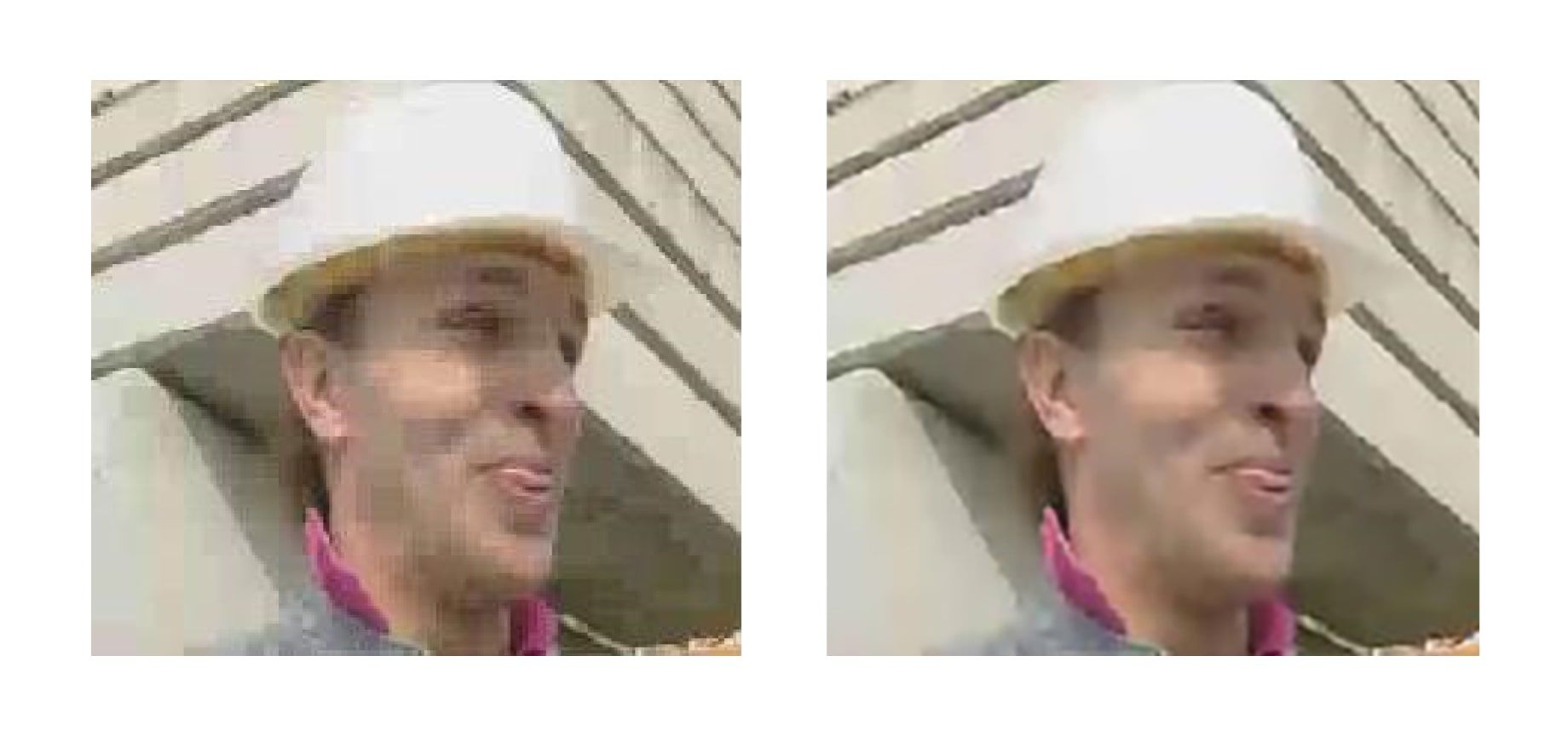

Here's an example of one deblocking method:

Deblocking

At this stage, the team minimized the mean squared error between the generated frames and the original ones. This enlarged the frames, but because of regression toward the mean it added no real detail. The neural network didn't know the exact pixel coordinates of the borders in the image, so it was forced to average several plausible options, producing a blurry result.
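A toy example shows why minimizing mean squared error blurs edges. Suppose the network cannot tell which of two equally likely frames is correct, and the two differ only in where an edge sits. The prediction that minimizes expected MSE is then the pixel-wise mean of the candidates, which matches neither sharp edge. The 1-D rows below are hypothetical data.

```python
# Two equally likely "true" rows that differ only in where the edge sits.
CANDIDATES = [
    [0.0, 0.0, 1.0, 1.0],  # edge between pixels 1 and 2
    [0.0, 1.0, 1.0, 1.0],  # edge between pixels 0 and 1
]


def expected_mse(pred, candidates=CANDIDATES):
    """Average MSE of one prediction against all equally likely targets."""
    total = 0.0
    for target in candidates:
        total += sum((p - t) ** 2 for p, t in zip(pred, target)) / len(pred)
    return total / len(candidates)


def mse_optimal(candidates=CANDIDATES):
    """Pixel-wise mean of the candidates: the minimizer of expected MSE."""
    n = len(candidates)
    return [sum(col) / n for col in zip(*candidates)]


blurry = mse_optimal()
print(blurry)                       # → [0.0, 0.5, 1.0, 1.0]
print(expected_mse(blurry))         # → 0.0625
print(expected_mse(CANDIDATES[0]))  # → 0.125
```

Committing to either sharp edge costs twice as much in expectation as hedging, so an MSE-trained network learns to hedge, and the hedge is a half-grey pixel where the edge should be.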

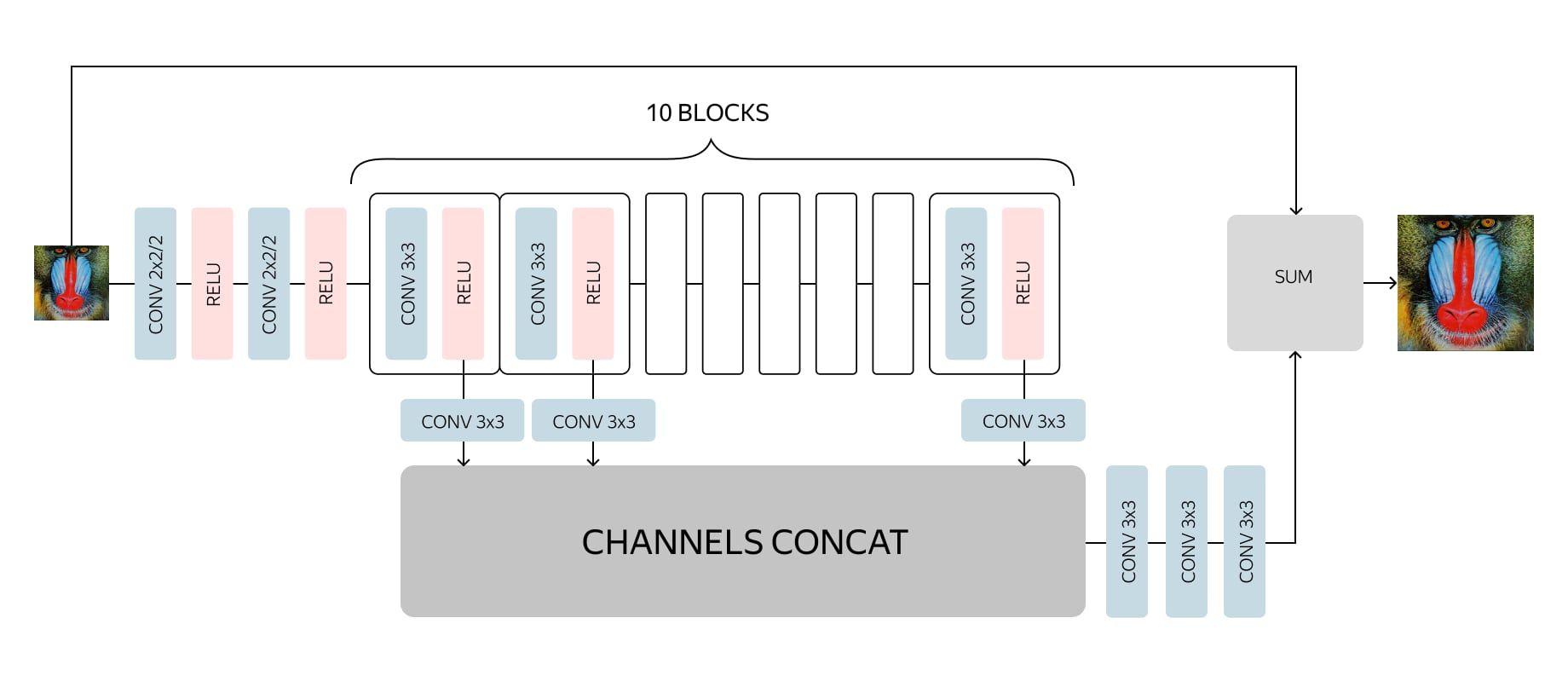

The main achievement at this stage was the removal of video compression artifacts. This meant that, at the next stage, the generative network would only need to sharpen the image and add missing details and textures. After hundreds of experiments, the developers identified an architecture that best balanced performance and quality. It somewhat resembles the DRCN architecture:

Deblocking architecture

The goal was to make the architecture as deep as possible while avoiding convergence problems during training. On the one hand, each successive convolutional layer extracts increasingly complex features from the input image, which helps determine what kind of object occupies any given place in the image and restore complex, severely damaged elements. On the other hand, the distance in the computational graph between any layer of the network and its output remains small, which improves convergence and makes it possible to use many layers.
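The two properties described above, great depth and a short path from every layer to the output, are what DRCN (Deeply-Recursive Convolutional Network) is built around. The article only says the deblocking network somewhat resembles DRCN, so the toy forward pass below follows the published DRCN idea on a 1-D signal rather than DeepHD's actual network. The weight sharing across recursions is DRCN's design, and all kernels and weights are hypothetical and untrained.

```python
def conv1d(signal, kernel):
    """'Same'-length 1-D convolution (cross-correlation, as in deep learning)
    with zero padding; the kernel length must be odd."""
    r = len(kernel) // 2
    padded = [0.0] * r + list(signal) + [0.0] * r
    return [sum(k * padded[i + j] for j, k in enumerate(kernel))
            for i in range(len(signal))]


def relu(xs):
    return [max(0.0, x) for x in xs]


def drcn_like(x, embed_k, shared_k, recon_k, depth, weights):
    """Toy DRCN-style forward pass on a 1-D signal.

    - shared_k is applied `depth` times, so extra depth adds no parameters;
    - every intermediate state is reconstructed and added to the input
      (a skip connection), giving one prediction per recursion;
    - the output is a weighted average of those predictions, so each
      recursion is only one reconstruction layer away from the output.
    """
    assert len(weights) == depth
    h = relu(conv1d(x, embed_k))
    preds = []
    for _ in range(depth):
        h = relu(conv1d(h, shared_k))
        residual = conv1d(h, recon_k)
        preds.append([xi + ri for xi, ri in zip(x, residual)])
    total = sum(weights)
    return [sum(w * p[i] for w, p in zip(weights, preds)) / total
            for i in range(len(x))]
```

With a zero reconstruction kernel every prediction collapses to the input, so `drcn_like([1, 2, 3], [1.0], [1.0], [0.0], 3, [1, 1, 1])` returns `[1.0, 2.0, 3.0]`. Because of the skip connection, the recursions only have to learn a correction to the input frame.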

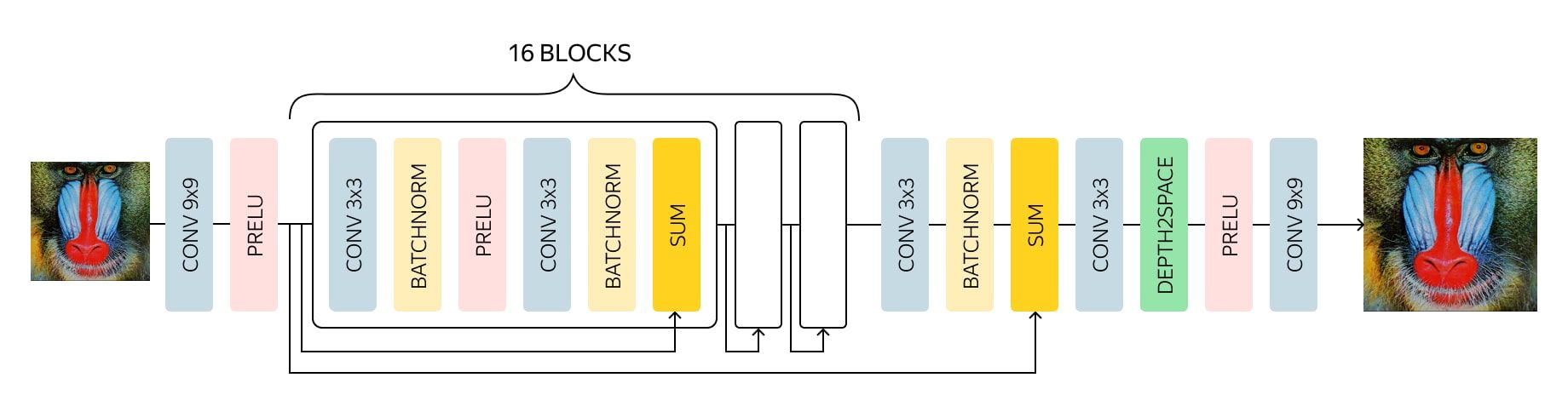

The developers based the super-resolution network on the SRGAN architecture. Before adversarial training, it is essential to pre-train the generator the same way as at the deblocking stage. Otherwise, the generator starts out returning noise, the discriminator immediately "wins" by easily learning to distinguish that noise from real frames, and training fails.
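The two-phase schedule can be sketched as a loop that keeps the adversarial term switched off until the generator has been pre-trained. The step counts are hypothetical, the callables stand in for real optimizer updates, and the 1e-3 adversarial weight follows the SRGAN paper's loss weighting; the article doesn't say which values DeepHD used.

```python
ADV_WEIGHT = 1e-3  # SRGAN-style adversarial weight (an assumption for DeepHD)


def loss_weights(step, pretrain_steps):
    """Return (content_weight, adversarial_weight, train_discriminator) for a step."""
    if step < pretrain_steps:
        # Phase 1: reconstruction-only pre-training, as at the deblocking stage.
        return 1.0, 0.0, False
    # Phase 2: adversarial training starts from a generator that no longer emits noise.
    return 1.0, ADV_WEIGHT, True


def run_schedule(total_steps, pretrain_steps, generator_step, discriminator_step):
    """Drive hypothetical update callables; return the number of discriminator updates."""
    d_updates = 0
    for step in range(total_steps):
        w_content, w_adv, train_d = loss_weights(step, pretrain_steps)
        if train_d:
            discriminator_step()
            d_updates += 1
        generator_step(w_content, w_adv)
    return d_updates
```

Keeping the discriminator frozen during phase 1 is the point: it only starts learning once the generator's outputs are already close enough to real frames that telling them apart is a meaningful task.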


After pre-training, the next step was to train the GAN, which came with its own set of issues. The generator must create photorealistic frames while also preserving the information the input frames already contain. For that purpose, a content loss function was added to the classical GAN architecture. It uses several layers of a VGG19 network pre-trained on the standard ImageNet dataset. These layers transform an image into a feature map that captures information about its content, and the loss minimizes the distance between the feature maps obtained from the generated and original frames. This loss also keeps the generator from being ruined in the early stages of training, when the discriminator has yet to be trained and provides only useless feedback.
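The generator's objective can be sketched as a content term plus a weighted adversarial term. Here `toy_features` (horizontal pixel differences) is a hypothetical stand-in for the VGG19 feature maps described above, and the 1e-3 adversarial weight is the SRGAN paper's choice, assumed rather than confirmed for DeepHD.

```python
import math


def toy_features(image):
    """Hypothetical stand-in for VGG19 feature maps: horizontal differences.

    A real content loss would pass frames through several layers of an
    ImageNet-pretrained VGG19 and compare the resulting activations.
    """
    return [[row[i + 1] - row[i] for i in range(len(row) - 1)] for row in image]


def content_loss(generated, original, features=toy_features):
    """Mean squared distance between the feature maps of two frames."""
    fg, fo = features(generated), features(original)
    diffs = [(a - b) ** 2 for rg, ro in zip(fg, fo) for a, b in zip(rg, ro)]
    return sum(diffs) / len(diffs)


def generator_total_loss(generated, original, d_fake_prob, adv_weight=1e-3):
    """Content loss plus the adversarial term -log D(G(x))."""
    adversarial = -math.log(max(d_fake_prob, 1e-7))
    return content_loss(generated, original) + adv_weight * adversarial
```

Since the content term doesn't depend on the discriminator at all, it gives the generator a meaningful signal even while the discriminator is still untrained; the small adversarial weight then nudges outputs toward photorealism without overriding content.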

GAN architecture

A successful series of experiments produced a good model that we could apply to old movies. However, it was still too slow for streaming video. As it turns out, you can't simply shrink the generator without significant quality loss.

The knowledge distillation approach saved the day. In this method, a lighter model is trained to reproduce the results of a heavier one. The team took a large number of real low-quality videos, processed them with the generative neural network from the previous step, and then trained the lighter network to produce the same output from the same frames. The result is a network whose quality is only slightly worse than the original's but which runs ten times faster. A single NVIDIA Tesla V100 card can process a TV channel in 576p resolution.
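The key property of distillation is that the training targets come from the teacher, so no high-quality ground truth is needed, only unlabeled low-quality input. The toy sketch below reduces both networks to scalar functions: a hypothetical "heavy" teacher that averages 100 sub-models, and a two-parameter linear student fitted to the teacher's outputs by stochastic gradient descent on squared error. Real teacher and student networks would be convolutional and operate on whole frames.

```python
def teacher(x):
    """Hypothetical heavy model: an average over 100 slightly different sub-models."""
    return sum(1.5 * x + 0.2 + 0.001 * k for k in range(100)) / 100


def distill(inputs, epochs=500, lr=0.1):
    """Fit a light student y = w * x + b to the teacher's outputs with SGD."""
    targets = [teacher(x) for x in inputs]  # teacher labels; no ground truth used
    w, b = 0.0, 0.0
    for _ in range(epochs):
        for x, t in zip(inputs, targets):
            err = (w * x + b) - t  # gradient of 0.5 * err**2 w.r.t. the prediction
            w -= lr * err * x
            b -= lr * err
    return w, b


unlabeled = [i / 20 for i in range(21)]  # stand-in for real low-quality frames
w, b = distill(unlabeled)
```

The student converges to roughly w = 1.5 and b = 0.2495, reproducing the teacher while evaluating two parameters instead of 100 sub-models. In this toy the student has enough capacity to match the teacher exactly; a real student is smaller than its teacher in ways that cost some quality, which is the "slightly worse" trade-off described above.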


Perhaps the most difficult part of working with generative networks is evaluating the quality of the resulting models. Unlike in classification problems, there's no clear error function. Only the discriminator's accuracy is known, and it does not reflect the quality of the generator. (Readers well acquainted with this topic may suggest the Wasserstein metric, but in this case it produced notably inferior results.)

Real people helped us solve this problem. Toloka users were shown pairs of images: either the source image and its version processed by the neural network, or two versions processed by different solutions. Users were paid to select the better one. This produced statistically significant comparisons, even in cases where the differences were difficult to detect visually. Our team's final models came out on top in over 70% of cases, which is quite a lot, considering that users spend only a few seconds evaluating each pair.
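The article doesn't say which statistical test was used. One standard choice for paired preferences is an exact two-sided binomial (sign) test against a 50/50 null, sketched below. The sample sizes in the usage lines are hypothetical, chosen only to show that the same 70% win rate is weak evidence over 10 judgments but strong evidence over 100.

```python
from math import comb


def sign_test_p_value(wins, n):
    """Exact two-sided binomial (sign) test.

    H0: neither version is preferred, i.e. each of the n pairwise
    judgments is a fair coin flip. Ties are assumed to have been removed.
    """
    def tail_at_least(k):
        # P(X >= k) for X ~ Binomial(n, 0.5)
        return sum(comb(n, i) for i in range(k, n + 1)) / 2 ** n

    upper = tail_at_least(wins)      # P(X >= wins)
    lower = tail_at_least(n - wins)  # P(X <= wins), by symmetry of p = 0.5
    return min(1.0, 2 * min(upper, lower))


print(sign_test_p_value(7, 10))            # → 0.34375
print(sign_test_p_value(70, 100) < 0.001)  # → True
```

This is why crowdsourced comparisons can detect differences that are hard to see: each individual judgment is noisy, but enough of them make even a modest preference statistically unambiguous.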

Another interesting result: for videos in 576p resolution, the versions upscaled to 720p with DeepHD beat the original 720p videos in 60% of cases. In other words, the processing not only increased the video resolution but actually improved its visual quality.

DeepHD technology was tested on several old movies.

The difference between the before and after versions is especially clear in the details, like the characters' facial expressions in close-ups, clothing textures, or fabric patterns. The technology also made up for some shortcomings of digitization, for example by fixing overexposure on faces and improving the clarity of objects in shadow.

DeepHD quality improvement