Evaluating Large Language Models

Read the recent case study to learn how Toloka effectively scaled human evaluation by processing thousands of multi-turn dialogues daily, demonstrating significant improvements in throughput and efficiency. By leveraging a large pool of domain experts, Toloka’s approach optimizes the quality and speed of evaluations necessary for training, fine-tuning, and validating. This framework ensures consistency and accuracy in assessment and supports the dynamic needs of high-throughput environments, making it ideal for ongoing, intensive model evaluations.

Introduction

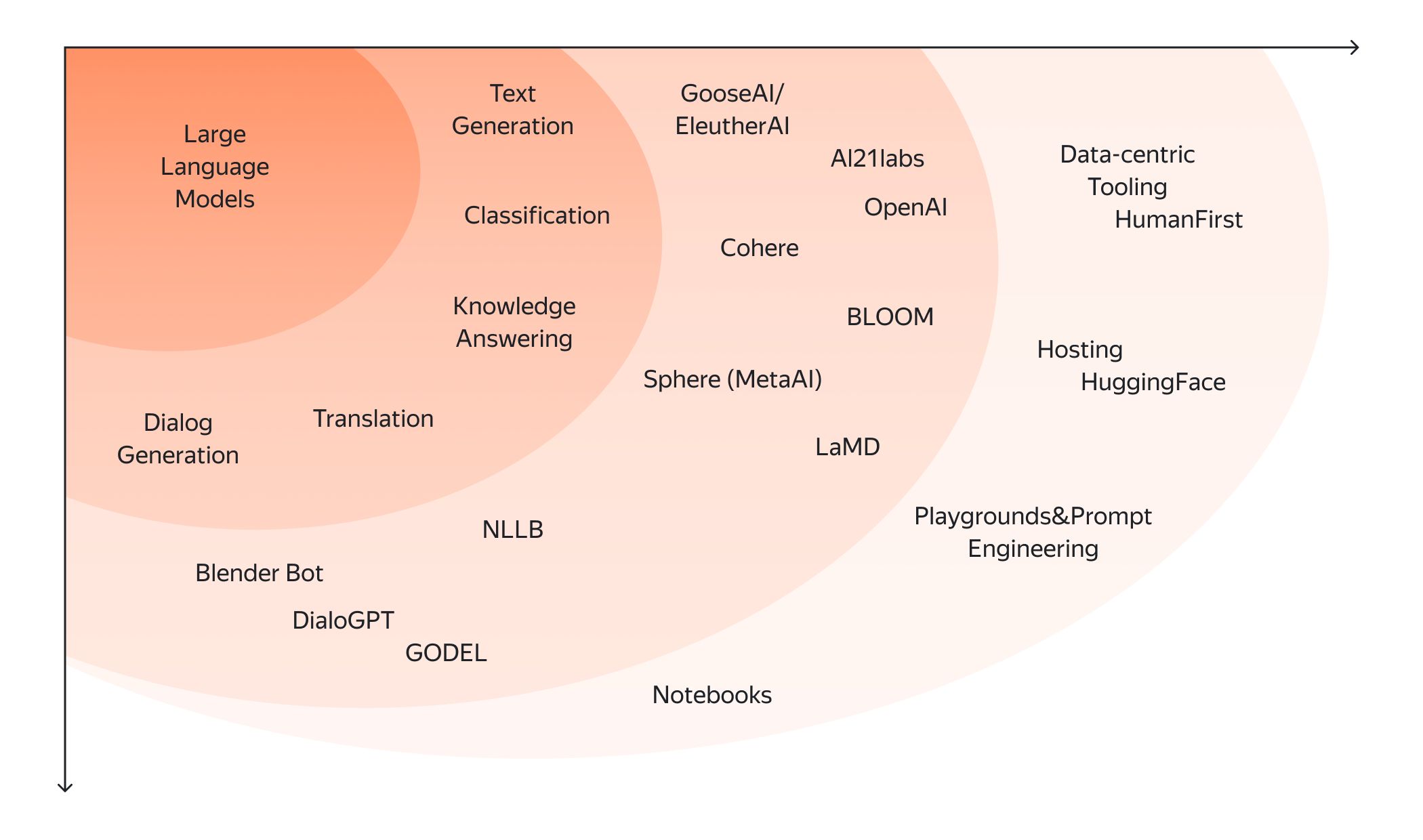

As advanced Natural Language Processing (NLP) models, Large Language Models (LLMs) are the talk of the town these days given their uncanny ability to generate human-like text by leveraging large quantities of pre-existing language data and multifaceted machine learning algorithms. Think GPT-3, BERT, and XLNet. However, as we become increasingly dependent on these models, finding ways to effectively evaluate them and safeguard against potential threats is more imperative than ever.

In this article, we cover in detail the importance of evaluating large language models to improve their performance and why human insight is critical to this process. While much of the information out there indicates that incorporating human input can be costly, with the help of Toloka’s crowd contributors, it can be done much faster and in a more cost-effective way.

The importance of evaluating LLMs

As large language models become more deeply embedded in machine learning, having thorough evaluation methods and comprehensive regulation frameworks in place can help ensure that AI is serving everyone’s best interests.

The number of large language models on the market has grown rapidly, but there’s no set standard by which to evaluate them. As a result, it’s becoming significantly more challenging to both evaluate models and determine which one is the best and the safest. That’s why we need a reliable, comprehensive evaluation framework by which to precisely assess the quality of large language models. A standardized framework will not only help regulators evaluate the safety, accuracy, and reliability of a model, but it will also hold developers accountable when releasing these models, rather than letting them simply slap a disclaimer on their product. Moreover, large language model users will be able to see whether they need to fine-tune a model on supplementary data.

Source: https://www.analyticsvidhya.com/blog/2023/05/how-to-evaluate-a-large-language-model-llm/

Why evaluate LLMs?

It’s vital to evaluate large language models to assess their quality and usefulness in different applications. We’ve outlined some real-life examples of why it’s important to evaluate large language models:

Assessing performance

A company must choose between several models for its foundational enterprise generative model based on relevance, accuracy, fluency, and more. The given LLMs must be assessed according to their ability to generate text and respond to input.

Comparing models

A company selects and fine-tunes a model for better performance on industry-specific tasks by carrying out a comparative evaluation of LLMs to choose the one that best suits its needs.

Detecting and preventing bias

By having a holistic evaluation framework, companies can work to detect and eliminate bias found in large language model outputs and training data to create fairer outcomes.

Building user trust

Evaluating user feedback and trust in the answers provided by LLMs is paramount to building reliable systems that are aligned with user expectations and societal norms.

Methods for evaluating LLMs

Evaluating an LLM’s performance includes measuring features such as language fluency, coherence, context comprehension, speech recognition, factual accuracy, and the ability to generate relevant and meaningful responses. Metrics like perplexity, BLEU score, and human evaluations can be used to assess and compare language model performance.

This process requires a closer look at the following factors:

Authenticity and accuracy — How close a model’s outputs are to the correct answers or expected results. Precision, recall, and F1 scores point to a model’s accuracy level.

Speed — The speed at which a model can produce outputs is essential, especially in cases where rapid response times are critical.

Grammar and legibility — LLMs need to be able to generate text that is readable with correct grammar usage and sentence structure.

Fairness and equality — Making sure a model doesn’t have any bias toward specific groups and doesn’t generate discriminatory outcomes based on race, gender, and other factors.

Robustness — A model’s resistance to adversarial attacks and its ability to perform well across various conditions.

Explainability — LLMs must substantiate their predictions and outputs to build trust among users and provide accountability. They need to be able to provide sources and references.

Safety and responsibility — Guardrails are essential for AI models to make them safer and more reliable (more on this later).

Understanding context — The model needs to be able to understand the context surrounding a given question since that same question can be asked in different ways.

Text operations — LLMs should be able to carry out text classification, summarization, translation, and other basic functions.

Generalization — How well a model manages unseen data or scenarios.

Intelligence Quotient (IQ) — Just as humans are evaluated based on their IQs, so too can machines be.

Emotional Quotient (EQ) — The same applies to emotional intelligence.

Versatility — The number of domains, industries, and languages a model can work with.

Real-time updates — Models that are updated in real-time can produce enhanced outputs.

Cost — Cost needs to be factored in when considering the development and application of these models.

Consistency — Comparable prompts should ideally generate identical or similar responses from models.

Prompt Engineering — The engineering needed to obtain the best response can also be leveraged to compare two models.

By ensuring the points above are taken into account in our evaluation of LLMs, we can maximize the potential of these formidable models.
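As a small illustration of the precision, recall, and F1 scores mentioned under accuracy, here is a minimal sketch in Python. The labels are hypothetical and assume a binary classification task in which `1` marks the positive class; nothing here comes from a specific evaluation library.

```python
def precision_recall_f1(y_true, y_pred, positive=1):
    """Compute precision, recall, and F1 for a single positive class."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == positive and p == positive)
    fp = sum(1 for t, p in pairs if t != positive and p == positive)
    fn = sum(1 for t, p in pairs if t == positive and p != positive)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    if precision + recall:
        f1 = 2 * precision * recall / (precision + recall)
    else:
        f1 = 0.0
    return precision, recall, f1


# Hypothetical gold labels and model predictions (illustrative only).
y_true = [1, 0, 1, 1, 0, 1]
y_pred = [1, 1, 1, 0, 0, 1]

p, r, f = precision_recall_f1(y_true, y_pred)
print(f"precision={p:.2f} recall={r:.2f} f1={f:.2f}")
```

Precision penalizes false positives and recall penalizes false negatives, so reporting both alongside F1 shows which kind of error a model tends to make.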

Here are five of the most common methods used to evaluate LLMs today:

Perplexity — measures how well a model predicts a sample of text; lower values indicate better performance.

Human evaluation — human evaluators help to review the overall quality of a language model’s output based on certain criteria such as relevance, fluency, and coherence.

Bilingual Evaluation Understudy (BLEU) — a metric that compares generated text with one or more reference texts.

Recall-Oriented Understudy for Gisting Evaluation (ROUGE) — a metric that shows how well a model generates summaries compared to reference summaries.

Diversity — metrics that evaluate the variety and uniqueness of responses generated by a model, where higher scores indicate more varied outputs.
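The automatic metrics in this list can be sketched in a few lines of Python. This is a simplified illustration under stated assumptions, not a reference implementation: `clipped_precision` is only the n-gram precision building block of BLEU (it omits the brevity penalty and the averaging over several n-gram sizes), `rouge_n_recall` covers only the ROUGE-N recall variant, `distinct_n` is one common way to approximate diversity, and the token probabilities and sentences are made up.

```python
import math
from collections import Counter


def perplexity(token_probs):
    """Exponential of the average negative log-probability per token."""
    nll = -sum(math.log(p) for p in token_probs) / len(token_probs)
    return math.exp(nll)


def ngrams(tokens, n):
    """All contiguous n-grams of a token list, as tuples."""
    return [tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1)]


def clipped_precision(candidate, reference, n=1):
    """Clipped n-gram precision: share of candidate n-grams found in the reference."""
    cand = Counter(ngrams(candidate, n))
    ref = Counter(ngrams(reference, n))
    overlap = sum(min(count, ref[gram]) for gram, count in cand.items())
    total = sum(cand.values())
    return overlap / total if total else 0.0


def rouge_n_recall(candidate, reference, n=1):
    """ROUGE-N recall: share of reference n-grams recovered by the candidate."""
    cand = Counter(ngrams(candidate, n))
    ref = Counter(ngrams(reference, n))
    overlap = sum(min(count, cand[gram]) for gram, count in ref.items())
    total = sum(ref.values())
    return overlap / total if total else 0.0


def distinct_n(responses, n=1):
    """Ratio of unique n-grams to all n-grams across a set of responses."""
    grams = [g for tokens in responses for g in ngrams(tokens, n)]
    return len(set(grams)) / len(grams) if grams else 0.0


# Made-up inputs for illustration.
candidate = "the cat sat on the mat".split()
reference = "the cat is on the mat".split()

print(perplexity([0.25, 0.25, 0.25, 0.25]))
print(clipped_precision(candidate, reference))
print(rouge_n_recall(candidate, reference))
print(distinct_n([["a", "b"], ["a", "c"]]))
```

For real evaluations, an established metric library is usually a safer choice than hand-rolled functions, since tokenization and smoothing details affect the scores.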

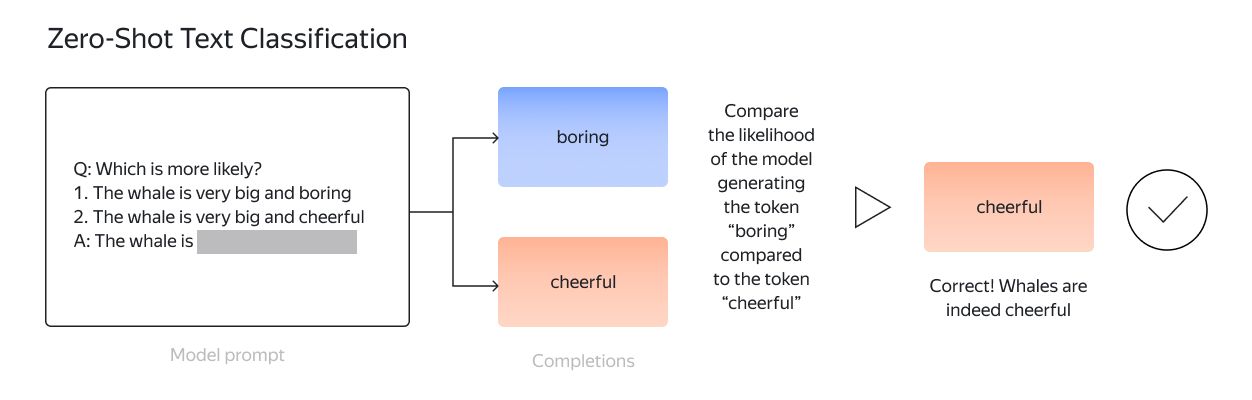

Zero-shot evaluation

Moreover, zero-shot evaluation can now be used for large language models. A cost-effective and well-known way to assess the performance of LLMs, zero-shot evaluation measures the probability that a trained model will produce a particular set of tokens for a task, without needing any labeled training data for that task.
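One way to picture this is to score each candidate answer by the probability the model assigns to its tokens and pick the most likely one. The sketch below assumes a toy bigram probability table (`TOY_BIGRAM_PROBS`) as a stand-in for a real language model; the table, the prompt, and the function names are all hypothetical.

```python
import math

# Hypothetical stand-in for a language model: P(next_token | previous_token).
# A real model would supply these probabilities.
TOY_BIGRAM_PROBS = {
    ("is", "positive"): 0.6,
    ("is", "negative"): 0.3,
}


def sequence_log_prob(prompt_tokens, continuation_tokens, probs, floor=1e-6):
    """Sum of log P(token | previous token) over the continuation tokens."""
    prev = prompt_tokens[-1]
    total = 0.0
    for token in continuation_tokens:
        # Unseen pairs get a small floor probability instead of zero.
        total += math.log(probs.get((prev, token), floor))
        prev = token
    return total


def zero_shot_choice(prompt_tokens, candidates, probs):
    """Return the candidate continuation the model finds most likely, plus all scores."""
    scores = {
        " ".join(c): sequence_log_prob(prompt_tokens, c, probs) for c in candidates
    }
    return max(scores, key=scores.get), scores


prompt = "Review: a wonderful film . The sentiment is".split()
choice, scores = zero_shot_choice(prompt, [["positive"], ["negative"]], TOY_BIGRAM_PROBS)
print(choice, scores)
```

No labeled examples are used to adapt the model here; the labels are needed only afterwards, to check whether the chosen answer was correct.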

Challenges with current model evaluation techniques

Multiple frameworks have been developed to evaluate LLMs, such as BIG-bench, GLUE, and SuperGLUE, each focusing on its own domain. For example, BIG-bench takes generalization capabilities into account; GLUE considers grammar, paraphrasing, text similarity, inference, and several other factors; and SuperGLUE looks at natural language understanding, reasoning, reading comprehension, and how well an LLM understands complex sentences beyond its training data, among other considerations. See the table below for an overview of the current evaluation frameworks and the factors they consider when evaluating LLMs.

Source: Analytics Vidhya, Table of the Major Existing Evaluation Frameworks

Apart from OpenAI’s Moderation API, most of the frameworks available today don’t take safety into account in their evaluation results, and none of them is comprehensive enough to be used on its own.

There are still quite a few obstacles to overcome when it comes to evaluating large language models. Some of the most frequent challenges include:

Over-dependence on perplexity — For all the good that perplexity does, it still has its limitations, namely, it can’t determine context understanding, relevance, or consistency.

Human subjectivity — Despite the fact that human input is critical for assessing LLMs, humans are nevertheless susceptible to subconscious bias and differing views.

Insufficient reference data — Getting a hold of quality reference data poses a significant challenge, especially when you’re seeking multiple responses for tasks.

Limited diversity metrics — Current evaluation methods focus solely on accuracy and applicability of LLM outputs rather than diversity and creativity.

Inapplicability to real-life cases — The real world is filled with complexities and challenges that current evaluation methods are not equipped to handle.

Adversarial attacks — LLMs are easy targets for deception, misuse, and manipulation via insidiously constructed input, and current methods don’t factor these attacks in.

Data leakage — Test data commonly leaks into the training set, which inflates evaluation scores; details of a dataset can also go missing.

Coverage issues — Evaluation datasets don’t always have wide-ranging coverage of all the ways in which a task can be evaluated, which could show up as accuracy, variability, sample size, or robustness issues.

False correlations or duplicate test samples — The evaluation sets for many tasks often contain a “shortcut” solution, which can create a false illusion that a test sample is a reliable representation upon which to evaluate a particular task.

Partitioning and phrasing — Evaluation dataset splits can include differently phrased versions of the same problem.

Random seed — Neural network outputs depend on the random seed, so results can vary from run to run and lead to inaccurate conclusions.

Precision versus recall — False positives and false negatives don’t carry the same cost in every task, and either can be harmful in certain contexts.

Unexplained decisions — When it comes to getting rid of or keeping data, being able to explain the given thresholds is essential.
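Some of these challenges, such as data leakage and duplicate test samples, can be partly checked with simple tooling. The sketch below is a minimal illustration that assumes exact matching after lowercasing and whitespace normalization; the function names and example texts are hypothetical, and paraphrased or near-duplicate items would need fuzzier matching.

```python
def normalize(text):
    """Lowercase and collapse whitespace so trivial variants compare equal."""
    return " ".join(text.lower().split())


def find_leakage(train_texts, test_texts):
    """Return (leaked, duplicates).

    leaked: test items that also appear in the training set.
    duplicates: test items that repeat an earlier test item.
    """
    train_seen = {normalize(t) for t in train_texts}
    seen_in_test = set()
    leaked, duplicates = [], []
    for text in test_texts:
        key = normalize(text)
        if key in train_seen:
            leaked.append(text)
        if key in seen_in_test:
            duplicates.append(text)
        seen_in_test.add(key)
    return leaked, duplicates


# Made-up examples for illustration.
train = ["What is the capital of France?", "Define perplexity."]
test = [
    "what is the capital of  France?",
    "Name a summarization metric.",
    "Name a summarization metric.",
]

leaked, duplicates = find_leakage(train, test)
print("leaked:", leaked)
print("duplicates:", duplicates)
```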

Best practices for assessing LLMs

The development of large language models has forever changed the field of Natural Language Processing and the fulfillment of natural language tasks, but we still need a comprehensive and standardized evaluation framework to assess the quality, accuracy, and reliability of these models. Current frameworks offer valuable insights, but they lack a uniform, more comprehensive evaluation approach and don’t factor in safety concerns.

Setting up an LLM

Before we dive into the best practices for evaluating LLMs, let’s take a look at the five steps required for setting up an LLM:

Selecting benchmarks — Depicting real-life cases on an extensive range of language-related challenges such as language modeling, machine translation, and so on.

Preparing datasets — These must be large enough to obtain a high-quality and objective evaluation, including training, validation, and test sets.

Training and fine-tuning models — For example, pre-training on a large text corpus and then fine-tuning on benchmark datasets specific to a certain task.

Evaluating models — On benchmark tasks using predefined evaluation metrics, the results of which illustrate the overall performance of the models.

Performing a comparative analysis — On the overall performance of LLMs and models trained for each benchmark task according to specific metrics.
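The last two steps, evaluating models on benchmark tasks and comparing them, can be sketched as follows. This is a toy illustration: `model_a` and `model_b` are hypothetical stand-ins for real models, the benchmarks hold a single made-up item each, and exact-match accuracy is just one possible predefined metric.

```python
def exact_match_accuracy(model, benchmark):
    """Share of prompts whose output matches the expected answer (case-insensitive)."""
    correct = sum(
        1
        for prompt, expected in benchmark
        if model(prompt).strip().lower() == expected.strip().lower()
    )
    return correct / len(benchmark)


def compare_models(models, benchmarks):
    """Return {model_name: {benchmark_name: accuracy}} for every pairing."""
    return {
        name: {
            bench: exact_match_accuracy(fn, data)
            for bench, data in benchmarks.items()
        }
        for name, fn in models.items()
    }


# Hypothetical stand-ins for real models: simple lookup tables.
def model_a(prompt):
    return {"2 + 2 =": "4", "Capital of France?": "Paris"}.get(prompt, "")


def model_b(prompt):
    return {"2 + 2 =": "4"}.get(prompt, "")


benchmarks = {
    "arithmetic": [("2 + 2 =", "4")],
    "geography": [("Capital of France?", "paris")],
}

results = compare_models({"model_a": model_a, "model_b": model_b}, benchmarks)
for name, scores in results.items():
    print(name, scores)
```

Keeping the per-benchmark scores separate, rather than averaging them immediately, makes it easier to see where one model outperforms another.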

Best practices

As mentioned above, a dependable evaluation framework should factor in authenticity, speed, context, and more, which will help developers release LLMs responsibly. Collaboration among key stakeholders and regulators is key to creating a reliable and comprehensive evaluation framework.

In the meantime, we've outlined several best practices for circumventing some of the challenges of evaluating large language models:

Including varied metrics — Additional metrics, such as fluency, relevance, context, coherence, and diversity, lead to a more well-rounded review of large language model output.

Improving human evaluation — Creating guidelines, standards, and benchmarks can help make human evaluation more reliable, consistent, and objective. Crowdsourcing can be particularly helpful in this case for obtaining diverse feedback on large projects.

Incorporating diverse reference data — Reference data that comes from multiple sources encompassing diverse views can improve evaluation methods for model outputs.

Implementing diversity indicators — Incorporating these metrics can prompt models to develop strategies that generate diverse responses, from which uniqueness can be evaluated.

Using real-life scenarios — Incorporating real-world use cases and tasks, as well as industry- or domain-specific benchmarks, can provide a more realistic evaluation.

Assessing robustness — Evaluation methods that assess a model’s robustness against adversarial attacks can add to the safety and dependability of LLMs.
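As a rough illustration of a robustness check, the sketch below perturbs each prompt with small typos and measures how often the model's answer stays the same. It assumes a hypothetical `toy_model` and uses adjacent-character swaps as the perturbation, which is simple input noise rather than a true adversarial attack.

```python
import random


def perturb(prompt, rng):
    """Swap two adjacent characters at a random position (a simple typo)."""
    if len(prompt) < 2:
        return prompt
    i = rng.randrange(len(prompt) - 1)
    chars = list(prompt)
    chars[i], chars[i + 1] = chars[i + 1], chars[i]
    return "".join(chars)


def robustness_rate(model, prompts, n_variants=5, seed=0):
    """Fraction of perturbed prompts whose output matches the clean-prompt output."""
    rng = random.Random(seed)
    same = 0
    total = 0
    for prompt in prompts:
        baseline = model(prompt)
        for _ in range(n_variants):
            total += 1
            if model(perturb(prompt, rng)) == baseline:
                same += 1
    return same / total if total else 0.0


# Hypothetical keyword-based "model" used only to exercise the check.
def toy_model(prompt):
    return "refund" if "refund" in prompt.lower() else "other"


rate = robustness_rate(toy_model, ["I want a refund", "Where is my order?"])
print(f"robustness rate: {rate:.2f}")
```

A fixed seed keeps the check reproducible, which also helps with the random seed issue noted among the challenges above.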

Human input in LLM evaluation

Human input plays a key role in making large language models safer and more reliable. One of the main ways in which human input is valuable is in the establishment and enforcement of guardrails.

What are guardrails and how do they apply to AI?

While AI has the potential to simplify and improve our lives in many ways, it also comes with inherent risks and some real dangers, which cannot be ignored. That’s where guardrails come into play. In short, guardrails are essentially defenses — policies, strategies, mechanisms — that are put into place to ensure the ethical and responsible application of AI-based technologies. Guardrails are designed to preclude misuse, defend user privacy, and encourage transparency and equality. Without them, it could be a world gone mad!

With technology evolving at the speed of light, let’s take a closer look at how guardrails work and why they’re so important in effectively managing AI.

How guardrails work

Given the nature of the risks posed by AI — such as ethical and privacy concerns, bias and prejudice, as well as environmental and computational costs — guardrails work to prevent many of these challenges by establishing a set of guidelines and controls. Imagine if someone were to take unauthorized medical advice from an AI system. The results could be disastrous, and even deadly! That’s why building controls into AI systems that determine acceptable behavior and responses is so critical. We need them to ensure that AI technologies work within our societal norms and standards.

Below are some examples of guardrails:

Mechanisms that protect individual privacy and confidentiality of data

Procedures that avert biased results

Policies that regulate AI systems in accordance with ethical and legal benchmarks

Transparency and accountability go hand-in-hand when it comes to the importance of guardrails in AI systems. You need transparency to ensure that responses generated by these systems can be explained and mistakes can be found and fixed. Guardrails can also ensure that human input is taken into account in regard to important decisions in areas like medical care and self-driving cars. Basically, the point of guardrails is to make sure that AI technologies are being used for the betterment of humanity and are serving us in helpful and positive ways without posing significant risks, harm, or danger.

Why they’re so important in evaluation metrics

As AI systems continue to develop, they are endowed with an ever-increasing amount of responsibility and tasked with more important decisions. That means they have the power to make a greater impact, but also to inflict more damage if misused.

Here are some of the key benefits of guardrails:

Protect against the misuse of AI technologies

Promote equality and prevent bias

Preserve public trust in AI technologies

Comply with legal and regulatory standards

Facilitate human oversight in AI systems

Not only do guardrails ensure the safe, responsible, and ethical use of AI technologies, but they can also be used to detect and eliminate bias, promote openness and accountability, comply with local laws, and most importantly, implement human oversight to ensure that AI doesn’t replace human decision-making.

How guardrails can guarantee human input

By making sure that humans are involved in key decision-making processes, guardrails can help guarantee that AI technologies stay under our control and that their outputs follow our societal norms and values. That’s why keeping humans in the loop (HITL) — where both humans and machines work together to create machine learning models — is imperative to making sure that AI doesn’t go off the rails. HITL ensures that people are involved at every stage of the algorithm cycle, including training, tuning, and testing. As a result, AI outputs become safer and more reliable.

Overcoming the challenges of deploying guardrails

Implementing guardrails is no easy task. However, there are a couple of ways to overcome the technical complexities involved in this process, namely:

Adversarial training — Training an AI model on a series of adversarial examples, inputs deliberately crafted to fool the model into making mistakes, thereby teaching it to detect rigged inputs.

Differential privacy — Adding carefully calibrated random noise to training data, or to results computed from it, so that individual data points cannot be singled out, which protects confidential information as well as the privacy of individuals.
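To make the idea of calibrated noise more concrete, here is a minimal sketch of the Laplace mechanism, a standard building block of differential privacy. Note the assumption: it adds noise to an aggregate statistic (a count) rather than to the training process itself, and the `ages` data, the function names, and the `epsilon` value are all illustrative.

```python
import random


def laplace_noise(scale, rng):
    """Sample Laplace(0, scale) as the difference of two exponential draws."""
    return rng.expovariate(1 / scale) - rng.expovariate(1 / scale)


def private_count(values, predicate, epsilon, rng):
    """Release a count with Laplace noise.

    Adding or removing one person changes a count by at most 1
    (sensitivity 1), so the noise scale is 1 / epsilon.
    """
    if epsilon <= 0:
        raise ValueError("epsilon must be positive")
    true_count = sum(1 for v in values if predicate(v))
    return true_count + laplace_noise(1 / epsilon, rng)


# Made-up data for illustration.
ages = [23, 35, 41, 52, 29, 60]
rng = random.Random(42)

noisy = private_count(ages, lambda age: age >= 40, epsilon=0.5, rng=rng)
print(f"noisy count of people aged 40+: {noisy:.2f}")
```

A smaller `epsilon` means more noise and stronger privacy; a larger one gives more accurate results with weaker privacy.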

What’s more, the actual process of setting up guardrails poses a challenge in itself. You need predefined roles and responsibilities within your company, along with board oversight and tools for recording and surveying AI system outputs, not to mention legal and regulatory compliance measures. All this requires continual monitoring, assessment, and tweaking — it’s by no means an easy or static process! As AI evolves, so too will the guardrails needed to keep us safe.

Crowdsourcing in model evaluation

Human evaluation can be time-consuming and expensive, particularly when it comes to large-scale evaluations. That’s where crowdsourcing comes into play.

As mentioned above, crowdsourcing can be particularly helpful in improving human evaluation by obtaining diverse feedback on large projects. Human evaluation via crowdsourcing involves crowd participants evaluating the output or performance of a large language model within a given context. By leveraging the capabilities of millions of crowd contributors, you can obtain qualitative feedback and identify subtle nuances that traditional LLM-based evaluation might otherwise miss.
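When several contributors rate the same model output, their judgments have to be combined somehow. The sketch below uses simple majority voting with an agreement score; this is an assumed, deliberately basic aggregation scheme for illustration, not a description of Toloka’s actual method, and the item IDs and labels are made up.

```python
from collections import Counter


def aggregate_votes(judgments):
    """Map each item to (majority_label, agreement).

    judgments: {item_id: [label, label, ...]} collected from crowd contributors.
    agreement: share of contributors who chose the majority label.
    Ties go to the label that appears first in the list.
    """
    results = {}
    for item_id, labels in judgments.items():
        label, count = Counter(labels).most_common(1)[0]
        results[item_id] = (label, count / len(labels))
    return results


# Made-up judgments for two model responses.
judgments = {
    "response_1": ["good", "good", "bad"],
    "response_2": ["bad", "bad", "bad"],
}

aggregated = aggregate_votes(judgments)
for item_id, (label, agreement) in aggregated.items():
    print(item_id, label, f"{agreement:.2f}")
```

Items with low agreement are natural candidates for extra review or clearer guidelines, which ties back to the point about human subjectivity.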

Overall, crowdsourcing can be a great solution for evaluating large language model projects. At Toloka, we help clients solve real-life business challenges and offer best-in-class solutions for fine-tuning and evaluating language models. We help clients monitor quality in production applications and obtain unbiased feedback for model improvement using the collective power of the crowd.

Key takeaways

Large language models are revolutionizing many sectors from medicine and banking to academia and the media. As these models become increasingly sophisticated, regulatory oversight is critical. Data privacy, accountability, transparency, and elimination of bias all need to be taken into account. LLMs must also be able to substantiate and provide sources for their outputs and decisions in order to build public trust. As such, public involvement can be an effective way to ensure that these systems comply with societal norms and expectations.

To unlock the full potential of these language models, we need to incorporate suitable evaluation methods that cover accuracy, fairness, robustness, explainability, and generalization. That way, we can make the most of their strengths and successfully address their weaknesses.

We invite you to read through our blog to learn more about large language models and how Toloka can help in their evaluation process.

About Toloka

Toloka is a European company based in Amsterdam, the Netherlands, that provides data for Generative AI development. Toloka empowers businesses to build high-quality, safe, and responsible AI. We are the trusted data partner for all stages of AI development from training to evaluation. Toloka has over a decade of experience supporting clients with its unique methodology and optimal combination of machine learning technology and human expertise, offering the highest quality and scalability in the market.