How to blur people's faces in photos: Toloka's face blurring solution

Toloka provides an easy way to collect data about the offline world. When you need images of certain locations like buildings or streets, you can ask our Tolokers to grab their iOS or Android phones and go take some pictures. The tricky part is that sometimes photos include unsuspecting passersby — and we are responsible for protecting their privacy. We put a reminder in our apps and advise Tolokers to be careful, but accidental photobombs are bound to happen!

To avoid invading random people's privacy, we could detect faces and blur them on the server side. But from a security point of view, this would mean that a photo with potentially sensitive data would have to travel all the way to our backend first.

We have a better solution that performs anonymization right in our mobile apps — so no face ever leaves a Toloker's device.

In this blog post, we're going to talk about the fascinating world of ML-powered mobile face detection, the tools that make it happen, and the unexpected results we got.

Face detection

When we first started out, our expectations for the face detector were rather vague:

Something ML-ish (today's models trained on very large datasets of faces are believed to detect objects as effectively as humans).

Distributed under a license that lets us use it in Toloka.

Fast at detecting faces so that Tolokers don't have to wait.

Apparently, every other photo editing app today is capable of detecting faces, so we were certain we’d find plenty of tools that fit our short list of requirements. However, this was no more than an optimistic – and rather naïve – guess.

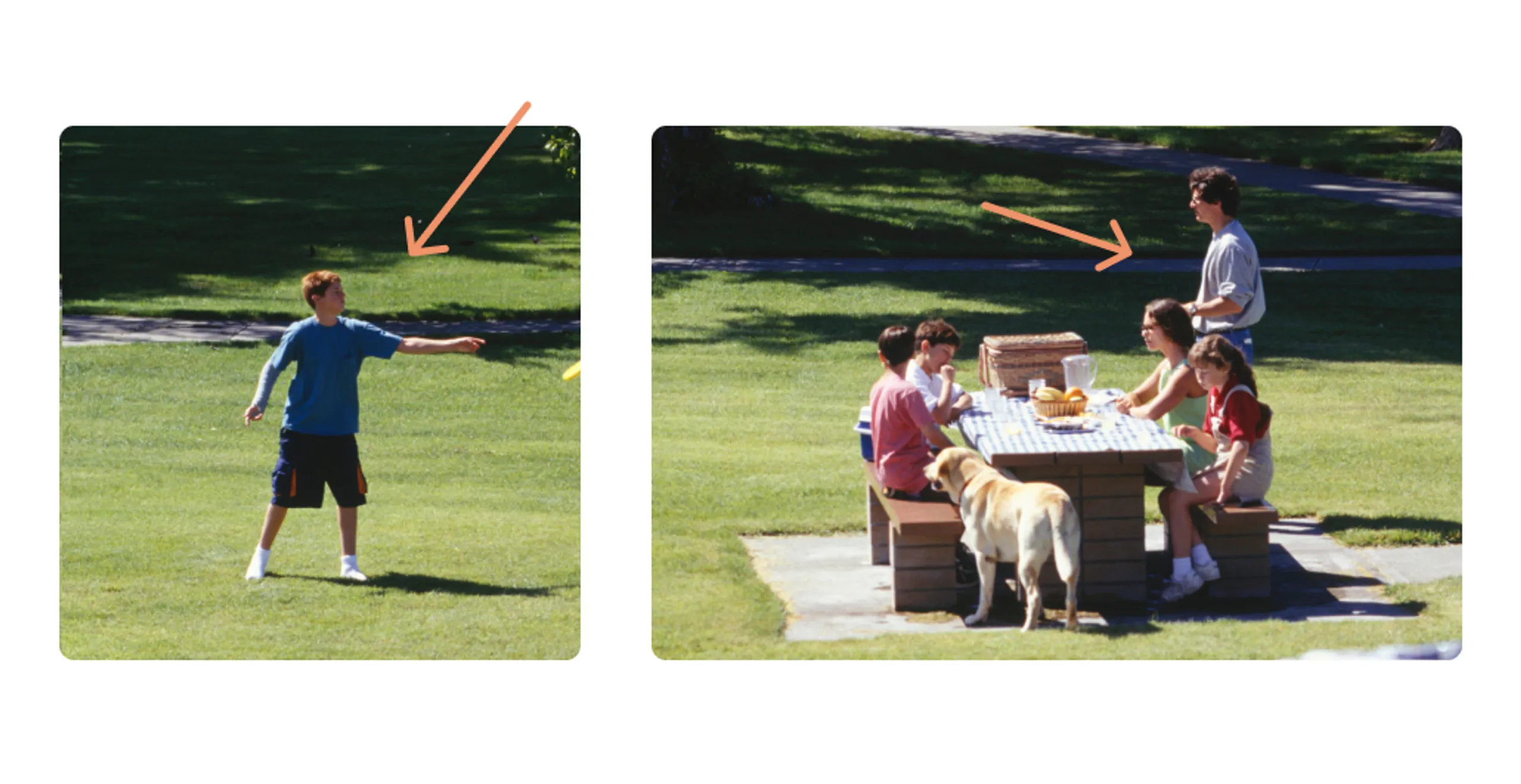

Most of those apps can, in fact, do fast and accurate face detection — but only when it comes to selfies. Our main goal was to detect smaller faces that got into the shot by accident, lacked focus, or were scattered all over the image.

So we added another requirement:

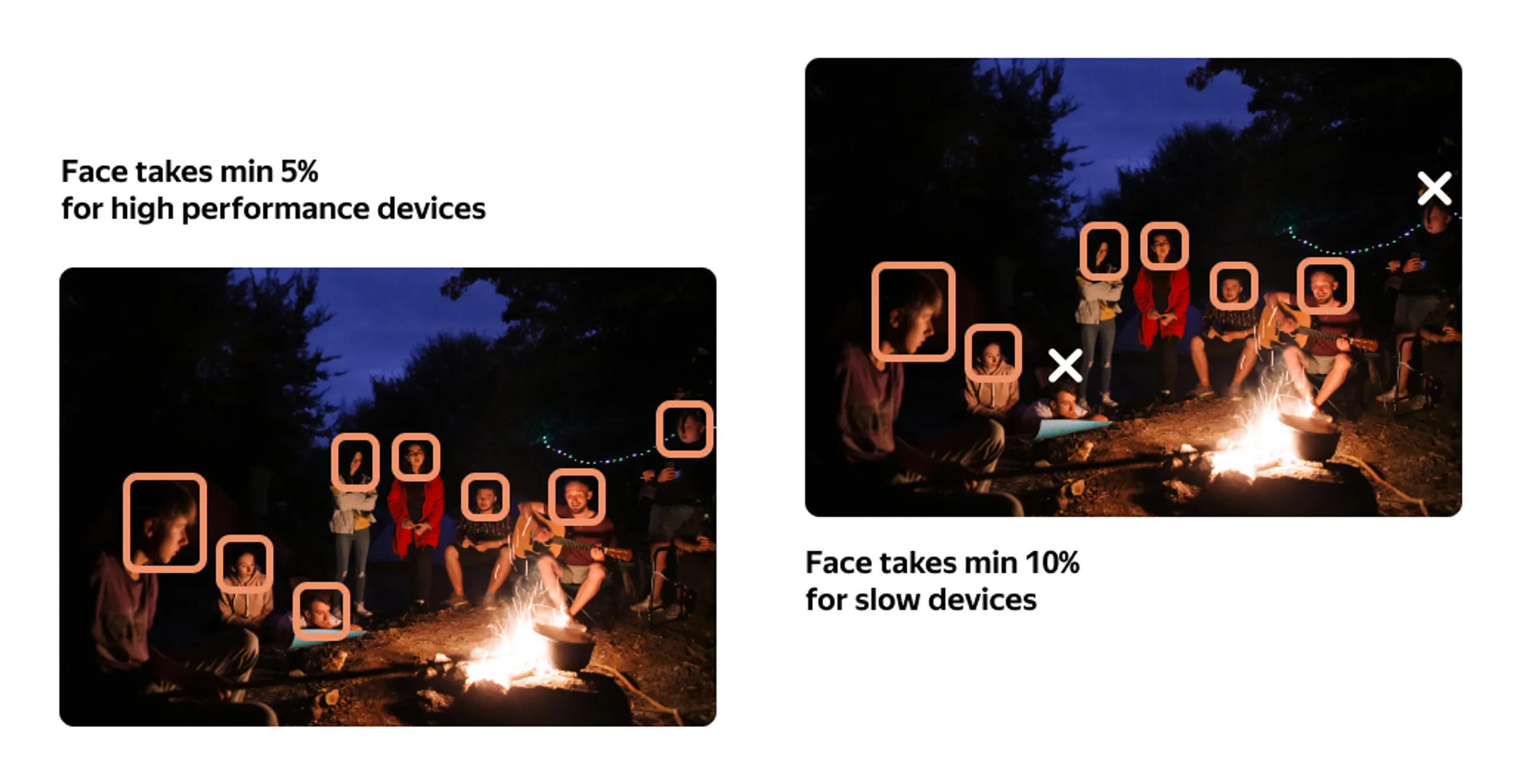

The detector should be able to find small faces that occupy less than 5% of the photo.

Built-in frameworks, like Vision from Apple and FaceDetector from Android, did great with selfies but failed to recognize "accidental faces", so we went looking for third-party solutions.

Our task description almost ideally matched the Anonymizer project by understand.ai. Their open-source model is trained to detect anything that might violate the General Data Protection Regulation.

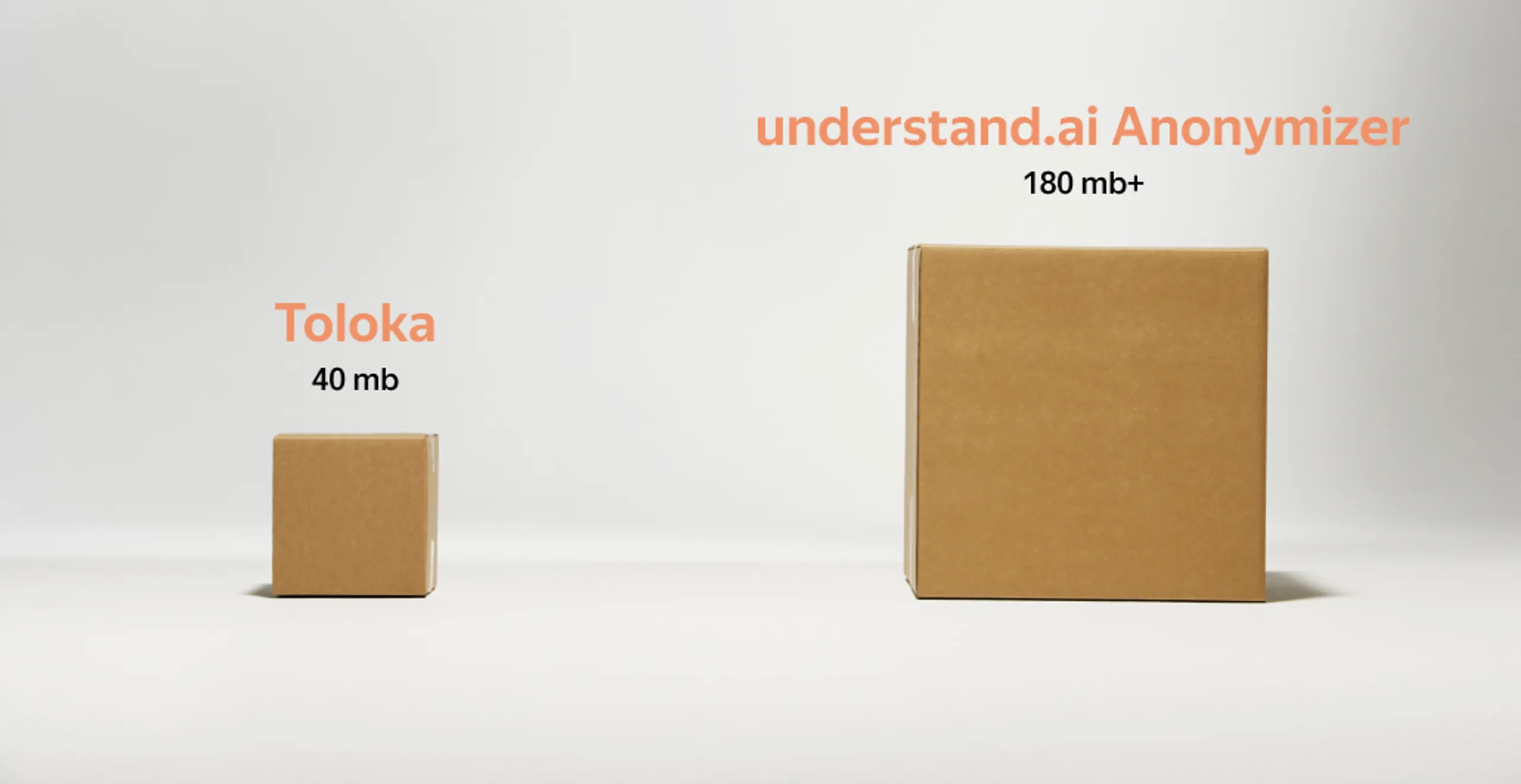

This model was just what we needed. To try it out, we had to convert it to a format that our mobile apps would understand. But even with subsequent optimization that greatly reduced its size, we still ended up with a model that was 180 MB.

To put things in perspective, Toloka's entire Android app is only 40 MB.

We looked into a few more solutions initially trained to run on servers, but with no luck. They were too heavy as well.

This led us to add another must-have to our wish list:

A detector that's able to find small faces should also be small in size.

We decided to look for something that wasn't just convertible to mobile but was initially trained with mobile usage in mind.

At this point, we also defined "fast detection" as a target value of 1 second. We based this decision on published benchmarks for mobile face detectors, keeping in mind that our input photos would be significantly larger than the images commonly used for assessing face detection speed and accuracy.

GitHub offered lots of search results for "Mobile Face Detection", but most of them failed to meet our requirements, because they:

Had an obscure license.

Were old and unsupported.

Were trained to recognize selfies.

Ultimately, we chose the "Ultra-Lightweight Face Detection Model". The model was supposed to occupy only 1 MB of space and perform in milliseconds. Fast, light, mobile, and bundled with an active community — the model seemed like a solid choice.

Although the model had been trained to run on mobile devices, it still required some tinkering on our side. We converted it into the correct format, prepared input photos, and learned to interpret the results. It was actually our first working solution, so we were extremely excited to see that (a) it found every distinguishable face it was shown and (b) did that in only 100 milliseconds.

The only drawback was the model's input requirements: it couldn't process images larger than 640 × 480 pixels, which is at least 5 times smaller than the average Toloker's photos. This meant that, prior to running the detector, we'd have to downsize the photos, turning smallish faces into literal pixels undetectable by the model.

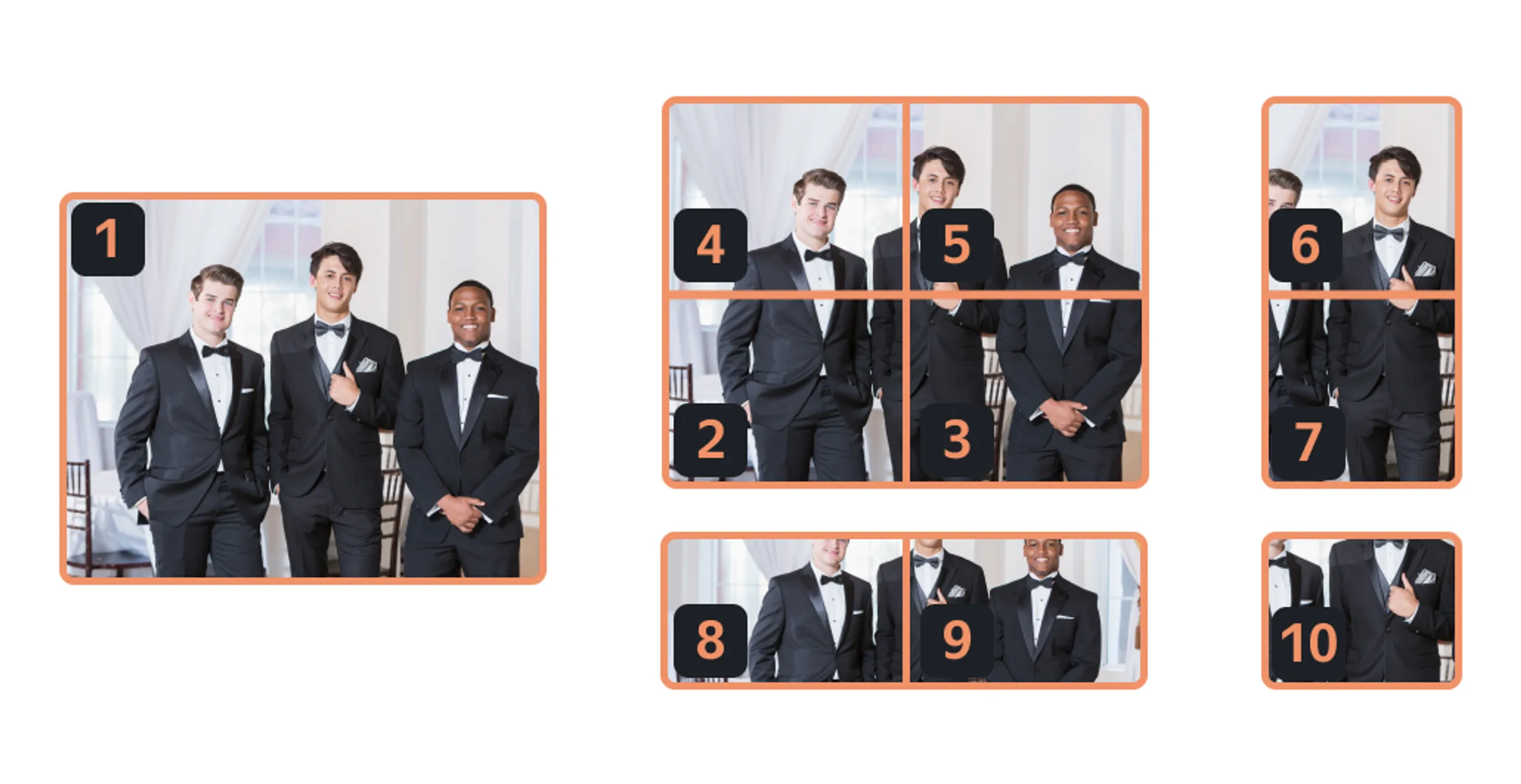

Striving for more accurate detection, we tried dividing photos into overlapping quarters. This produced 10 images instead of 1:

And just like that, 100 milliseconds turned into a whole second. On top of running the model on every segment, we needed more time to split the image and some extra code to merge the detections from all segments back into one result. We decided to continue looking.
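To make the splitting step concrete, here's a minimal sketch of tiling a photo and mapping detections back to the full image. It isn't the code from our apps: the function names, the `(left, top, right, bottom)` box format, and the overlap value are all assumptions made for illustration.

```python
from typing import List, Tuple

Box = Tuple[int, int, int, int]  # (left, top, right, bottom) in pixels


def make_tiles(width: int, height: int, tile_w: int, tile_h: int, overlap: int) -> List[Box]:
    """Cover a width x height photo with overlapping tiles of at most tile_w x tile_h.

    Assumes overlap is smaller than both tile dimensions.
    """
    def starts(total: int, tile: int) -> List[int]:
        if total <= tile:
            return [0]
        step = tile - overlap
        positions = list(range(0, total - tile, step))
        positions.append(total - tile)  # the last tile sits flush with the edge
        return positions

    return [
        (x, y, min(x + tile_w, width), min(y + tile_h, height))
        for y in starts(height, tile_h)
        for x in starts(width, tile_w)
    ]


def to_photo_coords(tile: Box, face: Box) -> Box:
    """Shift a face box found inside a tile back into full-photo coordinates."""
    left, top = tile[0], tile[1]
    return (face[0] + left, face[1] + top, face[2] + left, face[3] + top)


tiles = make_tiles(1000, 800, tile_w=640, tile_h=480, overlap=64)
print(len(tiles))  # 4 tiles for this photo size
print(to_photo_coords(tiles[-1], (10, 20, 40, 60)))
```

A face that falls in an overlap zone can be reported by more than one tile, so a real pipeline would also have to merge duplicate boxes. That merging is part of the extra code and time that tiling costs.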

Our next choice was MLKit by Google.

It's an SDK with a variety of embedded models, including one for face detection. The documentation stated that MLKit could find faces as small as 100 × 100 pixels, which was well within our requirements. No additional converting or optimization was required, so we tried it out immediately: MLKit actually detected even smaller faces (the smallest one in our test was 27 × 27 pixels). This let us downsize an input photo prior to detection without reducing our desired level of accuracy and get exactly 1 second on our median device.

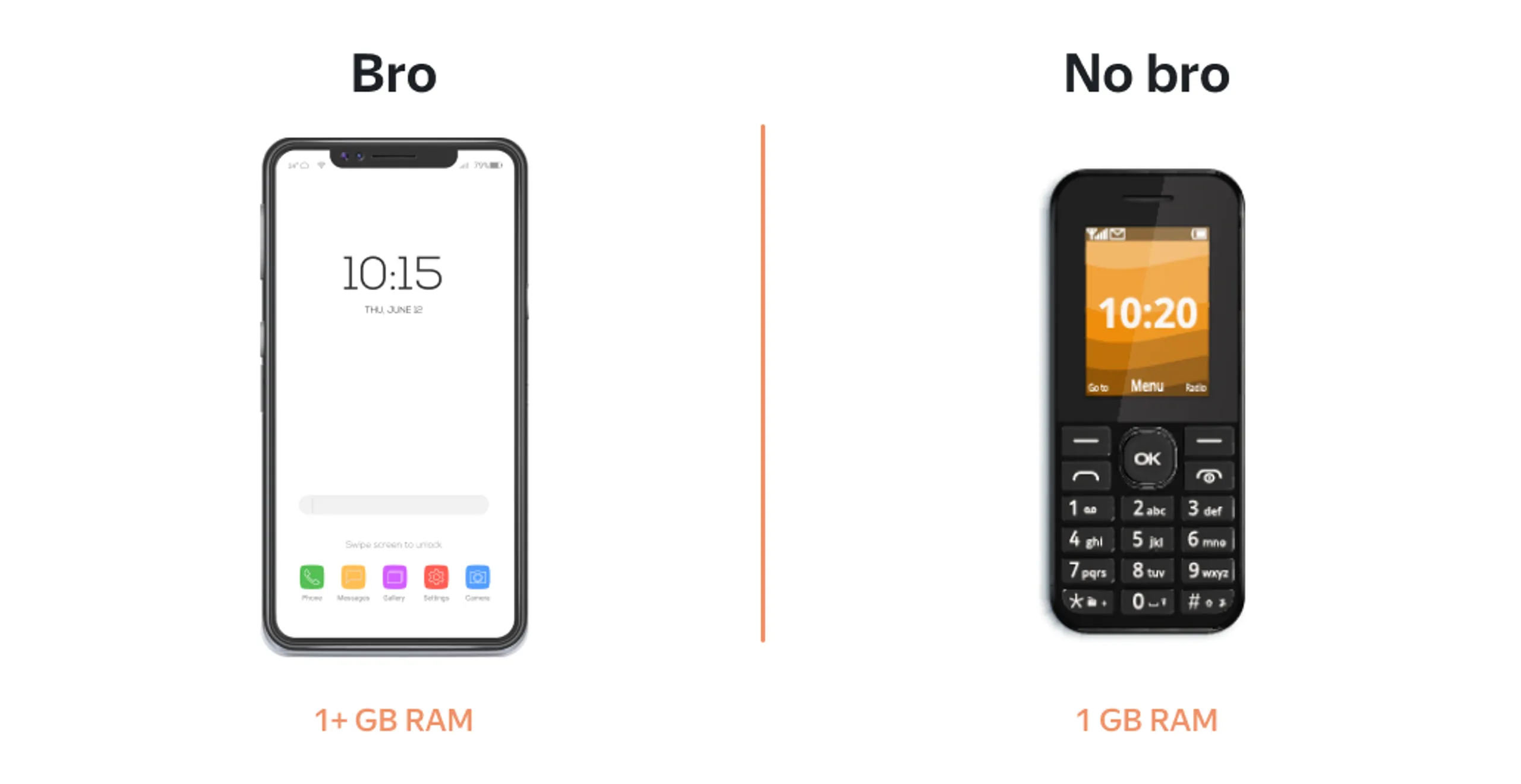

What we liked even more was the ability to change the smallest face size. This came in handy because our Tolokers own a wide range of devices that can be roughly divided into two groups in terms of their performance:

No-bros are equipped with only 1 GB RAM, and their best days are long gone. For these oldies, we increased the face size requirement to 10%, while bro phones were still expected to find tiny faces that occupy only 5% of the image. That seemed to be a good trade-off between losing some accuracy and staying within the one-second timeframe.

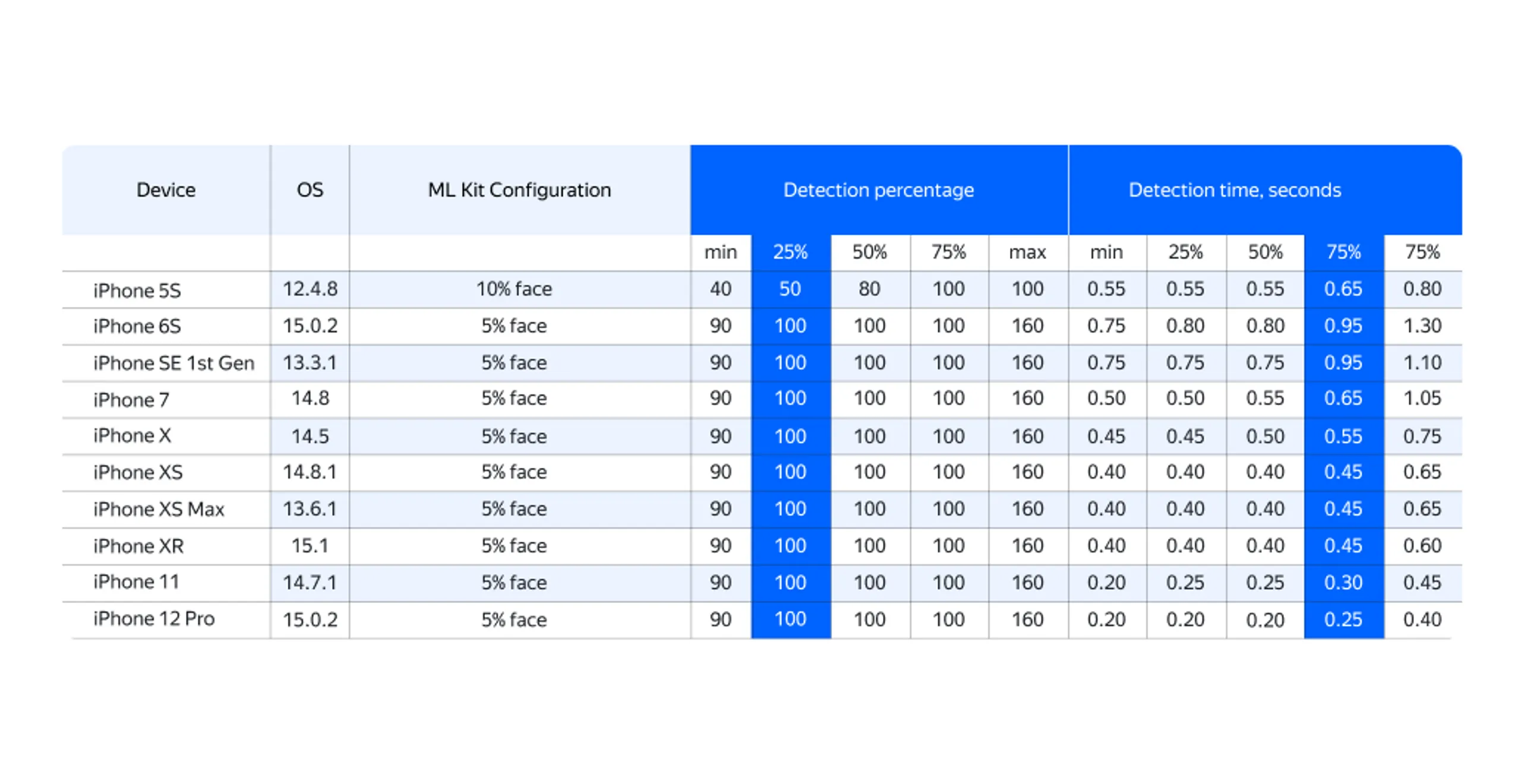

Now, everything was ready for an iOS prototype app. We equipped it with pre-labeled photos (to compare what MLKit could detect with what we could find manually), handed it over to our QAs, and gathered the following statistics:

The first blue column is the percentage of faces detected by MLKit in relation to the number of faces detected by us. Our labeling was a total overkill, since we included faces that were almost indistinguishable:

That's why we agreed that 50% for slow devices in the 25th percentile was a tolerable result. As for the time in the second blue column, MLKit performed within our target timeframe in the 75th percentile. So, MLKit covered all our performance requirements — and it wouldn't add much to the size of our apps.
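For readers who want to reproduce this kind of comparison, here's a minimal sketch of computing the share of labeled faces that a detector found. The matching rule is an assumption: we pair boxes by intersection over union (IoU) with a threshold of 0.5, which is a common convention and not necessarily how the numbers above were produced. The function names and box format are hypothetical too.

```python
def iou(a, b):
    """Intersection over union of two (left, top, right, bottom) boxes."""
    ix = max(0, min(a[2], b[2]) - max(a[0], b[0]))
    iy = max(0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = ix * iy
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union else 0.0


def detection_rate(labeled, detected, threshold=0.5):
    """Share of manually labeled faces matched by at least one detected box."""
    unmatched = list(detected)
    hits = 0
    for face in labeled:
        # Greedily take the detection that overlaps this labeled face the most.
        best = max(unmatched, key=lambda d: iou(face, d), default=None)
        if best is not None and iou(face, best) >= threshold:
            hits += 1
            unmatched.remove(best)  # each detection can match only one face
    return hits / len(labeled) if labeled else 1.0


labeled = [(0, 0, 10, 10), (20, 20, 30, 30)]
detected = [(1, 1, 10, 10)]
print(detection_rate(labeled, detected))  # one of the two labeled faces is matched
```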

We also collected statistics for Android. But as it wasn't that obvious how to distinguish Android phones in terms of performance, we gathered at least 10 records of detection speed on each device. Then, based on the average value, we marked that device as fast or slow and used a suitable MLKit configuration. With this adjustment, Android statistics showed similar results, and MLKit was unanimously recognized as the winner of our face detector competition.
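The classification logic can be sketched in a few lines. This is an illustration under assumptions rather than our app code: the one-second cutoff between fast and slow, the fallback for devices with too few records, and all names are hypothetical. The 5% and 10% values mirror the minimum face sizes mentioned earlier.

```python
from statistics import mean

MIN_RECORDS = 10        # classify only after at least this many measurements
CUTOFF_SECONDS = 1.0    # assumption: reuse the one-second target as the cutoff


def classify_device(detection_times):
    """Return "fast", "slow", or None while there is not enough data yet."""
    if len(detection_times) < MIN_RECORDS:
        return None
    return "fast" if mean(detection_times) <= CUTOFF_SECONDS else "slow"


def min_face_size(device_class):
    """Smallest face to look for, as a share of the image.

    Assumption: devices that are slow or not yet classified get the cheaper 10% setting.
    """
    return 0.05 if device_class == "fast" else 0.10


timings = [0.7, 0.8, 0.9, 0.6, 0.8, 0.7, 0.9, 1.1, 0.8, 0.7]
device_class = classify_device(timings)
print(device_class, min_face_size(device_class))
```

Starting unclassified devices on the cheaper setting is one possible design choice: it keeps the first photos fast at the cost of missing some tiny faces until enough measurements are in.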

The blurring

The first part of our investigation was over, and now we had to decide how to blur the detected faces without adding too much time to the one-second detection.

At first, we tried to assemble a mask based on the coordinates gathered from the detector, and blur only the sensitive areas. We liked this obvious solution but weren't as excited about its execution time, which peaked at 2 seconds. The more faces there were in the image, the more intricate the mask had to be, and the longer it took to process.

Then we tried to cut out faces one by one, put them in a row, blur them, and put them back into the original photo. That worked faster, but the double cut-and-put-it-back-in operation still took way too much time, and the blurring was insufficient.

Next, we tried a more straightforward approach: blur the whole picture, cut out the blurred faces, and glue them back in a single pass. To our surprise, this method turned out to be the fastest, taking only 0.4 seconds per photo.
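Here's a minimal sketch of the blur-everything-then-paste idea on a tiny grayscale image stored as a list of rows. It's only meant to show the order of operations: the box blur, the function names, and the box format are assumptions, and a real app would rely on the platform's image APIs instead of Python loops.

```python
def box_blur(image, radius):
    """Return a blurred copy of a grayscale image (list of rows of ints)."""
    h, w = len(image), len(image[0])
    out = [[0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            ys = range(max(0, y - radius), min(h, y + radius + 1))
            xs = range(max(0, x - radius), min(w, x + radius + 1))
            total = sum(image[j][i] for j in ys for i in xs)
            out[y][x] = total // (len(ys) * len(xs))
    return out


def anonymize(image, faces, radius):
    """Blur the whole image once, then copy only the face regions into the original."""
    blurred = box_blur(image, radius)      # one blur pass, however many faces there are
    result = [row[:] for row in image]     # leave the input untouched
    for left, top, right, bottom in faces:
        for y in range(top, bottom):
            result[y][left:right] = blurred[y][left:right]
    return result


photo = [
    [0, 255, 0, 255],
    [255, 0, 255, 0],
    [0, 255, 0, 255],
    [255, 0, 255, 0],
]
for row in anonymize(photo, faces=[(0, 0, 2, 2)], radius=1):
    print(row)
```

The cost of the blur pass doesn't depend on the number of faces, and each face only adds a cheap rectangular copy. That matches why this approach scaled better than building a mask per face.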

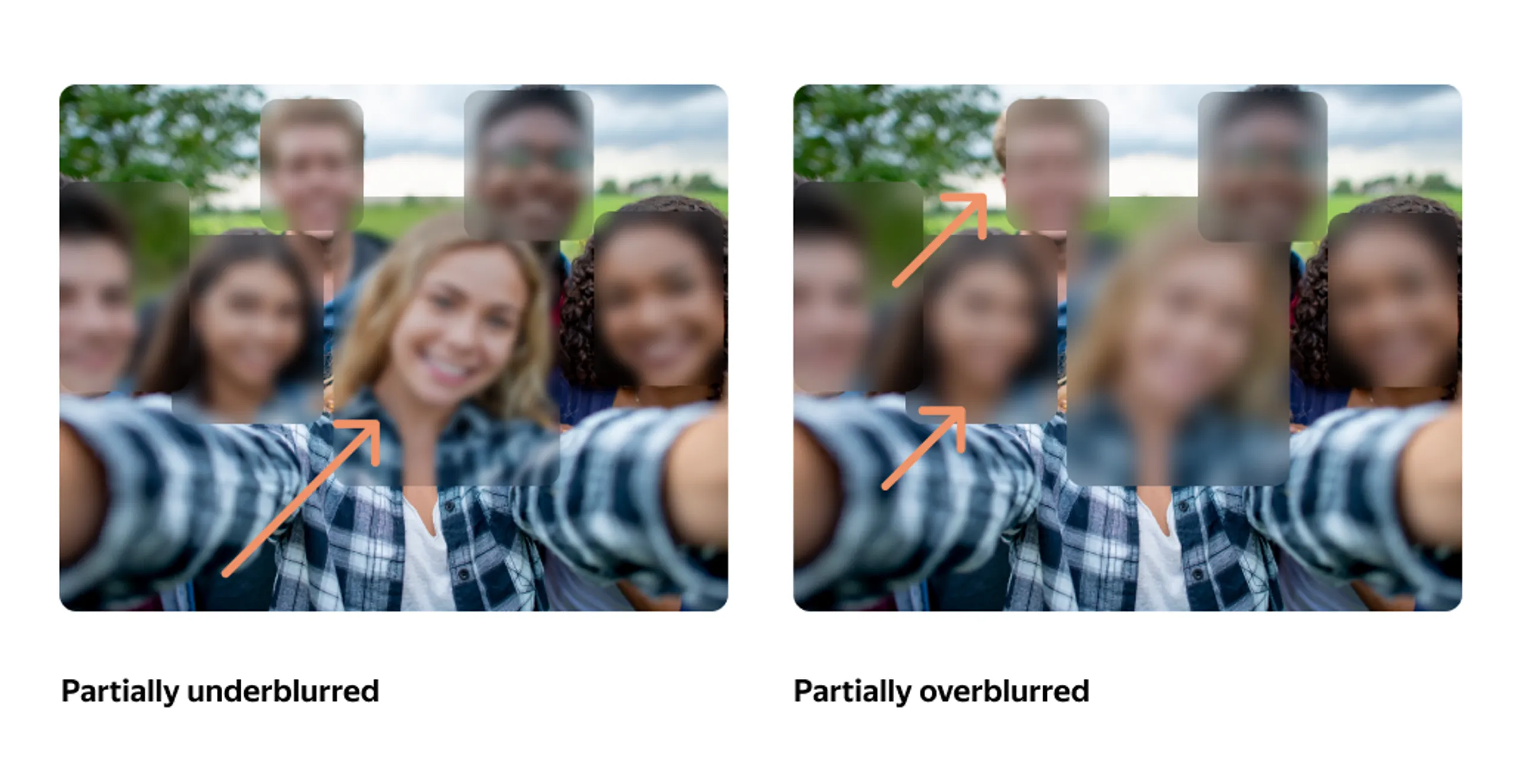

Technically, the second part of our investigation was now complete. However, we couldn't decide on a universal radius that would work equally well for all faces. A radius that blurred small faces nicely didn't do well on big faces, and a radius suitable for big faces turned small ones into grey rectangles:

That's why we settled on the following algorithm:

Divide all faces into three groups based on their size: small — medium — large.

Blur the original photo three times, using a different radius value for each group.

Cut out faces from the blurred images and put them back in the original.
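The three steps above can be sketched as follows. The size thresholds, the radius values, and every name here are hypothetical placeholders chosen for illustration. `blur` stands for any function that takes an image and a radius and returns a blurred copy.

```python
# Hypothetical radius per size group; real values would be tuned by eye.
RADIUS = {"small": 2, "medium": 6, "large": 12}


def size_group(face, photo_width, small_max=0.05, medium_max=0.15):
    """Classify a (left, top, right, bottom) box by its width share of the photo."""
    share = (face[2] - face[0]) / photo_width
    if share <= small_max:
        return "small"
    if share <= medium_max:
        return "medium"
    return "large"


def group_faces(faces, photo_width):
    groups = {"small": [], "medium": [], "large": []}
    for face in faces:
        groups[size_group(face, photo_width)].append(face)
    return groups


def anonymize_by_group(image, faces, blur):
    """Blur once per non-empty group and paste that group's faces into the original."""
    width = len(image[0])
    result = [row[:] for row in image]
    for name, members in group_faces(faces, width).items():
        if not members:
            continue  # assumption: skip the blur pass when a group has no faces
        blurred = blur(image, RADIUS[name])
        for left, top, right, bottom in members:
            for y in range(top, bottom):
                result[y][left:right] = blurred[y][left:right]
    return result


# Stand-in blur that fills the image with the radius, to make the groups visible.
fake_blur = lambda img, radius: [[radius] * len(row) for row in img]
image = [[0] * 100 for _ in range(10)]
out = anonymize_by_group(image, [(0, 0, 4, 4), (10, 0, 60, 10)], fake_blur)
print(out[0][0], out[0][5], out[0][10])
```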

As you may notice, we now run the blurring operation three times instead of just once. To compensate for this, we tried downsizing the input photo by another factor of three after detection and using the downsized version only for blurring. Because only the blurred face regions come from the downsized copy, this doesn't worsen the quality of the rest of the image, and it allows us to stay within 0.4 seconds per blur while producing a much nicer result:

This marked the end of our investigation and tool selection efforts. We put everything together, made sure that anonymization wasn't activated in some types of tasks (for example, those that explicitly require taking selfies), rolled out iOS and Android releases, and braced ourselves for finally seeing our creation in the hands of real Tolokers.

Of course, the most anticipated figure was the total time it would take to detect and blur the faces in every photo. We were pleased to see that it didn't surpass 1.5 seconds in the 75th percentile.

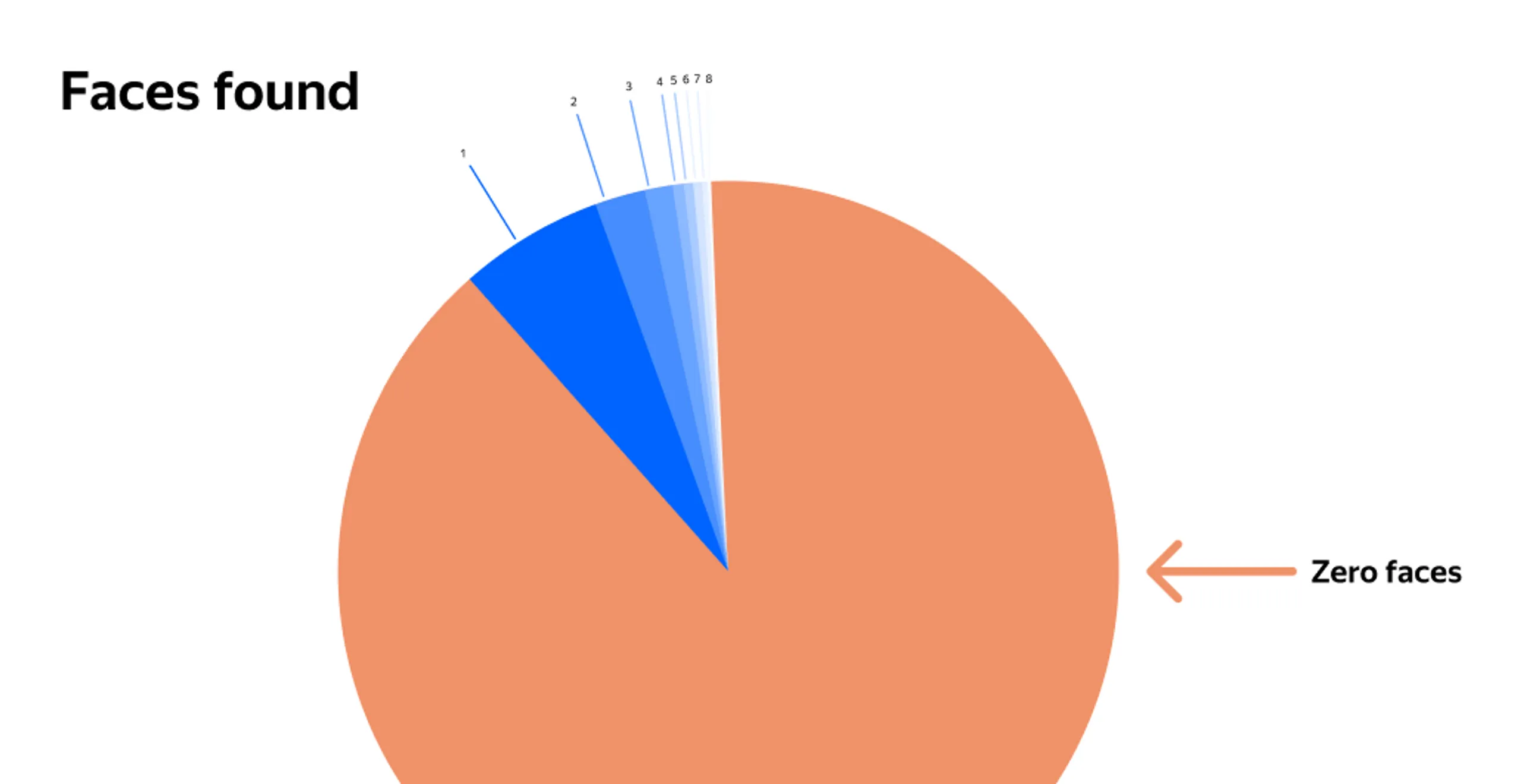

But we were also curious about the number of faces we managed to anonymize. Here's a pie chart showing the number of faces per photo for the first month in production:

The orange section is for 0 faces. Other sections contain all the possible numbers of detected faces up to 100.

On the very first day of the iOS release, someone sent us a photo with 17 faces, even though we specifically ask Tolokers not to do so. We'll never know what exactly that photo depicted, but there's a chance it was completely innocent. One of our QA managers was amused at how the app treated a picture she took in a clothing store — it blurred all the faces printed on the packaging of women's tights.

At Toloka, we treat all faces equally and make sure their privacy is protected. And to further strengthen our anonymization strategy, we taught our backend to check for any faces that may have slipped past the mobile detector, as well as find and blur license plates… But that's a whole other story.