The history, timeline, and future of LLMs

Introduction

The impressive speed at which AI has evolved has never been more apparent than it is now, with ChatGPT making headlines and the dramatic evolution of Large Language Models (LLMs) ever present in the media cycle. Millions of people worldwide have wasted no time adopting conversational AI tools in their day-to-day existence. These tools have not only enamored but also terrified audiences with their striking capabilities and efficiency and their potentially dangerous implications if not regulated well.

So, where did it all start and where is it all going? That’s the million-dollar question we’ll try to dig into further. To help you get a better sense of the rapidly evolving landscape, in this article, we cover the following topics:

A brief history of LLMs.

The rise of transformers and ChatGPT.

Training LLMs.

Types and applications of LLMs.

Current limitations, challenges, and potential hazards.

What the future looks like for these models.

From the learning process to reinforcement learning from human feedback (RLHF), let’s delve deeper into the foundations of language models, how they’re trained, and how they work.

What is a large language model?

At its core, a large language model is a type of machine learning model that can understand and generate human language via deep neural networks. The main job of a language model is to calculate the probability of a word following a given input in a sentence: for example, “The sky is ____” with the most likely answer being “blue”. The model is able to predict the next word in a sentence after being given a large text dataset (or corpus). Basically, it learns to recognize different patterns in the words. From this process, you get a pre-trained language model.
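To make the “The sky is ____” idea concrete, here is a minimal sketch that estimates next-word probabilities by simply counting which word follows which in a tiny, made-up corpus. This is a count-based bigram model, not a neural network, and the corpus and function names are hypothetical, but the goal is the same one an LLM pursues at a vastly larger scale.

```python
from collections import Counter, defaultdict

# Toy corpus (hypothetical); a real LLM learns from billions of words.
corpus = [
    "the sky is blue",
    "the sky is blue today",
    "the sky is clear",
    "the sea is blue",
]

def train_bigram_counts(sentences):
    """Count how often each word follows each preceding word."""
    counts = defaultdict(Counter)
    for sentence in sentences:
        words = sentence.split()
        for prev, nxt in zip(words, words[1:]):
            counts[prev][nxt] += 1
    return counts

def next_word_probs(counts, prev_word):
    """Turn raw follow-up counts into a probability distribution."""
    followers = counts[prev_word]
    total = sum(followers.values())
    return {word: n / total for word, n in followers.items()}

counts = train_bigram_counts(corpus)
probs = next_word_probs(counts, "is")
best = max(probs, key=probs.get)

print(probs)  # probability of each word seen after "is"
print(best)   # the most likely continuation
```

In this toy corpus, “blue” follows “is” three times out of four, so it comes out as the most likely next word. A neural language model replaces the counting table with learned parameters, which lets it generalize to word sequences it has never seen.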

With some fine-tuning, these models have a variety of practical uses, such as translation, or building expertise in a specific knowledge domain like law or medicine. This process is known as transfer learning, which enables a model to apply its acquired knowledge from one task to another.

What makes a language model “large” is the size of its architecture, which is built on artificial neural networks. These are loosely modeled on the human brain, where neurons work together to learn from and process information. LLMs comprise a large number of parameters (for example, GPT-3 has 175 billion) trained on vast quantities of unlabeled text data via self-supervised or semi-supervised learning. With self-supervised learning, models are able to learn from unannotated text, which is a big advantage considering the cost of relying on manually labeled data.

Moreover, larger networks with more parameters have shown better performance with a greater capacity to retain information and recognize patterns compared to their smaller counterparts. The larger the model, the more information it can learn during the training process, which in turn makes its predictions more accurate. While this may be true in the conventional sense, there’s one caveat: AI companies and developers alike are finding ways around the challenges of excessive computational costs and energy required to train LLMs by introducing smaller, more optimally trained models.

Although LLMs have primarily been trained on simple tasks, like predicting the next word in a sentence, it’s amazing to see how much of the structure and meaning of language they’ve been able to capture, not to mention the huge number of facts they can pick up.

Key terms to know

This is a relatively new field in the public infosphere, and there are a lot of terms to familiarize yourself with. Here are the main ones that can help you gain a better understanding of the LLM landscape:

Attention: a mechanism for weighing how much influence each token in the input has on the model’s output.

Embeddings: numerical representations of words that capture their meanings and relationships in context.

Transformers: the neural network architecture that forms the basis of most LLMs.

Prompt: the input a user provides to an LLM to elicit a response or carry out a task.

Instruction tuning: training a language model on a variety of prompts and answers so that it learns how to respond to new ones.

RLHF (reinforcement learning from human feedback): a technique that tunes a model based on human preferences.

A brief history of LLMs: In the beginning there was Eliza…

When the ancients carefully recorded their knowledge on scrolls of papyrus and housed them in the legendary Library of Alexandria, they could not have dreamed that all that knowledge and more would be available at the fingertips of their descendants millennia later. That’s the power and beauty of large language models. Not only can LLMs answer questions and solve complex problems, but they can also summarize huge volumes of work, as well as translate and derive context from various languages.

The foundation of large language models can be traced back to experiments with neural networks and information processing systems conducted in the 1950s to allow computers to process natural language. Researchers at IBM and Georgetown University worked together to create a system that could automatically translate phrases from Russian to English. It was a notable early demonstration of machine translation, and research in the field took off from there.

The idea of LLMs was first floated with the creation of Eliza in the 1960s: it was the world’s first chatbot, designed by MIT researcher Joseph Weizenbaum. Eliza marked the beginning of research into natural language processing (NLP), providing the foundation for future, more complex LLMs.

Then, about 30 years later, in 1997, Long Short-Term Memory (LSTM) networks came into existence. Their advent resulted in deeper and more complex neural networks that could handle greater amounts of data. Stanford’s CoreNLP suite, introduced in 2010, was the next stage of growth, allowing developers to perform sentiment analysis and named entity recognition.

Subsequently, in 2011, a smaller version of Google Brain appeared with advanced features such as word embeddings, which enabled NLP systems to gain a clearer understanding of context. The significant turning point came in 2017, when transformer models burst onto the scene. Think GPT, which stands for Generative Pre-trained Transformer and has the ability to generate or “decode” new text. Another example is BERT (Bidirectional Encoder Representations from Transformers), which can predict or classify input text based on encoder components.

From 2018 onward, researchers focused on building increasingly larger models. In 2018, researchers from Google introduced BERT, the bidirectional, 340-million-parameter model (the third largest model of its kind at the time) that could determine context, allowing it to adapt to various tasks. By pre-training BERT on a wide variety of unstructured data via self-supervised learning, the model was able to understand the relationships between words. In no time at all, BERT became the go-to tool for natural language processing tasks. In fact, Google went on to use BERT for nearly every English-language query in Google Search.

The rise of transformers and ChatGPT

As BERT was becoming more refined, OpenAI’s GPT-2, at 1.5 billion parameters, successfully produced convincing prose. Then, in 2020, they released GPT-3 at 175 billion parameters, which set the standard for LLMs and formed the basis of ChatGPT. It was with the release of ChatGPT in November 2022 that the general public really began to take notice of the impact of LLMs. Even non-technical users could prompt the LLM, receive a rapid response, and carry on a conversation, causing a stir of both excitement and foreboding.

Chiefly, it was the transformer model with an encoder-decoder architecture that spurred the creation of larger and more complex LLMs, serving as the catalyst for OpenAI’s GPT-3, ChatGPT, and more. Transformers rely on two key components: word embeddings (which allow a model to understand words within context) and attention mechanisms (which allow a model to assess the importance of words or phrases). Together, these make transformers particularly good at determining context. They’ve been revolutionizing the field ever since due to their ability to process large amounts of data in one go.
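For readers who like to see the moving parts, here is a rough sketch of scaled dot-product attention, the core calculation behind the attention mechanism, written in plain Python. The two-dimensional embeddings are invented for illustration, and we feed them in directly as queries, keys, and values. A real transformer first passes embeddings through learned projection layers and runs many attention heads in parallel, so treat this as a simplified picture rather than a full implementation.

```python
import math

def softmax(scores):
    """Convert raw scores into weights that sum to 1."""
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def attention(queries, keys, values):
    """Scaled dot-product attention over lists of vectors."""
    d_k = len(keys[0])
    outputs, all_weights = [], []
    for q in queries:
        # How strongly does this token relate to every other token?
        scores = [dot(q, k) / math.sqrt(d_k) for k in keys]
        weights = softmax(scores)
        # Blend the value vectors according to those weights.
        out = [
            sum(w * v[i] for w, v in zip(weights, values))
            for i in range(len(values[0]))
        ]
        outputs.append(out)
        all_weights.append(weights)
    return outputs, all_weights

# Hypothetical 2-dimensional embeddings for three tokens.
embeddings = {"the": [1.0, 0.0], "sky": [0.0, 1.0], "blue": [0.2, 0.9]}
tokens = ["the", "sky", "blue"]
x = [embeddings[t] for t in tokens]

outputs, weights = attention(x, x, x)
for token, row in zip(tokens, weights):
    print(token, [round(w, 2) for w in row])
```

Each printed row shows how one token distributes its attention across all three tokens. Because the made-up vectors for “sky” and “blue” point in similar directions, “blue” places more weight on “sky” than on “the”.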

Most recently, OpenAI introduced GPT-4, which is estimated at one trillion parameters — five times the size of GPT-3 and approximately 3,000 times the size of BERT when it first came out. We cover the evolution of the GPT series in greater detail later on.

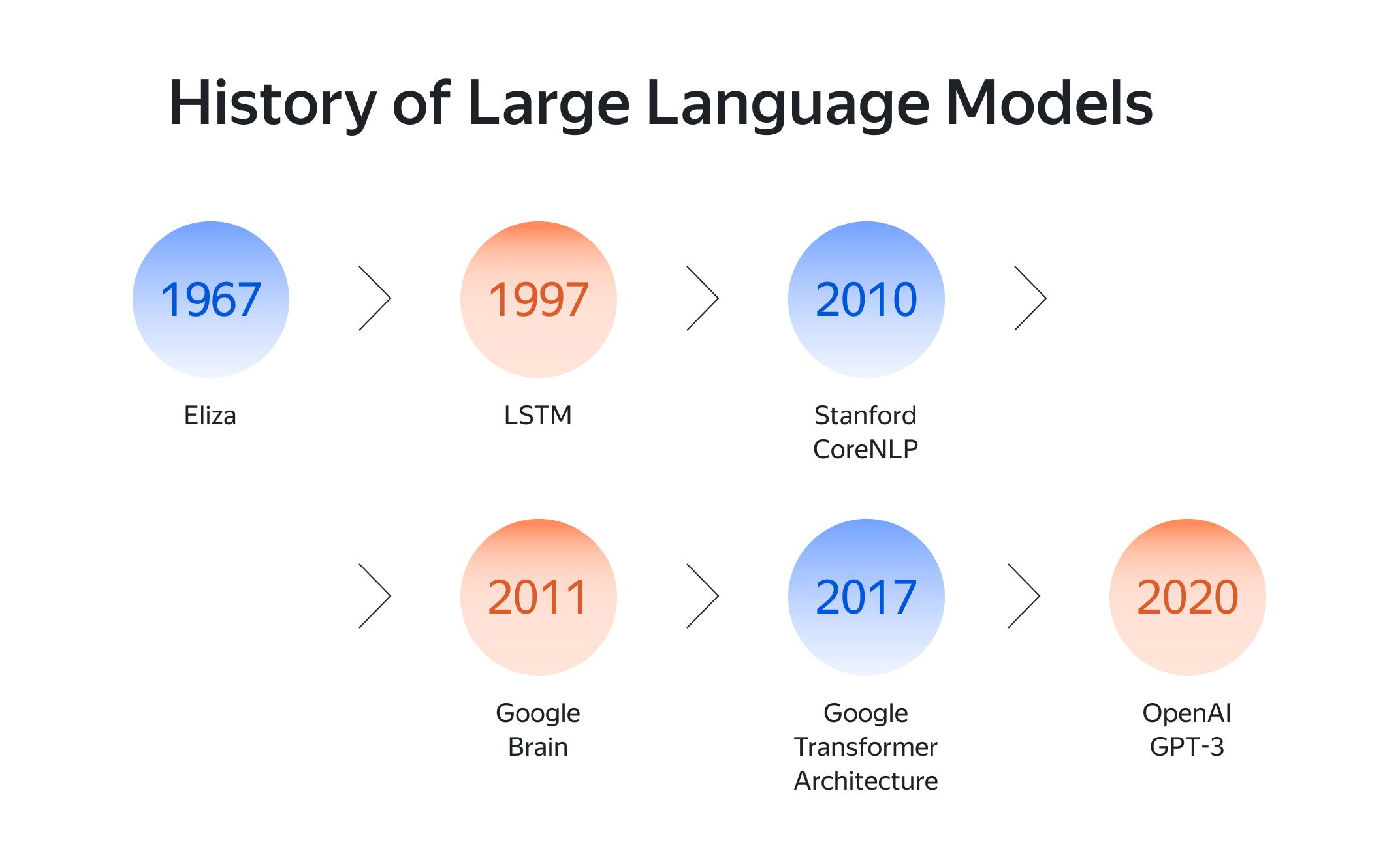

As a visual, here's a simple timeline of the development of LLMs from Eliza to OpenAI’s GPT:

History of Large Language Models

A closer look at how GPT LLMs have evolved over time

GPT-1 set in motion the evolution of LLMs by carrying out simple tasks such as answering questions. When GPT-2 came out, the model had grown significantly with more than 10 times the parameters. GPT-2 could now produce human-like text and perform certain tasks automatically. With the introduction of GPT-3, the public was able to access this innovative technology. GPT-3 introduced problem-solving into the mix. GPT-3.5 broadened the system’s capabilities, becoming more streamlined and less costly.

The most recent variation to date is GPT-4, which features significant enhancements, such as the ability to use computer vision to interpret visual data (unlike ChatGPT, which uses GPT-3.5). GPT-4 accepts both text and images as input. To the delight of students and the consternation of educators, GPT-4 is able to pass multiple standardized tests. Not only that, but it’s also learning humor. Because it can interpret images, the model is learning to recognize some forms of humor in much the same way that it learns and uses human language. Get ready for AI-generated jokes!

What’s more, “steerability” is the latest advancement, which allows GPT users to customize the structure of their output to meet their specific needs. Basically, steerability refers to the ability to control or modify a language model’s behavior, for example by making the LLM adopt different roles, follow user instructions, or speak with a certain tone. A user can change the behavior of an LLM at will and command it to write in a different style or voice. The possibilities are endless!

Does bigger equal better?

As we briefly mentioned earlier, studies have shown that larger language models with more parameters perform better, thus spurring a race among developers and AI researchers to create bigger and better LLMs. The more parameters, the better!

The original GPT had roughly 117 million parameters, and successors like BERT and GPT-2 grew to hundreds of millions and then more than a billion. More recent examples include GPT-3, which has 175 billion parameters, while the Megatron-Turing language model has already exceeded 500 billion parameters (at 530 billion). That’s roughly a doubling in size every three and a half months over the last four years!

However, AI lab DeepMind’s RETRO (Retrieval-Enhanced Transformer) has demonstrated that it can outperform existing models that are 25 times its size. This is an elegant solution to the notable downsides of training larger models, which typically require more time, resources, money, and computational energy to train. Not only that, but RETRO has shown the potential to reduce harmful and toxic information via enhanced filtration capabilities. So, bigger isn’t always better! Training the next enormous LLM could well require all the text and training data available online, and smaller models that are more optimally trained could be the solution.

Furthermore, not long after the release of GPT-4, OpenAI’s CEO Sam Altman stated that he believed the age of giant models had reached a point of diminishing returns, given the limited number of data centers and the finite amount of information on the web. Many researchers now agree that via customization, smaller LLMs can be just as effective as large models, if not more so.

Training large language models

LLMs are trained on massive amounts of unstructured data via self-supervised learning. During this process, the model accepts long sequences of words where one or more words are absent and learns to predict the missing ones. Once the model is trained, users provide prompts (bits of text that the model uses as a starting point) to the LLM. First, the model converts each token in the prompt into its embedding. Then, it uses those embeddings to gauge the probability of every possible token that could follow. The next token is chosen on a partially random basis, and the process is repeated until the model selects a stop token.
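The generation loop described above can be sketched in a few lines of Python. Everything here is a stand-in: the `TOY_LOGITS` table plays the role of the trained model, it only looks at the previous token (a real LLM conditions on the whole context through its embeddings), and the `<stop>` token name is our own choice. What the sketch does show is the “partially random” selection step and the stop condition.

```python
import math
import random

STOP = "<stop>"  # hypothetical stop token

# Hypothetical next-token scores (logits), keyed by the previous token.
TOY_LOGITS = {
    "the": {"sky": 2.0, "sea": 1.0},
    "sky": {"is": 3.0},
    "sea": {"is": 3.0},
    "is": {"blue": 2.0, "clear": 1.0},
    "blue": {STOP: 2.0, "today": 0.5},
    "clear": {STOP: 2.0},
    "today": {STOP: 3.0},
}

def softmax(logits, temperature=1.0):
    """Turn scores into probabilities; lower temperature means less randomness."""
    scaled = {tok: s / temperature for tok, s in logits.items()}
    m = max(scaled.values())
    exps = {tok: math.exp(s - m) for tok, s in scaled.items()}
    total = sum(exps.values())
    return {tok: e / total for tok, e in exps.items()}

def generate(prompt, rng, temperature=1.0, max_tokens=10):
    """Sample one token at a time until the stop token or the length limit."""
    tokens = prompt.split()
    for _ in range(max_tokens):
        probs = softmax(TOY_LOGITS[tokens[-1]], temperature)
        choices, weights = zip(*probs.items())
        next_token = rng.choices(choices, weights=weights, k=1)[0]
        if next_token == STOP:
            break
        tokens.append(next_token)
    return " ".join(tokens)

print(generate("the sky", random.Random(0)))
```

Passing a seeded `random.Random` keeps runs repeatable, while changing the seed or raising the temperature produces different continuations from the same prompt. That is why the same question can get differently worded answers from a chatbot.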

So, how much data do you need to train an LLM? A good rule of thumb is that each time you double the number of parameters, you should also double the size of the training dataset. According to current research, most LLMs are actually undertrained. DeepMind conducted a study to identify the optimal model size and number of tokens needed to train a transformer language model for a given compute budget. The team trained over 400 language models ranging from 70 million to 16 billion parameters on 5 to 500 billion tokens. They discovered that for compute-optimal training, the model size and number of tokens should be scaled in equal proportion.

Training compute-optimal large language models

By now, you may have heard of the famous Chinchilla case study. We cover it in more detail in one of our other posts, [Training Large Language Models 101](https://toloka.ai/blog/training-large-language-models-101/). As an overview, the team looked at three ways to determine the relationship between model size and number of training tokens. All three approaches pointed to the idea that increasing both a model's size and the number of training tokens in relatively equal measures would achieve better performance.

They tested their hypothesis by training a model called Chinchilla, which had the same compute budget as its larger counterpart Gopher, but fewer parameters and four times the data. They discovered that smaller, more optimally trained models show better performance: their compute-optimal 70-billion-parameter model Chinchilla, trained on 1.4 trillion tokens, outperformed Gopher (a 280-billion-parameter model) while significantly reducing inference costs.
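A quick back-of-the-envelope calculation shows why those two models can share a compute budget. The sketch below uses the common approximation that training compute is about 6 × parameters × tokens. That formula is not stated in this article, so treat it as an assumption. Gopher’s token count is likewise not given here; we derive it from the “four times the data” statement, which is also an assumption.

```python
def training_flops(params, tokens):
    """Rough training compute, assuming the approximation C = 6 * N * D."""
    return 6 * params * tokens

chinchilla_params = 70e9     # 70 billion parameters (from the article)
chinchilla_tokens = 1.4e12   # 1.4 trillion tokens (from the article)
gopher_params = 280e9        # 280 billion parameters (from the article)
# Assumption: derived from "four times the data", not a reported figure.
gopher_tokens = chinchilla_tokens / 4

tokens_per_param = chinchilla_tokens / chinchilla_params
chinchilla_flops = training_flops(chinchilla_params, chinchilla_tokens)
gopher_flops = training_flops(gopher_params, gopher_tokens)
ratio = chinchilla_flops / gopher_flops

print(f"Chinchilla tokens per parameter: {tokens_per_param:.0f}")
print(f"Chinchilla compute: {chinchilla_flops:.2e} FLOPs")
print(f"Gopher compute:     {gopher_flops:.2e} FLOPs")
print(f"Compute ratio:      {ratio:.2f}")
```

Under these assumptions, a quarter of the parameters combined with four times the data lands on the same compute budget, and Chinchilla ends up seeing about 20 training tokens per parameter.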

Interestingly enough, the DeepMind team found that a 7.5 billion parameter RETRO model outperforms Gopher on 9 out of 16 datasets.

Types of large language models

LLMs can be broken down into three types: pre-training models, fine-tuning models, and multimodal models. Each has its own advantage, depending on the goal:

Pre-training models are trained on huge quantities of data, which helps them comprehend a broad range of language patterns and constructs. A plus is that a pre-trained model tends to be grammatically correct!

Fine-tuning models are pre-trained on a large dataset and afterward are fine-tuned on a smaller dataset for a specific task. They’re particularly good for sentiment analysis, answering questions, and classifying text.

Multimodal models combine text with other modes, such as images or video, to create more advanced language models. They can produce text descriptions of images and vice versa.

Applications of large language models

LLMs have a multitude of applications that include summarizing different texts, building more effective digital search tools, and serving as chatbots. There are four key applications that we’ll take a closer look at:

1. Evolving conversational AI

LLMs have proven to be able to generate relevant and coherent responses throughout a conversation. Think chatbots and virtual assistants. Likewise, LLMs are helping to make speech recognition systems more accurate. Keep an eye out for all the new apps that are sure to emerge in the near future thanks to this!

2. Understanding sentiments within text

LLMs are masters at sentiment analysis and extracting subjective information such as emotions and opinions. Applications include customer feedback and social media analysis as well as brand monitoring.

3. Efficient machine translation

Thanks to LLMs, translation systems are becoming more efficient and accurate. By breaking down language barriers, LLMs are making it possible for humans everywhere to share knowledge and communicate with each other.

4. Textual content creation

What’s even more amazing is that LLMs can generate various forms of text, such as news articles and product descriptions. They’ve even been known to dabble in creative writing with notable success — again, much to the delight of many students (and the horror of many teachers) when submitting their term papers!

Current limitations and challenges

Along with the incredible advancements in AI, machine learning models, and LLMs on the whole, there is still no shortage of challenges to overcome. Misinformation, malware, discriminatory content, plagiarism, and information that is simply untrue can lead to unintended or dangerous outcomes; this calls these models into question.

What’s more, when biases are inadvertently introduced into LLM-based products like GPT-4, they can come across as “certain but wrong” on some subjects. It’s a bit like when you hear a politician talking about something they don’t know anything about. Overcoming these limitations is key to building public trust with this new technology. That’s where RLHF comes into play to help control or steer large-scale AI systems.

Here are some of the main issues that need to be addressed:

Ethical and privacy concerns

Currently, there aren’t many laws or guardrails regulating the use of LLMs. Since large datasets hold a lot of confidential or sensitive data, the risks include (but are not limited to) personal data theft and copyright and intellectual property infringement. This raises ethical, privacy, and even psychological concerns for users seeking answers from AI-generated forums.

Bias and prejudice

Since LLMs are trained on many different sources, they can unknowingly regurgitate the bias in those sources. Cultural, racial, gender, and other biases can all surface. These prejudices have real-life consequences, affecting hiring decisions, medical care, or financial outcomes.

Environmental and computational costs

Training a large language model requires an enormous amount of computational power, which impacts energy consumption and carbon emissions. Not only that, but it’s expensive! Many companies, especially smaller ones, simply cannot afford it.

To counter these potentially harmful effects, researchers are focusing on designing LLMs around three main pillars: helpfulness, truthfulness, and harmlessness. If an LLM can uphold all three principles, it’s considered to be “aligned” — a term that has elements of subjectivity. RLHF provides a helpful solution in this case.

Reinforcement learning from human feedback

RLHF has the power to address each of the challenges listed above, which raises the question: can a machine learn human values?

On a basic level, RLHF uses human feedback to build a dataset of human preferences, which is then used to learn a reward function for the desired outcome. Human feedback can be obtained in multiple ways:

Order of preference: People rank outputs in order of preference.

Demonstrations: Humans write preferred answers to prompts.

Corrections: Humans edit a model’s output to correct unfavorable behaviors.

Natural language input: Humans provide descriptions or critiques of outputs in language.

Once a reward model has been created, it’s used to train a baseline model with the help of reinforcement learning, which leverages the reward model to build a human values policy that the language model then uses to produce responses. ChatGPT is a good example of how a large language model uses RLHF to produce better, safer, and more engaging responses.
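To give a feel for how a reward model can be learned from ranked outputs, here is a small sketch that fits a linear reward function to pairs of “chosen” and “rejected” responses. It assumes a pairwise logistic (Bradley-Terry style) loss, which is one common choice and not necessarily what any particular product uses. The two features per response (“polite” and “factual”) and the training pairs are invented. A real reward model is itself a neural network that scores raw text, and the reinforcement learning step that follows is not shown.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def reward(weights, features):
    """Linear reward: a weighted sum of the response's features."""
    return sum(w * f for w, f in zip(weights, features))

def train_reward_model(pairs, n_features, lr=0.5, epochs=200):
    """Fit weights so that chosen responses score above rejected ones."""
    weights = [0.0] * n_features
    for _ in range(epochs):
        for chosen, rejected in pairs:
            margin = reward(weights, chosen) - reward(weights, rejected)
            # Gradient step on the loss -log(sigmoid(margin)).
            grad_scale = 1.0 - sigmoid(margin)
            for i in range(n_features):
                weights[i] += lr * grad_scale * (chosen[i] - rejected[i])
    return weights

# Hypothetical preference data: (chosen, rejected) feature pairs,
# where each response is described as [polite, factual].
pairs = [
    ([1.0, 1.0], [0.0, 1.0]),  # polite and factual beats rude but factual
    ([1.0, 1.0], [1.0, 0.0]),  # polite and factual beats polite but wrong
    ([0.0, 1.0], [0.0, 0.0]),
    ([1.0, 0.0], [0.0, 0.0]),
]

weights = train_reward_model(pairs, n_features=2)
print([round(w, 2) for w in weights])
print(reward(weights, [1.0, 1.0]) > reward(weights, [0.0, 0.0]))
```

After training, both weights are positive, so the learned reward scores a polite, factual response above one that is neither. It also hints at the tradeoff discussed in this section: the reward model can only reflect whatever preferences the annotators expressed.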

RLHF constitutes a major advancement in the realm of language models, providing a more controlled and reliable user experience. But there’s a tradeoff: RLHF introduces the biases of those who have contributed to the preference dataset used to train the reward model. So, while ChatGPT is geared toward helpful, honest, and safe answers, it’s still subject to the annotators’ interpretations of these answers. While RLHF improves consistency (which is great for the use of LLMs in search engines), it does so at the expense of creativity and diversity of ideas. There is still a lot to uncover in this area.

What the future looks like for LLMs

While ChatGPT is the latest new shiny thing, it’s only one small step toward what’s to come in the realm of LLMs. While we can’t predict the future, there are some trends out there that we think will shape the path of innovation. Let’s take a closer look at what they are:

1. Autonomous models that self-improve

These LLMs will likely have the power to generate training data to improve their own performance. This may be especially helpful once the vast quantities of information available on the internet have been exhausted. As a recent example, Google’s LLM was able to generate its own questions and answers and then fine-tune itself accordingly.

2. Models that can verify their own output

LLMs that can provide sources for the information they generate can lend greater credibility to the technology as a whole. For example, OpenAI’s WebGPT is able to generate accurate, detailed responses with sources for backup.

3. The development of sparse expert models

Today’s most recognizable LLMs all have several features in common: they’re dense, self-supervised, pre-trained models based on the transformer architecture. However, sparse expert models are moving the technology in another direction. With these models, only the relevant parameters need to be activated for a given input. This allows them to be larger and more complex while requiring fewer resources and less energy for model training.
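As a rough illustration of “only the relevant parameters need to be activated”, the sketch below routes an input to the top two of four experts and skips the rest. The experts are trivial scaling functions and the router weights are made up. In a real sparse expert model, both the router and the experts are learned neural network layers, so this is only a sketch of the routing idea.

```python
import math

def softmax(scores):
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def make_expert(scale):
    """Hypothetical expert: a stand-in for a feed-forward sub-network."""
    return lambda x: [scale * v for v in x]

experts = [make_expert(s) for s in (0.5, 1.0, 2.0, 4.0)]

# Hypothetical router weights: one row per expert.
router = [[1.0, 0.0], [0.0, 1.0], [-1.0, 0.0], [0.0, -1.0]]

def moe_layer(x, k=2):
    """Run only the k highest-scoring experts and blend their outputs."""
    scores = [sum(w * v for w, v in zip(row, x)) for row in router]
    top = sorted(range(len(experts)), key=lambda i: scores[i], reverse=True)[:k]
    gates = softmax([scores[i] for i in top])
    output = [0.0] * len(x)
    for gate, idx in zip(gates, top):
        expert_out = experts[idx](x)  # the other experts are never called
        output = [o + gate * e for o, e in zip(output, expert_out)]
    return output, top

output, used = moe_layer([2.0, 1.0])
print("experts used:", used)
print("output:", [round(v, 3) for v in output])
```

The layer holds four experts’ worth of parameters, but each input only pays the compute cost of two. Scaling that idea up is what lets sparse models grow in total size without a matching growth in compute per token.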

Key takeaways

To sum up, LLMs are foundation models, which are a type of large neural network that can generate or embed text. As LLMs are scaled, they can unlock new capabilities, such as translating foreign languages, writing code, and more. All they have to do is observe recurring patterns in language during model training. The technology really is amazing! But with this innovation come some drawbacks. For example, given the subjective nature of human perception, RLHF may inadvertently incorporate bias into AI products like GPTs during model training.

Here are some of the key takeaways:

With a history dating back to the 1950s and 60s, LLMs really only became a household name recently, with the introduction of ChatGPT.

There are many applications of LLMs including sentiment analysis, full text generation, categorization, text editing, language translation, information extraction, and summarization.

Bigger doesn’t always equal better: researchers have found that ultimately smaller, more optimally trained models outshine their behemoth counterparts and require less energy and fewer resources.

While LLMs have the power to help us solve a number of real-world problems, there’s still quite a ways to go in terms of making them reliable, trustworthy, and safe.

LLM-based GPT products can learn and communicate like humans, but our learning styles and behavioral patterns are still vastly more complex. So while professionals across industries may be biting their nails over job security as academia remains on edge over the essay becoming obsolete, amid the uncertainty are tremors of excitement given the limitless potential and countless opportunities.

To learn more about LLMs, check out our blog where we cover all the ins and outs of AI, machine learning, and the ever-evolving nature of this innovative technology.

About Toloka

Toloka is a European company based in Amsterdam, the Netherlands that provides data for Generative AI development. Toloka empowers businesses to build high quality, safe, and responsible AI. We are the trusted data partner for all stages of AI development from training to evaluation. Toloka has over a decade of experience supporting clients with its unique methodology and optimal combination of machine learning technology and human expertise, offering the highest quality and scalability in the market.
