Why Inter-Rater Reliability Isn’t Enough: Enhancing Data Quality Metrics

Introduction

If you’ve been involved in the data annotation industry, you are likely familiar with the concept of inter-rater reliability. Inter-rater reliability measures the consistency among different raters when they assess the same set of data. It is an important concept in research fields like psychology, medicine, and education, where subjective judgments are needed. There is a general belief in the data labeling industry that high inter-rater reliability indicates high-quality data.

In this article, we will explain why this is not always the case. We will first explain basic methods to calculate inter-rater reliability, such as joint probability agreement, Cohen’s kappa, and Fleiss’ kappa, and then discuss their limitations. Finally, we will show you better ways to control and assess data quality in annotation projects.

What is inter-rater reliability?

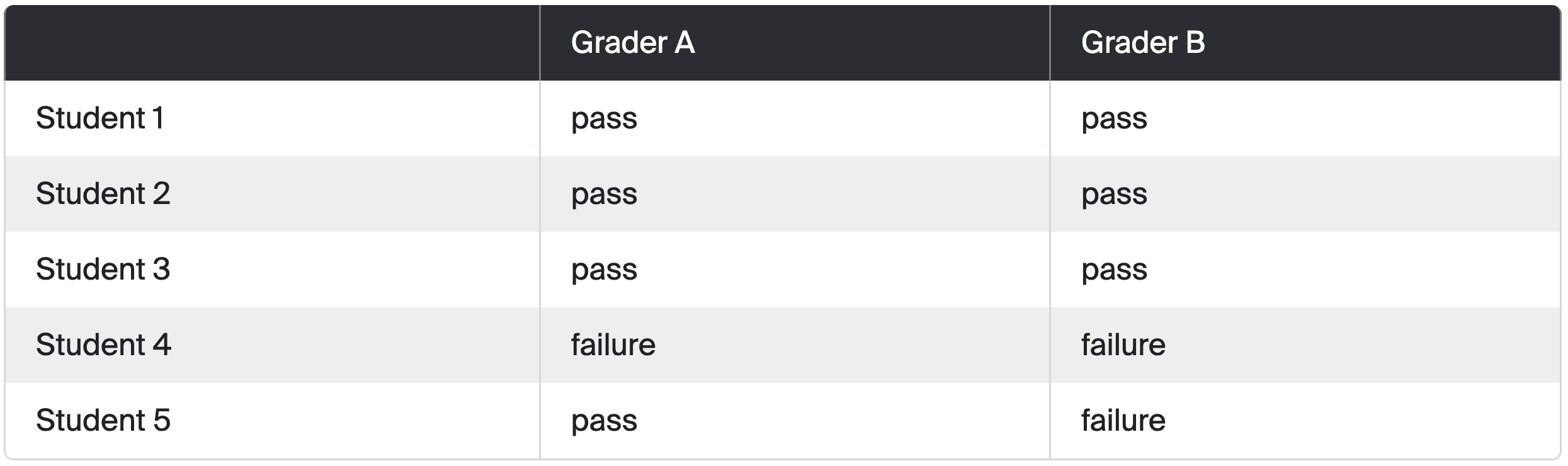

Inter-rater reliability, or inter-rater agreement, refers to the degree of agreement between different raters when scoring the same data. Let's use a practical example to visualize this concept better.

Imagine a high school English class of 5 students who each submit an essay analyzing a novel. Each essay is marked either pass or fail. To ensure fairness, two English teachers grade each essay independently. In 4 cases both teachers assign the same mark (3 passes and 1 failure), but in one case one teacher assigns a failure whereas the other assigns a pass. The figure in this section visualizes this scenario.

Joint probability agreement

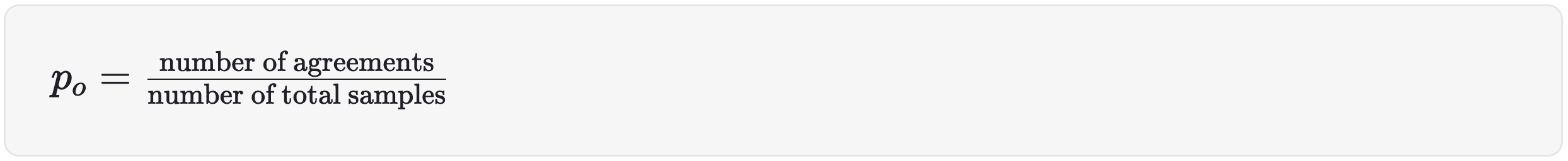

The most straightforward method to calculate inter-rater reliability is using joint probability agreement, also known as percent agreement. To get it, we divide the number of agreements by the total number of samples.

In our example, the percent agreement is 0.8, or 80%. We have four agreements and five samples, so 4/5 = 0.8.

This metric can range from 0 to 1; 0 indicates no agreement and 1 indicates perfect agreement.
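The calculation above can be sketched in a few lines of Python. The lists `teacher_a` and `teacher_b` are a hypothetical encoding of the five essays from our example (the order of the essays is an assumption; only the counts come from the scenario).

```python
def percent_agreement(ratings_a, ratings_b):
    """Share of items on which two raters gave the same label."""
    if len(ratings_a) != len(ratings_b) or not ratings_a:
        raise ValueError("both raters must label the same non-empty set of items")
    agreements = sum(a == b for a, b in zip(ratings_a, ratings_b))
    return agreements / len(ratings_a)


# Hypothetical encoding: 3 joint passes, 1 joint failure, 1 disagreement.
teacher_a = ["pass", "pass", "pass", "fail", "pass"]
teacher_b = ["pass", "pass", "pass", "fail", "fail"]

agreement = percent_agreement(teacher_a, teacher_b)
print(agreement)  # 0.8
```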

Cohen’s kappa coefficient

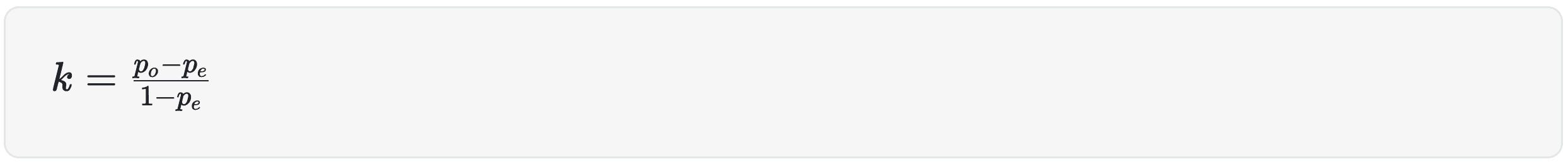

Estimating inter-rater reliability using joint probability agreement has one important disadvantage: it does not take into account that the graders could agree by chance. Imagine that you and your coworker each flip a fair coin. There is a 50% chance you will both get the same result (two heads or two tails). Similarly, when scoring a sample, the random coincidence between raters should be accounted for. This is why statisticians have come up with a better method to estimate inter-rater reliability called Cohen’s kappa.

Cohen’s kappa is defined as κ = (po − pe) / (1 − pe), where

po is the joint probability agreement we have calculated before

pe is the hypothetical probability of a random match between raters

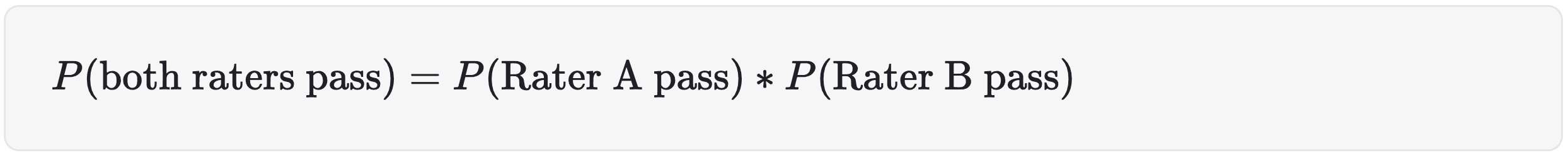

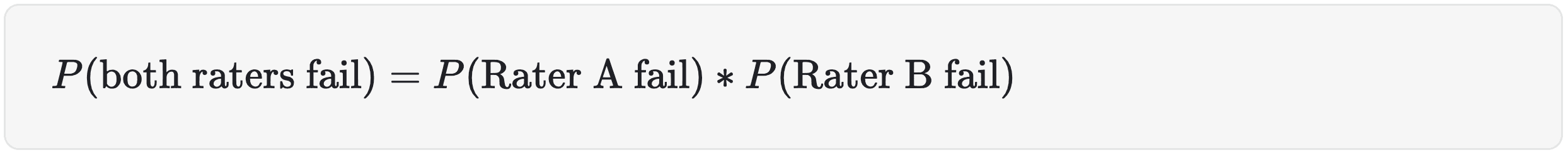

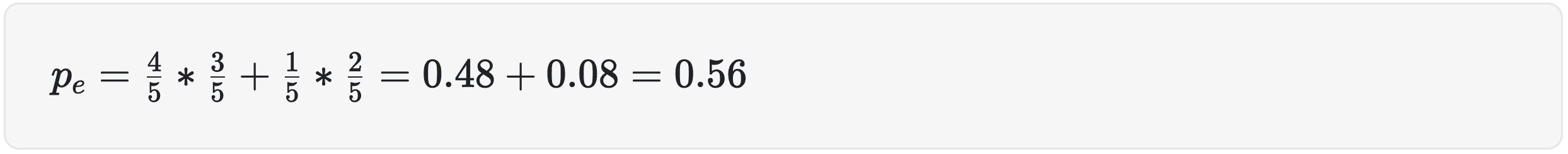

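To calculate pe, we sum the probabilities of the two raters giving the same result by chance. In our case, that is the probability of both raters giving a pass plus the probability of both giving a failure.

Each of these probabilities is the product of the two raters’ individual rates for that mark: the share of essays the first teacher gave that mark multiplied by the share the second teacher gave it.

Let’s plug in the numbers for our essay grading example. One teacher passed 4 of the 5 essays and the other passed 3, so the chance of both passing an essay is 4/5 × 3/5 = 0.48, and the chance of both failing it is 1/5 × 2/5 = 0.08.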

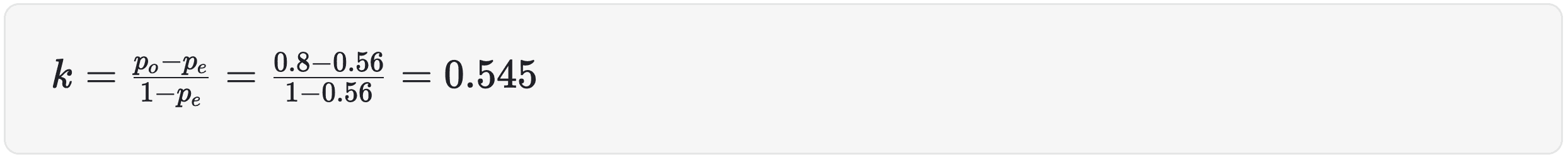

The probability of both graders agreeing by chance is 0.56. Now we can calculate the Cohen’s kappa score.

In our scenario, the kappa statistic is (0.8 − 0.56) / (1 − 0.56) ≈ 0.545, which is usually read as weak to moderate agreement, depending on the interpretation scale used. This metric ranges from -1 to 1, with negative one representing total disagreement between raters, zero representing agreement no better than chance, and positive one representing perfect agreement.
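To make the steps concrete, here is a minimal from-scratch sketch of Cohen’s kappa for two raters. The function name and the lists `teacher_a` and `teacher_b` are our own illustrative choices; the essay order is an assumption, and only the counts come from the example.

```python
from collections import Counter


def cohens_kappa(ratings_a, ratings_b):
    """Return (kappa, p_o, p_e) for two raters who labeled the same items."""
    n = len(ratings_a)
    if n == 0 or n != len(ratings_b):
        raise ValueError("both raters must label the same non-empty set of items")

    # Observed agreement: share of items with identical labels.
    p_o = sum(a == b for a, b in zip(ratings_a, ratings_b)) / n

    # Chance agreement: for each label, multiply the two raters' rates
    # of using that label, then sum over all labels.
    counts_a = Counter(ratings_a)
    counts_b = Counter(ratings_b)
    labels = set(counts_a) | set(counts_b)
    p_e = sum((counts_a[label] / n) * (counts_b[label] / n) for label in labels)

    if p_e == 1:
        raise ValueError("kappa is undefined when chance agreement equals 1")
    return (p_o - p_e) / (1 - p_e), p_o, p_e


# Hypothetical encoding: 3 joint passes, 1 joint failure, 1 disagreement.
teacher_a = ["pass", "pass", "pass", "fail", "pass"]
teacher_b = ["pass", "pass", "pass", "fail", "fail"]

kappa, p_o, p_e = cohens_kappa(teacher_a, teacher_b)
print(round(p_o, 2), round(p_e, 2), round(kappa, 3))  # 0.8 0.56 0.545
```

In practice you would probably reach for an existing library implementation instead; the point of the sketch is only to show where 0.56 and 0.545 come from.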

Fleiss’ kappa and other inter-rater reliability metrics

An important disadvantage of Cohen’s kappa is the fact that it can only be used with two raters. So in our example, if we had more than two teachers grading essays, it would not be possible to estimate inter-rater reliability using this metric. There is a related metric that can be used with more than two raters, called Fleiss’ kappa. We will not go into detail about how this metric is calculated, but the logic behind the calculation is very similar to Cohen’s kappa, adapted to accommodate multiple raters.
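For readers who want to see the mechanics anyway, the following is a minimal sketch of the standard Fleiss’ kappa formula. It assumes every item is rated by the same number of raters. The input layout (a table of per-item category counts) and the three-teacher data are hypothetical and not part of the original example.

```python
def fleiss_kappa(count_table):
    """Fleiss' kappa for a table where count_table[i][j] is the number of
    raters who assigned category j to item i. Every item must have been
    rated by the same number of raters (at least two)."""
    n_items = len(count_table)
    n_raters = sum(count_table[0])
    if n_raters < 2 or any(sum(row) != n_raters for row in count_table):
        raise ValueError("each item needs the same number (>= 2) of ratings")
    n_categories = len(count_table[0])

    # Per-item agreement: share of rater pairs that agree on the item.
    per_item = [
        (sum(c * c for c in row) - n_raters) / (n_raters * (n_raters - 1))
        for row in count_table
    ]
    p_bar = sum(per_item) / n_items

    # Chance agreement from the overall share of each category.
    shares = [
        sum(row[j] for row in count_table) / (n_items * n_raters)
        for j in range(n_categories)
    ]
    p_e = sum(s * s for s in shares)

    if p_e == 1:
        raise ValueError("kappa is undefined when chance agreement equals 1")
    return (p_bar - p_e) / (1 - p_e)


# Hypothetical data: 5 essays, 3 teachers, columns are [pass, fail] counts.
essay_counts = [[3, 0], [3, 0], [3, 0], [0, 3], [2, 1]]
print(round(fleiss_kappa(essay_counts), 3))  # 0.659
```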

You may encounter other ways to calculate inter-rater agreement, like Pearson’s r, Kendall’s τ, Spearman’s ρ, or the intraclass correlation coefficient. These are valid approaches used in research and industry, and the appropriate choice depends on the type of underlying data being analyzed. Although all of these metrics are important statistical methods for calculating agreement between raters, none of them can serve as an estimator of data quality on its own, and the rest of this article will explain why.

Why inter-rater reliability is not a good metric for controlling data quality

Data annotation projects often use an approach similar to our essay scoring example. Multiple annotators label the same data, and the final labels are delivered with an inter-rater reliability score reflecting agreement across labelers for the dataset. Many data providers assume that having a high inter-rater reliability score means high-quality data. It seems logical that if many people agree on something, they must be right. This may be true in some cases, especially in simple or relatively trivial annotation tasks, but it does not always hold in more complex projects that require specific skills or attention to detail.

It is easy to imagine a real-life scenario where annotators agree with each other but are wrong. For example, suppose annotators are asked to mark the color of traffic lights visible in a picture. If the traffic light is far away and partially covered by a traffic sign, less attentive annotators might mark the photo as having no traffic light at all. Only one annotator, paying close attention to detail, might correctly identify the traffic light’s color. This scenario would result in a high inter-rater reliability score, because most annotators agree, but the agreed-upon answer is incorrect.
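A small sketch makes the gap between agreement and correctness visible. The label names, the number of annotators, and the ground truth below are hypothetical values chosen to mirror the traffic light scenario; the share of annotators backing the majority label is used here as a rough stand-in for agreement.

```python
from collections import Counter


def majority_vote(labels):
    """Return the most common label and the share of annotators who chose it."""
    label, votes = Counter(labels).most_common(1)[0]
    return label, votes / len(labels)


# Hypothetical annotations for one hard image: four annotators miss the
# partially covered traffic light, one spots it and labels it correctly.
annotations = ["no_traffic_light"] * 4 + ["red"]
ground_truth = "red"

label, share = majority_vote(annotations)
print(label, share, label == ground_truth)  # no_traffic_light 0.8 False
```

Here 80% of annotators agree, yet the agreed label is wrong, and only a comparison against a trusted answer reveals it.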

Similar situations frequently occur in data annotation. Correctly labeling these harder cases distinguishes high-quality datasets from average ones. This is why Toloka does not use inter-rater reliability as a quality measure. Instead, we have developed a more complex process to understand and measure data quality accurately.

Better ways of ensuring quality

When working with clients, we receive an unlabeled dataset and return a dataset where each data point has a corresponding label. It sounds simple on the surface, but behind each label, there is a stack of technical decisions, statistics, and quality control mechanisms. Let’s take a closer look at the techniques we use to ensure data quality at Toloka and how we ensure our clients receive high-quality data.

Project decomposition

Our clients often come to us with complex annotation tasks, so the first step is to perform task decomposition. This breaks complex tasks into simpler ones, making the annotators’ job easier and allowing us to assign appropriate annotators to each subproject according to the skills required. A simple example illustrates the idea.

The task is to label mechanical tools visible in a picture. This could be broken into two projects: in project one, annotators identify if there are mechanical tools in the picture, and in project two, annotators label the actual tools. These are now separate projects and require annotators with different skills. The project identifying whether tools are present does not require any specialized knowledge, but the second one does. In the latter, we need expert labelers who can identify specific tools and write the proper names. At Toloka, we often deal with projects that are broken into many more subprojects, involving further decomposition.

Understanding and measuring annotator skills

Using this decomposition approach and assigning annotators to projects according to their skill levels allows us to control the desired level of confidence in aggregated labels. This means that several annotators label the same instance, and we aggregate the results, taking into account their skill levels. This provides us with a label that has an associated confidence score. If the confidence is too low, we ask more annotators with the required skills to label the instance. This approach enables us to balance the search for quality with cost-effectiveness, as we only use the number of annotators needed to achieve the desired confidence level.
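The idea of aggregating labels by skill and asking for more annotators when confidence is low can be illustrated with a simple weighted vote. This is only a sketch under our own assumptions: the weighting scheme, the 0.75 threshold, the annotator IDs, and the skill values are all hypothetical, and production aggregation models are typically more sophisticated than this.

```python
from collections import defaultdict

CONFIDENCE_THRESHOLD = 0.75  # hypothetical target confidence


def aggregate_labels(votes, skills):
    """Skill-weighted vote.

    votes:  dict of annotator_id -> label
    skills: dict of annotator_id -> positive skill weight
    Returns (label, confidence), where confidence is the winning label's
    share of the total skill weight.
    """
    if not votes:
        raise ValueError("at least one vote is required")
    weight_by_label = defaultdict(float)
    for annotator_id, label in votes.items():
        weight_by_label[label] += skills[annotator_id]
    total_weight = sum(weight_by_label.values())
    best_label = max(weight_by_label, key=weight_by_label.get)
    return best_label, weight_by_label[best_label] / total_weight


# Hypothetical skills and votes for one image.
skills = {"ann_1": 0.9, "ann_2": 0.6, "ann_3": 0.5, "ann_4": 0.9}
votes = {"ann_1": "wrench", "ann_2": "pliers", "ann_3": "wrench"}

label, confidence = aggregate_labels(votes, skills)
if confidence < CONFIDENCE_THRESHOLD:
    # Confidence is too low, so one more skilled annotator labels the item.
    votes["ann_4"] = "wrench"
    label, confidence = aggregate_labels(votes, skills)

print(label, round(confidence, 2))  # wrench 0.79
```

Stopping as soon as the threshold is reached is what keeps the cost down: easy items need few annotators, while hard items get more.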

This approach also means we need to monitor the skill levels of our annotators. We use initial tests to check annotators’ skill levels before they participate in projects. During the annotation process, we mix control tasks, for which the correct answer is already known, with the normal tasks that annotators work on and use the control tasks for continual monitoring.
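As a rough illustration of control-task monitoring, the sketch below scores one annotator only on the tasks whose correct answers are known. The task IDs, labels, and the use of plain accuracy as the skill estimate are assumptions made for the example.

```python
def estimate_skill(responses, control_answers):
    """Accuracy of one annotator on the control tasks they answered.

    responses:       dict of task_id -> label given by the annotator
    control_answers: dict of task_id -> known correct label
    Returns None if the annotator has not seen any control task yet.
    """
    checked = [task_id for task_id in responses if task_id in control_answers]
    if not checked:
        return None
    correct = sum(responses[t] == control_answers[t] for t in checked)
    return correct / len(checked)


# Hypothetical control tasks (c1..c4) mixed in with normal tasks (t1, t2).
control_answers = {"c1": "red", "c2": "green", "c3": "none", "c4": "red"}
responses = {
    "t1": "green", "c1": "red", "t2": "red",
    "c2": "green", "c3": "red", "c4": "red",
}

print(estimate_skill(responses, control_answers))  # 0.75
```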

Final audit with meaningful quality metrics

As a final step, if the project was broken into parts, we recombine the results to produce the final labels. To ensure the quality of the dataset, we audit a small percentage of it and compute estimated metrics such as accuracy, precision, recall, and F-score. These metrics provide a better estimation of the overall quality of the dataset compared to measuring the inter-rater reliability. This is particularly true when dealing with complex annotation tasks, where annotators may often agree with each other and still be wrong.
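The audit metrics mentioned above can be computed by comparing delivered labels with the auditor’s labels on the sampled items. The sketch below handles the binary case for a single positive class; the label names and the ten-item sample are hypothetical.

```python
def audit_metrics(delivered, audited, positive):
    """Accuracy, precision, recall, and F1 of delivered labels, measured
    against audited (trusted) labels for one positive class."""
    if len(delivered) != len(audited) or not delivered:
        raise ValueError("need the same non-empty set of items in both lists")
    pairs = list(zip(delivered, audited))
    tp = sum(d == positive and a == positive for d, a in pairs)
    fp = sum(d == positive and a != positive for d, a in pairs)
    fn = sum(d != positive and a == positive for d, a in pairs)
    accuracy = sum(d == a for d, a in pairs) / len(pairs)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = (2 * precision * recall / (precision + recall)
          if precision + recall else 0.0)
    return {"accuracy": accuracy, "precision": precision,
            "recall": recall, "f1": f1}


# Hypothetical audit sample of 10 images: does the picture contain a tool?
audited = ["tool", "tool", "tool", "tool", "none",
           "none", "none", "none", "tool", "none"]
delivered = ["tool", "tool", "tool", "none", "none",
             "none", "none", "tool", "tool", "none"]

metrics = audit_metrics(delivered, audited, positive="tool")
print({name: round(value, 2) for name, value in metrics.items()})
# {'accuracy': 0.8, 'precision': 0.8, 'recall': 0.8, 'f1': 0.8}
```

Unlike agreement scores, every number here is anchored to a trusted answer, so annotators who agree with each other but are wrong lower the result.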

Summary

Traditional inter-rater reliability metrics are insufficient for ensuring data quality, especially for complex annotation tasks. These metrics are often favored for their ease of computation rather than their correlation with quality data. A more thorough approach involves task decomposition, skill-based annotation, rigorous quality control, and regular quality audits.

If you’re planning to annotate large datasets with a focus on quality, consider partnering with Toloka and take advantage of our experience and expertise.