LLM for code generation: a scalable pipeline to gather SFT data

Domain-specific LLMs

Many general-purpose LLMs underperform in specialized areas like law, engineering, coding, or medicine. To close the gap, numerous companies have started developing domain-specific models optimized for their particular areas of expertise. For instance, there are models like Med-PaLM for medicine and BloombergGPT for finance. In the coding domain, there are fine-tuned models such as GitHub Copilot, StarCoder2, and Code Llama. While these models are helpful for practical tasks, they still have limitations and struggle with more complex problems.

One of the toughest challenges in improving model performance is building high-quality, niche-specific Supervised Fine-Tuning (SFT) datasets that can be used to fine-tune base LLMs to excel in the desired domain.

In this article, we will introduce a pipeline that Toloka developed to collect a high-quality dataset in the coding domain with a throughput of 2000 prompt-completion pairs a month.

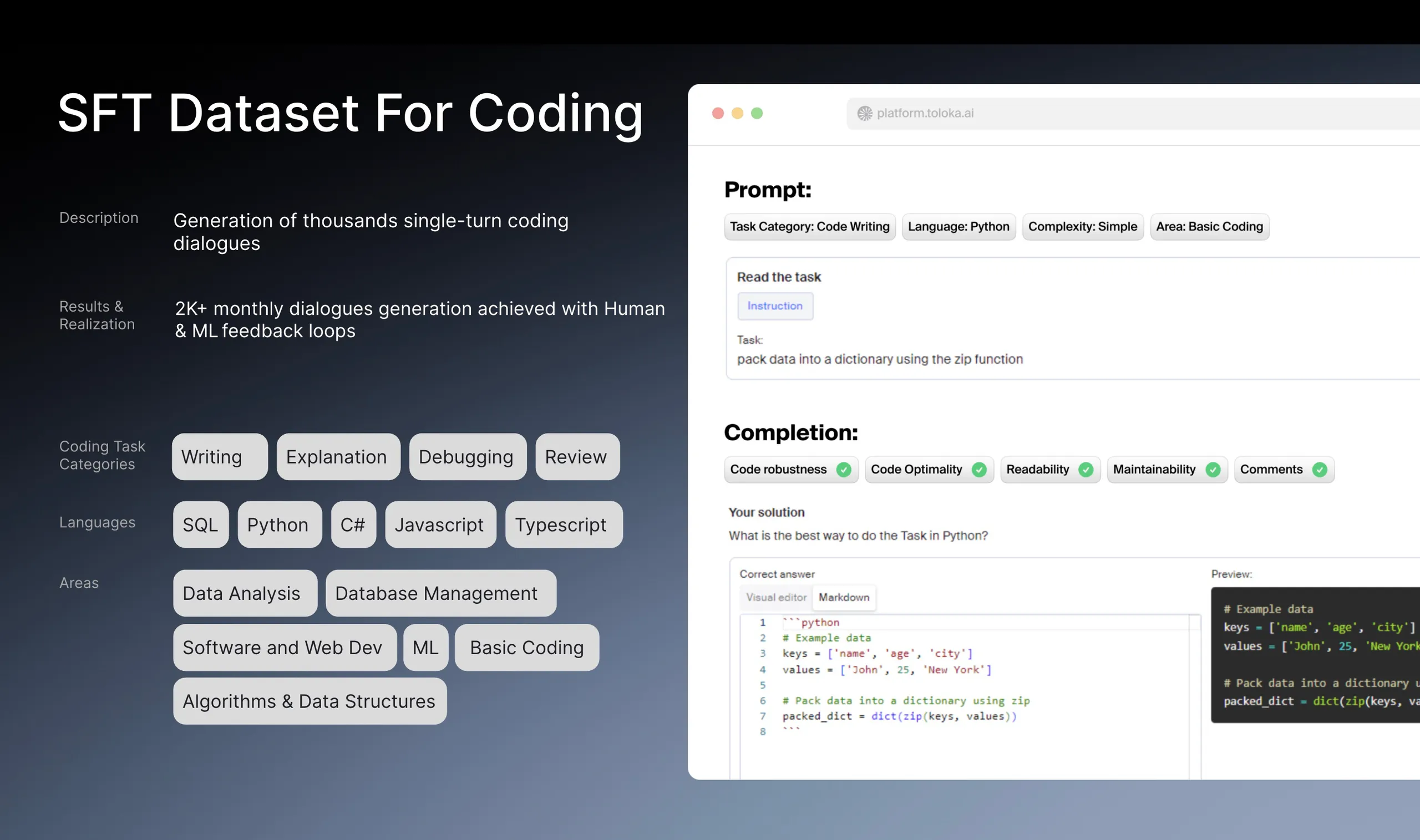

SFT dataset for the coding domain

The challenge involved creating an SFT dataset covering various programming languages, including Python, SQL, Scala, Go, C, C++, R, and JavaScript. Toloka aimed to generate a significant number of high-quality question-answer pairs addressing different coding challenges within this domain.

In terms of resources, we needed to coordinate highly qualified coding experts to write prompts and completions for each programming language. To build a useful dataset, the prompts needed to be complex and completions needed to be accurate, helpful, and concise. We designed the workflow for scalability to deliver the data on a schedule and ensure the quality of the final data.

Pipeline for coding SFT dataset

To tackle the challenge, Toloka crafted a solution that augments human expertise with modern Machine Learning (ML) techniques in a data-gathering pipeline. We assembled a team of coding experts proficient in all the required programming languages. They form the foundation of the project, and their primary responsibility is to maintain high data quality.

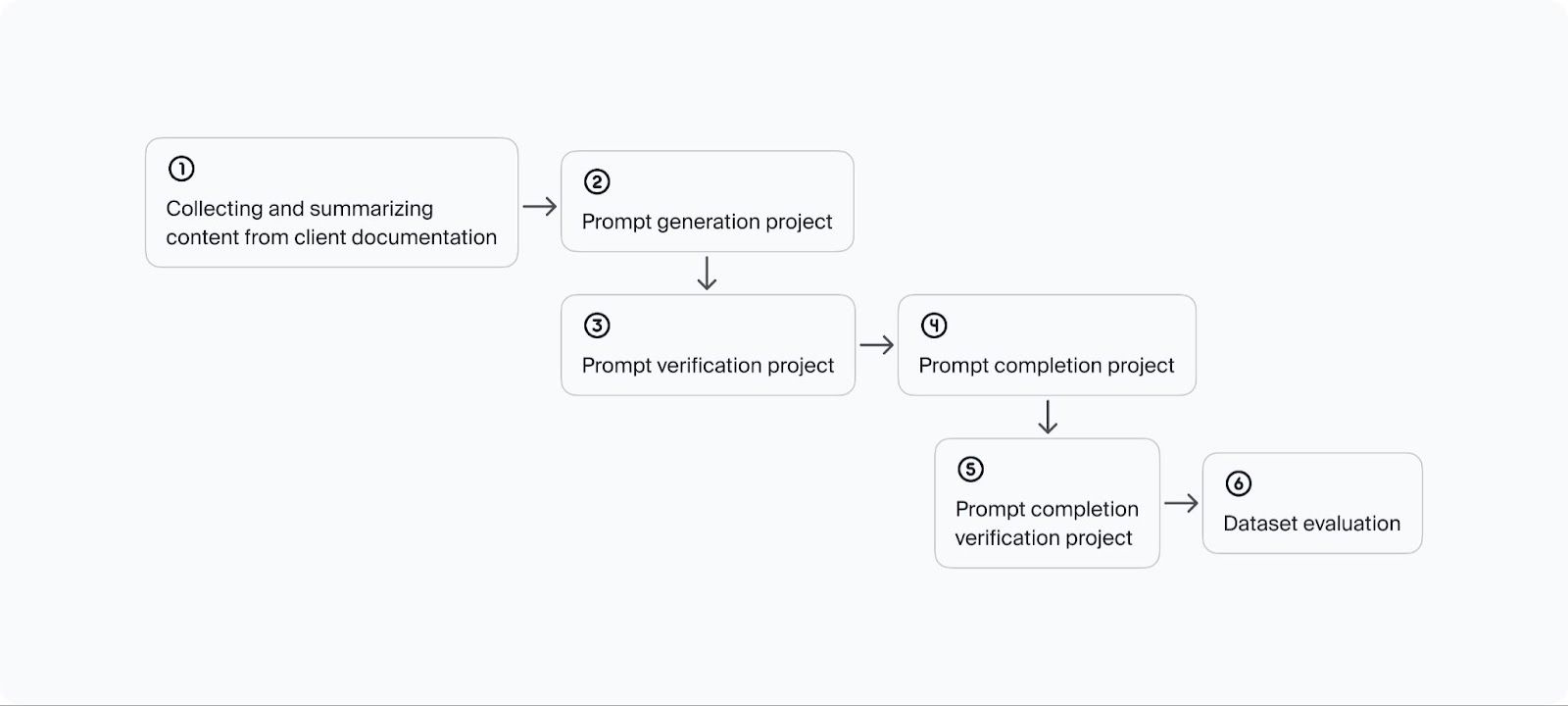

To assist the coding experts and speed up the process, we developed several ML algorithms that perform data extraction and automatic data checks. The resulting pipeline is shown in the accompanying schema, and each step is described in the subsections that follow.

Step 1. Collecting and summarizing the context data from documentation

The initial step of the pipeline involves extracting information from clients’ documentation regarding the problems that the LLM must be able to address. This stage automatically gathers details about each coding problem, such as context, keywords, programming language, category, and subcategory. These details are then utilized in the subsequent step to generate prompts effectively.
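As a rough illustration of what one extracted record might look like, here is a minimal sketch. The class name `ContextRecord`, the helper `to_task_payload`, the field names, and the sample values are all assumptions made for this example; the article does not describe the actual data format.

```python
import json
from dataclasses import asdict, dataclass
from typing import List


@dataclass
class ContextRecord:
    """Hypothetical container for the details gathered in Step 1."""
    context: str
    keywords: List[str]
    programming_language: str
    category: str
    subcategory: str


def to_task_payload(record: ContextRecord) -> str:
    """Serialize a record to JSON so it can be handed to an expert in Step 2."""
    return json.dumps(asdict(record), ensure_ascii=False, sort_keys=True)


# Illustrative values only, not taken from a real client document.
example = ContextRecord(
    context="Users need to deduplicate rows in a reporting table.",
    keywords=["deduplication", "window functions"],
    programming_language="SQL",
    category="data cleaning",
    subcategory="duplicate detection",
)
payload = to_task_payload(example)
```

Keeping the record flat and JSON-serializable makes it easy to pass the same object to both human-facing tasks and automated checks later in the pipeline.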

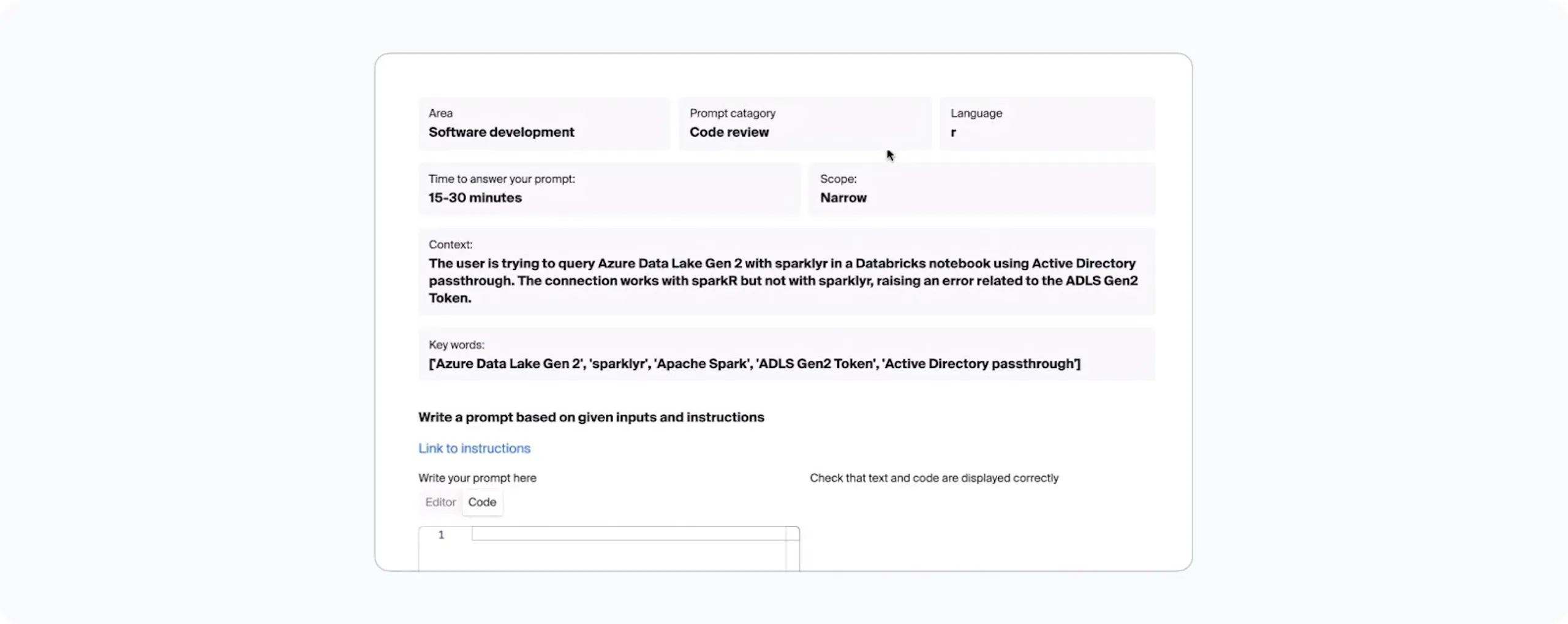

Step 2. Generating prompts with experts

In the second step, the extracted information is passed to a human coding expert. Their task is to create the actual prompt by following specific instructions and carefully considering the context and other relevant details. An example of such a task is illustrated in the screenshot below.

Prompt generation task

To support experts in generating prompts, we use open LLMs to automatically generate synthetic data across a range of topics, use cases, and scenarios. This data serves as examples to inspire creativity, with ideas that experts can build on. Ultimately, this approach improves the efficiency of experts and enhances the diversity and quality of prompts collected during this phase.

Step 3. Verifying prompts

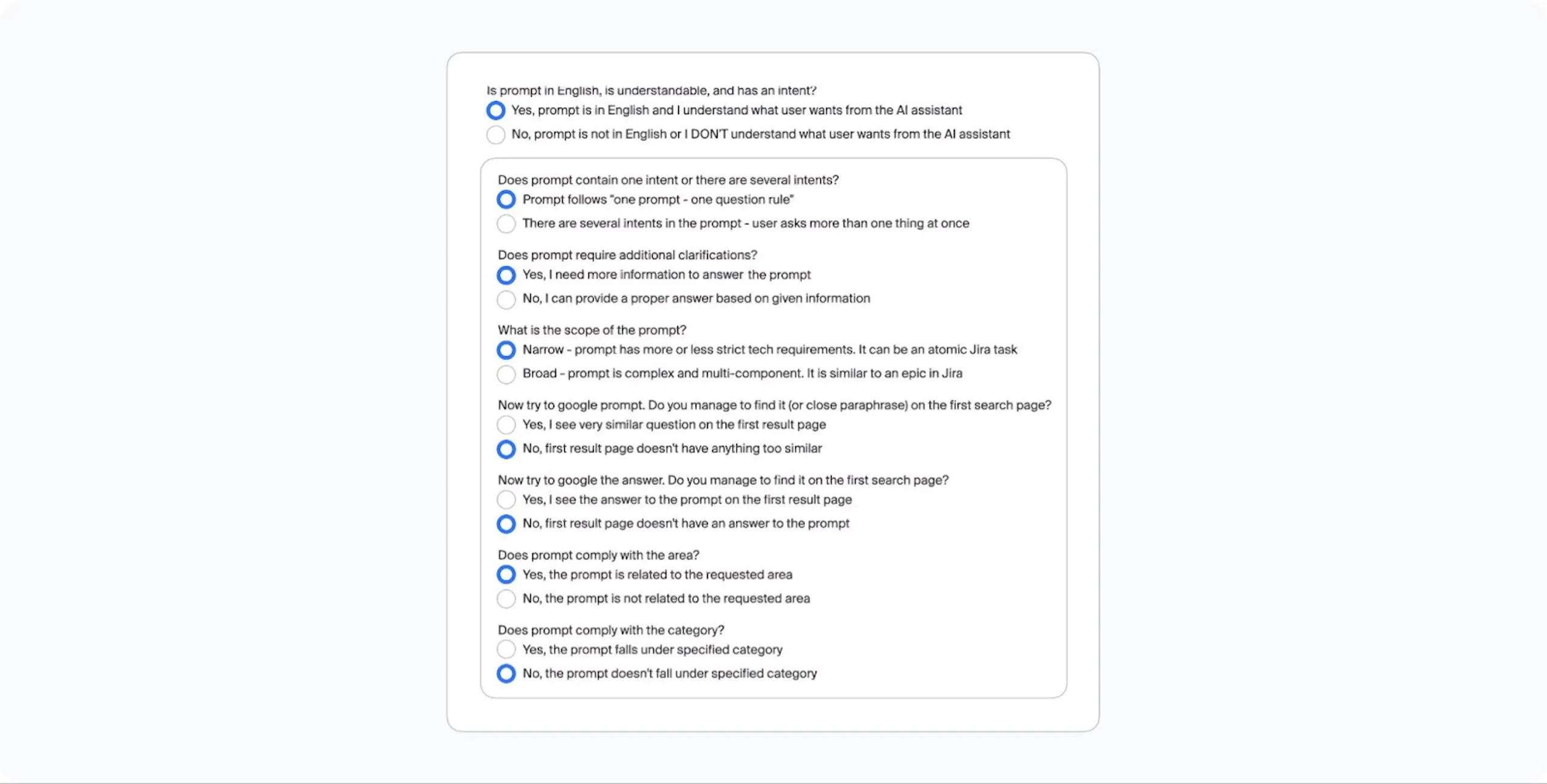

In this step, the prompts crafted in the previous step are reviewed by a second line of coding experts and by ML models. The models perform basic classification checks to confirm that the prompt corresponds to the chosen category, subdomain, and programming language. Meanwhile, human coding experts assess the prompt’s quality, suitability, and adherence to instructions, context, and provided details. The screenshot below shows examples of some questions given to experts.

A subset of questions from the prompt verification task given to human coding experts assessing the prompt’s quality, suitability, and adherence to instructions, context, and provided details.

In our pipeline, the prompts that do not meet the required criteria are rejected and need to be rewritten. The accepted ones move on to the next step, where coding experts are tasked with writing completions.
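The accept-or-reject decision described above can be sketched as follows. This is only an illustration under stated assumptions: the function name `review_prompt`, the field names, and the rule that every model check and every expert answer must pass are hypothetical, since the article does not spell out the exact decision logic.

```python
from typing import Dict, List, Tuple

# Fields that the classification models are assumed to check.
CHECKED_FIELDS = ("category", "subdomain", "programming_language")


def review_prompt(
    declared: Dict[str, str],
    predicted: Dict[str, str],
    expert_answers: Dict[str, bool],
) -> Tuple[bool, List[str]]:
    """Return (accepted, reasons). A prompt is accepted only if nothing failed."""
    reasons: List[str] = []
    # Model checks: the predicted label must match the declared one.
    for name in CHECKED_FIELDS:
        if declared.get(name) != predicted.get(name):
            reasons.append(f"model check failed: {name}")
    # Expert checks: every verification question must be answered positively.
    for question, passed in expert_answers.items():
        if not passed:
            reasons.append(f"expert check failed: {question}")
    return (not reasons, reasons)


accepted, reasons = review_prompt(
    declared={"category": "data cleaning", "subdomain": "analytics",
              "programming_language": "SQL"},
    predicted={"category": "data cleaning", "subdomain": "analytics",
               "programming_language": "Python"},
    expert_answers={"follows_instructions": True, "is_complex_enough": True},
)
```

Returning the list of reasons alongside the verdict gives the author of a rejected prompt something concrete to fix when rewriting it.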

Step 4. Writing completions with experts

The fourth step in our pipeline requires experts to follow carefully crafted instructions when writing answers to user queries. They must ensure that their completions fully address user requests and are truthful, elegant, and concise. Once an expert completes a response, it proceeds to the next step for further verification.

Step 5. Verifying completions with experts

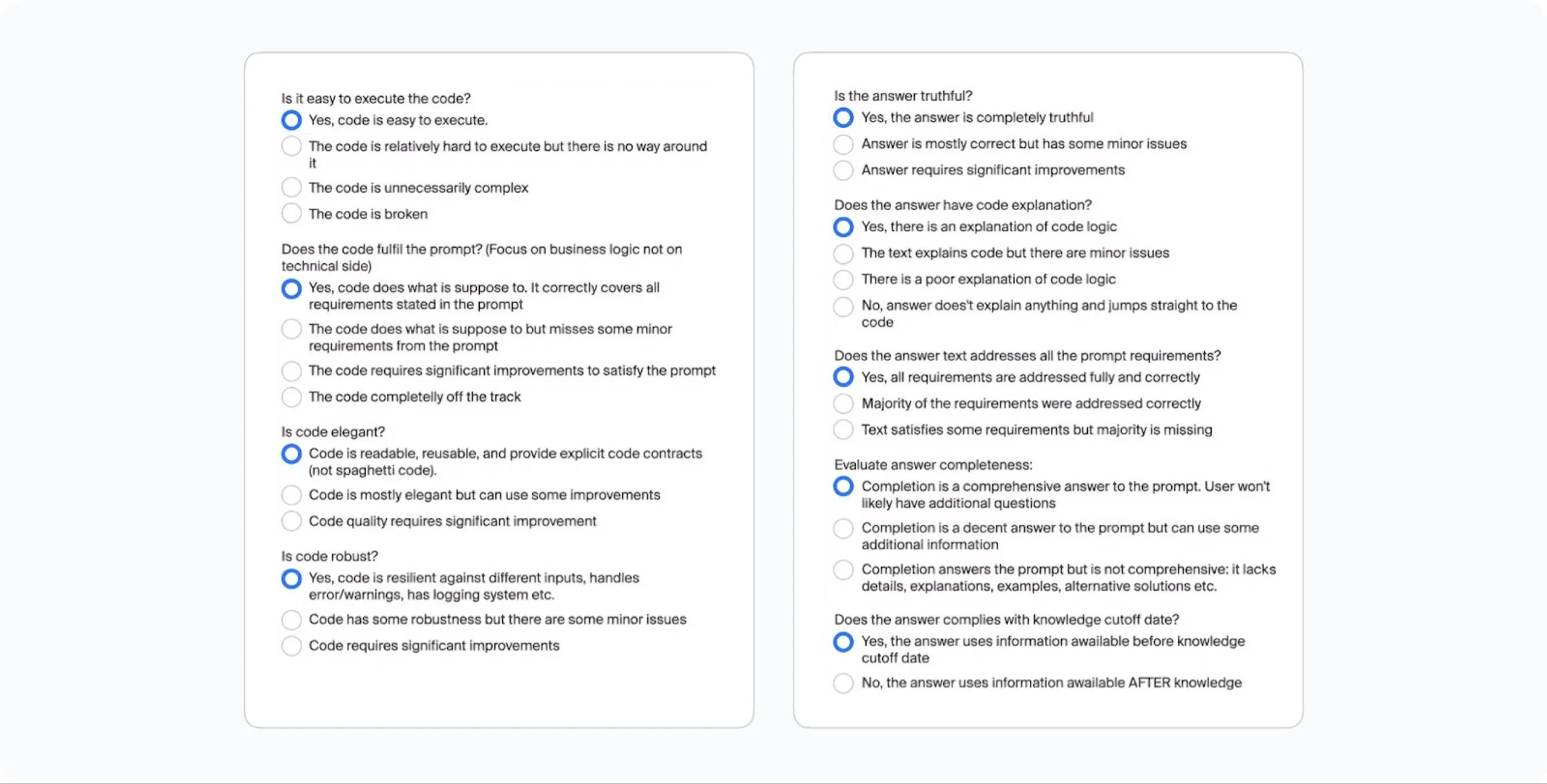

When completions are ready, a second line of human coding experts verifies the text. This ensures that each completion is assessed by a different person from the one who wrote it. Once again, experts must answer detailed questions about the completion’s quality. Examples of these questions are provided in the screenshot below.

Prompt completion verification task

As in the prompt verification step, if the completion’s quality does not meet the required standards, it is rejected and must be rewritten. If it passes, it is accepted together with the corresponding prompt and becomes a new data point in the dataset.

Step 6. Evaluating the created dataset

This brings us to the final step in the pipeline, where we evaluate the dataset to assess its overall quality before it is used to train an LLM. For each data point in the dataset, we ask different models, such as GPT-3.5, Llama, and Mistral, to complete the prompt, and then compare their answers to the responses written by our experts. We do this using both expert evaluation, to ensure data quality, and automatic evaluation with GPT-4, to reduce cost and improve scalability. In both scenarios, a decision maker (an expert or a model) is presented with a side-by-side comparison of two answers and asked which one is better. We then compute the win rate of the expert-written answers against each of these models; a high win rate gives us confidence that the dataset will improve the LLM our client is developing.
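To make the win-rate idea concrete, here is a minimal sketch of how side-by-side verdicts could be aggregated per baseline model. The function name `win_rates`, the verdict labels, and the choice to count ties as non-wins are assumptions for this example, not details from the pipeline itself.

```python
from collections import Counter, defaultdict
from typing import Dict, Iterable, Tuple


def win_rates(judgments: Iterable[Tuple[str, str]]) -> Dict[str, float]:
    """Compute the expert win rate against each baseline model.

    Each judgment is (baseline_model, verdict), where verdict is one of
    "expert", "model", or "tie". Ties are counted as non-wins (an assumption).
    """
    counts: Dict[str, Counter] = defaultdict(Counter)
    for baseline, verdict in judgments:
        counts[baseline][verdict] += 1
    return {
        baseline: tally["expert"] / sum(tally.values())
        for baseline, tally in counts.items()
    }


# Illustrative verdicts only, not real evaluation results.
sample_judgments = [
    ("GPT-3.5", "expert"),
    ("GPT-3.5", "model"),
    ("GPT-3.5", "expert"),
    ("Mistral", "expert"),
    ("Mistral", "tie"),
]
rates = win_rates(sample_judgments)
```

The same aggregation works whether the verdicts come from human experts or from an automatic judge, so the two evaluation modes can be compared on equal terms.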

In addition to ensuring data quality, we also examine the distribution of the dataset based on languages, technologies, and cases to ensure it aligns with the desired parameters before providing it to the client. Finally, we perform automated checks to identify and remove any semantically similar samples. Only rigorously checked datasets are delivered to the client. If any criteria are not met, we continue refining the dataset until all issues are resolved.
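The two checks above can be sketched as follows. Note the assumptions: the article does not say how semantic similarity is measured, so this example substitutes a simple lexical proxy (Jaccard overlap of word sets) to stay dependency-free, whereas a production check would more likely compare embedding vectors. The function names, the `language` key, and the 0.8 threshold are likewise hypothetical.

```python
import re
from collections import Counter
from typing import Dict, List, Set


def tokens(text: str) -> Set[str]:
    """Lowercase word set used as a crude stand-in for a semantic representation."""
    return set(re.findall(r"[a-z0-9_]+", text.lower()))


def jaccard(a: Set[str], b: Set[str]) -> float:
    """Jaccard overlap of two token sets; two empty sets count as identical."""
    if not a and not b:
        return 1.0
    return len(a & b) / len(a | b)


def drop_near_duplicates(prompts: List[str], threshold: float = 0.8) -> List[str]:
    """Keep a prompt only if it is not too similar to any prompt already kept."""
    kept: List[str] = []
    kept_tokens: List[Set[str]] = []
    for prompt in prompts:
        current = tokens(prompt)
        if all(jaccard(current, seen) < threshold for seen in kept_tokens):
            kept.append(prompt)
            kept_tokens.append(current)
    return kept


def share_by(samples: List[Dict[str, str]], key: str) -> Dict[str, float]:
    """Fraction of samples per value of `key`, e.g. per programming language."""
    counts = Counter(sample[key] for sample in samples)
    total = sum(counts.values())
    return {value: n / total for value, n in counts.items()}


unique_prompts = drop_near_duplicates([
    "Write a SQL query to find duplicate rows in a table",
    "Write a SQL query to find duplicate rows in a table.",
    "Implement binary search in Go",
])
language_share = share_by(
    [{"language": "Python"}, {"language": "Python"},
     {"language": "Go"}, {"language": "SQL"}],
    "language",
)
```

The pairwise comparison is quadratic in the number of prompts, which is acceptable for a sketch but would need an approximate nearest-neighbor index for larger datasets.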

Summary

The above pipeline demonstrates how to structure an SFT data-gathering project by combining human expertise with ML solutions to boost efficiency and scalability. Collecting a domain-specific dataset poses unique challenges, necessitating a large pool of expert contributors and effective coordination of their activities. While we’ve applied this pipeline to coding, it can be adapted to other domains such as medicine, law, engineering, finance, pharmaceuticals, and many more.

If you are interested in gathering a dataset for a specific domain, feel free to reach out to us. We can build a custom solution to meet your model’s needs.

Why is SFT important?

Supervised Fine-Tuning (SFT) is a crucial technique in transfer learning with pre-trained large language models. SFT adapts an existing model's parameters to the demands of a specific application, improving performance on that task without the computational cost of training a model from scratch.

Fine-tuning involves using curated datasets to guide the adjustment of model parameters. Providing the model with pairs of input data and the corresponding desired outputs gives the model clear feedback during training, enabling it to learn specific patterns relevant to the particular task. The quality of the training data is paramount because the model's output can only be as good as the data it learns from.
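As a small illustration of such input-output pairs, the sketch below serializes prompt-completion pairs as JSON Lines, a format commonly used for fine-tuning data. The field names `prompt` and `completion`, the helper `to_jsonl`, and the sample pairs are assumptions for this example; the exact schema depends on the training framework.

```python
import json
from typing import Iterable, Tuple


def to_jsonl(pairs: Iterable[Tuple[str, str]]) -> str:
    """Serialize (prompt, completion) pairs, one JSON object per line."""
    return "\n".join(
        json.dumps({"prompt": prompt, "completion": completion}, ensure_ascii=False)
        for prompt, completion in pairs
    )


# Illustrative pairs only.
sft_pairs = [
    ("How do I reverse a list in Python?", "Use `my_list[::-1]` or `my_list.reverse()`."),
    ("How do I count rows in SQL?", "Use `SELECT COUNT(*) FROM table_name;`."),
]
jsonl_text = to_jsonl(sft_pairs)
```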

Reliable SFT requires the involvement of numerous industry experts responsible for annotating data, designing prompts, and validating the model's outputs.

What’s unique about SFT datasets?

Businesses across various industries leverage Large Language Models (LLMs) by refining them for specific needs through transfer learning. However, fine-tuning an LLM necessitates niche-specific datasets, which require domain expertise, significant resources, and experience in similar process design.

This is where Toloka provides value, delivering high-quality Supervised Fine-Tuning (SFT) datasets to meet the needs of a specialized model. Our expertise spans multiple domains, allowing us to scale processes and ensure reliable fine-tuning, Reinforcement Learning from Human Feedback (RLHF), and evaluation. If you need help gathering domain-specific datasets, reach out.

What are domain-specific LLMs?

Transfer learning enables companies to optimize general-purpose large language models for specialized areas rather than creating new ML models from scratch. Adapting the original model helps overcome the limitations LLMs face when applied directly to specific domains.

There are many examples of widely used fine-tuned LLMs in various industries. For instance, CaseLawGPT assists in legal research, while LexisNexis AI is tailored for case predictions. ClinicalBERT is used for medical text analysis, ManufactureGPT and FactoryBERT improve production efficiency and predictive maintenance, BloombergGPT supports financial analytics, and FarmBERT aids in developing agricultural techniques.

What are some examples of large language models for code generation?

Several fine-tuned models are well known in the software development domain, including GitHub Copilot, StarCoder2, and Code Llama. These models enhance developers' productivity by generating code snippets and completions, explaining code for review or debugging, offering hints for algorithmic tasks, and assisting with documentation.

Beyond these are specialized code generation models like IntelliCode, which provides recommendations based on best practices from open-source projects, or Replit's Ghostwriter, which offers an integrated coding assistant experience within the Replit platform.

However, despite their advanced capabilities, many LLMs for code generation share some common limitations concerning accuracy, security, and handling complex problems. Sometimes, they generate incorrect code and struggle with solutions requiring deep contextual knowledge.

These limitations highlight the need for further refinement of large language models to better address domain-specific challenges and ensure high-quality outputs.