Classifying noisy images: Machines vs. humans

Machine learning models are constantly being improved to increase accuracy and robustness to noise — the ability to make correct predictions on noisy data. This is particularly crucial for image classification algorithms, since real-world images often contain noise from various sources, depending on the weather conditions, time of day, and other factors.

To evaluate noise robustness, we conducted an experiment to see whether human labelers or ML models are better at classifying increasingly noisy images. We chose a fine-tuned ResNet-50 model as our classification model. Our goal was to identify the acceptable noise levels for both the model and humans and to test our hypothesis that people are better at classifying noisy images. The results showed some intriguing and unexpected findings. Read on to see which group handles noisy images better.

How to add noise to images

Let's first look at the basic principles of image formation. Pictures are composed of small units called pixels, each with a value that represents its color and brightness. These values are represented by three components: red, green, and blue, each ranging from 0 to 255. The combination of these three numbers determines the final color of the pixel, with high values creating bright colors and low values creating dark colors. For instance, a pixel with red, green, and blue values of 255, 0, 0 is a purely red pixel, and 255, 255, 255 represents a white pixel. The arrangement of pixel values and components creates the overall appearance of an image.
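As a small illustration of this representation, the sketch below builds a tiny 2x2 RGB image as a NumPy array. The array layout (height, width, channel) and the use of the channel mean as a rough brightness measure are assumptions made for the example, not something the experiment depends on.

```python
import numpy as np

# A 2x2 RGB image: shape (height, width, 3), one value in 0..255 per channel.
image = np.array(
    [[[255, 0, 0], [255, 255, 255]],   # pure red, white
     [[0, 0, 0], [0, 0, 255]]],        # black, pure blue
    dtype=np.uint8,
)

red_pixel = image[0, 0]      # -> [255, 0, 0]
white_pixel = image[0, 1]    # -> [255, 255, 255]

# Rough brightness per pixel: the mean of the three channels (an assumption;
# other definitions of brightness weight the channels differently).
brightness = image.mean(axis=2)
```

High channel values give a high brightness (the white pixel), and low values give a low one (the black pixel).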

Adding noise to images involves intentionally altering some pixel values in the image. This mimics real-world conditions like transmission errors, camera noise, etc. Two common types of noise that can be added are salt-and-pepper noise and Gaussian noise.

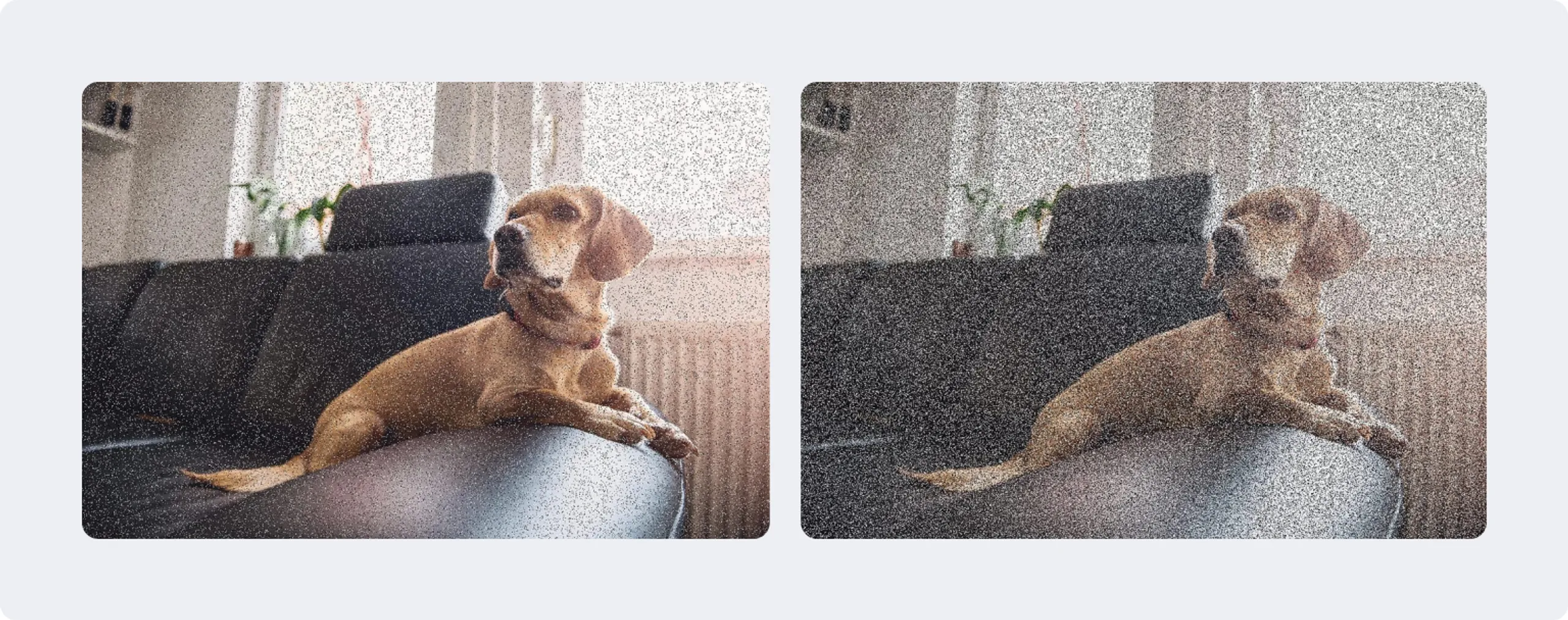

Salt-and-pepper noise is a type of image noise that randomly changes some pixels to either black ("pepper") or white ("salt"), at a frequency determined by a fixed probability value p. A larger value of p will result in more pixels being changed in the image. The proportion of black and white pixels is usually equal, but it can be adjusted.

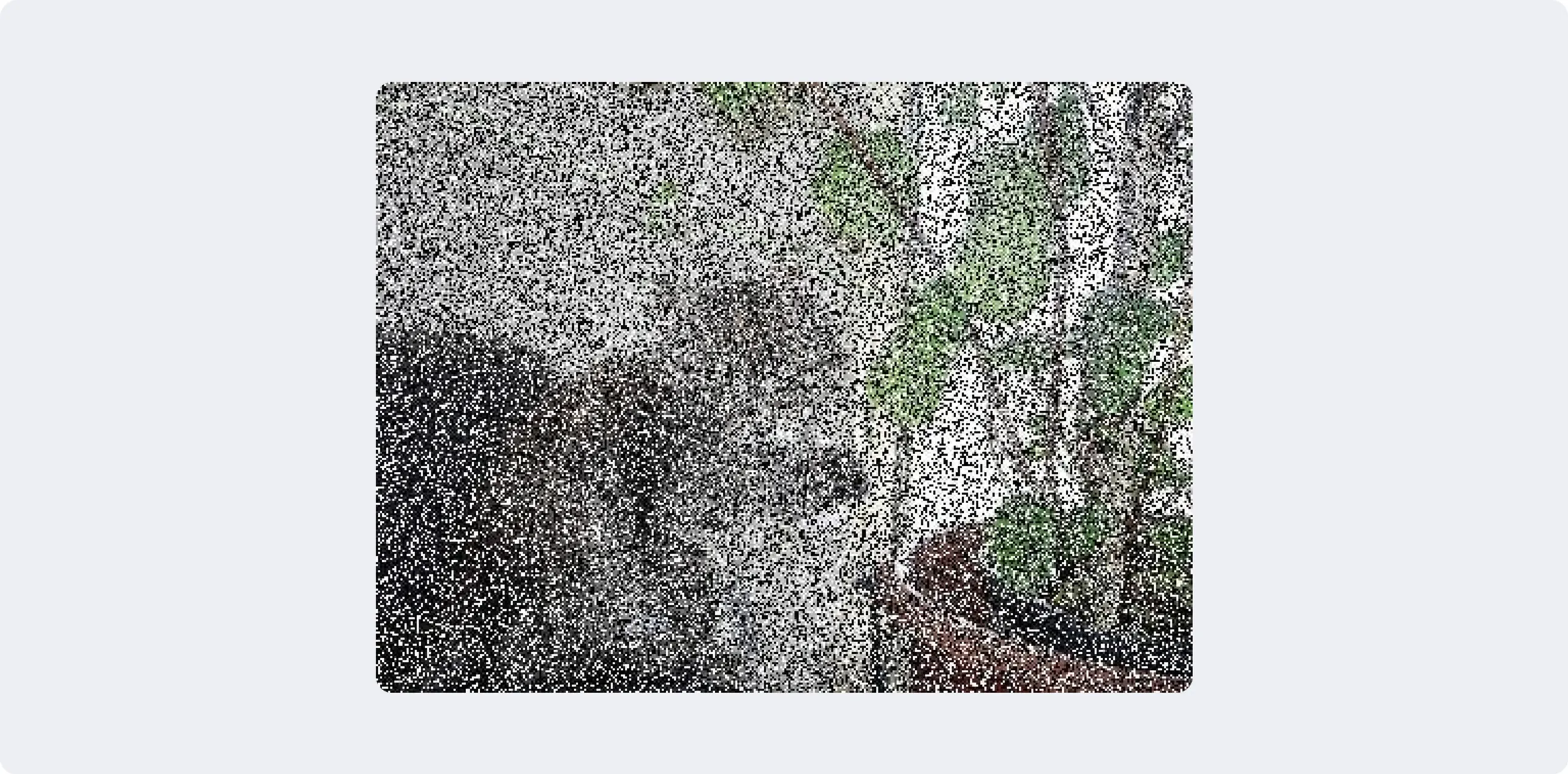

Image with added salt-and-pepper noise, p=0.1 (left) and p=0.4 (right)
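A minimal sketch of salt-and-pepper noise, assuming the image is an 8-bit NumPy array of shape (height, width, 3). The function name `add_salt_and_pepper` and the `salt_ratio` parameter are hypothetical names chosen for this example; this is not necessarily the code used in the experiment.

```python
import numpy as np

def add_salt_and_pepper(image, p, salt_ratio=0.5, rng=None):
    """Return a copy of a uint8 image in which each pixel is replaced,
    with probability p, by white (salt) or black (pepper)."""
    rng = np.random.default_rng() if rng is None else rng
    noisy = image.copy()
    height, width = image.shape[:2]
    # One random draw per pixel decides whether that pixel is corrupted.
    corrupted = rng.random((height, width)) < p
    # A second draw splits corrupted pixels into salt and pepper.
    salt = rng.random((height, width)) < salt_ratio
    noisy[corrupted & salt] = 255    # all channels set to white
    noisy[corrupted & ~salt] = 0     # all channels set to black
    return noisy
```

With `salt_ratio=0.5` the black and white pixels are equally likely, and changing it adjusts the proportion between them.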

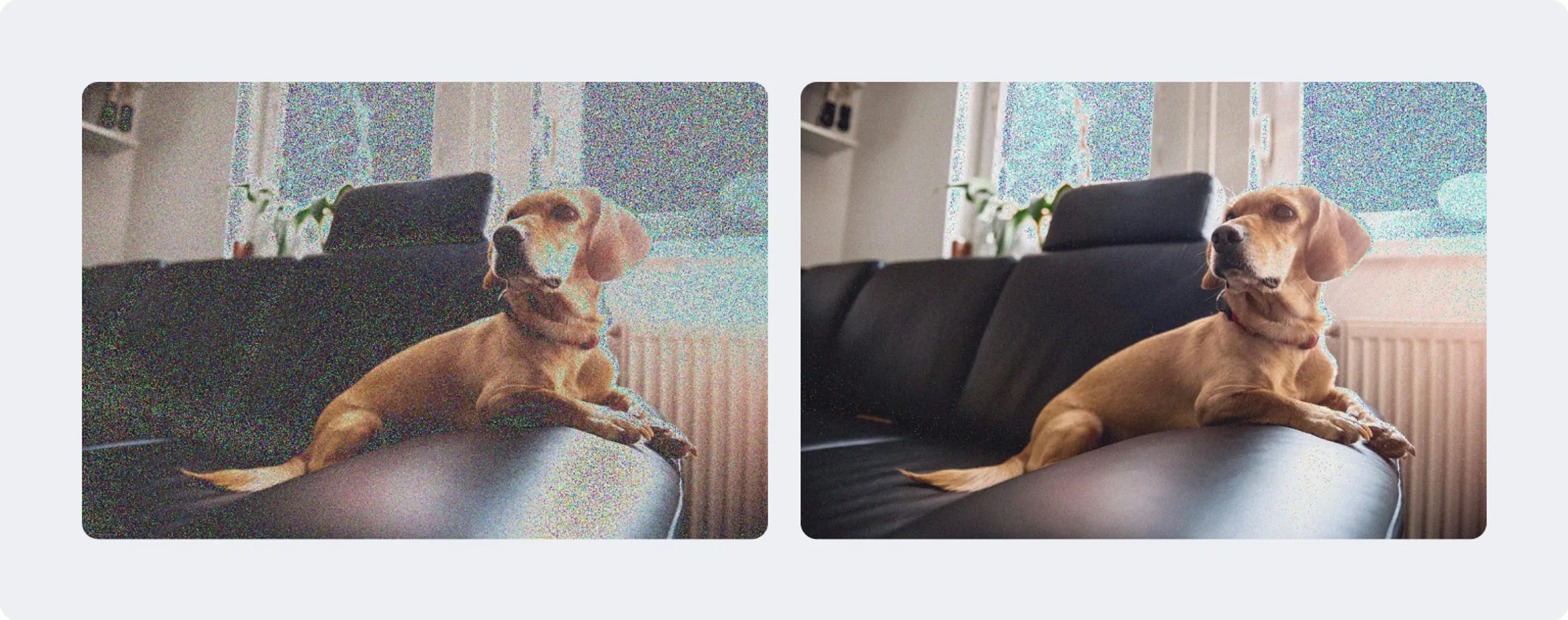

On the other hand, Gaussian noise introduces random variations to each pixel’s value based on a normal distribution. Random values, drawn from a normal distribution with an expected value of 0 and standard deviation σ, are added to each RGB channel. The larger the value of σ, the more the pixel values can be altered, making the image increasingly noisy.

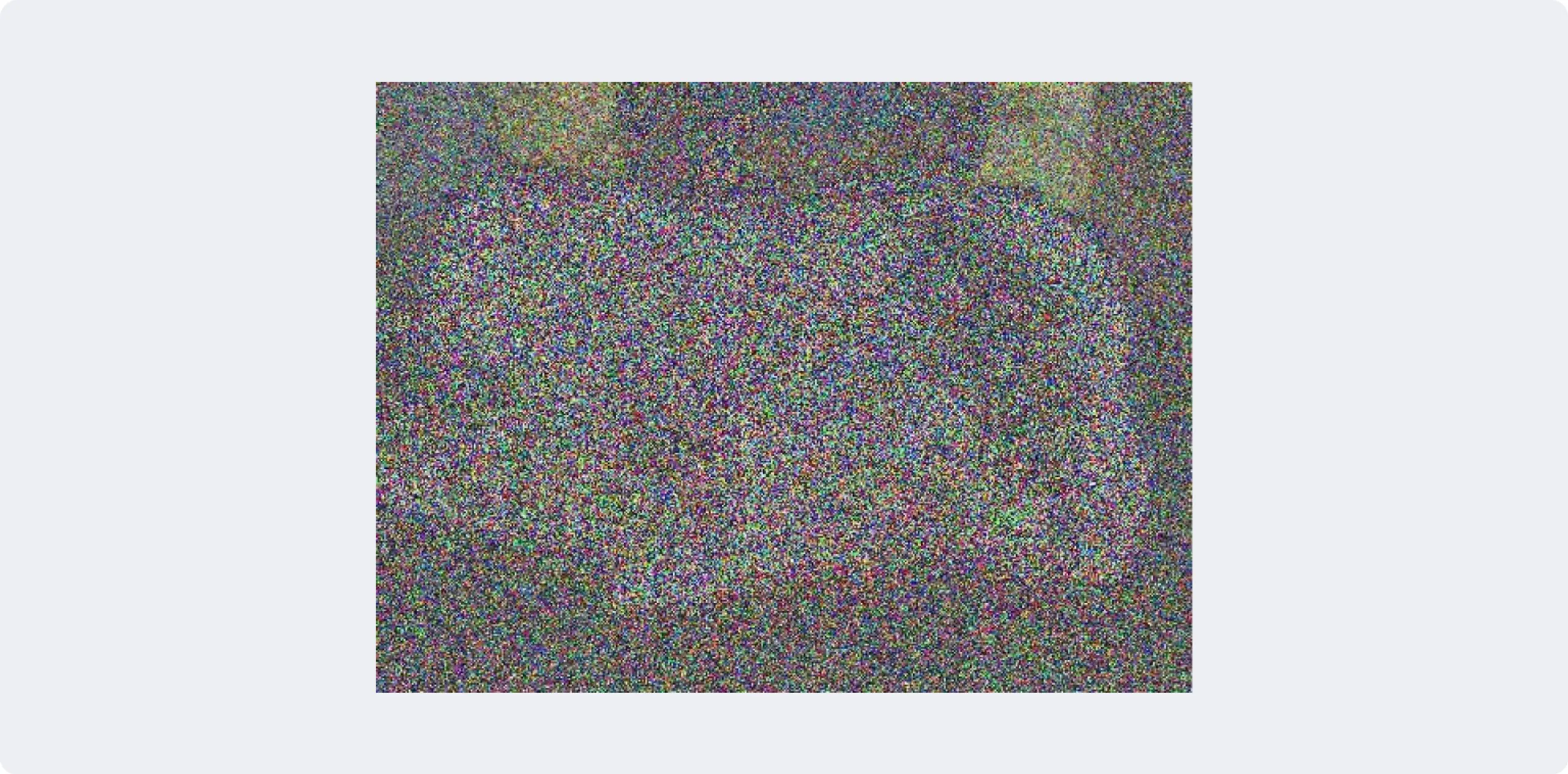

Image with added Gaussian noise, σ=10 (left) and σ=30 (right)
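A minimal sketch of Gaussian noise, again assuming an 8-bit NumPy image. The function name `add_gaussian_noise` is hypothetical, and clipping out-of-range values back into 0..255 is an assumption of this sketch; a pipeline that handles out-of-range values differently will produce visibly different images.

```python
import numpy as np

def add_gaussian_noise(image, sigma, rng=None):
    """Add zero-mean Gaussian noise with standard deviation sigma
    independently to every channel of every pixel."""
    rng = np.random.default_rng() if rng is None else rng
    noise = rng.normal(loc=0.0, scale=sigma, size=image.shape)
    # Work in floating point so the sum cannot overflow the 8-bit range.
    noisy = image.astype(np.float64) + noise
    # Assumption: round and clip to the valid range before converting back.
    return np.clip(np.rint(noisy), 0, 255).astype(np.uint8)
```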

There are noticeable differences between the two types of noise. Salt-and-pepper noise is uniformly distributed throughout the image, while Gaussian noise tends to be more noticeable in the white and black parts of the image, particularly in areas with object contours. Gaussian noise can make white areas appear darker: when a positive value is added to a channel that is already close to the maximum of 255, the sum overflows the 0 to 255 range and, unless it is clipped, wraps around to a small value, which produces a darker pixel. These differences play a critical role in the performance of ML models, which we’ll cover in more detail later.
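The overflow effect can be reproduced in a few lines, assuming pixels are stored as 8-bit unsigned integers (the article implies this but does not state the storage type). The values 250 and 10 are made up for illustration.

```python
import numpy as np

near_white = np.array([250, 250, 250], dtype=np.uint8)
noise = np.array([10, 10, 10], dtype=np.uint8)

# uint8 arithmetic wraps modulo 256: 250 + 10 = 260, which is stored as 4.
# The almost-white pixel becomes almost black.
wrapped = near_white + noise

# Widening to a larger type first and clipping keeps the pixel white instead.
clipped = np.clip(near_white.astype(np.int16) + noise, 0, 255).astype(np.uint8)
```

Whether noisy values wrap or are clipped is a design choice in the noise pipeline, and it changes how bright and dark regions look after noise is added.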

ResNet-50 vs. crowdsourcing

As mentioned, our goal was to identify and compare the limits of human labelers and ML models in terms of classifying images with different noise levels. We wanted to understand the impact of the level and type of noise in the training dataset on the performance of these two groups. To do this, we used a binary classification dataset of cats and dogs with balanced classes. We added salt-and-pepper noise with probability values ranging from 0.1 to 0.9 and Gaussian noise with standard deviations ranging from 20 to 80. In total, we obtained 16 datasets with different levels of noise, including the original without any changes.

The model

We used the ResNet-50 model from Hugging Face pre-trained by Microsoft as our classification model. It is relatively lightweight, which suited our binary classification task, and it was pre-trained on a very large dataset. We fine-tuned the model for 4 epochs on each of the 16 datasets, resulting in 16 different fine-tuned models. Then, we used each fine-tuned model to calculate the accuracy of its predictions on each of the 16 datasets, creating a matrix that shows how the noise in the training sample affects prediction quality on datasets with different noise.
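The cross-evaluation step can be sketched as follows. This is only the bookkeeping around the models: `accuracy_matrix` is a hypothetical helper, and the "models" here are toy threshold rules standing in for the fine-tuned ResNet-50 checkpoints, which are not reproduced.

```python
import numpy as np

def accuracy_matrix(predictors, test_sets):
    """predictors: callables mapping an array of inputs to predicted labels.
    test_sets: list of (inputs, labels) pairs.
    Returns a matrix where entry [i, j] is the accuracy of predictor i
    on test set j."""
    matrix = np.zeros((len(predictors), len(test_sets)))
    for i, predict in enumerate(predictors):
        for j, (inputs, labels) in enumerate(test_sets):
            matrix[i, j] = np.mean(predict(inputs) == labels)
    return matrix

# Toy stand-ins: each "image" is a single number, each "model" a simple rule.
test_sets = [
    (np.array([0.1, 0.9, 0.2, 0.8]), np.array([0, 1, 0, 1])),
    (np.array([0.4, 0.6, 0.7, 0.3]), np.array([0, 1, 0, 1])),
]
predictors = [
    lambda x: (x > 0.5).astype(int),         # threshold rule
    lambda x: np.zeros(len(x), dtype=int),   # always predicts class 0
]
matrix = accuracy_matrix(predictors, test_sets)
```

In the experiment, the rows would correspond to the 16 fine-tuned models and the columns to the 16 datasets.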

In addition, we combined all 16 datasets into one and fine-tuned the model on it for 1 epoch. This gave us a model with exposure to all types and levels of noise, which we expected to perform better than the others. Below, we compare this model with our crowdsourcing results.

Crowdsourcing

We used the Toloka platform to assess human performance. To ensure accuracy and avoid any misunderstanding of the task or potential fraud, we provided training to Tolokers and used quality control rules like majority vote, control tasks, and limiting fast responses, among others. We labeled all 16 datasets, with each image annotated by three different labelers. Finally, we used the Dawid-Skene model to aggregate the responses.
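To give a feel for the aggregation step, here is a compact sketch of the Dawid-Skene idea: estimate a confusion matrix per labeler and a posterior over each image's true label, alternating between the two with EM. The function signature, the smoothing constant, and the fixed iteration count are assumptions of this sketch, and it requires every task to have at least one vote. It is not the implementation used in the experiment.

```python
import numpy as np

def dawid_skene(votes, n_tasks, n_workers, n_classes=2, n_iter=50):
    """votes: iterable of (task, worker, label) integer triples.
    Returns an (n_tasks, n_classes) array of posterior label probabilities."""
    votes = np.asarray(list(votes))
    tasks, workers, labels = votes[:, 0], votes[:, 1], votes[:, 2]

    # Initialise the posteriors with per-task vote shares (soft majority vote).
    post = np.zeros((n_tasks, n_classes))
    np.add.at(post, (tasks, labels), 1.0)
    post /= post.sum(axis=1, keepdims=True)

    for _ in range(n_iter):
        # M-step: class priors and one confusion matrix per worker,
        # indexed as confusion[worker, true_label, observed_label].
        prior = post.mean(axis=0)
        confusion = np.full((n_workers, n_classes, n_classes), 1e-2)  # smoothing
        for c in range(n_classes):
            np.add.at(confusion[:, c, :], (workers, labels), post[tasks, c])
        confusion /= confusion.sum(axis=2, keepdims=True)

        # E-step: posterior over the true label given all votes on a task.
        log_post = np.tile(np.log(prior + 1e-12), (n_tasks, 1))
        for c in range(n_classes):
            np.add.at(log_post[:, c], tasks, np.log(confusion[workers, c, labels]))
        log_post -= log_post.max(axis=1, keepdims=True)
        post = np.exp(log_post)
        post /= post.sum(axis=1, keepdims=True)
    return post
```

Taking `post.argmax(axis=1)` gives the aggregated label per image. Unlike a plain majority vote, votes from labelers whose estimated confusion matrix is uninformative carry less weight.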

Results

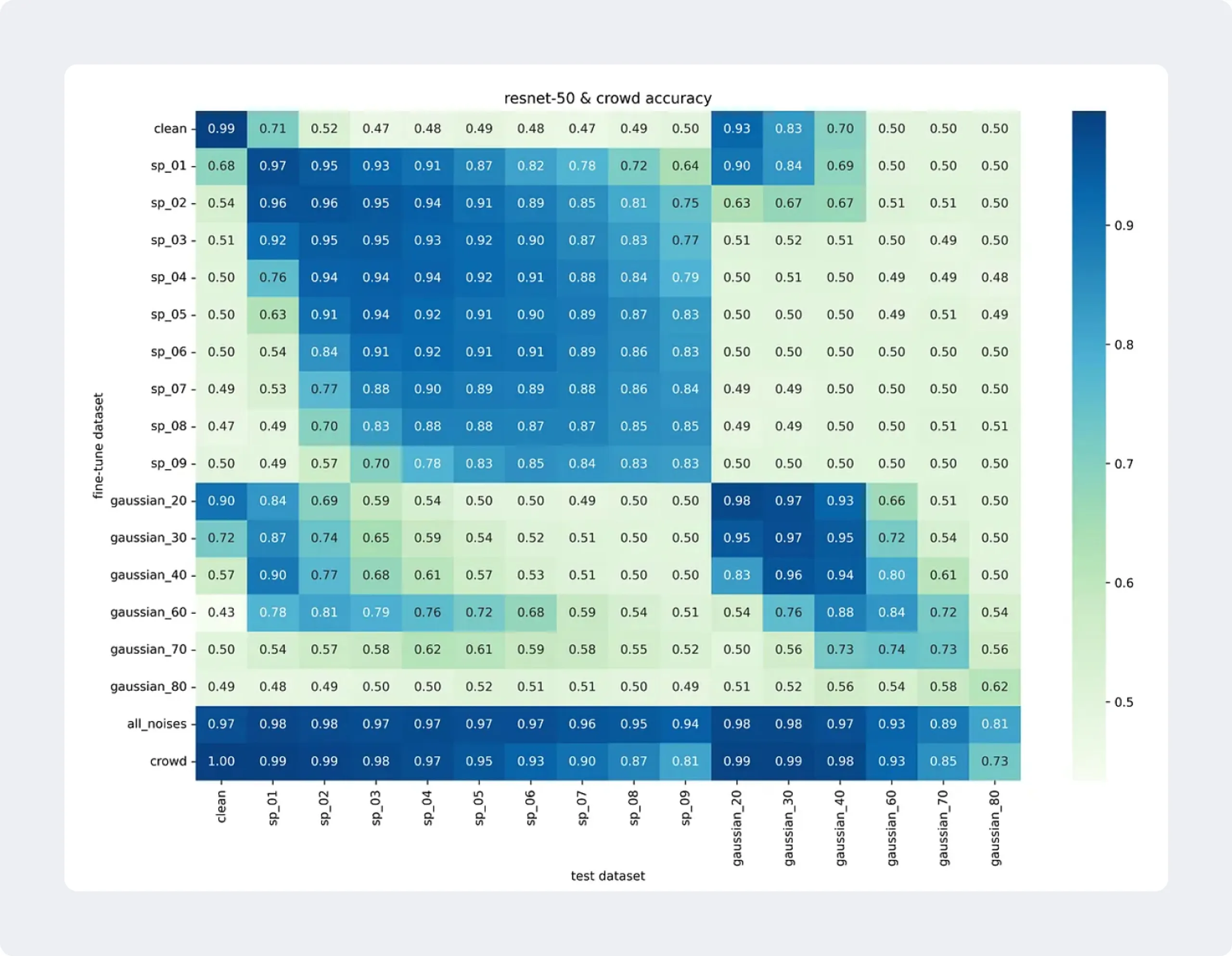

The model's accuracy results are displayed in the accuracy matrix. Take a look at any cell. The label on the left indicates the dataset on which the model was fine-tuned, and the label at the bottom is the dataset on which predictions were made. The cell's value shows the accuracy of the fine-tuned model on the specific test dataset. The bottom two rows, "all_noises" and "crowd", show the results of the ResNet-50 model fine-tuned on all 16 datasets and the crowdsourcing results, respectively.

Accuracy matrix comparing crowdsourcing and ResNet-50

From this figure we can clearly see that the model’s accuracy is lower when it is trained on one noise type and evaluated on another. This is especially evident when comparing performance on salt-and-pepper noise and Gaussian noise. For instance, a model trained on salt-and-pepper noise with p=0.3 performs well on salt-and-pepper noise, but its predictions on images with Gaussian noise with σ=10 are no better than random guessing. This is because Gaussian noise alters images differently from salt-and-pepper noise, as we explained above. Additionally, the first column reveals that the model's performance declines when it makes predictions on a clean dataset after being fine-tuned on a noisy one.

Humans vs machines

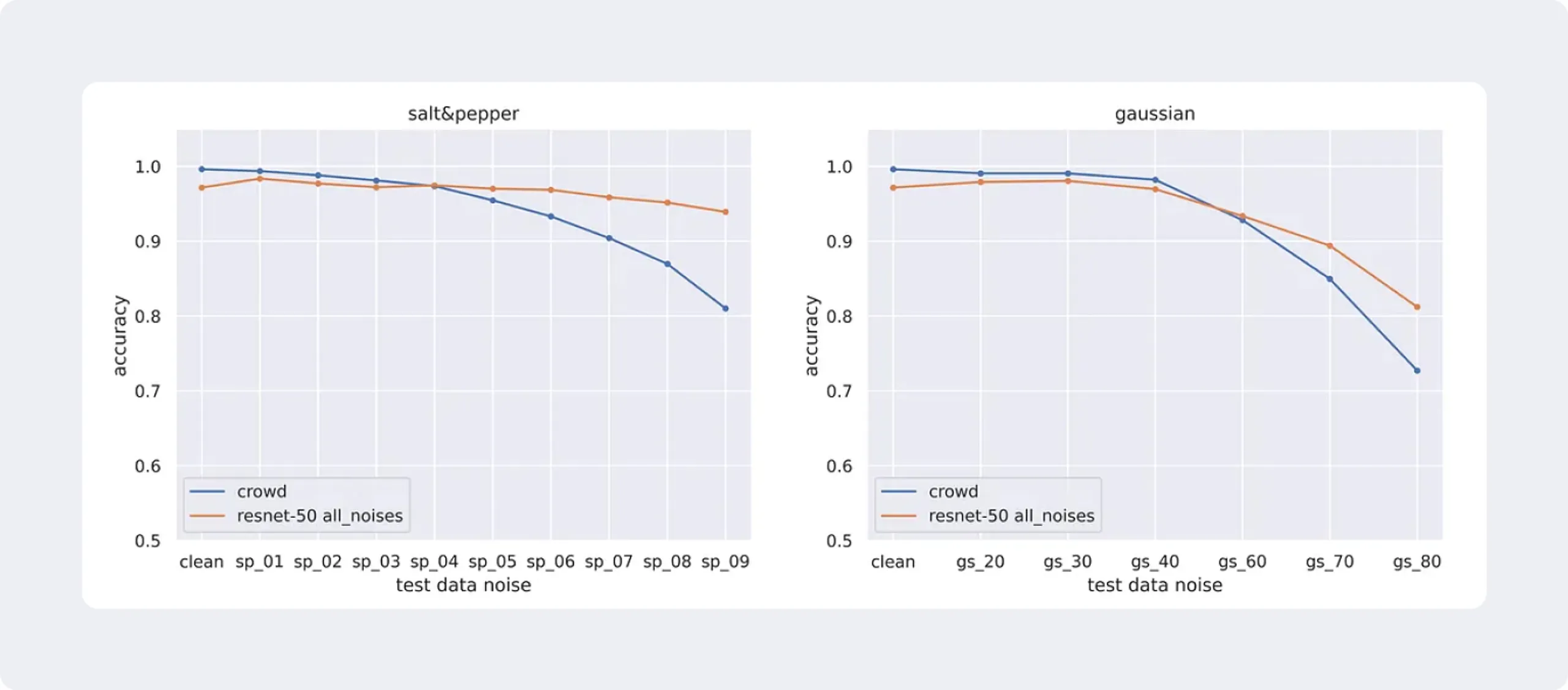

To compare human and model performance, we created another diagram that highlights the two bottom rows of the matrix.

Accuracy of crowdsourcing and ResNet-50 fine-tuned on all noise levels

The two graphs show that the ResNet-50 model fine-tuned on all types of noise outperforms humans on strong noise.

The crowdsourced labelers had nearly perfect accuracy on weak noise, but their performance declined significantly compared to the ML model once salt-and-pepper noise reached p=0.4 and Gaussian noise reached σ=60. Surprisingly, the model was more robust to noise than humans.

How the crowd falls short

To understand why individual labelers made mistakes, we looked at the images that were mislabeled by every person (meaning no one answered correctly).

Can you tell if it's a cat or a dog in the images below? We couldn't either.

Example of image with salt-and-pepper noise with p=0.6 (cat)

Example of image with Gaussian noise with σ=60 (dog)

We concluded that when the noise level is low, humans outperform the ResNet-50 model trained on all noises. However, as the noise level increases, the accuracy of humans drops from 100% on clean data to 81% for the strongest salt-and-pepper noise and 73% for the strongest Gaussian noise. In contrast, the accuracy of the model trained on all types of noise drops less: from 97% to 94% for the strongest salt-and-pepper noise and to 81% for the strongest Gaussian noise.

Making sense of the results

To understand why the model performs better with higher levels of noise, we would need to apply model interpretation techniques. A simple explanation could be the vast number of images (14 million) in the ImageNet dataset, which was used to pre-train the ResNet-50 model. We can also assume that fine-tuning the model with various types and levels of noise allowed it to learn how to recognize patterns in noisy images, since it saw the same images under 16 different noise conditions.

What’s next?

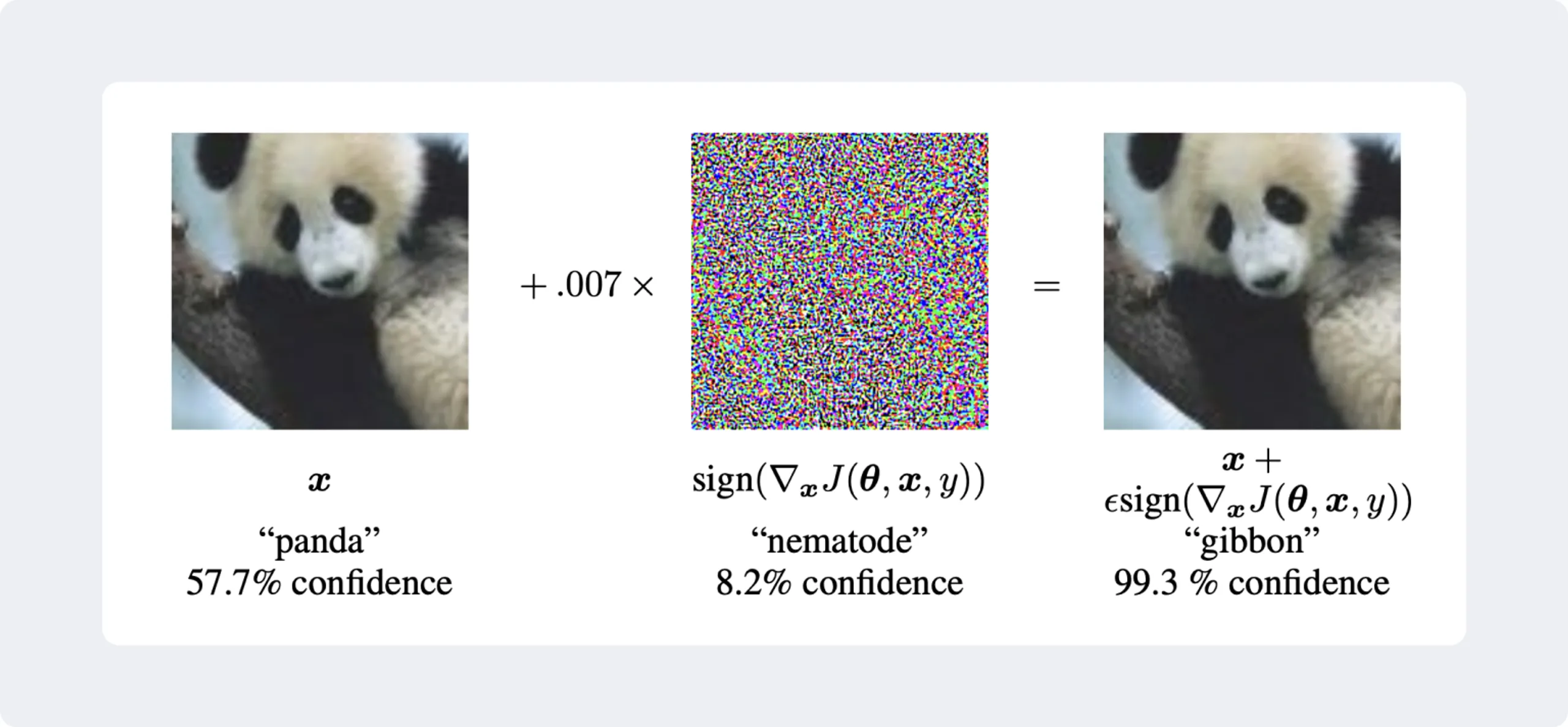

Having considered random noise, we can move on to adversarial attacks: deliberate image changes that can be used to fool computer vision and other ML models. These attacks make small, intentional changes to an image that can’t be detected by the human eye and are designed to cause a model to make incorrect predictions. Adversarial attacks are a real problem in high-stakes areas like medicine or security, where incorrect predictions can have serious consequences.

To understand how adversarial attacks work, imagine a scenario where you show an ML model a picture of a panda. The model can accurately recognize the panda and label it. However, by making small changes to the picture, like adding noise or changing certain pixels, it's possible to create an image that the model now incorrectly labels as a gibbon, for example.

This is the basic idea behind an adversarial attack: to trick the model into making a wrong prediction by making unnoticeable changes to the input.
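One well-known way to construct such a perturbation is the fast gradient sign method (FGSM). The article does not name a specific attack, so FGSM is used here purely as an illustration, and it is applied to a toy logistic-regression model with made-up weights rather than to ResNet-50. All names and values below are hypothetical.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def fgsm_perturb(x, y, w, b, eps):
    """One fast-gradient-sign step against a logistic-regression model.
    x: input vector with values in [0, 1]; y: true label (0 or 1);
    w, b: model weights and bias; eps: maximum change per feature."""
    p = sigmoid(w @ x + b)
    # Gradient of the cross-entropy loss with respect to the input.
    grad_x = (p - y) * w
    # Shift every feature by eps in the direction that increases the loss.
    return np.clip(x + eps * np.sign(grad_x), 0.0, 1.0)

# Toy model with 1000 input features and alternating weights (made up).
w = np.tile([1.0, -1.0], 500)
b = 0.0
# An input the model confidently assigns to class 1.
x = 0.5 + 0.01 * np.sign(w)
x_adv = fgsm_perturb(x, y=1, w=w, b=b, eps=0.02)

p_clean = sigmoid(w @ x + b)       # close to 1: predicted class 1
p_adv = sigmoid(w @ x_adv + b)     # close to 0: predicted class 0
```

No feature changes by more than 0.02 on a 0 to 1 scale, yet the prediction flips, because many tiny changes aligned with the gradient add up across a high-dimensional input.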

It’s crucial to understand how these attacks work and how to defend against them. Some approaches include training models on adversarial examples to make them more robust, or using techniques such as image denoising to remove adversarial perturbations, the small changes to an input that are deliberately designed to trick an ML system into mistaking it for something else. As the use of ML models continues to grow, it's important to stay alert to the threat of adversarial attacks and work to reduce their impact.

One way to help ML models cope with adversarial attacks is to involve crowdsourcing. Humans can identify patterns and contextual information that a model may miss and provide input that improves the model's accuracy and robustness. This approach, known as human-in-the-loop, can help mitigate the impact of adversarial attacks on machine learning models. We’ll go into more detail on this in one of our next posts. Stay tuned.