Advantages of PEFT for your LLM application

Transformer-based large language models keep getting more capable and bigger, with parameter counts reaching billions or even trillions. At that scale, standard fine-tuning becomes costly and resource-intensive.

Additionally, fine-tuned models pose storage and deployment challenges that cannot be ignored. With full fine-tuning, each downstream task that the LLM performs, such as question answering, machine translation, or text generation, requires its own copy of the model. Keeping and running these models gets really costly, whether you use your own infrastructure or turn to a cloud provider, as fine-tuned models tend to be the same size as, or even larger than, the initial pre-trained model.

These kinds of issues made data scientists wonder whether a model could be trained for a specific purpose faster, and with less concern about available storage and processing memory. The answer is a group of methods called Parameter-Efficient Fine-Tuning, or PEFT for short. In this overview we discuss what sets these methods apart and how they make fine-tuning easier.

What is PEFT?

Parameter-efficient fine-tuning refers to the method in natural language processing that allows adapting a pre-trained machine learning model to a new task or domain while minimizing the number of parameters that need to be updated during the fine-tuning process.

PEFT is commonly used in transfer learning, where a model trained on a large dataset is fine-tuned on a much smaller, task-specific dataset. Instead of training the entire model from scratch, which would require a large amount of labeled data as well as high computational and storage costs, only a subset of the model’s parameters is updated during parameter-efficient fine-tuning (PEFT).

Parameter-efficient fine-tuning typically involves freezing some of the layers in the pre-trained model, especially those that capture generic features that are likely to be useful across tasks, and only updating the parameters in the remaining layers. By doing so, the model retains the knowledge learned from the pre-training stage while quickly adapting to the specifics of the new task.

The proper balance between layer freezing and parameter updates appears to play a decisive role in effective parameter fine-tuning. While too few updates may prevent the model from sufficiently adapting to a new task, updating too much may lead to overfitting or loss of valuable prior knowledge. For this reason, specialists often experiment with different configurations to find the best combination for a particular task and dataset.
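To make the trade-off concrete, here is a minimal sketch that counts how many parameters stay trainable under different freezing choices. The layer names and sizes are hypothetical and chosen only for illustration; they do not describe any real model.

```python
# Hypothetical per-layer parameter counts (illustrative numbers only).
layer_params = {
    "embedding": 1_000_000,
    "block_1": 500_000,
    "block_2": 500_000,
    "block_3": 500_000,
    "output_head": 100_000,
}


def trainable_fraction(layer_params, frozen):
    """Return (trainable, total, fraction) for a given set of frozen layer names."""
    total = sum(layer_params.values())
    trainable = sum(n for name, n in layer_params.items() if name not in frozen)
    return trainable, total, trainable / total


# Compare three configurations, from full fine-tuning to training only the head.
for frozen in (
    set(),
    {"embedding", "block_1"},
    {"embedding", "block_1", "block_2", "block_3"},
):
    trainable, total, fraction = trainable_fraction(layer_params, frozen)
    print(f"frozen={sorted(frozen)} -> {trainable:,} of {total:,} trainable ({fraction:.1%})")
```

In practice, each configuration would be trained and compared on a validation set. The count only shows how quickly the trainable share shrinks as more layers are frozen.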

Parameter-efficient fine-tuning is most useful in the field of natural language processing, where the latest large language models (LLMs) keep growing in size and capability. Larger models occupy more storage space and consume more computational power for training and customization. Therefore, numerous methods that ease the task of adapting pre-trained models have started to emerge as an alternative to conventional fine-tuning.

How Is PEFT Different from Standard Fine-Tuning?

Standard fine-tuning

Without a doubt, fine-tuning is a tremendously potent tool for achieving high-quality text generation. It adapts a pre-trained neural network to a specific task or goal, improving its performance on that task. It may also involve a small change to the pre-trained model's architecture, such as adding a task-specific output layer.

LLMs are deep neural networks that consist of many layers, with parameters amounting to billions of weights. These layers are often referred to as transformer layers, since the transformer is a deep neural network architecture that copes well with natural language processing tasks.

During a standard full fine-tuning, all LLM parameters are adjusted using task-specific data. The entire architecture of the pre-trained language model is retained, and the weights of each layer are updated using backpropagation and gradient descent algorithms.

Full fine-tuning allows a model to use the knowledge gained during pre-training while tuning its parameters to the specific requirements of its follow-up objectives. In other words, the knowledge accumulated earlier is modified for a new LLM task. Because the weights are overwritten, their previous values cannot be recovered from the fine-tuned model.

The only way to restore the weights is to store the initial pre-trained language model separately. This requires additional storage, even if fine-tuning goes smoothly and you never need to restore the original parameter values.

As noted earlier, the process of fine-tuning in the full data setting can be computationally and memory intensive, especially for large-scale models with billions of parameters. This poses several difficulties that have to be solved using PEFT methods.

Full Fine-Tuning Issues

Parameter Efficient Fine Tuning (PEFT) has become an object of study for researchers due to the challenges encountered when using full fine-tuning of Large Language Models (LLMs). However, despite these challenges, traditional fine-tuning remains a widely used and effective approach for adapting pre-trained language models to specific tasks.

Storage and Memory Usage

One of the major issues posed by full fine-tuning is its high demand for computational resources. Firstly, full fine-tuning has a large storage overhead because, as stated earlier, all model parameters in all layers are altered to accomplish a particular task in the best possible way. To see why this happens, we need to explain the backpropagation process that is applied during fine-tuning.

Backpropagation and Gradient Calculation

Backpropagation is the counterpart of forward propagation, the process by which a neural network makes a prediction, and it cannot happen without it. In forward propagation, the input data is passed through each layer of the network, starting at the input layer. The final output layer produces the outcome, called the prediction.

The final step in the forward propagation is the evaluation of the predicted output value relative to the expected output value (ground truth). These two results are compared and the difference is calculated, which produces the error value. The evaluation between the predicted output and the expected output is accomplished through a loss function.
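As a minimal sketch of forward propagation followed by a loss calculation, the snippet below uses a single linear layer and a squared-error loss. The weights, inputs, and the choice of loss function are assumptions made for illustration; real LLMs stack many layers and typically use a cross-entropy loss.

```python
def forward(weights, bias, inputs):
    """Single linear layer: weighted sum of the inputs plus a bias."""
    return sum(w * x for w, x in zip(weights, inputs)) + bias


def squared_error(prediction, target):
    """Loss for one example: squared difference from the ground truth."""
    return (prediction - target) ** 2


weights = [0.5, -1.0]   # illustrative values, not learned
bias = 0.25
inputs = [2.0, 1.0]
target = 1.0            # expected output (ground truth)

prediction = forward(weights, bias, inputs)   # 0.5*2.0 + (-1.0)*1.0 + 0.25 = 0.25
loss = squared_error(prediction, target)      # (0.25 - 1.0)**2 = 0.5625
print(prediction, loss)
```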

From the gradient of the loss function, the training algorithm determines how much each parameter should be adjusted to bring the output closer to the expected value. The loss increases or decreases depending on how the parameters change, and the gradient gives the direction and rate of that change. The gradient is calculated via backpropagation, also known as backward propagation of errors. It is the standard way to train and fine-tune multilayer neural networks, including large pre-trained models.

So, the gradient of the loss function, which is used to update the weights, is calculated through backpropagation. The calculation starts from the error value obtained in the last step of forward propagation.

Backpropagation attempts to minimize the loss function by adjusting the weights of the network. The degree of adjustment is determined by the gradients of the loss function. The gradient indicates the extent to which the parameter needs to be modified to lessen the loss. This process is called gradient descent.

In the backpropagation process, gradients are calculated using the chain rule, moving backward through the layers of the neural network. During gradient descent, they are used to optimize the model parameters by moving toward a minimum of the loss function. The lower the value of the loss function, the more likely the model is to provide accurate outputs. In the gradient descent process, the parameters (or weights) of the model are adjusted in a way that reduces the loss. It is an iterative process, that is, the changes occur gradually over many steps and several epochs.
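The loop described above can be sketched for a one-parameter-pair model, `pred = w * x + b`, trained with a mean squared-error loss. The data, learning rate, and number of epochs are illustrative assumptions. The gradients are written out by hand with the chain rule, whereas deep learning frameworks compute them automatically.

```python
def train(xs, ys, lr=0.05, epochs=500):
    """Fit pred = w * x + b by gradient descent on the mean squared error."""
    w, b = 0.0, 0.0
    losses = []
    n = len(xs)
    for _ in range(epochs):
        grad_w = grad_b = total_loss = 0.0
        for x, y in zip(xs, ys):
            pred = w * x + b            # forward propagation
            err = pred - y              # difference from the ground truth
            total_loss += err ** 2
            # Chain rule: dL/dw = dL/dpred * dpred/dw = 2*err * x
            grad_w += 2 * err * x
            # Chain rule: dL/db = dL/dpred * dpred/db = 2*err * 1
            grad_b += 2 * err
        # Gradient descent step: move against the averaged gradient.
        w -= lr * grad_w / n
        b -= lr * grad_b / n
        losses.append(total_loss / n)
    return w, b, losses


xs = [0.0, 1.0, 2.0, 3.0]
ys = [1.0, 3.0, 5.0, 7.0]   # generated from y = 2x + 1
w, b, losses = train(xs, ys)
print(f"w={w:.3f} b={b:.3f} first loss={losses[0]:.3f} last loss={losses[-1]:.6f}")
```

The learned `w` and `b` approach 2 and 1, and the loss shrinks from epoch to epoch, which is the iterative behaviour described above.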

Storage of Gradients

Fine-tuning requires all the processes described above to be executed repeatedly for each parameter. Since modern LLMs have millions or even billions of parameters, it is clear why a huge amount of GPU memory is required to process all this data. In addition to the memory required to perform these mathematical calculations, all copies of the LLM have to be stored somewhere.

Besides the fact that you need to store the entire model with a multitude of weights, and probably a copy of an initial pre-trained LLM, the gradients computed during backpropagation need to be stored for each parameter to perform gradient descent updates. This means that for every parameter in the LLM, a corresponding gradient value needs to be stored in memory.

Since LLMs often have so many parameters, storing gradients for all of them can consume a significant amount of memory. The requirement for a variety of storage and processing hardware for fine-tuning significantly increases the cost of the entire project for customizing the LLM for a particular purpose. Apart from gradients, the fine-tuning process generates other data that must be stored and processed.

What Else Does Fine-Tuning Require Memory For?

In addition to parameter and gradient storage, memory is required for storing the intermediate activations during forward and backward propagation through the network. These activations are necessary for computing gradients during backpropagation and updating parameters during optimization. Storage resources are also required for data stored temporarily during each training iteration.

Optimizers keep additional information while training a neural network. This information is known as the optimizer state and includes the statistics the optimizer uses to update model parameters efficiently, for example the running averages of gradients kept by optimizers such as Adam. This data also has to be held in memory throughout fine-tuning.
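A back-of-the-envelope estimate shows how these pieces add up. The sketch below assumes 32-bit values (4 bytes each) and an Adam-style optimizer that keeps two state values per parameter. It deliberately leaves out activations and temporary buffers, which depend on batch size and sequence length. The 7-billion-parameter model is a hypothetical example.

```python
def full_finetune_memory_gb(num_params, bytes_per_value=4, optimizer_states_per_param=2):
    """Rough memory for weights, gradients and optimizer state, in GB (10**9 bytes).

    Assumptions: every value uses bytes_per_value bytes and the optimizer keeps
    optimizer_states_per_param extra values per parameter. Activations are excluded.
    """
    gb = 10 ** 9
    weights = num_params * bytes_per_value / gb
    gradients = num_params * bytes_per_value / gb          # one gradient per parameter
    optimizer = num_params * bytes_per_value * optimizer_states_per_param / gb
    return {
        "weights": weights,
        "gradients": gradients,
        "optimizer": optimizer,
        "total": weights + gradients + optimizer,
    }


estimate = full_finetune_memory_gb(7_000_000_000)   # hypothetical 7B-parameter model
for name, value in estimate.items():
    print(f"{name}: {value:.0f} GB")
```

Under these assumptions the weights alone take 28 GB, and gradients plus optimizer state bring the total to 112 GB before any activations are counted.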

Moreover, fine-tuning for a variety of tasks requires storing multiple versions of the model, each suited for a particular type of task, which increases both memory and computational costs. To fine-tune a model for multiple tasks, engineers often create separate copies or versions of a pre-trained model, each containing identical parameters. These copies are then tuned independently for each task, resulting in multiple instances of the model being stored in memory.

In an effort to mitigate these issues, researchers and practitioners are exploring approaches such as parameter-efficient fine-tuning. Its focus is to exploit common knowledge across tasks and cut down excess consumption of memory and computational resources while fine-tuning models for different tasks.

Catastrophic Forgetting

Full fine-tuning is susceptible to the catastrophic forgetting phenomenon, where the model's previously learned knowledge is forgotten due to new task-specific information being introduced. This can result in losing valuable prior knowledge encoded in the pre-trained language model.

During full fine-tuning, all layers of the pre-trained LLM are usually tuned to maximize the performance on the target task. While this allows more flexibility for the model to adapt to new tasks, it also increases the risk of catastrophic forgetting: representations learned during pre-training are at risk of being overwritten or disrupted during fine-tuning, which would degrade the performance of tasks that rely on retained knowledge.

Key Differences Between PEFT and Full Fine-tuning

The Focus of the Two Approaches

The primary goal of full fine-tuning is to adapt the pre-trained model to perform well on the target task by updating its parameters based on task-specific data, no matter how computationally intensive it is. While PEFT strives to achieve performance comparable to or even better than that of a fully fine-tuned model, it also focuses on making the fine-tuning process more memory or computationally efficient.

Without a doubt, PEFT is particularly beneficial in tasks with limited resources available, such as storage, computational, as well as financial resources. It can be implemented in a variety of contexts where fine-tuning of large language models is needed.

How Layers Are Modified During Fine-tuning and PEFT

Both fine-tuning and PEFT involve modifying the layers of a pre-trained LLM. In traditional fine-tuning, all layers of the pre-trained large models are typically modified to some extent. Instead of fine-tuning all layers simultaneously, PEFT may selectively fine-tune only certain layers, typically those closer to the output layer. This reduces the number of parameters that need to be updated, thus reducing memory requirements.

Labeled Data Requirements

While PEFT focuses on reducing memory and computational costs during fine-tuning, it does not inherently alter the requirements or characteristics of the labeled data used for training. The labeled data that PEFT uses is substantially similar to that used in traditional fine-tuning and depends on the specific task or domain involved. Typically, labeled data consists of input-output pairs where the input is a data sample (e.g. text, image, audio) and the output is the corresponding target or label associated with that sample.

The process of acquiring labeled data for PEFT is similar to traditional fine-tuning approaches and depends on the specific requirements of the target objective. This may involve annotation by expert annotators, crowdsourcing platforms, using synthetic data or leveraging existing labeled datasets available in the public domain.

No matter which method of fine-tuning is employed, whether it's the traditional approach or Parameter-Efficient Fine-Tuning (PEFT), having access to quality data remains paramount. Data is the backbone of the fine-tuning process, providing the necessary information for the model to adapt and excel in new tasks or domains. Without sufficient and relevant data, fine-tuning efforts may not yield the desired performance improvements.

How PEFT works

Parameter-efficient fine-tuning works by leveraging the knowledge encoded in a pre-trained model and adapting it to a new task or domain with minimal updates to the model parameters. Here's a step-by-step overview of how it typically works:

Pre-training. A large transformer model for natural language processing (NLP) is trained on a vast dataset with a general objective rather than for the final task. During pre-training, it learns to extract meaningful features from the input data and encode them into its parameters. This pre-training phase usually requires significant computational resources and large datasets;

Fine-tuning. After pre-training, the model is fine-tuned on a smaller, task-specific dataset. This dataset might have different training data or specific characteristics compared to the dataset used in pre-training. Fine-tuning aims to adapt the pre-trained model to the nuances of the new task while retaining the general knowledge learned during pre-training;

Freezing Layers. In parameter-efficient fine-tuning, some layers of the pre-trained model are frozen, meaning their parameters are not updated during fine-tuning. These frozen layers typically include the earlier layers of the model, which capture low-level features that are likely to be useful across tasks. By freezing these layers, the model retains the general knowledge learned during pre-training while allowing the later layers to adapt to the new task;

Updating Parameters. Only a subset of the model's parameters are updated during fine-tuning. These parameters belong to the unfrozen layers, which are typically closer to the output layer of the model. During fine-tuning, the model is trained on the task-specific dataset using techniques such as gradient descent or its variants to update these parameters while minimizing a loss function specific to the task;

Optimization. Hyperparameters, such as learning rate, batch size, and regularization strength, are tuned to ensure efficient fine-tuning. Unlike model parameters, hyperparameters are not learned by the model from the training data; they are usually set by humans. They play a crucial role in how quickly the model weights reach a state that yields accurate predictions, and in the overall performance of the fine-tuned model;

Evaluation. The fine-tuned model is evaluated on a validation dataset to assess its performance. Depending on the results, adjustments may be made to the fine-tuning process, such as modifying the architecture, changing hyperparameters, or adjusting the balance between frozen and unfrozen layers;

Deployment. Once satisfactory performance is achieved, the fine-tuned model can be deployed to analyze new data, make predictions, or perform other tasks specific to the target application.
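The "Freezing Layers" and "Updating Parameters" steps above can be sketched with a toy two-layer model in which each layer is a single weight. The parameter names, data, and learning rate are hypothetical. The point is only that gradients are computed and applied for the unfrozen parameter, while the frozen one keeps its pre-trained value.

```python
# Toy "pre-trained" model: pred = w2 * (w1 * x). Values are illustrative only.
params = {"w1": 1.5, "w2": 0.0}   # w1: earlier layer, w2: layer closest to the output
trainable = {"w2"}                # w1 is frozen


def forward(params, x):
    hidden = params["w1"] * x
    return hidden, params["w2"] * hidden


def train_step(params, trainable, x, y, lr=0.1):
    """One gradient descent step that only touches trainable parameters."""
    hidden, pred = forward(params, x)
    err = pred - y
    grads = {}
    if "w2" in trainable:
        grads["w2"] = 2 * err * hidden             # dL/dw2
    if "w1" in trainable:
        grads["w1"] = 2 * err * params["w2"] * x   # dL/dw1 (skipped while frozen)
    for name, grad in grads.items():
        params[name] -= lr * grad
    return err ** 2


data = [(1.0, 3.0), (2.0, 6.0)]   # task-specific data following y = 3x
for _ in range(100):
    for x, y in data:
        train_step(params, trainable, x, y)

print(params)   # w1 is unchanged; w2 has moved toward 2.0 so that w1 * w2 is close to 3
```

Because no gradient is computed or stored for `w1`, the frozen layer costs nothing beyond its own weights during training, which is where the memory savings come from.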

Benefits of PEFT

The benefits of Parameter Efficient Fine Tuning (PEFT) address the downsides of standard fine-tuning. Each PEFT method has its own particular advantages, but here we will look at the key strengths shared by all of its variants.

Reduced Storage Requirements

Storage requirements refer to the amount of memory needed to store the model parameters, gradients, and other intermediate data during training and inference.

During full fine-tuning, a full copy of the LLM is created for each task. Parameter-efficient fine-tuning instead combines a small set of new weights with the original weights of the LLM. The layers made up of these new weights can be swapped for another set, so the model can quickly change its purpose. This means that there is no need to store several fully fine-tuned models simultaneously for various downstream tasks.
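A rough calculation illustrates the difference. All numbers below are hypothetical: a 7-billion-parameter base model, five tasks, 10 million task-specific weights per task, and 2 bytes per stored value.

```python
def storage_gb(base_params, num_tasks, task_params=None, bytes_per_value=2):
    """Storage needed to serve num_tasks tasks, in GB (10**9 bytes).

    task_params=None models full fine-tuning: one full model copy per task.
    Otherwise: one shared base model plus task_params extra weights per task.
    """
    if task_params is None:
        total_values = base_params * num_tasks
    else:
        total_values = base_params + task_params * num_tasks
    return total_values * bytes_per_value / 10 ** 9


full = storage_gb(7_000_000_000, num_tasks=5)
peft = storage_gb(7_000_000_000, num_tasks=5, task_params=10_000_000)
print(f"full fine-tuning: {full:.1f} GB, PEFT: {peft:.1f} GB")
```

With these assumed figures, five full copies need 70 GB, while one shared base model plus five small weight sets needs about 14.1 GB.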

In addition, since fewer parameters are updated during PEFT, less storage is required for keeping temporary files, gradients, and other data to update these parameters. With fewer parameters to update and a smaller gradient size, the overall memory usage during the fine-tuning process is reduced.

Lower Computational Complexity

Computational complexity refers to the amount of computational resources, such as processor time and CPU or GPU memory, required to perform the mathematical operations involved in tuning a large model. In PEFT, computational complexity is reduced compared to full fine-tuning approaches by updating only a subset of model parameters.

Updating only a subset of weights significantly reduces the number of computations required in the training process, which means faster results and a lower overall computational cost. This strategy of selective updating lets PEFT achieve comparable performance on target tasks while using fewer computational resources than full fine-tuning approaches that update all model parameters.

Cost Efficiency

By reducing the memory and computational requirements of fine-tuning, PEFT helps lower the overall costs associated with model training, deployment, and maintenance. Reduced computational complexity results in shorter training time and lower resource consumption. This leads to lower hardware requirements and lower training costs, making it more affordable to fine-tune models on large datasets or for multiple tasks.

Following fine-tuning, deploying models in production environments frequently demands additional computational resources, infrastructure, and upkeep. Given that PEFT creates models with greater memory efficiency, it becomes more cost-effective to deploy fine-tuned models in production. Lower memory requirements also mean lower infrastructure costs to host and maintain models.

The cost-effectiveness of PEFT makes fine-tuning affordable for organizations with limited budgets or resources. Small businesses, startups, and academic researchers can use PEFT to develop and deploy intelligent applications without significant upfront investment in infrastructure or computing resources.

Catastrophic Forgetting Mitigation

PEFT can help mitigate catastrophic forgetting during the fine-tuning of large language models. Since most of the layers are frozen during the additional training, the weights that encode previously learned knowledge do not change, which makes forgetting far less likely.

Efficient Checkpoints

PEFT generates checkpoints that are significantly smaller in size compared to those generated through full fine-tuning. These checkpoints contain the updated parameters and data necessary for deploying the fine-tuned model, allowing for easy storage, transfer, and sharing of model checkpoints without excessive memory requirements.

Checkpoints allow saving the current state of a model, including its parameters (weights) and other training-related information, to internal or external storage. This allows resuming training from the same point or loading the model to make predictions later without having to re-train it from scratch.
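The idea of a small checkpoint can be sketched as follows: only the task-specific weights are written to disk, and they are merged with the frozen base weights at load time. The weight names, values, and the JSON file format are assumptions for illustration. Real frameworks use their own binary checkpoint formats.

```python
import json
import os
import tempfile

# Hypothetical weights: the base model is frozen, only "adapter" was trained.
base_weights = {"layer_1": [0.1, 0.2, 0.3], "layer_2": [0.4, 0.5, 0.6]}
task_weights = {"adapter": [0.01, -0.02]}


def save_checkpoint(path, weights):
    """Write only the given (task-specific) weights to disk."""
    with open(path, "w") as f:
        json.dump(weights, f)


def load_model(base, checkpoint_path):
    """Rebuild the task model from the shared base plus a small checkpoint."""
    with open(checkpoint_path) as f:
        task = json.load(f)
    return {**base, **task}


with tempfile.TemporaryDirectory() as tmp:
    path = os.path.join(tmp, "task_a.json")   # hypothetical file name
    save_checkpoint(path, task_weights)
    checkpoint_size = os.path.getsize(path)
    model = load_model(base_weights, path)

print(f"checkpoint size: {checkpoint_size} bytes, model keys: {sorted(model)}")
```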

Model's Performance Comparable to Full Fine-tuning

Pre-trained language models that have been tuned with a PEFT method demonstrate performance comparable to full fine-tuning. This is the most critical advantage of PEFT, and it is decisive when choosing an approach for additional training of an LLM for specific downstream tasks. The PEFT model retains the high-quality representations learned during pre-training while adapting effectively to the target task, without sacrificing model performance.

Methods of PEFT

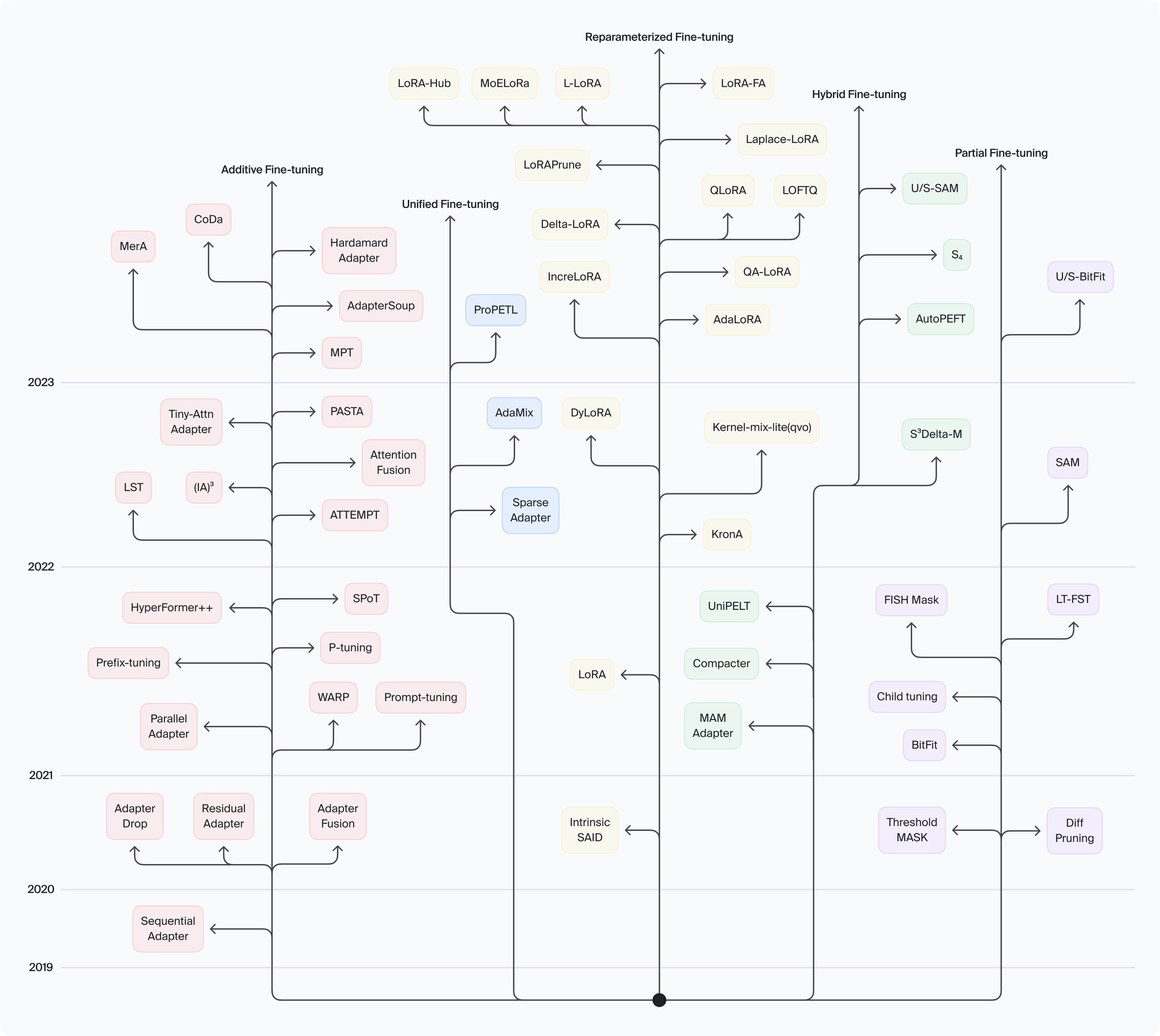

Development of PEFT methods in recent years

Parameter-efficient fine-tuning methods are extremely numerous, as shown in the picture above. We will examine a few of the most commonly supported PEFT methods.

LoRA (Low-Rank adaptation)

The idea behind low-rank adaptation is to approximate the change in the weights of the pre-trained model with lower-rank matrices. This reduces the number of parameters that need to be fine-tuned while the pre-trained model keeps all the information it already holds. By training only the small set of parameters in these lower-rank matrices, LoRA reduces computational and memory overhead compared to traditional fine-tuning methods.

It turns out that, in order to adapt large models to their tasks, it’s possible to train not the weights of the base model itself, but the changes in the weights. The parameters of the original model remain unchanged, and a pair of small lower-rank matrices is added alongside selected weight matrices of the neural network. Their product describes only the changes introduced into the model and does not alter the original parameters.

LoRA offers the benefits of reducing the number of trainable parameters and speeding up the training process. The reason for this is that the conventional fully connected layers are left frozen, and only the low-rank matrices added next to them are trained.

Fully-connected layers contain a large number of parameters, and modifying them can lead to model overfitting or catastrophic forgetting, as well as high computational complexity. The low-rank matrices, on the other hand, have a much smaller number of parameters, which reduces the mentioned risks and speeds up the process of training.
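Here is a minimal sketch of the idea with plain Python lists: a frozen weight matrix `W` plus the product of two small trainable matrices `B` and `A`. The matrix sizes and values are illustrative assumptions. Initialising `B` to zeros, so that training starts from the unmodified model, follows the convention described in the LoRA paper.

```python
def matmul(a, b):
    """Multiply two matrices given as lists of rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]


def add(a, b):
    """Element-wise sum of two matrices of the same shape."""
    return [[x + y for x, y in zip(row_a, row_b)] for row_a, row_b in zip(a, b)]


def effective_weight(W, B, A):
    """Weight actually used by the layer: frozen W plus low-rank update B @ A."""
    return add(W, matmul(B, A))


d, k, r = 4, 4, 1   # W is d x k, the update has rank r (toy sizes)

W = [[1.0 if i == j else 0.0 for j in range(k)] for i in range(d)]  # frozen
B = [[0.0] for _ in range(d)]       # d x r, trainable, starts at zero
A = [[0.1, 0.2, 0.3, 0.4]]          # r x k, trainable

initial = effective_weight(W, B, A)     # equals W because B is all zeros

B[0][0] = 1.0                           # pretend training changed B
updated = effective_weight(W, B, A)     # first row of W is now shifted by A

# Parameter count for a larger, hypothetical layer: 4096 x 4096 with rank 8.
full_params = 4096 * 4096
lora_params = 8 * (4096 + 4096)
print(full_params, lora_params, f"{lora_params / full_params:.2%}")
```

For the hypothetical 4096 x 4096 layer, the rank-8 update trains 65,536 values instead of 16,777,216, which is under half a percent of the layer.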

Prompt tuning

Instead of fine-tuning the entire language model, prompt tuning focuses on providing a task-specific prompt to guide the model's behavior. It is an efficient way to make a massive model perform narrow tasks. Businesses with limited data can truly appreciate the possibilities of prompt tuning, as it reduces the need to collect large amounts of labeled data.

Language models don't have to be retrained, their parameters stay the same, and despite this, thanks to prompt tuning they can grasp the essence of their new downstream task. Prompts with task-specific information are fed to LLM to give it a context that is characteristic of the task we want it to accomplish. There are two ways in which the prompts are enhanced with additional data. They can be supplemented by additional info provided by humans or AI.

In the case of human-provided data, the user can quickly retry and experiment with different prompts to improve the performance of the model. The prompt typically consists of a structured text input that specifies the task to be performed, along with any additional examples, context, or instructions. This process is called prompt engineering. Prompt tuning, on the other hand, happens when prompts are supplemented by task-specific information in the form of learned vectors of numbers that are fed into the large model's embedding layer.

The vast knowledge stored in an LLM allows it to find the appropriate response to the tuned prompt without additional fine-tuning. However, the more complex the objective, the more prompts are required. It would be difficult for a human to write hundreds or even thousands of prompts, so it is more common to use prompt tuning with its soft prompts, which are generated by AI itself, instead of hard prompts created by a human.

Soft prompts, which are strings of numbers or embeddings, replace extra training data and guide pre-trained language models toward the required outputs. Though incredibly effective, the AI-generated soft prompts cannot be fully interpreted, meaning the reasoning behind the choice of exactly these task-related prompts stays unclear.

Prompt tuning allows for efficient parameter utilization and reduces the compute and memory required during the fine-tuning process. Overall, prompt tuning is a powerful method within the PEFT framework, enabling efficient fine-tuning of pre-trained LLMs for specific tasks by leveraging structured prompts with task-specific examples and AI-generated embeddings.

Prompt tuning represents an additive method of parameter-efficient fine-tuning. This type of approach supplements the parameters of pre-trained models with new ones, and training is carried out using only these new parameters, while the original parameters remain frozen.
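The mechanics can be sketched in a few lines: a handful of trainable vectors is placed in front of the frozen token embeddings before the sequence enters the model. The two-dimensional embeddings, the tokens, and the prompt length are hypothetical. A real model would learn the soft prompt values by gradient descent while everything else stays frozen.

```python
# Frozen, hypothetical token embeddings (2 dimensions for readability).
embedding_table = {
    "translate": [0.2, 0.1],
    "hello": [0.7, -0.3],
}

# Trainable soft prompt: 3 "virtual tokens" that are not real words.
soft_prompt = [[0.0, 0.0], [0.0, 0.0], [0.0, 0.0]]


def build_input(soft_prompt, tokens, embedding_table):
    """Prepend the soft prompt vectors to the embeddings of the real tokens."""
    token_vectors = [embedding_table[token] for token in tokens]
    return soft_prompt + token_vectors


sequence = build_input(soft_prompt, ["translate", "hello"], embedding_table)
trainable_values = sum(len(vector) for vector in soft_prompt)

print(len(sequence), "vectors enter the model;", trainable_values, "values are trainable")
```

Switching tasks then amounts to swapping in a different soft prompt, while the embedding table and the rest of the model are shared.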

Prefix tuning

Prefix tuning is another additive method and a more sophisticated adaptation of prompt tuning. In prefix tuning, the original input is supplemented with additional context for a particular task. This context is not set by the user but is obtained through model training.

The name "prefix" refers to the trainable module that is prepended at each transformer layer. This module consists of sequences of continuous task-specific vectors. All the other parameters of the language model remain unchanged, because only the prefixes, that is, the sequences of task-specific vectors, are modified during efficient fine-tuning.

This prefix module creates special embeddings that are inserted into the input sequence (represented by task-specific training vectors) prior to being submitted to the primary model. These embeddings serve as task-specific information that guides the behavior of the model for the target task. However, they are not natural language words or sentences, but abstract representations.

The number of trainable parameters introduced during prefix tuning is limited. This means that computational and storage costs are reduced, which makes prefix tuning one of the truly powerful PEFT methods. According to “Prefix-Tuning: Optimizing Continuous Prompts for Generation” by Xiang Lisa Li and Percy Liang, prefix tuning delivers results comparable to full fine-tuning in full data settings and outperforms it in low data settings, while training only about 0.1% of the parameters.
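One common way to realise a prefix is to prepend trainable key and value vectors to the attention computation of each layer. The sketch below shows this for a single attention head and a single query. All vectors are made-up toy values, and the parameter-count helper assumes one key and one value vector per prefix position per layer.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of scores."""
    highest = max(scores)
    exps = [math.exp(s - highest) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attend(query, keys, values):
    """Single-head scaled dot-product attention for one query vector."""
    scale = math.sqrt(len(query))
    scores = [sum(q * k for q, k in zip(query, key)) / scale for key in keys]
    weights = softmax(scores)
    return [sum(w * v[i] for w, v in zip(weights, values)) for i in range(len(values[0]))]


# Keys and values produced by the frozen model from the real input tokens.
keys = [[1.0, 0.0], [0.0, 1.0]]
values = [[1.0, 0.0], [0.0, 1.0]]

# Trainable prefix for this layer (toy values standing in for learned ones).
prefix_keys = [[0.5, 0.5]]
prefix_values = [[2.0, 2.0]]

query = [1.0, 0.0]
without_prefix = attend(query, keys, values)
with_prefix = attend(query, prefix_keys + keys, prefix_values + values)


def prefix_param_count(num_layers, prefix_len, hidden_size):
    """Trainable values: one key and one value vector per prefix position per layer."""
    return num_layers * prefix_len * 2 * hidden_size


print(without_prefix, with_prefix, prefix_param_count(24, 10, 1024))
```

The prefix changes what the query attends to without touching any of the model's own weights, and the number of trainable values grows only with the number of layers, the prefix length, and the hidden size.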

Adapters

Adapters are additive methods too, where instead of a prefix module, an adapter module is employed. In applying this technique, new trainable parameters are added to the frozen weights of pre-trained models. The adapter-based approach injects small adapter layers with new weights between the original model layers, typically at fixed positions inside each transformer block.

Only these new weights are being trained with the initial LLM parameters remaining intact. It means that previously gained model insights are also undisturbed, which considerably reduces the problem of catastrophic forgetting. Adapter-based fine-tuning is computationally efficient since it only involves updating a small subset of parameters (the adapter weights) instead of fine-tuning the entire model.
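A typical adapter is a small bottleneck: it projects the hidden state down to a few dimensions, applies a nonlinearity, projects back up, and adds the result to its input. The sketch below uses toy sizes and made-up weights. Starting the up-projection at zero is an assumption that makes the adapter behave as an identity function before training.

```python
def linear(matrix, vector):
    """Matrix-vector product with the matrix given as a list of rows."""
    return [sum(w * x for w, x in zip(row, vector)) for row in matrix]


def relu(vector):
    return [max(0.0, x) for x in vector]


def adapter(hidden, down, up):
    """Bottleneck adapter: down-project, nonlinearity, up-project, residual add."""
    bottleneck = relu(linear(down, hidden))
    update = linear(up, bottleneck)
    return [h + u for h, u in zip(hidden, update)]


hidden = [1.0, -2.0, 0.5, 3.0]          # output of a frozen layer (size 4)
down = [[0.1, 0.0, 0.2, 0.0],           # 2 x 4, trainable
        [0.0, 0.3, 0.0, 0.1]]
up = [[0.0, 0.0] for _ in range(4)]     # 4 x 2, trainable, zero-initialised

before_training = adapter(hidden, down, up)   # identical to hidden

up[0][0] = 1.0                                # pretend training changed one weight
after_training = adapter(hidden, down, up)    # only the first component moves

adapter_params = 2 * 4 + 4 * 2                # trainable values in this adapter
print(before_training, after_training, adapter_params)
```

The frozen layer's output passes through unchanged until the adapter weights are trained, which is why the original model's behaviour is preserved at the start of fine-tuning.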

PEFT Methods Excel at Effective Model Tuning in The Field of Natural Language Processing

The goal of PEFT (parameter efficient fine-tuning) is to reduce the resource requirements such as memory and computational power of fine-tuning while achieving better or comparable performance compared to traditional fine-tuning approaches. This approach is particularly beneficial in the field of natural language processing since the LLMs are becoming better and bigger, consequently more resource-demanding.

Since PEFT is a sub-type of standard fine-tuning, only with fewer parameters modified during training, we cannot claim that all the issues encountered with standard fine-tuning vanish completely. With PEFT, storage space for gradients, optimizer states, temporary memory, and the model itself is still needed. However, since fewer weights are changed, you need less space to store the data.

The savings may seem fairly insignificant, and creating a whole separate group of methods to cut down on a small amount of storage space and training memory may not seem worth it. However, when it comes to modern large language models such as GPT, LLaMA, BERT, etc., hundreds of gigabytes can be saved, which can significantly reduce hardware costs and power consumption.

PEFT methods allow for achieving comparable performance to the one delivered during full fine-tuning. This works great in natural language processing tasks with billion parameter machine learning models like LLMs.