Proximal Policy Optimization

To understand Proximal Policy Optimization (PPO), it helps to first understand deep reinforcement learning (RL). RL is a machine learning (ML) method in which a system learns by trial and error: it is rewarded when it does the right thing and penalized when it fails. Over time, this feedback teaches the algorithm to avoid repeating its failures.

In RL, an artificial intelligence agent learns by interacting with an environment rather than from historical data, which makes RL one of the main approaches for training an agent in an environment it is not familiar with. PPO is one such RL algorithm, or more precisely a family of policy gradient methods. Let's examine it in more detail.

What is PPO?

Proximal Policy Optimization (PPO) is a reinforcement learning algorithm for training agents to perform tasks in an environment. The agent's behavior is governed by a policy: a strategy, or set of rules, that the model uses to decide which action to take in a given situation. Policies are usually represented by deep neural networks.

In RL, the training data is generated by the agent as it interacts with the environment, so it depends on the current policy. Unlike supervised learning, RL does not rely on a previously collected dataset. As the agent learns something new after each action, its behavior changes, and so do the observations it collects and the rewards it receives. This constantly shifting data is a major cause of instability during training.

RL algorithms are also highly sensitive to hyperparameters (for example, the number of training epochs) and to initialization, such as the initial values of the neural network weights. For these reasons, RL training is considered unstable. To tackle these challenges, researchers at OpenAI set out to create a new RL algorithm called proximal policy optimization.

PPO Emergence: A Quick Overview

Before PPO appeared, researchers had proposed other algorithms for training machines to make decisions in unknown environments, such as deep Q-learning, vanilla policy gradient methods, and trust region policy optimization (TRPO). However, each of these methods has drawbacks: poor scalability, poor data efficiency, or excessive complexity.

Research scientists at OpenAI introduced PPO in the 2017 paper "Proximal Policy Optimization Algorithms". John Schulman and his colleagues described it as "a new family of policy gradient methods for reinforcement learning". It was designed to strike a balance between three goals:

Simple implementation;

Sample efficiency;

Stability.

Schulman and his team aimed for an algorithm that achieves the data efficiency and reliable performance of TRPO. TRPO is known for making robust policy updates, but it relies on complex second-order optimization techniques (for example, computations involving a Hessian matrix), which can be computationally expensive and challenging to implement.

PPO addresses some of the limitations of its predecessor by using a simpler first-order optimization approach (gradient-based methods such as stochastic gradient ascent). Owing to its ease of implementation and good results, PPO became OpenAI's default reinforcement learning algorithm.

Proximal Policy Optimization as a Policy Gradient Method

Proximal policy optimization belongs to the policy gradient methods, which optimize the policy directly. At each point in time, the policy selects an action based on the agent's current observation. The agent performs this action in the environment according to its strategy (policy), and the environment transitions from one state to another.

After each action, the agent receives a reward from the environment, which tells it how useful that action was. Policy gradient methods use these rewards to adjust the agent's policy so that actions leading to higher rewards become more likely.
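The interaction loop described above can be sketched as follows. This is a minimal illustration of data collection, not PPO itself: `LineWorld` is a hypothetical toy environment, and the fixed action probabilities in `policy` stand in for a learned policy network.

```python
import numpy as np


class LineWorld:
    """Hypothetical toy environment: the agent walks on positions 0..size-1
    and receives a reward of 1 only when it reaches the right end."""

    def __init__(self, size=5):
        self.size = size
        self.pos = 0

    def reset(self):
        self.pos = 0
        return self.pos

    def step(self, action):
        # Action 0 moves left, action 1 moves right; the position is clamped to the line.
        move = 1 if action == 1 else -1
        self.pos = min(max(self.pos + move, 0), self.size - 1)
        done = self.pos == self.size - 1
        reward = 1.0 if done else 0.0
        return self.pos, reward, done


def collect_episode(env, policy, rng, max_steps=50):
    """Run the policy in the environment and record (state, action, reward) transitions."""
    trajectory = []
    state = env.reset()
    for _ in range(max_steps):
        probs = policy(state)                          # action probabilities in this state
        action = int(rng.choice(len(probs), p=probs))  # stochastic action selection
        next_state, reward, done = env.step(action)
        trajectory.append((state, action, reward))
        state = next_state
        if done:
            break
    return trajectory


def policy(state):
    # Hypothetical fixed policy that prefers moving right; a real agent would use a neural network.
    return np.array([0.3, 0.7])


rng = np.random.default_rng(0)
trajectory = collect_episode(LineWorld(), policy, rng)
```

Each recorded transition pairs a state and action with the reward it earned; a policy gradient method uses exactly this kind of freshly collected data to update the policy.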

According to the OpenAI paper, PPO alternates between sampling data through interaction with the environment and optimizing a "surrogate" objective function using stochastic gradient ascent, a method that searches for a maximum of a function by repeatedly stepping in the direction of its gradient.

A gradient, in turn, is a vector that points in the direction of the steepest increase of a function, such as a loss function (model error) or an objective. Optimization algorithms use it to update the model parameters: stepping against the gradient of a loss minimizes the loss, while stepping along the gradient of an objective maximizes the objective.

The primary purpose of a loss function in RL is to guide learning by measuring how well the agent's current policy or value function aligns with the desired or optimal behavior. The agent's goal is typically to minimize this loss, so a lower value means better model performance or a more accurate policy. Maximizing an objective, as PPO does, is equivalent to minimizing its negative, which is why both framings are used.

Gradient descent and gradient ascent are iterative optimization algorithms used to train machine learning models: descent minimizes a loss, ascent maximizes an objective. In conventional (batch) gradient descent, the gradient at each step is computed over the whole dataset as the sum (or average) of the gradients of all samples. Stochastic gradient descent instead estimates the gradient from a single randomly selected sample or, more commonly in practice, from a small random minibatch.
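To make the distinction concrete, here is a small sketch, not specific to PPO, that maximizes the toy objective J(θ) = −½·mean((θ − x)²) by gradient ascent, once with the full-batch gradient and once with a gradient estimated from random minibatches. Both should approach the maximizer of J, which is the sample mean of the data. The synthetic data, learning rate, and batch size are arbitrary illustrative choices.

```python
import numpy as np

rng = np.random.default_rng(0)
data = rng.normal(loc=3.0, scale=1.0, size=1000)  # synthetic data


def grad_objective(theta, x):
    """Gradient of J(theta) = -0.5 * mean((theta - x)**2) with respect to theta."""
    return np.mean(x - theta)


lr = 0.1
theta_full = 0.0  # full-batch gradient ascent
theta_sgd = 0.0   # stochastic (minibatch) gradient ascent
for step in range(200):
    # Full batch: the exact gradient over all 1000 samples.
    theta_full += lr * grad_objective(theta_full, data)
    # Stochastic: a noisy gradient estimate from 32 randomly chosen samples.
    batch = data[rng.integers(0, len(data), size=32)]
    theta_sgd += lr * grad_objective(theta_sgd, batch)
```

Ascending J is the same as descending the loss −J. PPO's stochastic gradient ascent follows the second, noisier pattern, with minibatches drawn from the experience the agent has collected.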

PPO learns from the feedback the agent receives from the environment without keeping past experience in a replay buffer across training iterations. Once a batch of collected experience has been used for gradient updates, it is discarded, and the policy is updated further based on newly collected experience.

This is why policy gradient methods are considered less sample efficient: in their standard form, each batch of collected experience is used for only one gradient update. PPO improves sample efficiency because its clipped surrogate objective function allows the same data to be reused for several epochs of updates.

Exceptional Stability

PPO is known for its stability and strong performance across diverse environments, which has made it a popular choice among RL algorithms. Its stability comes from constraining how much the policy can change at each update during training.

The original paper reports that PPO outperformed other online policy gradient methods, including TRPO, on benchmark tasks such as simulated robotic locomotion and Atari games. Its stability is what makes it suitable for real-world applications, which is why PPO has been used successfully in robotics, video games, and autonomous driving.

How PPO works

PPO employs an actor-critic approach. The actor (the policy) chooses actions and is updated to improve those choices. The critic (the evaluator) estimates the expected reward and is used to evaluate how much better or worse the agent's actions turned out than expected. The objective of PPO is to maximize the expected cumulative reward over time, which it does by adjusting the policy to increase the probability of actions that lead to higher rewards.
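One simple way to turn the critic's estimates into an evaluation of each action is to subtract the critic's value estimate V(s_t) from the discounted return that was actually observed. The sketch below assumes a discount factor γ (`gamma`) and uses hand-picked, hypothetical rewards and critic values. PPO implementations commonly use a more refined estimator (generalized advantage estimation), which this sketch does not implement.

```python
import numpy as np


def discounted_returns(rewards, gamma):
    """G_t = r_t + gamma * G_{t+1}, computed backwards over one finished episode."""
    returns = np.zeros(len(rewards))
    running = 0.0
    for t in reversed(range(len(rewards))):
        running = rewards[t] + gamma * running
        returns[t] = running
    return returns


rewards = np.array([1.0, 0.0, 2.0])  # hypothetical rewards from one episode
values = np.array([2.0, 1.5, 1.0])   # hypothetical critic estimates V(s_t)
returns = discounted_returns(rewards, gamma=0.9)
advantages = returns - values        # positive: the outcome was better than the critic expected
```

Here the returns are [2.62, 1.8, 2.0] and the advantages [0.62, 0.3, 1.0]: every action turned out better than the critic predicted, so the actor would be nudged toward all three. A negative entry would push that action's probability down instead.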

There are two main variants of PPO: PPO-Penalty and PPO-Clip. They differ in how they ensure that the new policy does not differ dramatically from the old one. PPO-Clip is used more often, so the rest of this article focuses on it.
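For comparison, PPO-Penalty replaces clipping with a KL divergence penalty (KL divergence is explained below) whose coefficient β (`beta`) is adapted during training. The sketch below follows the adaptive rule described in the original PPO paper, which halves or doubles β when the measured divergence drifts away from a target value. The function names and example numbers are illustrative, and the mean KL divergence is assumed to be computed elsewhere.

```python
import numpy as np


def ppo_penalty_objective(ratio, advantages, mean_kl, beta):
    """Surrogate objective mean(r_t * A_t) minus a KL penalty weighted by beta."""
    return float(np.mean(ratio * advantages) - beta * mean_kl)


def update_beta(beta, mean_kl, kl_target):
    """Adaptive penalty: weaken it if the policy barely moved,
    strengthen it if the policy moved too far from the old one."""
    if mean_kl < kl_target / 1.5:
        return beta / 2.0
    if mean_kl > kl_target * 1.5:
        return beta * 2.0
    return beta


objective = ppo_penalty_objective(
    np.array([1.0, 1.0]), np.array([1.0, 3.0]), mean_kl=0.5, beta=2.0)
```

With these numbers the surrogate term is 2.0 and the penalty is 1.0, so `objective` is 1.0. PPO-Clip avoids tuning β by bounding the ratio directly.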

PPO improves the training stability of an agent through a clipping mechanism: a coefficient in the PPO objective function, called the clipped surrogate objective function, is clipped to a fixed range.

This objective lets the agent run multiple epochs of updates on each batch of data it has collected. You can think of it as practicing a skill several times to get better at it.
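The sketch below shows how one collected batch can be reused for several epochs of minibatch updates. To stay self-contained, it assumes a hypothetical three-armed bandit (a single state, so the policy is just a softmax over three logits), uses each reward minus the batch's mean reward as a crude advantage estimate, and computes the gradient of the clipped objective by hand instead of with an autodiff library. All hyperparameter values are arbitrary.

```python
import numpy as np


def softmax(logits):
    z = np.exp(logits - logits.max())  # subtract the max for numerical stability
    return z / z.sum()


def clipped_objective_grad(logits, actions, old_probs, advantages, eps):
    """Gradient of mean(min(r*A, clip(r, 1-eps, 1+eps)*A)) with respect to the logits."""
    probs = softmax(logits)
    ratio = probs[actions] / old_probs
    # When min(...) selects the clipped term with r outside [1-eps, 1+eps],
    # that term is constant, so the sample contributes no gradient.
    active = ratio * advantages <= np.clip(ratio, 1 - eps, 1 + eps) * advantages
    # For a softmax policy, d r / d logits = r * (one_hot(a) - probs).
    one_hot = np.eye(len(logits))[actions]
    weights = advantages * ratio * active
    return (weights[:, None] * (one_hot - probs)).mean(axis=0)


rng = np.random.default_rng(0)
true_means = np.array([0.0, 0.5, 1.0])  # hypothetical expected reward of each arm
logits = np.zeros(3)
eps, lr, n_epochs, batch_size, minibatch_size = 0.2, 0.5, 4, 64, 16

for iteration in range(50):
    # 1) Collect a fresh batch with the current policy, which becomes the "old" policy.
    old_policy = softmax(logits)
    actions = rng.choice(3, size=batch_size, p=old_policy)
    rewards = true_means[actions] + rng.normal(0.0, 0.1, size=batch_size)
    advantages = rewards - rewards.mean()  # crude baseline for illustration only
    old_probs = old_policy[actions]
    # 2) Reuse the same batch for several epochs of shuffled minibatch gradient ascent.
    for _ in range(n_epochs):
        order = rng.permutation(batch_size)
        for start in range(0, batch_size, minibatch_size):
            idx = order[start:start + minibatch_size]
            logits += lr * clipped_objective_grad(
                logits, actions[idx], old_probs[idx], advantages[idx], eps)
    # 3) The batch is then discarded; the next iteration collects new data.

final_policy = softmax(logits)
```

Setting `n_epochs = 1` with a single minibatch per batch would recover the one-update-per-batch pattern of vanilla policy gradient methods. The clipping is meant to keep the extra epochs from moving the policy too far from the one that collected the data.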

The clipped coefficient reflects the difference between the current and the old policy. Clipping it limits how much the policy can change at each step, and avoiding overly large policy updates makes learning more stable.

This constraint distinguishes PPO from other algorithms such as TRPO, which limits the policy update with a KL divergence constraint. Kullback-Leibler (KL) divergence measures how one probability distribution diverges from a second, reference distribution. The KL divergence constraint ensures that, after an update, the new policy does not deviate too much from the previous one, which helps keep the learning process stable.
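For a policy with a finite set of actions, the KL divergence between the old and new action distributions in a given state can be computed directly, as in the sketch below. The action probabilities are made up for a single hypothetical state. TRPO constrains an average of this quantity over visited states, and its threshold is not modeled here.

```python
import numpy as np


def kl_divergence(p, q):
    """KL(p || q) = sum_i p_i * log(p_i / q_i), assuming q_i > 0 wherever p_i > 0."""
    p = np.asarray(p, dtype=float)
    q = np.asarray(q, dtype=float)
    mask = p > 0  # terms with p_i = 0 contribute 0 by convention
    return float(np.sum(p[mask] * np.log(p[mask] / q[mask])))


old_policy = np.array([0.25, 0.25, 0.50])  # hypothetical action probabilities in one state
new_policy = np.array([0.30, 0.20, 0.50])  # after a small update
far_policy = np.array([0.05, 0.05, 0.90])  # after a large update

small_step = kl_divergence(old_policy, new_policy)
large_step = kl_divergence(old_policy, far_policy)
```

The small update gives a divergence of about 0.01 and the large one about 0.51, which is the kind of difference a KL constraint is designed to detect. KL divergence is also asymmetric: KL(old || new) and KL(new || old) generally differ.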

PPO Objective Function

In Proximal Policy Optimization (PPO), the objective function is the key element that guides training. It measures how the current policy's probabilities for the actions actually taken in the environment compare with those of the old policy, weighted by how advantageous those actions turned out to be.

Clipped Objective Function L^CLIP(θ)

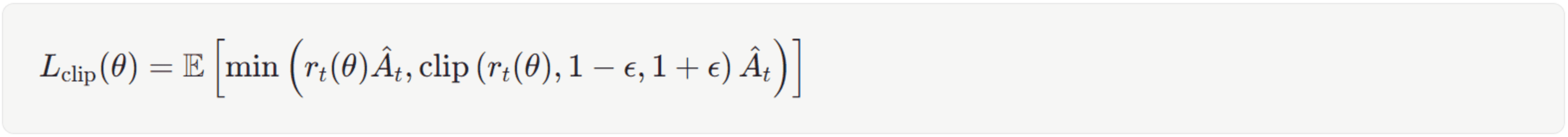

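The objective function of PPO motivates the agent to choose actions associated with greater rewards. The PPO clipped objective function is formulated as:

$$L^{CLIP}(\theta) = \hat{\mathbb{E}}_t\left[\min\left(r_t(\theta)\hat{A}_t,\ \mathrm{clip}\left(r_t(\theta), 1-\epsilon, 1+\epsilon\right)\hat{A}_t\right)\right], \qquad r_t(\theta) = \frac{\pi_\theta(a_t \mid s_t)}{\pi_{\theta_{old}}(a_t \mid s_t)}$$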

The clipped objective function is an expectation (Ê_t), which in practice is estimated as an average over the timesteps in batches of collected trajectories.

min(...) selects the smaller of two terms: the term with the clipping function, clip(r_t(θ), 1−ε, 1+ε)Â_t, and the term without it, r_t(θ)Â_t.

r_t(θ) is the probability ratio between the new and the old policy for the action taken at time step t: π_θ(a_t|s_t) / π_θold(a_t|s_t). A ratio greater than 1 means the action is more likely under the new policy than under the old one, and a ratio below 1 means it is less likely. The ratio is then multiplied by the advantage estimate (Â_t).

Â_t is the advantage estimate: it measures how good an action is compared with the average action in that state. A higher value indicates that the action is expected to lead to a more favorable outcome.

The function clip(r_t(θ), 1−ε, 1+ε) restricts r_t(θ) to the interval [1−ε, 1+ε], where ε (epsilon) is a small positive constant (0.2 is a common choice). This mechanism lets PPO balance improving the policy against keeping it stable. As a result, PPO's objective function guides the agent to make decisions that consider not only the likelihood of actions under the new policy but also their advantages.
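Putting these terms together, the clipped objective can be estimated from a batch as in the sketch below. It assumes the log-probabilities of the taken actions under the new and old policies, and the advantage estimates, are already available as arrays; computing r_t(θ) as the exponential of a log-probability difference is a common, numerically convenient choice rather than a requirement.

```python
import numpy as np


def clipped_surrogate_objective(logp_new, logp_old, advantages, eps=0.2):
    """Batch estimate of L^CLIP(theta) from per-timestep log-probabilities and advantages."""
    ratio = np.exp(logp_new - logp_old)                      # r_t(theta)
    unclipped = ratio * advantages                           # r_t(theta) * A_t
    clipped = np.clip(ratio, 1 - eps, 1 + eps) * advantages  # clip(r_t, 1-eps, 1+eps) * A_t
    return float(np.mean(np.minimum(unclipped, clipped)))   # empirical E_t[min(...)]


# Hypothetical probabilities of three taken actions under the old and new policies.
logp_old = np.log(np.array([0.50, 0.50, 0.20]))
logp_new = np.log(np.array([0.75, 0.25, 0.20]))
advantages = np.array([1.0, -1.0, 2.0])
value = clipped_surrogate_objective(logp_new, logp_old, advantages)
```

The ratios are 1.5, 0.5, and 1.0, so the per-sample terms are 1.2 (capped at 1+ε), −0.8, and 2.0, and `value` is their mean, 0.8. A training loop that minimizes a loss would minimize the negative of this value.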

Conservatism in Updates

The term "proximal" in the algorithm's name refers to a constraint in the PPO objective function that limits the magnitude of parameter updates. The key idea behind this proximal constraint is to control policy changes and avoid large updates that could lead to instability or divergence in the learning process. By staying "close" to the previous policy, PPO aims to ensure smoother and more reliable learning.

PPO trains a stochastic policy: actions are chosen at random, but according to the probabilities the policy has already learned. This means the algorithm explores new possible actions while building on the existing strategy (policy). How random the next move is depends on the initial conditions and on the learning process. As learning progresses, the policy usually becomes less random, because updates reinforce the steps that led to successful outcomes.
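How random a policy is can be quantified by the entropy of its action distribution. The sketch below compares two hypothetical softmax policies over three actions: a uniform one, as might be used at the start of training, and a peaked one that strongly prefers one action. The logits are made up, and entropy serves here only as a measuring stick.

```python
import numpy as np


def softmax(logits):
    z = np.asarray(logits, dtype=float)
    e = np.exp(z - z.max())  # subtract the max for numerical stability
    return e / e.sum()


def entropy(probs):
    """Shannon entropy (in nats) of a discrete action distribution."""
    probs = probs[probs > 0]
    return float(-np.sum(probs * np.log(probs)))


early = softmax([0.0, 0.0, 0.0])  # hypothetical logits early in training: uniform
late = softmax([0.0, 0.5, 4.0])   # hypothetical logits after learning favors action 2

rng = np.random.default_rng(0)
action = int(rng.choice(len(late), p=late))  # still sampled, not always the top action
```

The uniform policy has the maximum entropy for three actions, ln 3 ≈ 1.10, while the peaked policy's entropy is much lower. Even so, the peaked policy still samples its less likely actions occasionally, which is how exploration continues.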

The clipping function in Proximal Policy Optimization (PPO) serves two main purposes:

Limiting Credit for Good Actions: When an action has a positive advantage (it turned out better than average), the clipping function restricts how much credit the new policy gets for making that action more likely: once the ratio exceeds 1+ε, the objective stops increasing. This prevents the algorithm from overly boosting the influence of positive actions in a single update.

Constraining Slack for Bad Actions: On the other hand, when an action has a negative advantage (it turned out worse than average), the objective rewards making it less likely only until the ratio drops to 1−ε; beyond that, there is no further gain. At the same time, because of the min, the full unclipped penalty still applies if the new policy makes such an action more likely. This prevents the algorithm from being too forgiving toward actions that are considered bad.

The overall goal of the clipping mechanism is to discourage updates that could lead to significant changes in the action distribution. By constraining both positive and negative signals, it helps maintain a stable learning process.
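The two cases above can be checked numerically by evaluating the per-sample clipped objective, min(r·A, clip(r, 1−ε, 1+ε)·A), for a range of probability ratios. The sketch uses ε = 0.2, once with a positive advantage (A = 1) and once with a negative one (A = −1); the ratio values are arbitrary.

```python
import numpy as np


def per_sample_clip_objective(ratio, advantage, eps=0.2):
    """min(r * A, clip(r, 1 - eps, 1 + eps) * A) for each ratio r."""
    return np.minimum(ratio * advantage, np.clip(ratio, 1 - eps, 1 + eps) * advantage)


ratios = np.array([0.5, 0.8, 1.0, 1.2, 1.5, 2.0])
good = per_sample_clip_objective(ratios, advantage=1.0)   # positive advantage
bad = per_sample_clip_objective(ratios, advantage=-1.0)   # negative advantage
```

For the good action the values are [0.5, 0.8, 1.0, 1.2, 1.2, 1.2]: the objective stops growing once r exceeds 1.2, so there is no incentive to raise the action's probability further in this update. For the bad action they are [−0.8, −0.8, −1.0, −1.2, −1.5, −2.0]: lowering r below 0.8 earns nothing extra, while making the bad action more likely (r > 1) is penalized in full.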

The benefit of this approach is that the objective can be optimized with ordinary first-order gradient ascent (or descent on its negative), which makes the implementation faster and cleaner than Trust Region Policy Optimization (TRPO), which enforces a strict KL divergence constraint.

For each sample, the clipped surrogate objective function computes two terms: the probability ratio multiplied by the advantage, and the clipped ratio multiplied by the advantage. The final objective takes the minimum of these two terms, which makes it a lower bound, or pessimistic estimate, of the unclipped objective. In essence, taking the minimum ensures that the agent follows the more conservative of the two possible policy updates.
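The lower-bound property follows directly from taking the minimum, and a quick randomized check confirms it. The sketch below draws arbitrary ratios and advantages and verifies two things: the clipped surrogate never exceeds the unclipped one, and the two agree whenever the ratio lies inside [1−ε, 1+ε].

```python
import numpy as np

rng = np.random.default_rng(1)
eps = 0.2
ratios = rng.uniform(0.0, 3.0, size=10_000)  # arbitrary probability ratios r_t
advantages = rng.normal(size=10_000)         # arbitrary advantage estimates A_t

unclipped = ratios * advantages
clipped = np.clip(ratios, 1 - eps, 1 + eps) * advantages
surrogate = np.minimum(unclipped, clipped)   # per-sample L^CLIP term

# The min can never exceed the unclipped term, so the gap is never negative.
gap = unclipped - surrogate
inside = (ratios >= 1 - eps) & (ratios <= 1 + eps)
```

Outside the interval, the gap is positive exactly when clipping would otherwise let the objective look better than the update is trusted to be.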

According to the paper "Secrets of RLHF in Large Language Models Part I: PPO", proximal policy optimization and trust region policy optimization are key techniques in reinforcement learning (RL) for training policies effectively without compromising stability. The fundamental idea behind both methods is to make incremental, controlled adjustments to the policy. Instead of drastic updates that could disrupt the learning process, they gently guide the policy toward an optimum. PPO aims to avoid such big jumps in the policy, making the learning process safer.

Advantages of the PPO Approach

Stability. As we already mentioned, PPO is designed to provide stable and reliable updates to the policy. The clipped surrogate objective function helps stabilize the process of training. By limiting the policy updates, PPO prevents large and potentially destabilizing changes to the policy, leading to smoother and more consistent learning;

Ease of Implementation. PPO is relatively straightforward to implement compared to other advanced algorithms like Trust Region Policy Optimization (TRPO). It does not require second-order optimization techniques, which makes it more accessible to practitioners who are new to RL;

Sample Efficiency. PPO improves data efficiency through its clipped surrogate objective. The clip function regulates policy updates tightly enough that each batch of training data can be reused for several epochs while keeping training stable. As a result, PPO tends to be more sample-efficient than some other reinforcement learning algorithms and achieves good performance with fewer samples, making it suitable for tasks where data collection is expensive or time-consuming.

Conclusion

Proximal policy optimization (PPO) is a reinforcement learning algorithm from the family of policy gradient methods, which are generally not known for high sample efficiency. Nevertheless, its clipped objective function makes PPO more data efficient and keeps training stable by preventing large policy updates, allowing PPO to handle a variety of environments and adapt to diverse real-world tasks.

In tests, PPO has proven efficient and effective on tasks such as robotic control and game playing, outperforming similar methods in both simplicity and learning speed. As reinforcement learning research progresses, PPO's applications across many fields and its contribution to reliable, effective algorithms underline its importance in advancing the capabilities of intelligent systems and help set the stage for further breakthroughs in artificial intelligence.