Reinforcement Learning from Human Feedback: Improving AI with LLM Alignment

Large Language Models (LLMs) have transformed the AI landscape, impressing experts and the broader public with their ability to process natural language, generate coherent responses, and solve problems across various domains. However, raw performance is rarely enough for real-world applications. To be truly effective, these models must align with human values and intentions, addressing practical needs while minimizing ethical risks.

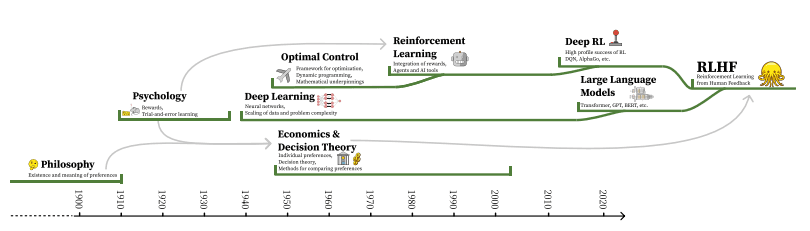

The timeline of the integration of various subfields into the modern version of RLHF. Source: The History and Risks of Reinforcement Learning and Human Feedback

RLHF, which stands for Reinforcement Learning from Human Feedback, has emerged as a key technique for reducing the risk of biased or problematic AI outputs that could mislead or harm users. By helping LLMs capture the nuances of human communication, RLHF enables them to interpret human input and generate relevant and context-aware responses rather than mimicking arbitrary human behavior.

In this article, we’ll explore the fundamentals of RLHF, outlining its workflow, available methods, best practices, and emerging alternatives for aligning AI with human values.

What is RLHF? A High-Level Overview

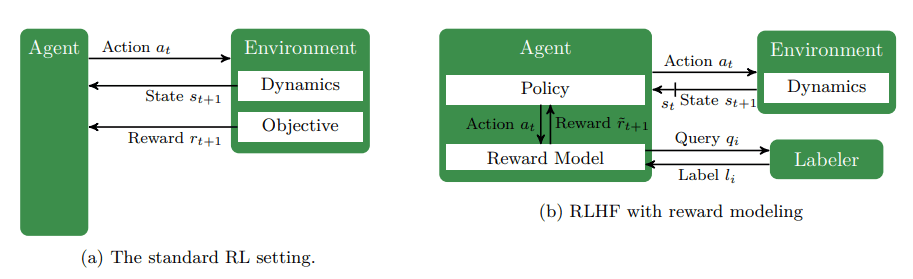

Reinforcement Learning from Human Feedback (RLHF) is a technique that incorporates human evaluation into the training process of large language models. By combining reinforcement learning principles with human preferences, RLHF fine-tunes an LLM to behave in ways that humans find helpful, honest, and harmless. This iterative approach helps AI systems produce more reliable results and reduces the risk of unsafe or offensive content.

Standard Reinforcement Learning vs. RLHF. Source: A Survey of Reinforcement Learning from Human Feedback

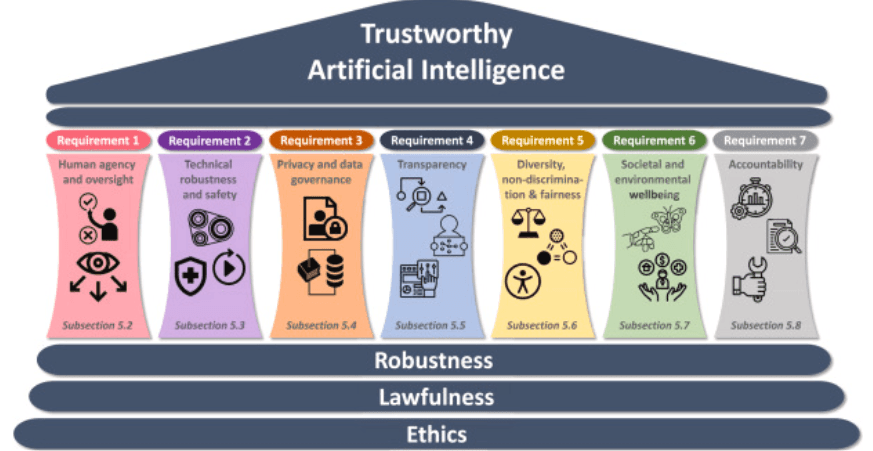

Why Must AI Be Helpful, Honest, and Harmless?

As AI systems become deeply integrated into society, their outputs directly impact decision-making, communication, and security. When LLMs are deployed without proper alignment, sectors such as healthcare, finance, law, and education risk being exposed to misleading or harmful outputs, eroding trust and introducing unprecedented dangers.

Thus, developing AI that adheres to helpful, honest, and harmless principles—commonly abbreviated as HHH—is critical for ensuring it effectively serves people.

Helpful: AI systems should deliver solutions that meet user intent and provide practical value.

Honest: Outputs must be as truthful and accurate as possible, reducing the possibility of misinformation.

Harmless: AI should avoid producing harmful or inappropriate content, even when prompted in that direction.

The 'helpful, honest, and harmless' concept emerged in response to concerns about AI systems trained solely on large datasets. Without targeted alignment, these models often suffer from issues such as hallucinations—confidently presenting false information—as well as generating toxic, unsafe, or irrelevant responses that fail to meet user expectations.

Pillars of Trustworthy AI as established by the European Commission High-Level Expert Group on AI. Source: Connecting the dots in trustworthy Artificial Intelligence

The HHH framework highlighted the need for an approach that directly incorporates human judgment and societal values into the model's learning process.

The Origins and Evolution of RLHF

Traditional reinforcement learning (RL), which relies on pre-programmed reward functions, proved insufficient for aligning AI systems—particularly language models—with nuanced human preferences.

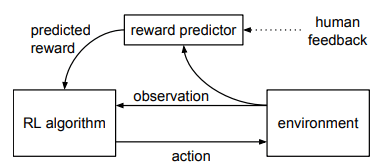

Reinforcement Learning from Human Feedback (RLHF) emerged as a solution. It initially focused on eliciting complex behaviors from AI agents in controlled environments without hand-crafting intricate reward functions. Christiano et al. (2017) proposed this approach and used it to train agents to master Atari games from human comparisons of short clips of agent behavior.

Schematic illustration of the reward-predictor approach, introduced in 2017 to scale reinforcement learning to complex tasks. Source: Deep Reinforcement Learning from Human Preferences

RLHF quickly evolved into scalable reward modeling techniques that allowed AI systems to generate text aligning with human intent. Foundational research by Leike et al. (2018) highlighted its potential to enable scalable value alignment, agnostic to ethical paradigms or social contexts, as long as sufficient human feedback is provided.

The integration of RLHF into LLMs marked a significant milestone, shifting its focus to refining AI outputs with human preference signals. Early implementations used human rankings of multiple model completions to train reward models, while subsequent work adopted pairwise comparisons for tasks like summarization.

This progress laid the groundwork for broader applications. Supported by larger human feedback datasets, RLHF expanded to question-answering systems and web agents. OpenAI's release of InstructGPT in 2022 was another pivotal moment: it demonstrated that a model fine-tuned with RLHF on human evaluators' input could significantly outperform GPT-3, producing responses that were more accurate, ethical, and aligned with user expectations.

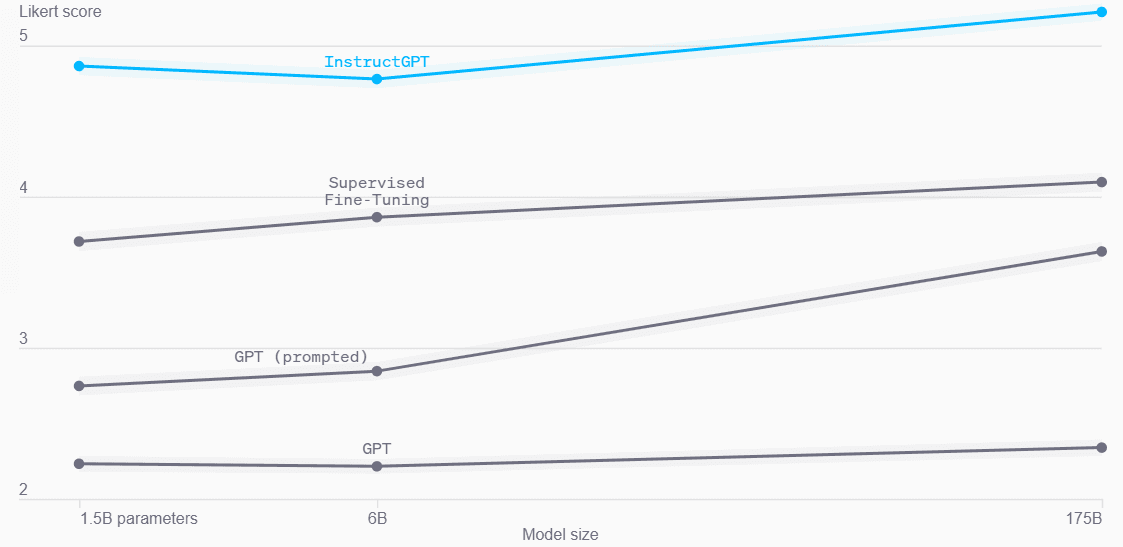

In 2022, labelers rated InstructGPT outputs much higher than outputs from GPT-3 (with or without a few-shot prompt) and from a supervised fine-tuning baseline. Source: Aligning language models to follow instructions

Since then, RLHF has become a foundational method for training state-of-the-art language models, addressing the limitations of conventional supervised learning. It represents a significant shift in the entire sphere of AI development—one that prioritizes real-world usability and safety over raw performance.

The RLHF Workflow: From Pretraining to Fine-Tuning

The Reinforcement Learning from Human Feedback (RLHF) process fine-tunes a pretrained language model so that its outputs reflect human evaluations grounded in people's values and preferences. The workflow can be broken down into four key stages, each contributing to the model's ability to align with users' expectations.

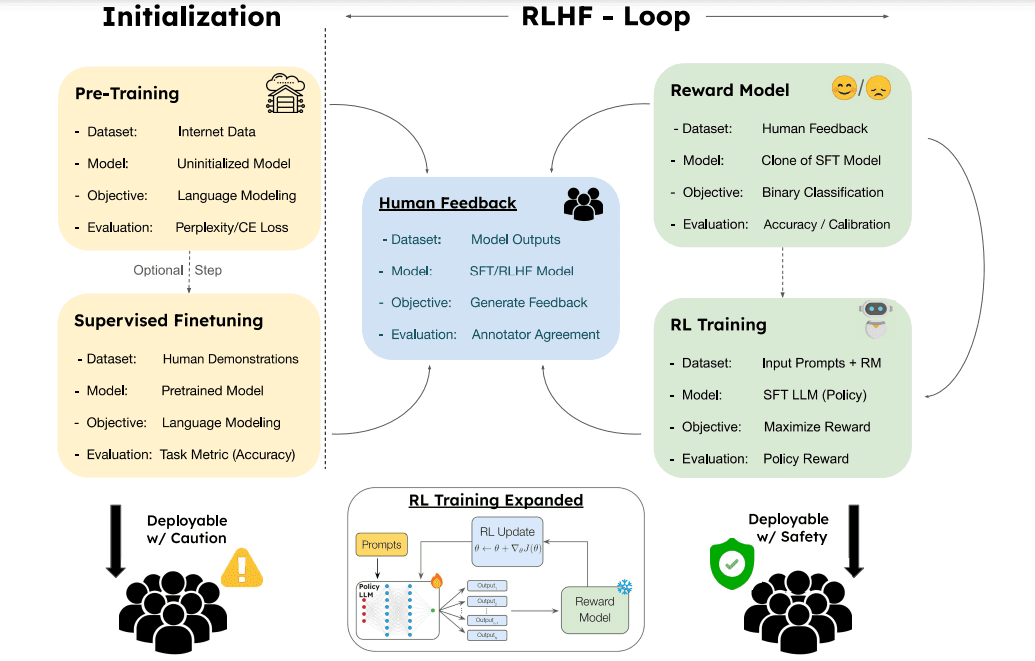

Workflow of RLHF. Source: RLHF Deciphered: A Critical Analysis of RLHF for LLMs

Pretraining the Base Large Language Model

The training process begins with pretraining a base LLM on massive datasets of written content from books, articles, and websites. Using self-supervised learning, the model learns to predict the next token in a sequence, which gives it broad natural language processing capabilities.

For example, GPT-3 was pre-trained on a dataset of roughly 500 billion tokens drawn from sources such as Common Crawl, Wikipedia, and book corpora. Similarly, Meta's LLaMA (Large Language Model Meta AI) was pre-trained on up to 1.4 trillion tokens, making it a competitive openly available alternative for research and enterprise use.

This pretraining stage equips the model with a broad ability for natural language understanding and text generation, but it does not align the existing model with human ethical judgments or behavioral expectations—which is where RLHF comes into play.

Most companies, especially those without the scale of Google, OpenAI, or Meta, do not pre-train their own large language models because of the significant computational costs and data requirements. Instead, they license existing models such as GPT or Claude, or adopt open-weight alternatives like LLaMA, and adapt them to their needs, for example by fine-tuning with RLHF.

Collecting Human Feedback

Once the Large Language Model understands the basic task, the project team gathers feedback to guide the model's learning. Incorporating human feedback is essential for imparting subtleties and priorities that traditional programming or predefined reward mechanisms struggle to define.

Human evaluators are asked to rank different outputs generated by the model from the same input prompt. They may assess responses based on their helpfulness, truthfulness, or non-toxicity to fine-tune the LLM to align with the project's specific goals and human values.

The feedback comes in various forms, depending on the evaluation task:

Pairwise Comparisons: Human annotators compare two different outputs for the same prompt and select the one they prefer. For example, when given two AI-generated summaries, annotators choose the more accurate and coherent one.

Preference Scores: Humans rate outputs on a scale (e.g., 1 to 5) to indicate how well the response satisfies the intended query or task.

Behavioral Evaluations: Annotators identify outputs that display unwanted behaviors, such as hallucinations (fabricated facts), biases, or unsafe content.
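Rankings of several outputs are often converted into pairwise training data: a best-first ranking of K outputs implies K*(K-1)/2 comparisons (InstructGPT, for example, expanded labeler rankings of 4 to 9 responses this way). The sketch below illustrates that expansion; the `PreferencePair` record and `ranking_to_pairs` helper are hypothetical names, not part of any particular library.

```python
from dataclasses import dataclass
from itertools import combinations


@dataclass(frozen=True)
class PreferencePair:
    """One pairwise comparison: `chosen` was ranked above `rejected` (hypothetical record)."""
    prompt: str
    chosen: str
    rejected: str


def ranking_to_pairs(prompt: str, ranked_outputs: list[str]) -> list[PreferencePair]:
    """Expand a best-first ranking of K outputs into K*(K-1)/2 preference pairs."""
    pairs = []
    # combinations() keeps input order, so `better` always precedes `worse` in the ranking.
    for better, worse in combinations(ranked_outputs, 2):
        pairs.append(PreferencePair(prompt, better, worse))
    return pairs


if __name__ == "__main__":
    ranking = ["summary A", "summary C", "summary B"]  # best first
    for pair in ranking_to_pairs("Summarize the article.", ranking):
        print(pair.chosen, ">", pair.rejected)
```

Pairwise records like these are the input format the reward-model training described next typically consumes.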

For instance, when refining an AI model to ensure safe conversational behavior, annotators might evaluate responses for offensive language or ethical breaches. The feedback they supply then serves as the foundation for the RLHF optimization process.

Building a Reward Model

The reward model is a critical component of RLHF: trained on the collected human feedback, it predicts how well an output aligns with human preferences. This model translates human evaluations into numerical scores, enabling the AI system to optimize its behavior toward desired outcomes.

How It Works

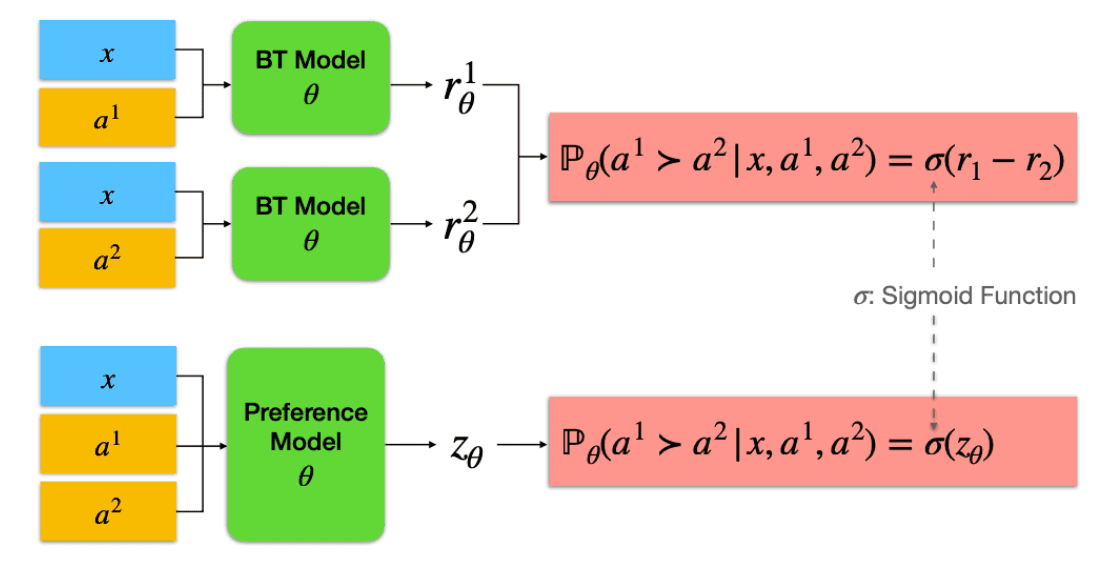

Once trained on human feedback, the reward model assigns scores to outputs based on patterns learned from the feedback data, helping the AI system understand which responses are preferred. Methods like the Bradley-Terry model provide a probabilistic framework for modeling preferences, estimating the likelihood that one output is preferred over another from the difference between their real-valued scores.

For instance, if annotators consistently prefer one output in pairwise comparisons, a reward model trained under the Bradley-Terry formulation learns to score it higher and, in the process, picks up the features that drive that preference. This relative comparison framework simplifies the annotation process, as pairwise judgments are generally more intuitive for annotators than absolute scoring.

Bradley-Terry and preference models. Source: RLHF Workflow: From Reward Modeling to Online RLHF
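To make the Bradley-Terry formulation concrete: if r(A) and r(B) are the reward model's scores, the model assumes P(A preferred over B) = sigmoid(r(A) - r(B)), and the reward model is trained to minimize -log sigmoid(r(chosen) - r(rejected)) over annotated pairs. The sketch below assumes a deliberately simple linear reward model over hypothetical hand-made features; real reward models are usually a fine-tuned language model with a scalar output head, but the loss has the same form.

```python
import math


def sigmoid(z: float) -> float:
    """Numerically stable logistic function."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def bt_probability(score_a: float, score_b: float) -> float:
    """Bradley-Terry: P(A preferred over B) = sigmoid(score_a - score_b)."""
    return sigmoid(score_a - score_b)


def bt_loss(score_chosen: float, score_rejected: float) -> float:
    """Negative log-likelihood of one observed preference: -log sigmoid(margin)."""
    margin = score_chosen - score_rejected
    # -log(sigmoid(m)) = log(1 + exp(-m)), rearranged to avoid overflow for large |m|.
    if margin >= 0:
        return math.log1p(math.exp(-margin))
    return -margin + math.log1p(math.exp(margin))


def dot(u: list[float], v: list[float]) -> float:
    return sum(a * b for a, b in zip(u, v))


def train_linear_reward_model(pairs, dim, lr=0.1, epochs=200):
    """Fit r(y) = w . phi(y) to (phi_chosen, phi_rejected) pairs by SGD on bt_loss."""
    w = [0.0] * dim
    for _ in range(epochs):
        for phi_c, phi_r in pairs:
            margin = dot(w, phi_c) - dot(w, phi_r)
            # d(bt_loss)/dw = -(1 - sigmoid(margin)) * (phi_c - phi_r); step downhill.
            coeff = 1.0 - sigmoid(margin)
            for i in range(dim):
                w[i] += lr * coeff * (phi_c[i] - phi_r[i])
    return w


if __name__ == "__main__":
    # Hypothetical features per output: [helpfulness, toxicity].
    pairs = [
        ([0.9, 0.1], [0.4, 0.2]),
        ([0.7, 0.0], [0.8, 0.9]),
        ([0.6, 0.1], [0.2, 0.1]),
    ]
    w = train_linear_reward_model(pairs, dim=2)
    print("learned weights:", w)
    print("P(first chosen > its rejected):", bt_probability(dot(w, pairs[0][0]), dot(w, pairs[0][1])))
```

Because only score differences enter the loss, the reward scale is arbitrary up to a constant shift, which is one reason relative judgments are easier to collect and model than absolute scores.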

These scores guide the reinforcement learning process, with human preference data helping the AI prioritize responses that align with project-specific goals. This approach also addresses variability in human feedback by focusing on relative preferences rather than absolute judgments, which tends to produce more consistent results.
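How the scores enter the reinforcement learning step varies by implementation. One widely used formulation, used for example in InstructGPT with PPO, rewards the policy with the reward model's score minus a per-token KL penalty that keeps it close to the supervised (reference) model, discouraging it from drifting into outputs that merely exploit the reward model. The function below is a minimal sketch of that reward computation only; it assumes the per-token log-probabilities are already available, treats `beta` as an illustrative value, and leaves out the PPO update itself.

```python
def kl_penalized_rewards(rm_score, policy_logprobs, ref_logprobs, beta=0.1):
    """Per-token rewards for RL fine-tuning with a KL penalty (a common RLHF setup).

    rm_score: scalar score from the reward model for the whole response.
    policy_logprobs / ref_logprobs: log-probabilities of each generated token
        under the policy being trained and under the frozen reference (SFT) model.
    beta: penalty strength (0.1 is an illustrative value, not a recommendation).
    """
    if not policy_logprobs or len(policy_logprobs) != len(ref_logprobs):
        raise ValueError("need equal-length, non-empty log-prob sequences")
    # Each token pays beta * (log pi - log pi_ref), a sample estimate of the KL term.
    rewards = [-beta * (lp - ref) for lp, ref in zip(policy_logprobs, ref_logprobs)]
    # The reward model's score is credited only at the final token.
    rewards[-1] += rm_score
    return rewards


if __name__ == "__main__":
    # Hypothetical log-probabilities for a three-token response.
    print(kl_penalized_rewards(1.0, [-1.0, -0.5, -2.0], [-1.2, -0.5, -1.5]))
```

Summed over the response, these rewards equal the reward-model score minus beta times the log-probability ratio between the policy and the reference model.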

Advanced Techniques: ODPO and Sample Selection

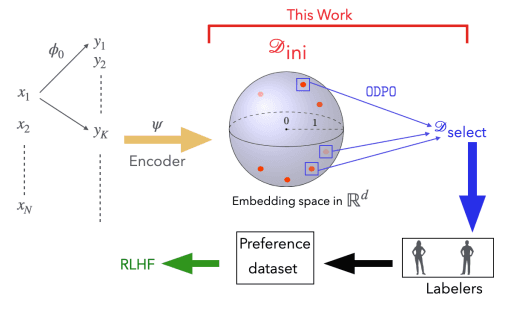

Building on probabilistic foundations like the Bradley-Terry model, techniques such as Optimal Design for Policy Optimization (ODPO) enhance the reward modeling process by addressing inefficiencies in feedback collection. ODPO formalizes feedback selection as an optimal design problem, ensuring that human annotators focus on the most informative output pairs rather than evaluating all possible pairs.

For example, ODPO uses a contextual linear dueling bandit framework to strategically identify the output pairs with the highest potential to improve the reward model. This approach can substantially reduce the cost and time of feedback collection while maintaining alignment quality. Within the RLHF workflow, ODPO fits into the feedback-collection stage, improving the model's training efficiency and scalability across large datasets.

Illustration of ODPO among the whole RLHF framework. Source: Optimal Design for Reward Modeling in RLHF
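The optimal-design idea behind ODPO can be illustrated with a classic greedy D-optimal design. In a linear Bradley-Terry reward model, comparing outputs a and b is informative along the feature difference z = phi(a) - phi(b), so each step picks the candidate pair that most increases log det(ridge*I + sum of z z^T), using the identity log det(A + z z^T) = log det A + log(1 + z^T A^-1 z). This is a simplified sketch under those assumptions, not the ODPO algorithm from the paper; the pair ids, feature vectors, and `ridge` parameter are hypothetical.

```python
def dot(u, v):
    return sum(a * b for a, b in zip(u, v))


def mat_vec(m, v):
    return [dot(row, v) for row in m]


def greedy_d_optimal_pairs(candidates, k, ridge=1.0):
    """Pick up to k pair ids whose feature differences maximize log det of the design matrix.

    candidates: dict mapping pair id -> feature-difference vector z = phi(a) - phi(b).
    """
    ids = list(candidates)
    if not ids:
        return []
    dim = len(candidates[ids[0]])
    # A starts as ridge * I, so A^-1 = I / ridge.
    a_inv = [[1.0 / ridge if i == j else 0.0 for j in range(dim)] for i in range(dim)]
    chosen = []
    for _ in range(min(k, len(ids))):
        best_id, best_gain, best_v = None, -1.0, None
        for pid in ids:
            if pid in chosen:
                continue
            v = mat_vec(a_inv, candidates[pid])  # A^-1 z
            gain = dot(candidates[pid], v)       # z^T A^-1 z; log det grows by log(1 + gain)
            if gain > best_gain:
                best_id, best_gain, best_v = pid, gain, v
        chosen.append(best_id)
        # Sherman-Morrison: (A + z z^T)^-1 = A^-1 - (A^-1 z)(A^-1 z)^T / (1 + z^T A^-1 z).
        denom = 1.0 + best_gain
        a_inv = [[a_inv[i][j] - best_v[i] * best_v[j] / denom for j in range(dim)]
                 for i in range(dim)]
    return chosen


if __name__ == "__main__":
    pairs = {"p1": [1.0, 0.0], "p2": [0.9, 0.1], "p3": [0.0, 1.0]}
    print(greedy_d_optimal_pairs(pairs, k=2))  # → ['p1', 'p3']
```

Pair p2 is skipped because its feature difference is nearly parallel to p1's, so labeling it would add little new information; this is the intuition behind sending annotators only the most informative comparisons.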

The Bradley-Terry model, along with techniques like ODPO, can play a critical role in turning human feedback into a usable training signal, bridging the gap between raw AI output and human-aligned performance.

Outcome of the RLHF Workflow

The reward model automates evaluation by generalizing from the training data to predict human preferences for unseen outputs. This reduces dependency on manual evaluation, streamlining the fine-tuning process. By training on only the most informative feedback, as in approaches like ODPO, the reward model can learn efficiently without wasting resources on redundant data points.

OpenAI’s InstructGPT: The reward model trained on human rankings helped GPT-3 produce more accurate and contextually appropriate responses, aligning better with user expectations.

Safety in Conversational AI: Reward models penalize outputs flagged for harmful or biased content, ensuring safer interactions with conversational agents like ChatGPT or Claude.

Efficient Feedback Selection: The ODPO framework optimizes dataset selection, focusing on the most informative samples to reduce labeling costs while maintaining alignment quality.

By following the stages outlined above, RLHF transforms a pre-trained language model into one that not only generates coherent text but also aligns with the criteria of being helpful, honest, and harmless. This iterative process combines the general knowledge from pretraining with the targeted alignment of human feedback, making AI systems more reliable and ethically sound for real-world applications.

RLHF Approaches: Preference Learning and Beyond

While preference learning serves as a foundational approach to Reinforcement Learning from Human Feedback (RLHF), it’s not the only method to align large language models (LLMs) with human expectations. Researchers explore various other techniques to improve model behavior, including Inverse Reinforcement Learning (IRL).

Inverse Reinforcement Learning: Understanding Human Intent Through Demonstration

Inverse Reinforcement Learning (IRL) shifts the focus from ranking preferences to understanding human intent through observed behaviors. Instead of manually annotating outputs, this technique allows the model to capture the underlying reward function based on expert demonstrations.

For instance, IRL could be used to analyze how a human responds to various conversational prompts, and the model would learn the reward structure that reflects the human's actions. This can help AI systems better understand the subtleties of human decision-making.
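As a toy illustration of the IRL idea, assume each candidate response is described by a hypothetical feature vector and that the expert picks among candidates with probability proportional to exp(w . phi), the maximum-entropy assumption. Maximizing the likelihood of the expert's observed choices gives the gradient "expert features minus expected features", so the learned weights w form a reward that explains the demonstrations. This single-step sketch is for intuition only and is not the scalable IRL method discussed in this section.

```python
import math


def softmax(scores):
    """Convert scores to probabilities (shifted by the max for numerical stability)."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def maxent_irl(demos, dim, lr=0.5, epochs=300):
    """Learn linear reward weights w from expert choices.

    demos: list of (candidate_features, expert_choice_index) tuples, where
    candidate_features is a list of feature vectors, one per candidate response.
    """
    w = [0.0] * dim
    for _ in range(epochs):
        grad = [0.0] * dim
        for candidates, choice in demos:
            probs = softmax([sum(wi * fi for wi, fi in zip(w, phi)) for phi in candidates])
            for i in range(dim):
                expected = sum(p * phi[i] for p, phi in zip(probs, candidates))
                # Log-likelihood gradient: observed expert features minus expected features.
                grad[i] += candidates[choice][i] - expected
        w = [wi + lr * gi / len(demos) for wi, gi in zip(w, grad)]
    return w


if __name__ == "__main__":
    # Hypothetical features: [politeness, length]. The expert always picks the polite reply.
    demos = [
        ([[1.0, 0.2], [0.0, 0.9]], 0),
        ([[0.0, 0.1], [1.0, 0.8]], 1),
    ]
    print(maxent_irl(demos, dim=2))  # politeness weight grows; length weight stays near 0
```

The expert chose the shorter reply once and the longer reply once, so the inferred reward assigns length almost no weight: IRL recovers what drives the choices rather than copying incidental surface features.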

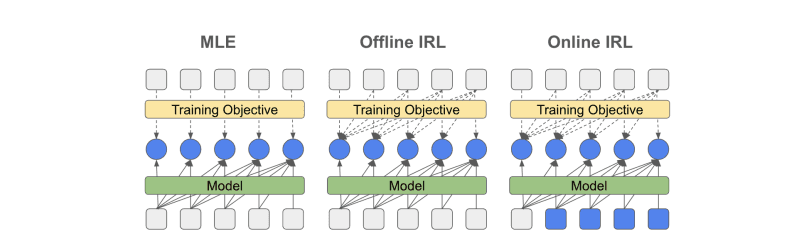

Maximum Likelihood Estimation (MLE), which is commonly used in the pretraining phase of LLMs, focuses on predicting the most likely next token in a sequence given a set of previous tokens. While MLE is foundational for generating language, it doesn't account for underlying human preferences. In contrast, IRL aims to infer those deeper motivations by observing human behavior, providing a more direct way to align the AI's actions with human values.

Data usage and optimization flow in MLE, offline and online IRL. Source: Imitating Language via Scalable Inverse Reinforcement Learning
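For contrast, the MLE objective scores a model only on how much probability it assigns to the tokens that actually appear in the training text. In the toy sketch below, which uses hypothetical hand-written next-token distributions, the loss is the average negative log-probability of the observed tokens; nothing in it refers to whether a person would prefer one continuation over another.

```python
import math


def next_token_nll(predicted_dists, observed_tokens):
    """Average negative log-likelihood of the observed tokens (the MLE training loss).

    predicted_dists[t] maps vocabulary tokens to the model's probability at
    position t; observed_tokens[t] is the token that actually came next.
    """
    if len(predicted_dists) != len(observed_tokens) or not observed_tokens:
        raise ValueError("need one predicted distribution per observed token")
    total = 0.0
    for dist, token in zip(predicted_dists, observed_tokens):
        total -= math.log(dist[token])
    return total / len(observed_tokens)


if __name__ == "__main__":
    # Hypothetical two-step prediction over a tiny vocabulary.
    dists = [{"the": 0.5, "a": 0.5}, {"cat": 0.25, "dog": 0.75}]
    print(next_token_nll(dists, ["the", "cat"]))  # (ln 2 + ln 4) / 2
```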

In the context of RLHF, IRL can be beneficial for scenarios where human feedback is hard to define. By observing experts perform tasks or engage in interactions, the model can build a nuanced understanding of optimal behavior in complex situations.

Meta-Learning for RLHF

Meta-learning, or "learning to learn," is becoming increasingly important in RLHF. It involves training models to generalize across various tasks using fewer examples, reducing the need for large-scale human feedback. This innovation helps scale RLHF to more diverse use cases by enabling the model to adapt more quickly to new environments with limited human interaction.

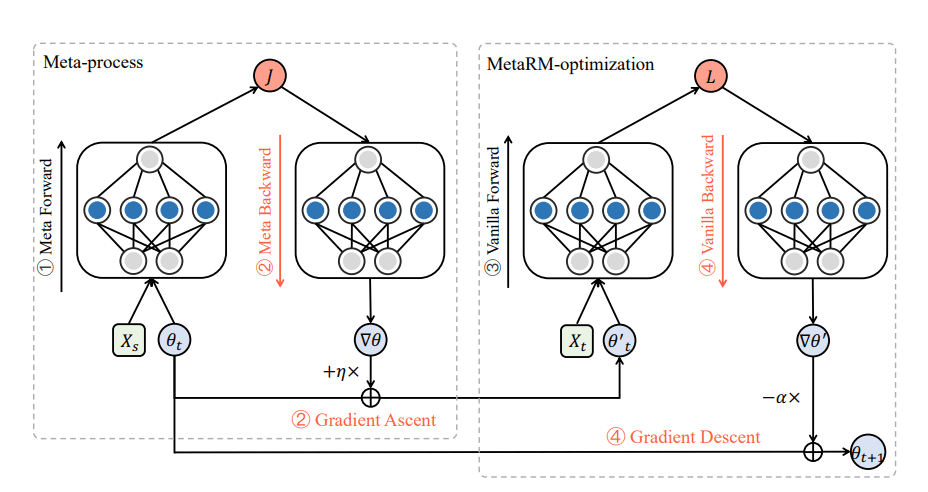

A notable example is MetaRM, a meta-learning-based approach designed to address the challenges posed by distribution shifts during the iterative optimization of reward models. As reinforcement learning progresses, the output distribution of the policy model shifts, which can reduce the reward model's ability to distinguish between responses effectively.

The proposed MetaRM approach. Its pipeline consists of four steps. Source: MetaRM: Shifted Distributions Alignment via Meta-Learning

MetaRM counters this with a meta-learning process that aligns the reward model with the shifted environment distribution. It trains the reward model on its original preference data in a way that also strengthens its ability to differentiate between responses on out-of-distribution (OOD) examples, helping it stay sensitive to subtle differences between responses.

For instance, MetaRM has been shown to improve reward models in tasks like summarization and dialogue generation by iteratively adapting them to new data distributions without requiring additional labeled data. This iterative alignment process not only enhances the reward model's robustness but also reduces the need for resource-intensive retraining on new preference data.
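The meta-learning step can be sketched for a toy linear reward model. Following our reading of the MetaRM description, each update first takes a gradient ascent step on a "difference" objective, here the within-prompt variance of rewards on responses sampled from the shifted (current policy) distribution, and then applies the ordinary Bradley-Terry preference-loss gradient, computed at those adapted parameters, to the original parameters. The variance objective, the first-order approximation, and the step sizes `eta` and `alpha` are assumptions for illustration; the paper's exact formulation may differ.

```python
import math


def dot(u, v):
    return sum(a * b for a, b in zip(u, v))


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def bt_loss_grad(w, pairs):
    """Gradient of the mean Bradley-Terry loss on (phi_chosen, phi_rejected) pairs."""
    grad = [0.0] * len(w)
    for phi_c, phi_r in pairs:
        coeff = -(1.0 - sigmoid(dot(w, phi_c) - dot(w, phi_r)))
        for i in range(len(w)):
            grad[i] += coeff * (phi_c[i] - phi_r[i]) / len(pairs)
    return grad


def difference_grad(w, groups):
    """Gradient of the mean within-prompt reward variance on shifted samples.

    groups: list of response groups; each group holds feature vectors of several
    responses to the same prompt, sampled from the current policy.
    """
    dim = len(w)
    grad = [0.0] * dim
    for responses in groups:
        n = len(responses)
        rewards = [dot(w, phi) for phi in responses]
        r_mean = sum(rewards) / n
        phi_mean = [sum(phi[i] for phi in responses) / n for i in range(dim)]
        for r, phi in zip(rewards, responses):
            for i in range(dim):
                grad[i] += 2.0 * (r - r_mean) * (phi[i] - phi_mean[i]) / len(groups)
    return grad


def metarm_step(w, pref_pairs, shifted_groups, eta=0.1, alpha=0.5):
    """One first-order, MetaRM-style update of a linear reward model w (hypothetical sketch)."""
    # Meta-process: gradient *ascent* on the difference objective (shifted data).
    g_diff = difference_grad(w, shifted_groups)
    w_prime = [wi + eta * gi for wi, gi in zip(w, g_diff)]
    # Preference loss gradient evaluated at the adapted parameters w'.
    g_pref = bt_loss_grad(w_prime, pref_pairs)
    # First-order approximation: apply the gradient taken at w' to the original w.
    return [wi - alpha * gi for wi, gi in zip(w, g_pref)]


if __name__ == "__main__":
    w = [0.0, 0.0]
    pref_pairs = [([1.0, 0.0], [0.0, 0.0])]      # hypothetical labeled preference
    shifted_groups = [[[1.0, 0.5], [0.2, 0.4]]]  # hypothetical policy samples
    for _ in range(20):
        w = metarm_step(w, pref_pairs, shifted_groups)
    print(w)
```

No labels are needed for the shifted samples: the difference objective only looks at how spread out the reward model's own scores are, which matches the claim that the adaptation requires no additional labeled data.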

Human-in-the-Loop (HITL) Models

RLHF is a Human-in-the-Loop (HITL) approach at its core. However, traditional RLHF often involves static feedback, where evaluators rank outputs or provide annotations only during the training process. Once this feedback is incorporated into the reward model, the loop typically closes, and the model enters deployment.

Modern HITL systems, on the other hand, extend RLHF by offering dynamic and real-time feedback throughout a model’s lifecycle. These systems allow human evaluators—or even end-users—to continuously refine the AI’s outputs in response to new data, use cases, or contexts.

For example, in a customer service chatbot, HITL techniques could enable human agents to intervene when the chatbot provides an inappropriate or insufficient response. These interventions not only resolve the immediate issue but also serve as real-time human guidance for future interactions.

Additionally, HITL systems can leverage active learning to prioritize which outputs or tasks require human evaluation. By focusing on uncertain or edge-case scenarios, active learning reduces the burden on human evaluators while maximizing the impact of their feedback.
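A minimal sketch of uncertainty sampling, one common active-learning heuristic: under the Bradley-Terry model, the predicted preference probability sigmoid(score_a - score_b) is closest to a coin flip when the score margin is smallest, so those pairs are routed to human reviewers first. The pair ids, scores, and the `select_for_review` helper are hypothetical; real systems may use other uncertainty signals, such as disagreement across an ensemble of reward models.

```python
import math


def preference_probability(score_a, score_b):
    """Bradley-Terry probability that response A is preferred over response B."""
    margin = score_a - score_b
    if margin >= 0:
        return 1.0 / (1.0 + math.exp(-margin))
    e = math.exp(margin)
    return e / (1.0 + e)


def select_for_review(scored_pairs, budget):
    """Return ids of the `budget` pairs whose predicted preference is least certain.

    scored_pairs: list of (pair_id, reward_score_a, reward_score_b) tuples.
    """
    # Sort by distance from a 50/50 prediction; the closest pairs are the most uncertain.
    ranked = sorted(
        scored_pairs,
        key=lambda item: abs(preference_probability(item[1], item[2]) - 0.5),
    )
    return [pair_id for pair_id, _, _ in ranked[:budget]]


if __name__ == "__main__":
    queue = [("q1", 2.0, -1.0), ("q2", 0.1, 0.0), ("q3", -0.3, 0.2), ("q4", 5.0, 0.0)]
    print(select_for_review(queue, budget=2))  # → ['q2', 'q3']
```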

Reward Shaping

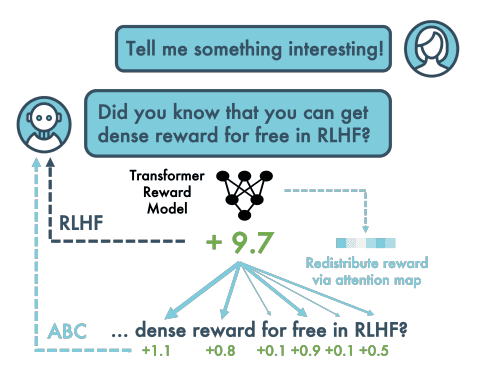

Modifying the reward function helps steer the model’s learning process. Recent advancements in reward shaping leverage dense feedback signals rather than sparse scalar rewards to stabilize and accelerate training.

For instance, an attention-based approach suggested by researchers from the University of Cambridge redistributes the reward across the tokens in a sequence using attention weights from the reward model. Each part of the model's output receives more granular feedback, and, as the authors show, the redistribution preserves the optimal policy while improving the model's ability to learn from feedback.

Illustration of the Attention-Based Credit concept. Source: Dense Reward for Free in Reinforcement Learning from Human Feedback
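A minimal sketch of the redistribution idea, under stated assumptions: the attention weights are supplied as a plain list (in the approach above they would come from the reward model), and a hypothetical mixing parameter `beta` moves part of the scalar reward onto individual tokens while keeping the rest on the final token, as in standard sparse RLHF. The per-token rewards always sum to the original scalar reward; the paper's exact weighting may differ.

```python
def attention_credit_rewards(sequence_reward, attention_weights, beta=0.5):
    """Turn one scalar reward into per-token rewards using attention weights.

    A fraction `beta` of the reward is spread over tokens in proportion to their
    normalized attention weight; the remaining (1 - beta) stays on the final
    token. The per-token rewards always sum to `sequence_reward`.
    """
    if not attention_weights:
        raise ValueError("need at least one token")
    total = sum(attention_weights)
    if total <= 0:
        raise ValueError("attention weights must have a positive sum")
    rewards = [beta * sequence_reward * a / total for a in attention_weights]
    rewards[-1] += (1.0 - beta) * sequence_reward
    return rewards


if __name__ == "__main__":
    # Hypothetical reward of 2.0 for a three-token response.
    print(attention_credit_rewards(2.0, [1.0, 1.0, 2.0]))  # → [0.25, 0.25, 1.5]
```

Setting `beta=0` recovers the usual sparse reward on the last token, while larger values give earlier tokens their own learning signal.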

Researchers are also exploring ways to make reward shaping more transparent, aligning it with human ethical concerns rather than focusing solely on performance metrics.

Final Thoughts: The Future of RLHF and Its Advancements

The field of Reinforcement Learning from Human Feedback (RLHF) continues to evolve, with researchers addressing its challenges and expanding its potential. One notable advancement is Reinforcement Learning with Generative Adversarial Feedback (RLGAF), which reimagines the feedback loop by incorporating adversarial principles.

Instead of relying on human annotators or pre-trained reward models for feedback, RLGAF uses a GAN-like architecture where a generator produces outputs, and a discriminator evaluates them. This method aims to substantially reduce human involvement during training while maintaining sample efficiency and alignment quality.

Another emerging direction is the use of self-feedback mechanisms and reward model calibration. These innovations empower AI systems to autonomously assess and refine their outputs, reducing reliance on human evaluators. Coupled with advancements in multi-agent interactions, where multiple AI systems simulate negotiation and collaboration, these techniques enhance the models' social intelligence.

While RLHF has made significant strides in aligning artificial intelligence with human values, challenges remain, particularly in managing biases, scaling feedback collection, and ensuring consistency across tasks. New approaches like RLGAF offer promising alternatives to traditional RLHF workflows, paving the way for more efficient, adaptive, and scalable AI alignment strategies.

These advancements represent a critical step toward creating AI systems that are not only powerful and capable but also deeply attuned to the ethical and practical needs of human society.