Best Architecture for Your Text Classification Task: Benchmarking Your Options

In our previous article, we covered the main approaches to building a text classifier that modern NLP offers today.

You can choose from classic TF-IDF approaches, pre-trained embedding models, and transformers of various shapes and sizes. Drawing on our own experience, we gave practical advice on which models suit the situations and use cases you are likely to encounter in your own work.

In this quick follow-up, we want to ground that advice in a concrete, real-life example: we benchmark these approaches against each other on one particular dataset.

Describing the Dataset and the Task

To illustrate our ideas, we chose the Twitter Financial News dataset, an English-language annotated corpus of finance-related tweets. It is commonly used to build models that classify finance-related tweets into a number of topics.

It is a medium-sized dataset, which is just the right amount of data to illustrate how different models perform on such tasks. It is fairly diverse, and its size allows relatively fast training and evaluation of the resulting models.

What makes this domain interesting is that financial language is usually crisp and laconic. It is full of terminology and proper nouns describing brands, terms, and related entities, which the models must learn to distinguish from common nouns with completely different meanings. Our intuition is that adapting a generic pre-trained language model to this domain should give a considerable boost to overall performance and accuracy.

The dataset contains around 21 thousand items. That is neither too small nor too large, so we expect to see both the strengths and the weaknesses of each model and approach we apply to it. We will return to this point in the conclusion, once we have the models' results.

Finally, the dataset has 20 classes, which makes the task less trivial than distinguishing among a handful of sentiment classes or emotional tones. The classes are also somewhat imbalanced: the most frequent class has more than 60 times the support of the least frequent one, which often causes some approaches to underperform. Now let's see how different models perform in our benchmark.
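Checking the imbalance yourself only takes a label count. The snippet below is a minimal sketch that assumes you have the labels as a plain Python list; the function name `imbalance_ratio` and the toy labels are hypothetical and do not reproduce the real class distribution.

```python
from collections import Counter

def imbalance_ratio(labels):
    """Return (most frequent label, least frequent label, ratio of their counts)."""
    counts = Counter(labels).most_common()  # [(label, count), ...] sorted by count, descending
    (top_label, top_count), (bottom_label, bottom_count) = counts[0], counts[-1]
    return top_label, bottom_label, top_count / bottom_count

# Toy labels for illustration only
toy_labels = ["a"] * 120 + ["b"] * 30 + ["c"] * 2
print(imbalance_ratio(toy_labels))  # ('a', 'c', 60.0)
```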

Describing the Approach

Based on our previous article, we chose FastText, BERT, RoBERTa (including a variant with second-stage, domain-specific tuning), and GPT-3 to assess their performance and efficiency on this dataset. We split the dataset into train and test sets (16.5K and 4.5K items, respectively), trained the models on the train set, and measured their performance and efficiency (in terms of inference time) on the test set.
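The text does not say how the split was drawn, so what follows is only one reasonable option, sketched under an assumption: a stratified split that keeps each class's share roughly equal in train and test, which matters when the rarest class has few examples. `stratified_split` is a hypothetical helper, and `test_fraction=0.21` simply approximates the 4.5K/21K ratio.

```python
import random
from collections import defaultdict

def stratified_split(items, labels, test_fraction=0.21, seed=42):
    """Split examples into train/test lists of (item, label) pairs, class by class."""
    by_class = defaultdict(list)
    for item, label in zip(items, labels):
        by_class[label].append(item)
    rng = random.Random(seed)  # fixed seed keeps the split reproducible
    train, test = [], []
    for label, class_items in by_class.items():
        rng.shuffle(class_items)
        n_test = round(len(class_items) * test_fraction)  # same share of every class goes to test
        test.extend((item, label) for item in class_items[:n_test])
        train.extend((item, label) for item in class_items[n_test:])
    return train, test
```

Without stratification, a class with only a few dozen examples could end up almost entirely in one of the two sets by chance.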

To train a FastText model, we used the fastText library and its command-line tool. After preparing the dataset (for example, inserting each label into its text with the proper prefix), we ran the fasttext supervised command to train a classifier. Producing a model took a couple of minutes on a CPU-only machine. The next command, fasttext predict, gave us predictions for the test set so we could evaluate the model's performance.
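fastText's supervised mode expects one example per line, with the label attached to the default `__label__` prefix and the text after it. A minimal preparation sketch follows; the helper names and file paths are hypothetical, and replacing spaces in labels with underscores is our assumption (fastText splits tokens on whitespace, so a multi-word label would otherwise break apart).

```python
def to_fasttext_line(text, label, prefix="__label__"):
    """Format one example as '<prefix><label> <text>' for fastText supervised training."""
    clean_label = "_".join(label.split())  # labels must be a single whitespace-free token
    clean_text = " ".join(text.split())    # each example must fit on one line
    return f"{prefix}{clean_label} {clean_text}"

def write_fasttext_file(path, texts, labels):
    """Write a whole split to disk in fastText's input format."""
    with open(path, "w", encoding="utf-8") as f:
        for text, label in zip(texts, labels):
            f.write(to_fasttext_line(text, label) + "\n")
```

With files prepared this way, a run matching the settings we describe below could look like `fasttext supervised -input train.txt -output model -epoch 30 -lr 0.5 -wordNgrams 1 -dim 100`, followed by `fasttext predict model.bin test_texts.txt`; `fasttext test model.bin test.txt` reports precision and recall at 1 on a labeled file.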

As for transformers, we compared three slightly different models: BERT (more precisely, bert-base-uncased), RoBERTa-large, and an adapted version of the latter tuned for sentiment prediction on a couple of finance-related datasets (you can find this model on the Hugging Face website). We ran our experiments with the transformers library, though it requires you to write some code to actually run training and evaluation. Training ran on a single machine with an A100 GPU and took about 20-28 minutes per model until the early stopping conditions were met. We stored the trained models in an MLflow registry for further use.

To train a classifier based on GPT-3, we followed the official documentation on the OpenAI website and used the corresponding command-line tool to submit training data, track training progress, and make predictions for the test set (more precisely, completions, the term that better suits generative models). We did not need any specific hardware, since all the actual work happens on OpenAI's servers; we created this cloud-based model from a regular laptop. We trained two GPT-3 variants, Ada and Babbage, to see whether their performance differs notably. Training a classifier with these settings takes about 40-50 minutes.

As for hyperparameters, we used the following settings. The fastText model was trained for 30 epochs with a learning rate of 0.5; other parameters were kept at their defaults, which you can look up in the official documentation. Note, though, that we used only word unigrams and set the word vector size to 100. The transformer models were trained with a learning rate of 5e-6, a batch size of 32, and 32-bit floating-point precision. With a fixed patience of 5 epochs, the training procedure automatically kept the best checkpoint for each model (the best validation scores came at the third or fourth epoch, so those checkpoints were used to produce predictions). For GPT-3, we did not set any specific hyperparameters and relied on OpenAI's defaults, which are listed on the corresponding documentation page.
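OpenAI's legacy GPT-3 fine-tuning workflow took training data as JSONL prompt/completion pairs, and its guidance for classification suggested ending every prompt with a fixed separator and starting each completion with a space, ideally using a class label that maps to a single token. The sketch below builds such records under those assumptions; the separator string, the use of integer class ids as completions, and the helper names are illustrative choices, not necessarily what was used for this benchmark.

```python
import json

SEPARATOR = "\n\n###\n\n"  # assumed fixed prompt terminator, following the legacy guidance

def to_finetune_record(text, class_id):
    """Build one JSONL line in the legacy prompt/completion fine-tuning format."""
    return json.dumps({
        "prompt": text + SEPARATOR,
        # leading space plus a short class code keeps the target short (ideally one token)
        "completion": f" {class_id}",
    })

def write_jsonl(path, texts, class_ids):
    """Write one record per line, as the JSONL format requires."""
    with open(path, "w", encoding="utf-8") as f:
        for text, class_id in zip(texts, class_ids):
            f.write(to_finetune_record(text, class_id) + "\n")
```

In the legacy CLI of that period, such a file was submitted with a command like `openai api fine_tunes.create -t train.jsonl -m ada`; the current OpenAI fine-tuning API differs, so check its documentation before reusing this format.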
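The patience rule is easy to misread, so here is a minimal sketch of it in plain Python: replay per-epoch validation scores, remember the best epoch, and stop once the score has not improved for `patience` epochs in a row. The function and the scores in the usage line are hypothetical; in the transformers library the same idea is available through `EarlyStoppingCallback(early_stopping_patience=...)` together with `load_best_model_at_end=True`, though we don't claim that is exactly how our runs were configured.

```python
def early_stopping(val_scores, patience=5):
    """Return (best_epoch, stop_epoch), both 1-indexed, for a sequence of validation scores."""
    best_score, best_epoch = float("-inf"), 0
    for epoch, score in enumerate(val_scores, start=1):
        if score > best_score:
            best_score, best_epoch = score, epoch  # this checkpoint becomes "the best" one
        elif epoch - best_epoch >= patience:
            return best_epoch, epoch  # no improvement for `patience` epochs: stop here
    return best_epoch, len(val_scores)  # ran out of epochs before patience was exhausted

# Hypothetical validation F1 per epoch: best at epoch 3, training stops at epoch 8
print(early_stopping([0.80, 0.85, 0.88, 0.87, 0.86, 0.85, 0.84, 0.83]))  # (3, 8)
```

This is why a best checkpoint at the third or fourth epoch still means training ran for several more epochs before stopping.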

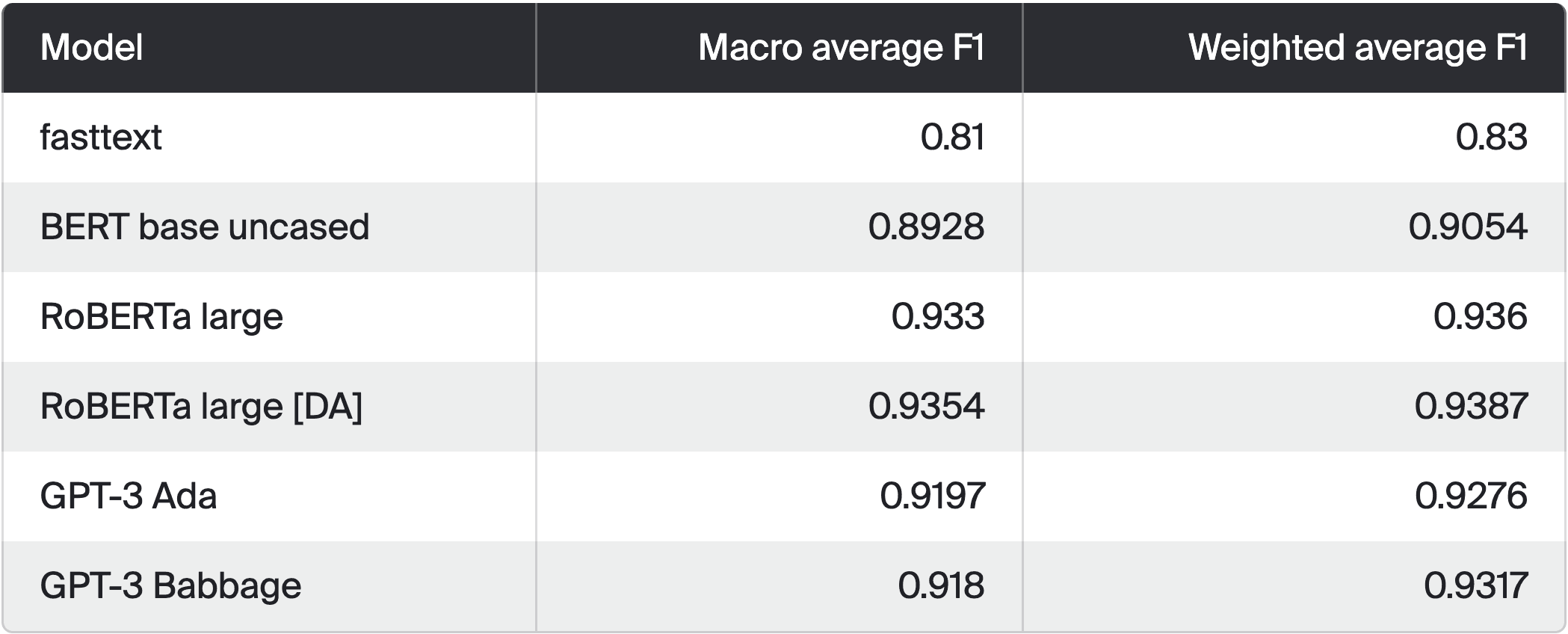

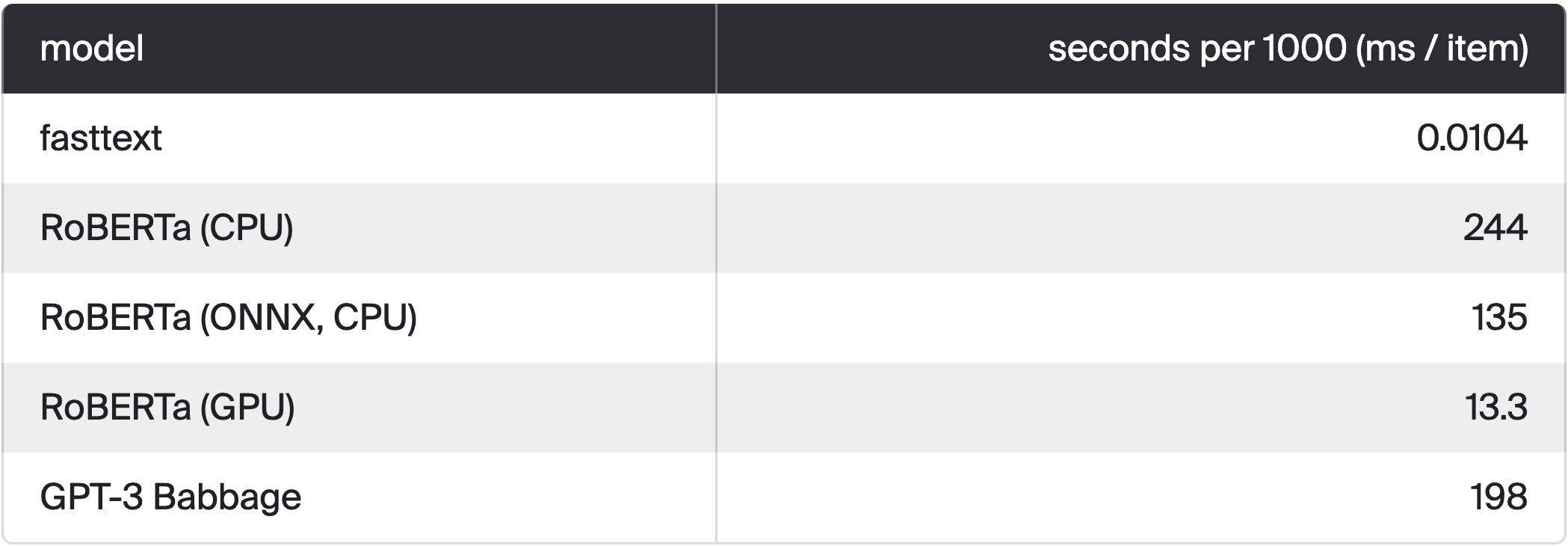

After training, we evaluated all the models on the test set to obtain classification metrics. We compared models by macro-averaged F1 and weighted-average F1: both combine precision and recall, and comparing the two shows whether the dataset's imbalance affects the results, since macro F1 weights every class equally while weighted F1 weights each class by its support. We also compared inference speed, in milliseconds per item with a batch size of 1. For RoBERTa, we also include an ONNX-optimized version, as well as inference on an A100 GPU. To assess GPT-3's speed, we measured the average response time of our fine-tuned Babbage model (note that OpenAI applies rate limits, so the speed you observe may be lower or higher depending on your terms of use).
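To make the difference between the two averages concrete, here is a minimal plain-Python sketch; `f1_scores` is a hypothetical helper. In practice you would likely call scikit-learn's `f1_score` with `average="macro"` or `average="weighted"`; note that scikit-learn's macro average also counts labels that appear only in the predictions, whereas this sketch averages over the classes present in `y_true`.

```python
from collections import Counter

def f1_scores(y_true, y_pred):
    """Return (macro_f1, weighted_f1) over the classes present in y_true."""
    classes = sorted(set(y_true))
    support = Counter(y_true)
    per_class = {}
    for c in classes:
        tp = sum(1 for t, p in zip(y_true, y_pred) if t == c and p == c)
        fp = sum(1 for t, p in zip(y_true, y_pred) if t != c and p == c)
        fn = sum(1 for t, p in zip(y_true, y_pred) if t == c and p != c)
        precision = tp / (tp + fp) if tp + fp else 0.0
        recall = tp / (tp + fn) if tp + fn else 0.0
        per_class[c] = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    macro = sum(per_class.values()) / len(classes)  # every class counts equally
    weighted = sum(per_class[c] * support[c] for c in classes) / len(y_true)  # frequent classes count more
    return macro, weighted
```

When the weighted score is clearly higher than the macro score, the model is doing well on frequent classes and poorly on rare ones, which is exactly the imbalance effect we wanted to watch for.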

Describing the Results

Now let's discuss the results. We collected all of them in a couple of tables, and below we walk through our conclusions and the effects we observed.

The first thing that catches our eye is that fastText indeed performed far worse. In all fairness, though, it took the least computational resources, time, and setup to train, so it gives us a useful lower-bound baseline for evaluating all the other models.

Next, the transformers. As expected, RoBERTa outperforms BERT. It is easy to attribute this to RoBERTa-large simply being larger than BERT, and larger models generally do better on domain-specific classification tasks like this one. To be fair, we deliberately chose the large RoBERTa architecture for this comparison; the base RoBERTa model might well have performed on par with BERT, even though the two differ in their training corpora and training procedures.

Moreover, the tangible gap between BERT's and RoBERTa's F1 scores might be due to the fairly large number of classes and the dataset's imbalance, which larger models tend to capture better. This is only our intuition, though, and more experiments would be needed to confirm it. You can also see that the domain-pretrained RoBERTa gave us a tiny boost in accuracy, but it is rather insignificant, and we can't say that seeking out this domain-tuned model was worthwhile for our experiment.

Now comes GPT-3. We selected Ada and Babbage to draw a fair comparison with BERT and RoBERTa-large, since their parameter counts grow nicely and gradually (from 110M parameters in BERT to 355M in RoBERTa-large, 2.7B in Ada, and 6.7B in Babbage). This lets us see whether model size keeps delivering performance gains or whether performance plateaus somewhere. Surprisingly, as you can see, Ada and Babbage give almost the same metrics, and both actually lose to RoBERTa even without domain-specific pre-training.

There is a reason for this. The API-accessible GPT-3 models expose a generative interface: they classify each example by predicting the token that names its class. RoBERTa and the other models from the transformers library, by contrast, have their last layers configured specifically for classification (a classification head whose softmax returns a probability for every class for any item you pass in). So yes, the huge GPT-3 may be able to classify into 1 of 20 classes well enough by generating the right class token, but that seems like slight overkill for such a task. However, let's not forget that the GPT-3 model is fine-tuned and accessed with literally 3 lines of code, unlike RoBERTa, which you have to deploy on your own infrastructure with varying amounts of sweat.
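The contrast between the two interfaces can be sketched in a few lines. A classification head always yields one of the known classes, because it takes the argmax over a probability distribution; a generative model returns free text that has to be parsed back into a class and can fail to match any of them. This sketch assumes completions that are integer class ids; all function names and inputs are hypothetical.

```python
import math

def softmax(logits):
    """Turn raw classifier logits into class probabilities (numerically stable)."""
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]

def predict_with_head(logits):
    """Classification head: the most probable class, always a valid class index."""
    probs = softmax(logits)
    return max(range(len(probs)), key=probs.__getitem__)

def predict_from_completion(completion, num_classes):
    """Generative model: parse the generated text back into a class id, or None if it is not one."""
    words = completion.split()
    if words and words[0].isdigit() and int(words[0]) < num_classes:
        return int(words[0])
    return None  # the model generated something that is not one of the classes
```

For example, `predict_with_head([0.1, 2.0, -1.0])` returns `1`, while `predict_from_completion(" bullish", 20)` returns `None`, a failure mode the classification head simply does not have.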

Finally, let's compare these models (and their inference setups) by request execution speed. We don't train models just for accuracy; we also need to consider how quickly they return predictions for the new data we feed them. We timed online synchronous requests to each model to understand which scenario each one fits best.

Here, fastText is the absolute winner. However, its accuracy leaves us no choice but to look at the other models on our list. Okay, moving on.
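A per-item latency measurement at batch size 1 can be as simple as timing each call with a monotonic high-resolution clock. The sketch below is an assumption-laden illustration: `predict` stands for any callable (a local model, an ONNX session wrapper, or an HTTP client), and the warm-up calls are our own addition to keep one-time costs such as lazy initialization or connection setup out of the average.

```python
import statistics
import time

def latency_ms_per_item(predict, items, warmup=3):
    """Mean wall-clock latency in milliseconds of calling `predict` on one item at a time."""
    for item in items[:warmup]:
        predict(item)  # warm-up calls are excluded from the measurement
    timings = []
    for item in items:
        start = time.perf_counter()
        predict(item)
        timings.append((time.perf_counter() - start) * 1000.0)
    return statistics.mean(timings)
```

For remote APIs, the mean hides tail latency, so it can be worth also looking at a high percentile of `timings` if your use case is latency-sensitive.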

Comparing the RoBERTa setups with GPT-3, we see that GPT-3, despite being the largest model among them, responds relatively fast, especially since its response time includes a network round trip to the API endpoint, so the time the model actually spends computing its prediction is fairly small. That is obviously good, especially given how simple this solution is to set up: fine-tuning the model and calling it from your project take little effort. It can get expensive, especially if you plan to send a lot of data frequently, but at that point it becomes a cost-benefit trade-off for your task.

Among the RoBERTa setups, the obvious winner is the GPU-hosted version. GPUs clearly give inference a huge speed boost, but again, hosting your model server on GPU machines might cost more than your project can afford, and setting up a GPU-based model server can be tricky, especially if you haven't done it before.

Keep in mind, though, that all of these setups are still pretty fast at returning results. Do some arithmetic and break down how you plan to use these models in your project: will it be real-time inference or asynchronous batch requests, will you access the model over the internet or within your own local network, and is there any other overhead from your business logic on top of the model response? All of this can add significantly more time to each request than the model inference itself. So be mindful of the requirements and limitations of your end use case.
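The cost side of that trade-off is simple arithmetic for any pay-per-token API. The sketch below uses placeholder numbers only; the price is not OpenAI's actual pricing, and `monthly_api_cost` is a hypothetical helper, so plug in your provider's current rates and your own traffic estimates.

```python
def monthly_api_cost(requests_per_day, tokens_per_request, price_per_1k_tokens, days=30):
    """Rough monthly spend for a pay-per-token API; every input is your own estimate."""
    tokens_per_month = requests_per_day * tokens_per_request * days
    return tokens_per_month / 1000 * price_per_1k_tokens

# Placeholder inputs: 10,000 requests/day, 50 tokens each, 0.002 (currency units) per 1K tokens
print(monthly_api_cost(10_000, 50, 0.002))  # 30.0
```

Comparing that figure with the monthly cost of a self-hosted CPU or GPU server gives a first-order answer to which option is cheaper at your traffic level.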
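Here is a minimal sketch of that arithmetic: sum the per-request components and derive how many requests a single synchronous worker can serve per second. The component names and millisecond values are hypothetical, not our measurements.

```python
def latency_budget(components_ms):
    """Return (total ms per request, max requests per second for one synchronous worker)."""
    total_ms = sum(components_ms.values())
    # a single worker handling one request at a time serves at most 1000 / total_ms requests per second
    return total_ms, 1000.0 / total_ms

# Hypothetical per-request components, in milliseconds
budget = {"network_round_trip": 20.0, "model_inference": 15.0, "business_logic": 5.0}
total, rps = latency_budget(budget)
print(f"{total:.0f} ms per request, about {rps:.0f} requests/s per worker")
# 40 ms per request, about 25 requests/s per worker
```

In this made-up example, model inference is well under half of the total, so speeding up the model alone would not change the end-to-end picture much.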

Conclusions and Ideas to Follow Up

If you are still with us, great; let's draw some conclusions together. We tried to give you a vivid real-life example of the balance between how hard it is to run various models, the accuracy they achieve, and how quickly they respond once deployed. Figuring out which approach is optimal for the task at hand is obviously not easy, but we hope to leave you with a guideline on what to consider and when. Unfortunately, GPT models are not a silver bullet for every task you will face, and sometimes every one of us has to count our money and be smart about how we spend it, even in machine learning.

On our side, at Toloka, we are working hard on a platform that lets users like you train, deploy, and use a transformer like RoBERTa with the same 3-API-call simplicity as the GPT-3 API. It might come in very handy in your next text classification project; you can check out our free beta here: www.tolokamodels.tech

In the next article, we will run a couple more experiments and investigate how to mitigate the effects of imbalanced datasets, for example by up- or downsampling some classes to bring more balance to the data. We have an intuition that in this case the generative GPT-3 approach may actually perform better than RoBERTa-large. We will also discuss how ONNX optimization can be implemented for GPU-based model servers to give you a lightning-fast boost in inference speed. Stay tuned for more interesting topics from the Toloka ML team blog.
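As a preview of the simplest balancing technique mentioned above, here is a minimal sketch of random oversampling: examples from smaller classes are duplicated at random until every class matches the largest one. `random_oversample` is a hypothetical helper, and this is not a result or a method from our experiments. It should be applied only to the training split, since duplicating test examples would distort the evaluation.

```python
import random
from collections import defaultdict

def random_oversample(items, labels, seed=0):
    """Return a shuffled list of (item, label) pairs where every class has the size of the largest class."""
    by_class = defaultdict(list)
    for item, label in zip(items, labels):
        by_class[label].append(item)
    target = max(len(class_items) for class_items in by_class.values())
    rng = random.Random(seed)  # fixed seed keeps the resampling reproducible
    balanced = []
    for label, class_items in by_class.items():
        # draw extra copies, with replacement, from the existing examples of this class
        extra = [rng.choice(class_items) for _ in range(target - len(class_items))]
        balanced.extend((item, label) for item in class_items + extra)
    rng.shuffle(balanced)
    return balanced
```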