Unveiling the Dynamics: Introduction to Diffusion Models for Business

The buzz surrounding artificial intelligence in recent years has reached unprecedented levels, with the spotlight often shining on the remarkable capabilities of diffusion models for machine learning. These models, including notable image-generative platforms like DALL-E, Midjourney, and Stable Diffusion, have captivated the imagination of professional and general audiences alike.

Pure experiments with cutting-edge AI technologies are engaging, but the true value lies in their practical application across diverse industries. Simply marveling at their complexity can hardly drive tangible results. In this article, we delve beyond the hype to explore the real-world implications of diffusion models for businesses.

DALL-E, announced by OpenAI in a blog post on 5 January 2021, demonstrated the ability to combine two unrelated ideas for object generation. (Source: OpenAI)

We’ll start with a brief introduction to diffusion models and then list practical use cases in different domains. Finally, we’ll focus on what a particular business needs to succeed in its diffusion model-based project.

What Are Diffusion Models?

Diffusion models excel at generating data that closely mirrors their training dataset. Such a model learns by adding incremental Gaussian noise to the training data and then mastering the process of reversing this noise to reconstruct the original information. Once trained, diffusion models produce synthetic data by passing randomly sampled noise through their learned denoising mechanism.

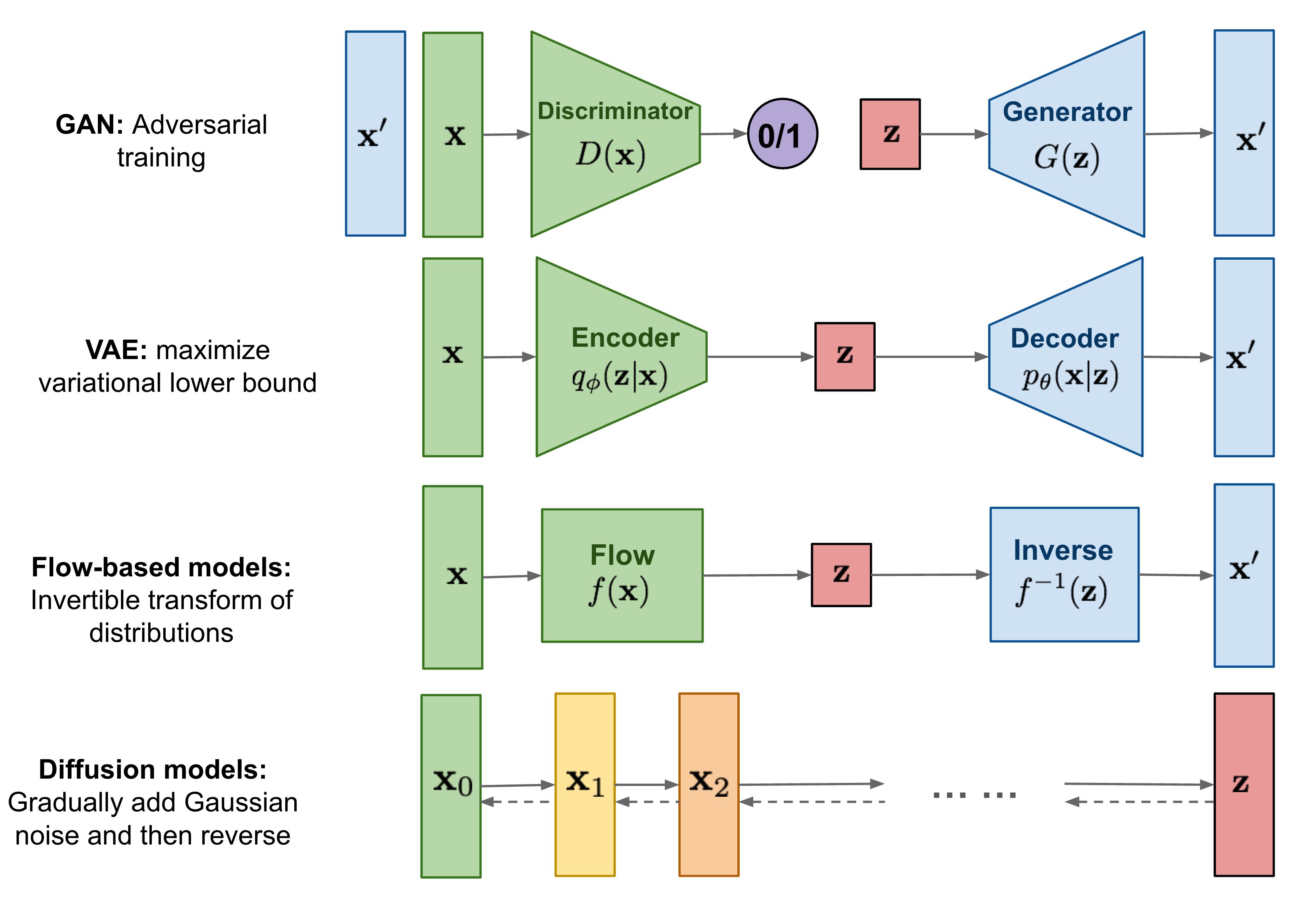

Diffusion models in comparison to other types of generative models. (Source: Lil’Log)

This capability enables diffusion models to generate realistic synthetic data, including high-resolution images that closely resemble the originals used during training.

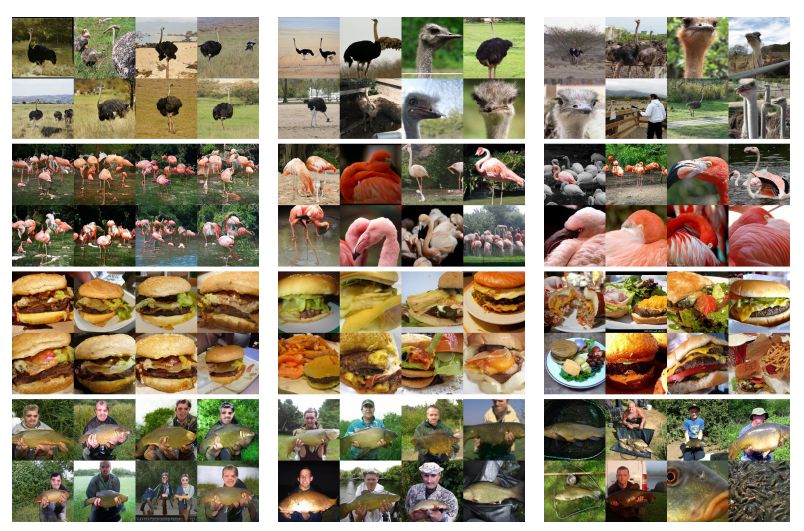

Image generation by a diffusion probabilistic model trained on the unconditional CIFAR10 dataset. (Source: Denoising Diffusion Probabilistic Models by Jonathan Ho et al.)

Diffusion models have gained prominence for their remarkable ability to generate realistic (or surrealistic) images. However, their versatility extends beyond image creation, encompassing data denoising, text generation, market prediction, and audio and video synthesis. These models create invaluable tools for enhancing data quality and generating diverse media content, constantly drawing technical experts' attention to explore their applications across multiple domains.

Comparison of image samples produced by a GAN and a diffusion model vs. a training set. (Source: Diffusion Models Beat GANs on Image Synthesis)

Brief Theory of Diffusion Models

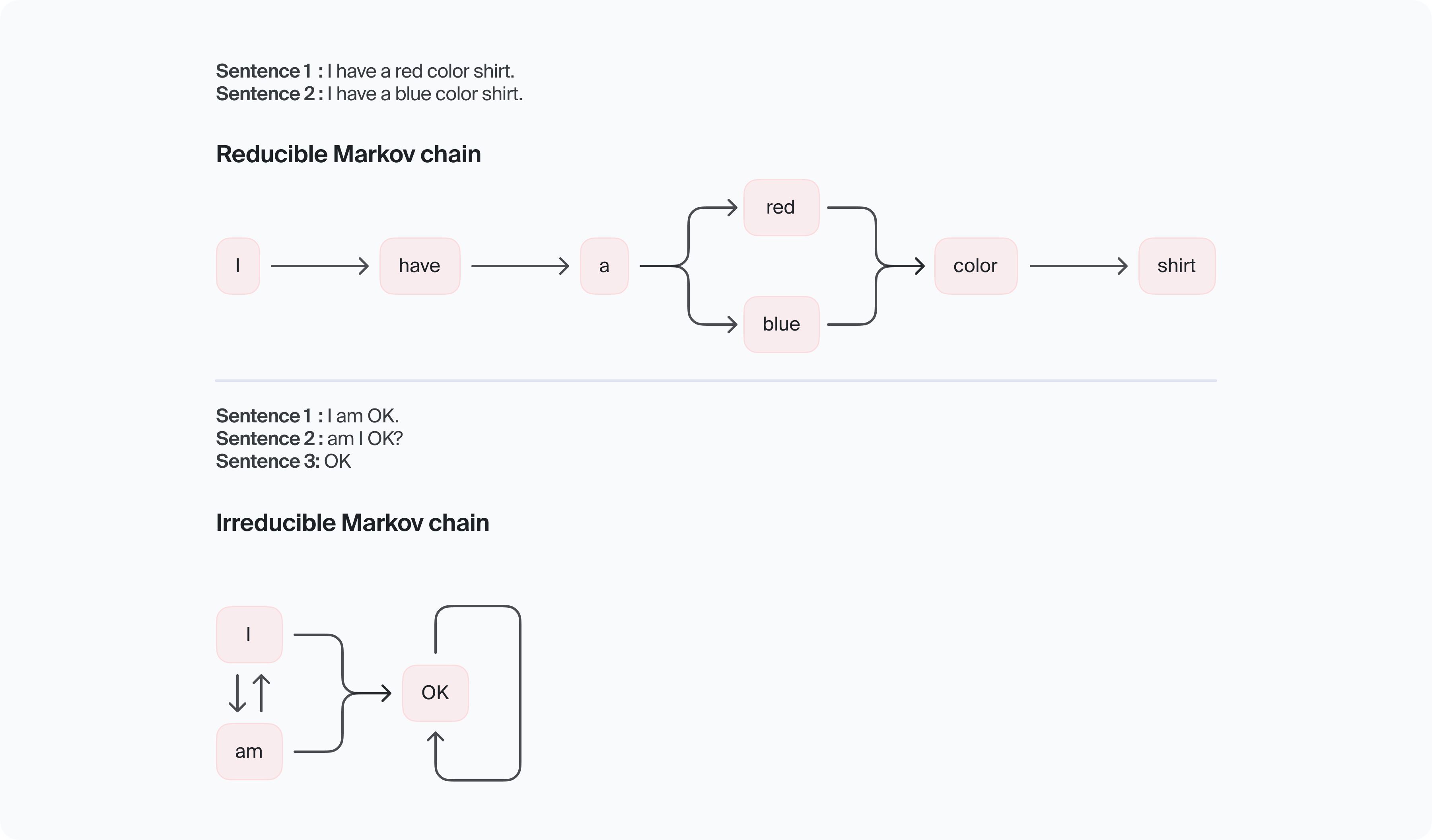

Diffusion models, inspired by non-equilibrium thermodynamics, simulate the gradual diffusion of randomness within data to generate novel samples. At their core, they rely on probability theory's fundamental concept of Markov chains.

A Markov chain is a mathematical model that describes a sequence of events where the probability of each event depends only on the state attained in the previous one, making it memoryless.

Examples of Markov chains. (Source: Analytics Vidhya)
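To make the memoryless property concrete, here is a minimal sketch of a two-state Markov chain. The weather states and transition probabilities are hypothetical and chosen only for illustration; the point is that `next_state` looks at nothing but the current state.

```python
import random

# Hypothetical two-state chain; the states and probabilities are
# illustrative assumptions, not taken from the article.
TRANSITIONS = {
    "sunny": {"sunny": 0.8, "rainy": 0.2},
    "rainy": {"sunny": 0.4, "rainy": 0.6},
}

def next_state(current, rng):
    """Sample the next state using only the current state (memorylessness)."""
    states = list(TRANSITIONS[current])
    weights = [TRANSITIONS[current][s] for s in states]
    return rng.choices(states, weights=weights, k=1)[0]

def simulate(start, steps, seed=0):
    """Return the start state followed by `steps` sampled transitions."""
    rng = random.Random(seed)
    path = [start]
    for _ in range(steps):
        path.append(next_state(path[-1], rng))
    return path

trajectory = simulate("sunny", steps=10)
print(trajectory)
```

Diffusion models use the same idea, except that each "state" is a noisy version of the data rather than a label.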

In the context of diffusion models, a Markov chain is employed to iteratively add Gaussian noise to the input data, creating a series of diffusion steps. Each step in the diffusion process alters the data slightly, introducing randomness and gradually transforming the original input into a new, synthetic sample.
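The noising chain described above can be sketched in a few lines. This is a toy illustration rather than a production implementation: the "image" is a flat list of 500 identical pixel values, and the linear schedule from 1e-4 to 0.02 over 1,000 steps is an assumed setting (it mirrors a common choice in the literature, but any increasing schedule would serve).

```python
import math
import random

def linear_betas(num_steps, beta_start=1e-4, beta_end=0.02):
    """Linearly spaced noise variances, one per diffusion step (assumed schedule)."""
    if num_steps == 1:
        return [beta_start]
    step = (beta_end - beta_start) / (num_steps - 1)
    return [beta_start + i * step for i in range(num_steps)]

def forward_step(x_prev, beta, rng):
    """One Markov step: x_t = sqrt(1 - beta) * x_{t-1} + sqrt(beta) * noise."""
    keep = math.sqrt(1.0 - beta)
    spread = math.sqrt(beta)
    return [keep * v + spread * rng.gauss(0.0, 1.0) for v in x_prev]

def forward_diffusion(x0, betas, seed=0):
    """Apply every step in order and return the whole trajectory."""
    rng = random.Random(seed)
    trajectory = [list(x0)]
    for beta in betas:
        trajectory.append(forward_step(trajectory[-1], beta, rng))
    return trajectory

betas = linear_betas(1000)
x0 = [1.0] * 500                      # toy "image": 500 pixels, all set to 1.0
trajectory = forward_diffusion(x0, betas)
final = trajectory[-1]
mean = sum(final) / len(final)
var = sum((v - mean) ** 2 for v in final) / len(final)
print(f"after {len(betas)} steps: mean={mean:.3f}, variance={var:.3f}")
```

Each step depends only on the previous one, and by the end of the chain the original signal is essentially gone: the values behave like draws from a standard Gaussian.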

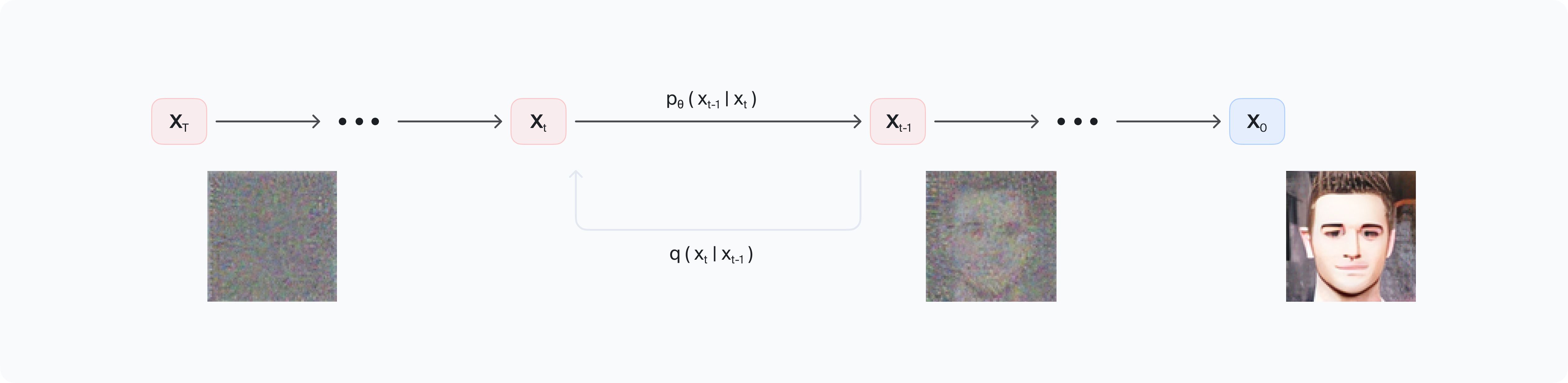

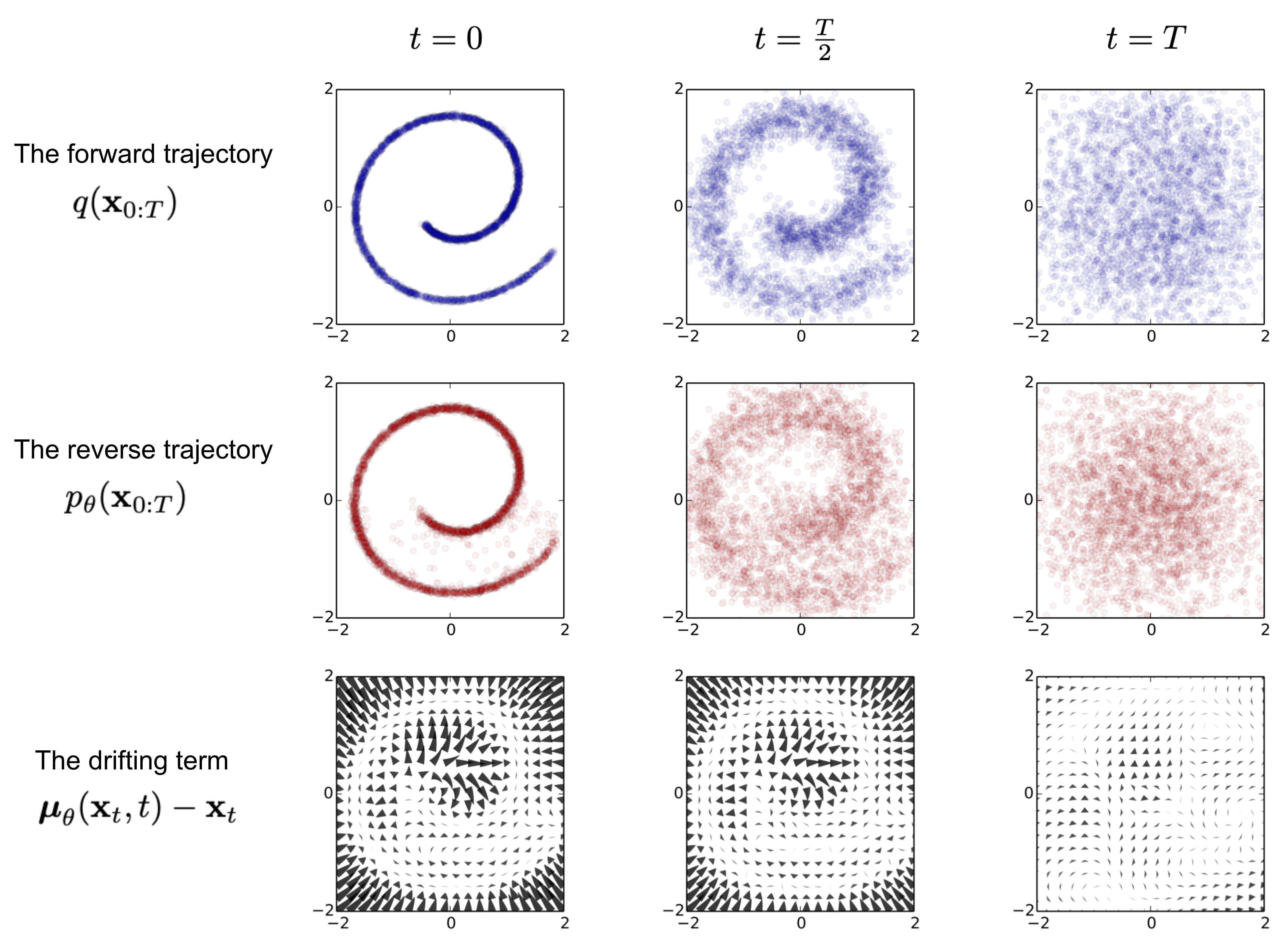

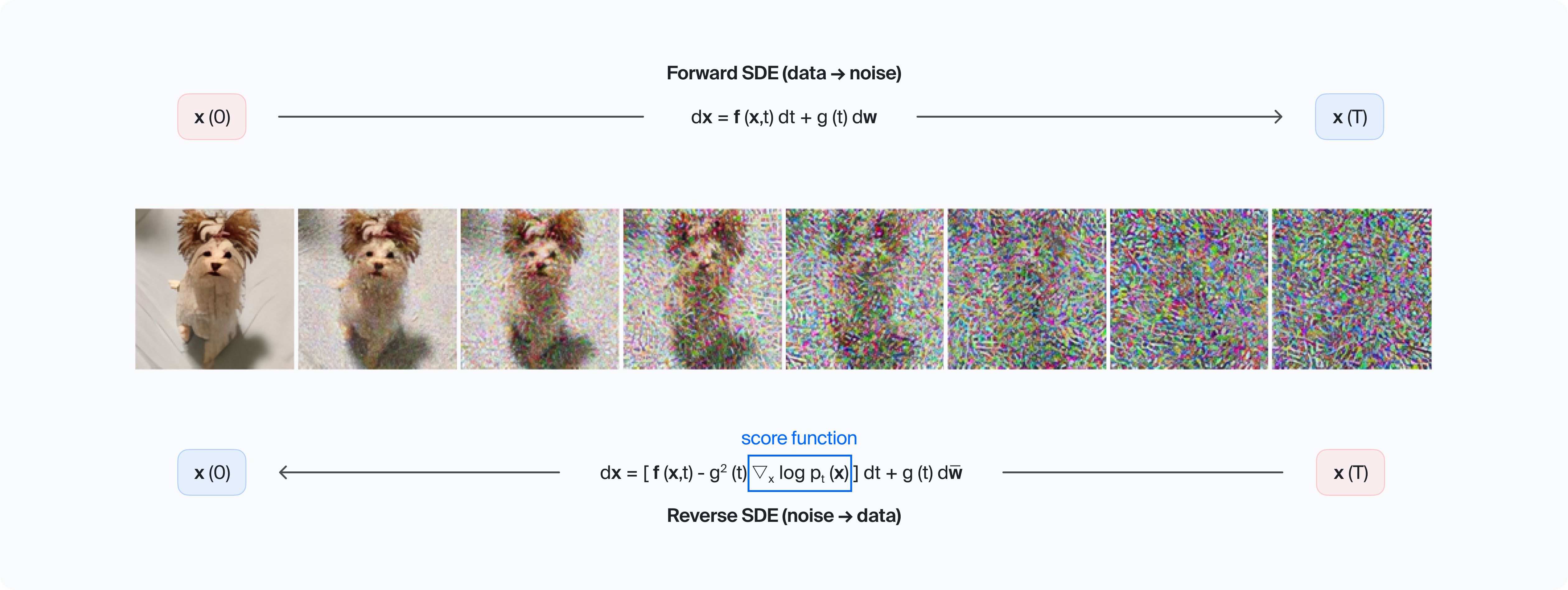

Like other generative models, a diffusion model starts generation from a sample composed entirely of noise, which it then gradually denoises. As the graphical model below illustrates, the forward diffusion process, a Markov chain denoted q, progressively adds Gaussian noise to a source image until only "pure noise" remains. The reverse diffusion process, denoted pθ, then removes the noise step by step, culminating in a noise-free image.
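For readers who want the notation behind q and pθ, the two processes are commonly written as follows. The symbols β_t (the noise variance at step t), μ_θ and Σ_θ (the learned mean and covariance), and T (the number of steps) are not defined in this article and are introduced here as assumptions following the standard DDPM formulation.

```latex
q(x_t \mid x_{t-1}) = \mathcal{N}\!\left(x_t;\ \sqrt{1-\beta_t}\,x_{t-1},\ \beta_t I\right),
\qquad
q(x_{1:T} \mid x_0) = \prod_{t=1}^{T} q(x_t \mid x_{t-1})

p_\theta(x_{t-1} \mid x_t) = \mathcal{N}\!\left(x_{t-1};\ \mu_\theta(x_t, t),\ \Sigma_\theta(x_t, t)\right),
\qquad
p_\theta(x_{0:T}) = p(x_T) \prod_{t=1}^{T} p_\theta(x_{t-1} \mid x_t)
```

The forward chain q has no learned parameters, while the reverse chain is where the neural network, with parameters θ, does its work.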

The directed graphical model considered in the broadly cited work ‘Denoising Diffusion Probabilistic Models’ by Ho, et al. from Berkeley (Source: ArXiv in PDF)

This iterative process allows diffusion models to strike a delicate balance between fidelity to the original data and creating novel yet plausible samples.

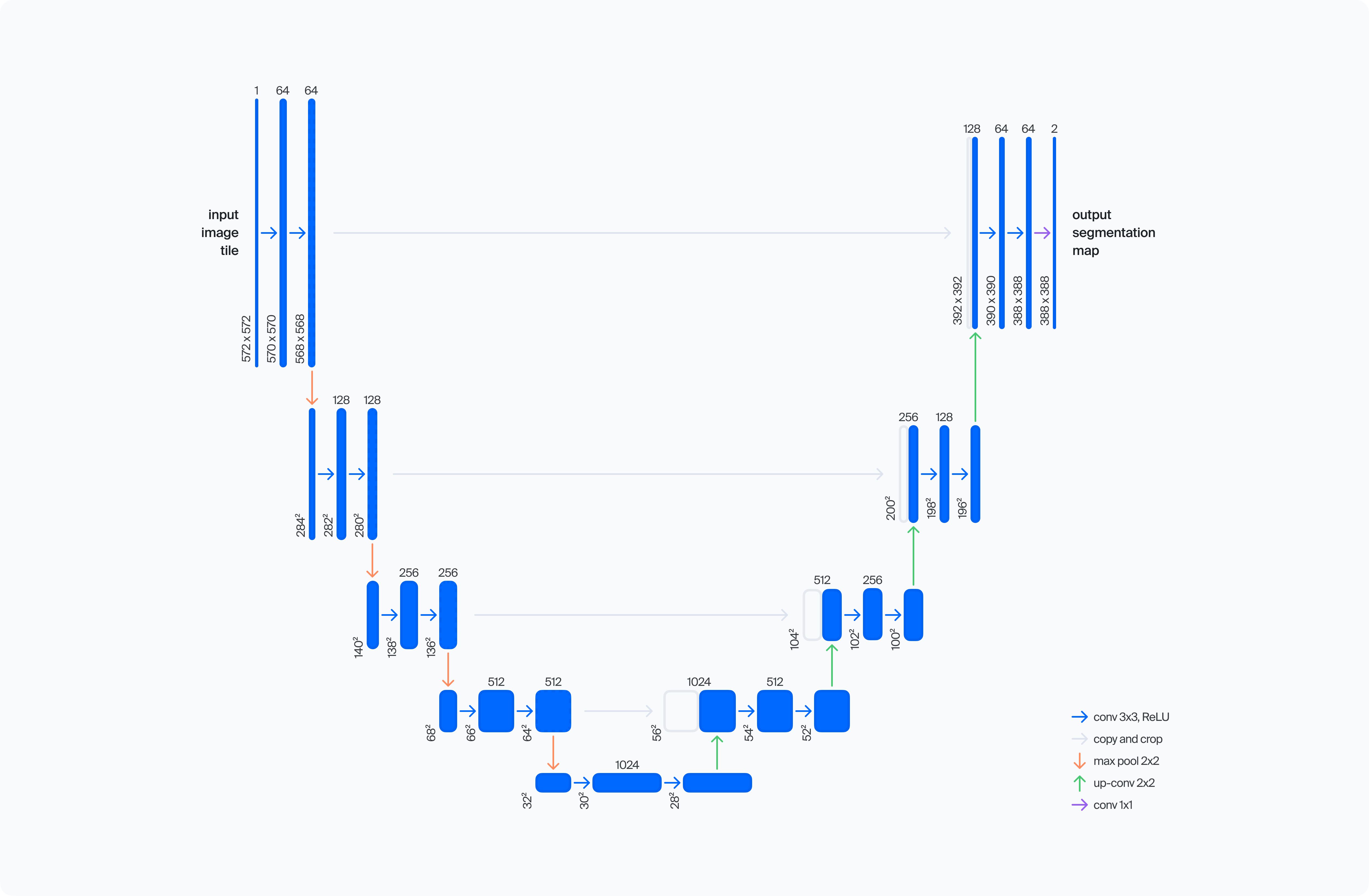

Multiple diffusion models leverage the U-Net architecture as their foundation. Originating from the field of biomedical image segmentation, it is characterized by its unique U-shaped design, featuring symmetric encoder and decoder pathways linked by skip connections, facilitating the precise reconstruction of high-resolution images from noisy inputs.

The original U-Net architecture from the article U-Net: Convolutional Networks for Biomedical Image Segmentation by Ronneberger et al. (Source: ArXiv in PDF)

In the illustration, the left-hand side comprises down-sampling layers, while the right-hand side encompasses up-sampling layers, with skip connections linking features between the downsampled and upsampled paths.
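The role of the skip connections can be shown with a deliberately simplified, one-dimensional sketch. It has no learned weights: averaging neighbours stands in for down-sampling layers, repeating values stands in for up-sampling layers, and averaging the up-sampled signal with the stored encoder signal is a hypothetical stand-in for the "concatenate, then convolve" merge of a real U-Net. The input length is assumed to be divisible by 2 to the power of `depth`.

```python
def downsample(signal):
    """Halve the resolution by averaging neighbouring pairs."""
    return [(signal[i] + signal[i + 1]) / 2.0 for i in range(0, len(signal), 2)]

def upsample(signal):
    """Double the resolution by repeating each value."""
    return [value for value in signal for _ in range(2)]

def toy_unet_pass(signal, depth=2, use_skips=True):
    """Run a U-shaped pass: encode, then decode with optional skip connections."""
    skips = []
    x = list(signal)
    for _ in range(depth):              # left-hand side of the U
        skips.append(x)                 # remember the full-detail features
        x = downsample(x)
    for _ in range(depth):              # right-hand side of the U
        x = upsample(x)
        skip = skips.pop()              # features from the matching encoder level
        if use_skips:
            # Stand-in for "concatenate + learned convolution" in a real U-Net.
            x = [(a + b) / 2.0 for a, b in zip(x, skip)]
    return x

def mean_squared_error(a, b):
    return sum((p - q) ** 2 for p, q in zip(a, b)) / len(a)

signal = [0.0, 1.0, 0.0, 1.0, 4.0, 5.0, 4.0, 5.0]
with_skips = toy_unet_pass(signal, use_skips=True)
without_skips = toy_unet_pass(signal, use_skips=False)
print("error with skips:   ", mean_squared_error(signal, with_skips))
print("error without skips:", mean_squared_error(signal, without_skips))
```

Without the skips, the decoder only sees the coarse bottleneck and returns a blocky signal; with them, fine detail lost during down-sampling is partly restored, which is why the design suits reconstruction from noisy inputs.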

Historical Overview of Diffusion Model Research

Diffusion models represent a contemporary advancement in the field of deep learning. Originating from the principles of nonequilibrium thermodynamics, they have gained prominence for their unique approach to generating synthetic data by perturbing and recovering training data.

The 2015 paper "Deep Unsupervised Learning using Nonequilibrium Thermodynamics" marked a significant milestone in this trajectory. Its authors, Sohl-Dickstein et al., demonstrated the potential of diffusion models to reverse noise perturbations and construct novel data samples.

An example of a modeling framework trained on a 2D swiss roll from the paper by Sohl-Dickstein et al. (Source: ArXiv in PDF)

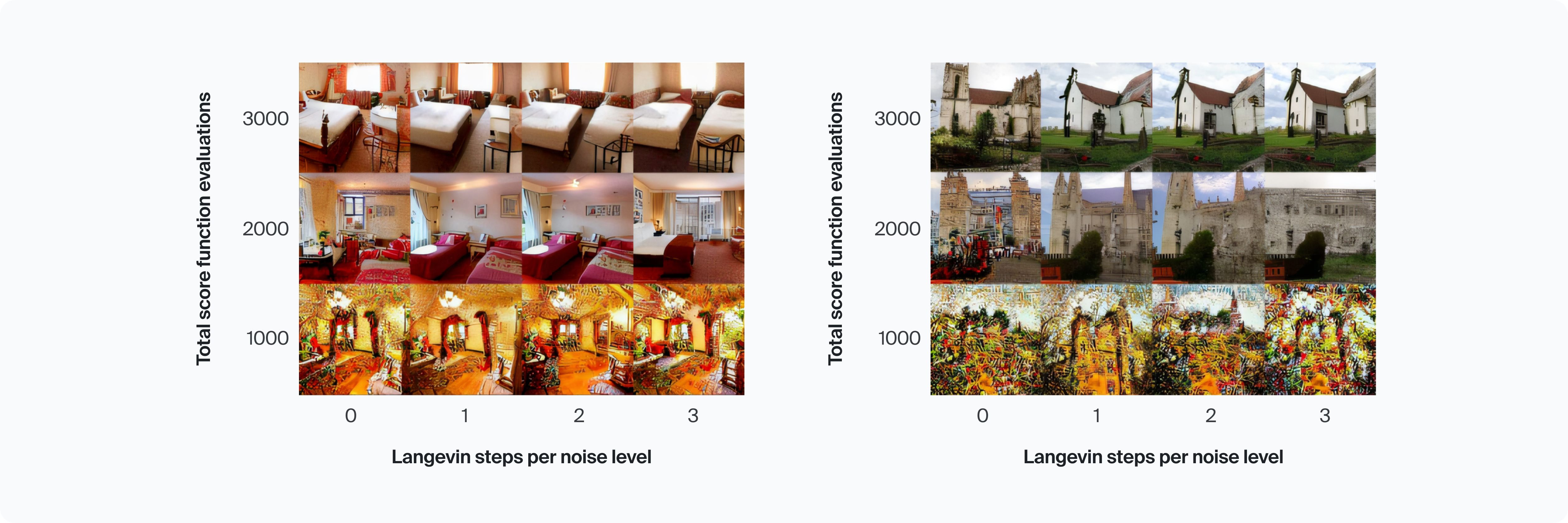

Around 2019, Song et al. made significant contributions to the field with their work on score-based generative modeling. Their research focused on learning generative models by estimating the score, that is, the gradient of the log data density, through score matching rather than direct maximum likelihood estimation, leading to advancements in the training and evaluation of diffusion models.

In 2020, Ho et al. showed in "Denoising Diffusion Probabilistic Models" that diffusion models trained by optimizing a variational bound, the Evidence Lower Bound (ELBO), can generate high-quality images. The ELBO, a long-established tool for variational inference and Bayesian modeling, remains critical for training and optimizing diffusion models.

In 2021, Song and colleagues presented a framework based on stochastic differential equations (SDEs) that uses neural networks to estimate the score and unifies score-based generative modeling with diffusion probabilistic modeling. Experimental results demonstrated superior performance, achieving record-breaking image generation on the CIFAR-10 dataset.

PC sampling for LSUN bedroom and church. (Source: Score-Based Generative Modeling through Stochastic Differential Equations by Song et al.)

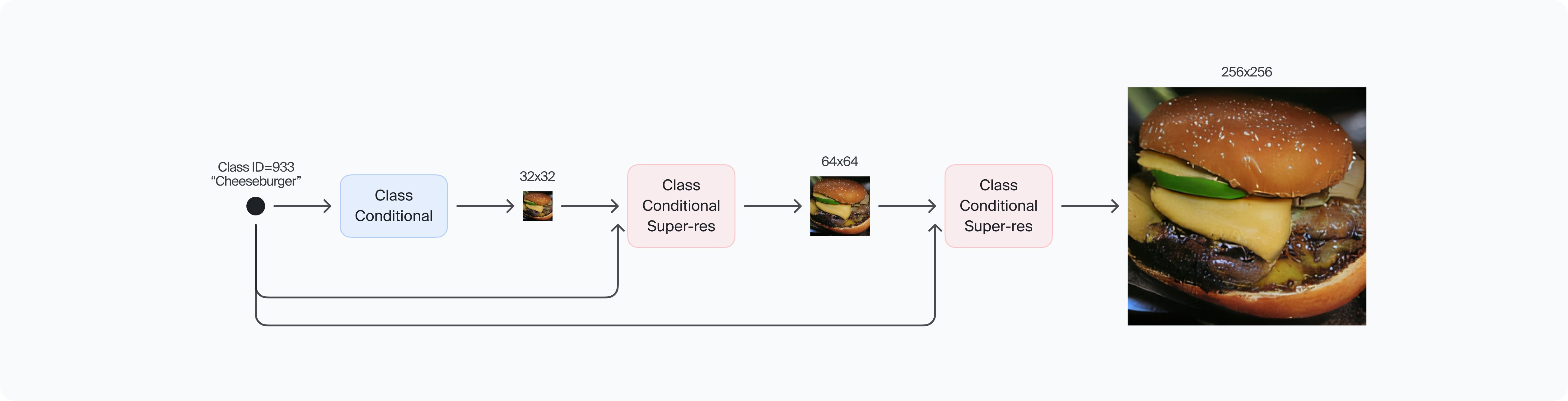

Also in 2021, Ho and colleagues introduced cascaded diffusion models to generate images. This approach involves a sequential pipeline of diffusion models, each generating progressively higher-resolution images. Each model improves on the samples of its predecessor by upsampling them and adding finer details.

Detailed CDM pipeline for generation of class conditional 256×256 images. (Source: Cascaded Diffusion Models for High Fidelity Image Generation by Ho et al.)

Throughout its history, diffusion model research has continuously evolved, driven by a quest to develop more accurate, scalable, and interpretable generative models.

(a) Timeline of notable developments (non-exhaustive) in diffusion modeling. (b) The number of per-month and accumulative papers in diffusion models in the last 12 months based on Google Scholar search (as of October 2022). (Source: Efficient Diffusion Models for Vision: A Survey)

Researchers and practitioners constantly look for new ways of speeding up diffusion, suggesting various optimization methods and architectural solutions.

How Do Diffusion Models Work?

The workflow of a diffusion model involves two key phases: the forward diffusion process and the reverse diffusion process. During the forward process, Gaussian noise is gradually added to the input data. During the reverse process, the model learns to reconstruct the original data by removing the added noise.

Perturbing an image with multiple scales of Gaussian noise. (Source: Yang Song’s blog)

Training diffusion models requires a large dataset of existing samples. For instance, it needs to process thousands of images of cats of various breeds, sizes and colors to start generating new pictures of cats.

The training of diffusion models for image generation is initiated by inputting a genuine high-dimensional image into an encoder, which converts it into a reduced-dimensional representation. Essentially, this process condenses the initial sample into a numerical vector, encapsulating solely its fundamental attributes. Subsequently, the image is embedded within the latent space, where each point corresponds to a specific data characteristic. For instance, concerning images of cats, these points may relate to their size, color, or breed.

Then, the image undergoes diffusion until it becomes pure noise where the original sample is totally unrecognizable. After that, the reverse diffusion process denoises the vector and sends it to an image decoder, converting the numerical representation back to an image sample.

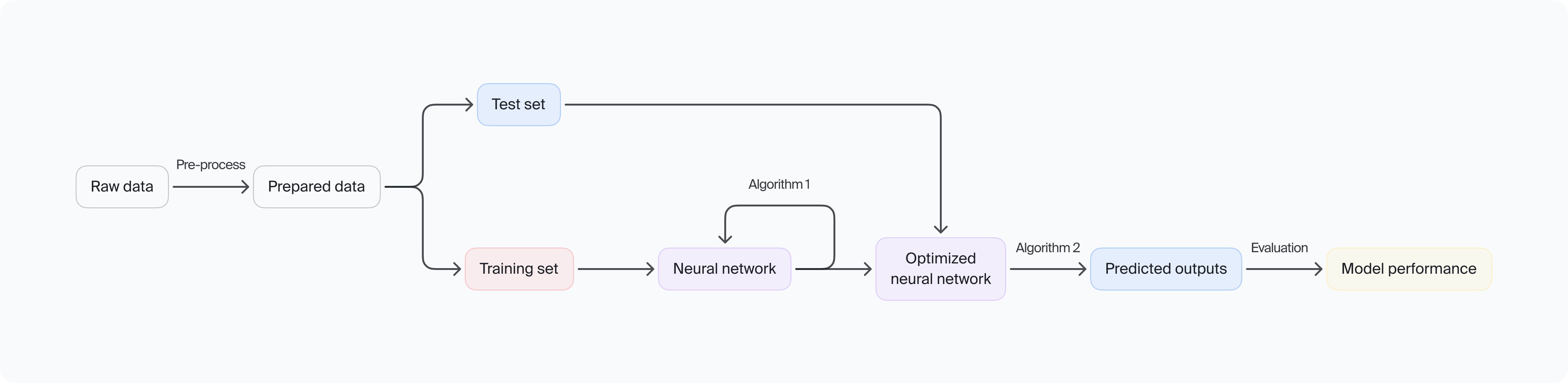

During the training phase, the diffusion model learns to predict the input data distribution from noisy images. It refines its own parameters through optimization algorithms and learns to convert data points in the latent space into images that closely resemble the original data fed to it.

The model moves through several training stages. Each aims to improve its ability to generate realistic samples and minimize the reconstruction error between the original and reconstructed data.
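One common way to turn this training goal into a number is a noise-prediction loss: corrupt a clean sample to a random step, ask the model to guess the noise that was added, and measure the squared error. The sketch below assumes that formulation; `predict_noise` is a hypothetical stand-in for the neural network, and the linear schedule is an assumed setting.

```python
import math
import random

def alpha_bars(betas):
    """Cumulative products of (1 - beta): the share of signal left at each step."""
    out, running = [], 1.0
    for beta in betas:
        running *= 1.0 - beta
        out.append(running)
    return out

def noisy_sample(x0, a_bar, eps):
    """Jump straight to step t: x_t = sqrt(a_bar) * x0 + sqrt(1 - a_bar) * eps."""
    return [math.sqrt(a_bar) * v + math.sqrt(1.0 - a_bar) * e
            for v, e in zip(x0, eps)]

def noise_prediction_loss(predict_noise, x0, betas, rng):
    """Mean squared error between the true noise and the model's guess."""
    a_bars = alpha_bars(betas)
    t = rng.randrange(len(betas))                 # random diffusion step
    eps = [rng.gauss(0.0, 1.0) for _ in x0]       # the noise to be recovered
    x_t = noisy_sample(x0, a_bars[t], eps)
    predicted = predict_noise(x_t, t)
    return sum((p - e) ** 2 for p, e in zip(predicted, eps)) / len(eps)

def zero_predictor(x_t, t):
    """Placeholder for an untrained network: always guesses 'no noise'."""
    return [0.0] * len(x_t)

betas = [1e-4 + i * (0.02 - 1e-4) / 999 for i in range(1000)]
x0 = [0.5] * 256                                  # toy clean sample
rng = random.Random(0)
loss = noise_prediction_loss(zero_predictor, x0, betas, rng)
print(f"loss of the untrained stand-in: {loss:.3f}")
```

In a real project, an optimizer would repeat this computation over many samples and steps, adjusting the network's parameters until the loss stops improving.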

A practical project using diffusion models requires several key components:

A high-quality dataset relevant to the target domain.

Sufficient computational resources.

A clear training pipeline, including data preprocessing and optimization techniques.

In practical projects, the forward diffusion process takes the input data and applies a series of diffusion steps to create a noisy version of it. During the reverse diffusion process, the trained model removes the added noise step by step and, using its learned parameters, generates a high-fidelity sample.
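The reverse process can be sketched as a loop that starts from pure noise and repeatedly subtracts the predicted noise. The example below follows the DDPM-style update under stated assumptions: a linear noise schedule, the step variance set equal to beta, and, in place of a trained network, a hypothetical `oracle` that knows the answer because the toy "dataset" consists of a single value, `TARGET`.

```python
import math
import random

def make_schedule(num_steps, beta_start=1e-4, beta_end=0.02):
    """Return (betas, alpha_bars) for an assumed linear noise schedule."""
    betas = [beta_start + i * (beta_end - beta_start) / (num_steps - 1)
             for i in range(num_steps)]
    a_bars, running = [], 1.0
    for beta in betas:
        running *= 1.0 - beta
        a_bars.append(running)
    return betas, a_bars

def sample(predict_noise, size, betas, a_bars, seed=0):
    """Start from pure noise and denoise one step at a time."""
    rng = random.Random(seed)
    x = [rng.gauss(0.0, 1.0) for _ in range(size)]
    for t in reversed(range(len(betas))):
        eps = predict_noise(x, t)
        coef = betas[t] / math.sqrt(1.0 - a_bars[t])
        mean = [(v - coef * e) / math.sqrt(1.0 - betas[t]) for v, e in zip(x, eps)]
        if t > 0:
            sigma = math.sqrt(betas[t])           # one common variance choice
            x = [m + sigma * rng.gauss(0.0, 1.0) for m in mean]
        else:
            x = mean                              # the final step adds no noise
    return x

TARGET = 0.7                                      # toy dataset: every value is 0.7
betas, a_bars = make_schedule(1000)

def oracle(x_t, t):
    """Exact noise for the toy dataset; a trained network would approximate this."""
    scale = math.sqrt(1.0 - a_bars[t])
    return [(v - math.sqrt(a_bars[t]) * TARGET) / scale for v in x_t]

generated = sample(oracle, size=4, betas=betas, a_bars=a_bars)
print(generated)
```

With a perfect noise predictor, the loop lands on the training value up to floating-point error. A trained network only approximates that predictor, which is where sample diversity and imperfections come from.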

Process of image generation from a text prompt. (Source: Stable diffusion models explained with code)

Overall, diffusion models offer a robust framework for generating synthetic data that closely resembles real-world samples. By implementing the forward and reverse diffusion process, practitioners can leverage these models to tackle many practical projects, from image generation to data denoising and molecule design.

With a suitable dataset, computational resources, and training pipeline, diffusion models hold immense potential for solving complex real-world challenges in multiple industries.

Advantages of Diffusion Models

Diffusion models have advantages over Generative Adversarial Networks (GANs) and other alternative methodologies. They allow users to vary the diversity and detail of the synthetic data, train stably, and perform accurately across a variety of tasks. The benefits of diffusion models include:

High-quality output: Synthetic images, sounds, formulas, and process descriptions created by diffusion models can closely mirror data they were trained on. They can also consider complicated text prompts and include nuanced details out of reach for other methodologies.

Stability: The training process of diffusion models tends to be more stable than that of GANs. Likelihood-based learning is reliable and less prone to mode collapse and similar issues.

Scalability: Diffusion models show high quality results when working with high-dimensional data. For instance, this allows them to handle tasks of compression and expansion of large-resolution images.

Adaptivity: Diffusion models can generate realistic samples even when they have to deal with incomplete input data. The ability to handle the issue of missing information allows them to adapt to complex scenarios.

Confidentiality: Diffusion models offer invertible transformation, enabling synthetic data generation without compromising the original information fed to them. It means a lot in domains with essential privacy focus like healthcare or finance.

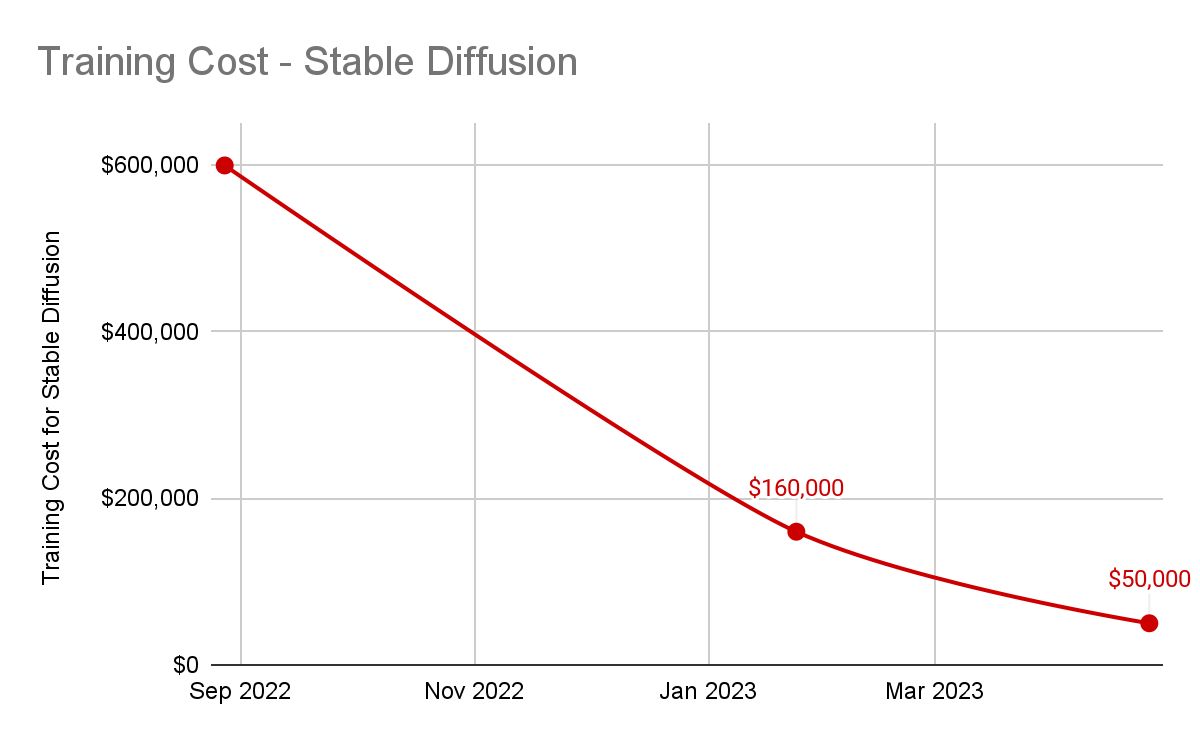

The price for implementing AI tends to come down, and diffusion models are in the vanguard of the trend. (Source: Unsupervised Learning)

Diffusion Model Concerns

The widespread adoption and applicability of diffusion models are not without constraints. Their deployment and integration require considering at least some fundamental limitations.

Data Dependency: Diffusion models heavily rely on extensive and diverse training data. While the latter ensures the models' robustness, acquiring high-quality data can be tricky and sometimes impractical, particularly in specialized domains.

Resource Consumption: Complex computations can strain resources, necessitating access to high-performance infrastructure or cloud services. Such intensive requirements may be financially challenging and limit diffusion models' accessibility to individuals and smaller organizations.

Interpretability: Compared to traditional ML models, which offer clear insights into their decision-making processes, diffusion models lack transparency. This makes it challenging to understand the rationale behind their predictions or outputs. This lack of interpretability poses significant hurdles, particularly in critical domains such as healthcare.

Long-Term Dependencies: Diffusion models may falter in scenarios where information from a distant past significantly influences the outcome. This weak point can limit diffusion models' performance in tasks requiring temporal understanding and context preservation.

While diffusion models demonstrate remarkable capabilities in various machine learning tasks, it is essential to acknowledge and address these limitations to enhance their practical utility and foster broader adoption across diverse domains.

Diffusion Model Categories

All diffusion models work on shared principles. They always add random noise to training data and then remove it to create similar yet original new samples. However, there are several distinctive diffusion model types, each with specific features and peculiarities.

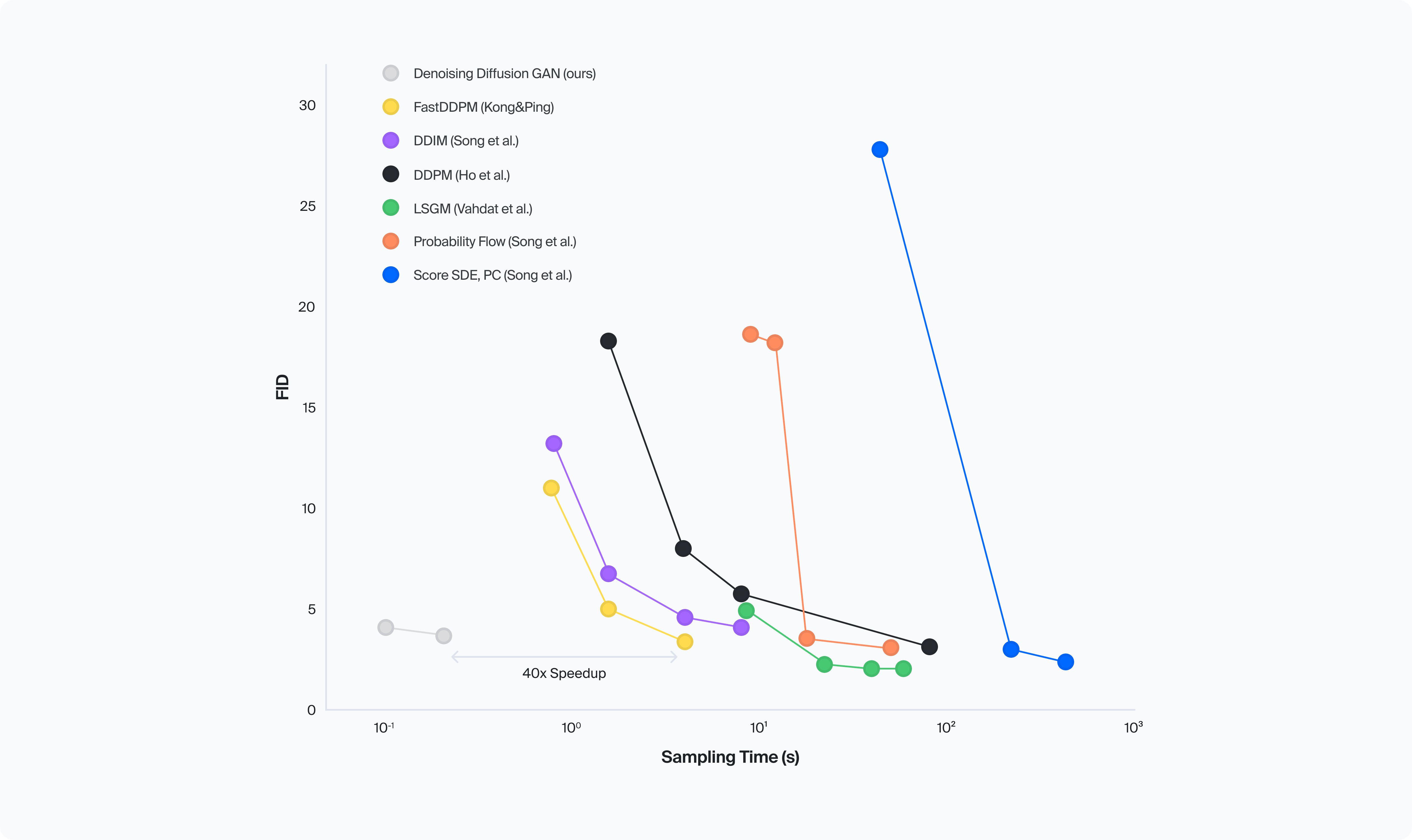

Sample quality vs. sampling time for different diffusion-based generative models (Source: NVIDIA Developer)

We’ll review three of them based on different mathematical frameworks and give a brief overview of their alternative classification.

Denoising Diffusion Probabilistic Models (DDPMs)

Denoising Diffusion Probabilistic Models (DDPMs) are at the forefront of generative models. They are primarily used to alleviate noise from visual or audio data. Their versatile applications span domains, encompassing tasks like image quality enhancement, detail restoration, and file size reduction.

For instance, the entertainment industry harnesses advanced image generation and video processing techniques to integrate realistic backgrounds into movies seamlessly.

DDPMs inherit their core principles from Diffusion Probabilistic Models (DPMs), which model the propagation of uncertainty or probability distributions across data points. This enables probabilistic inference and quantification of uncertainty, offering a structured framework for capturing dependencies and interactions within complex data distributions.

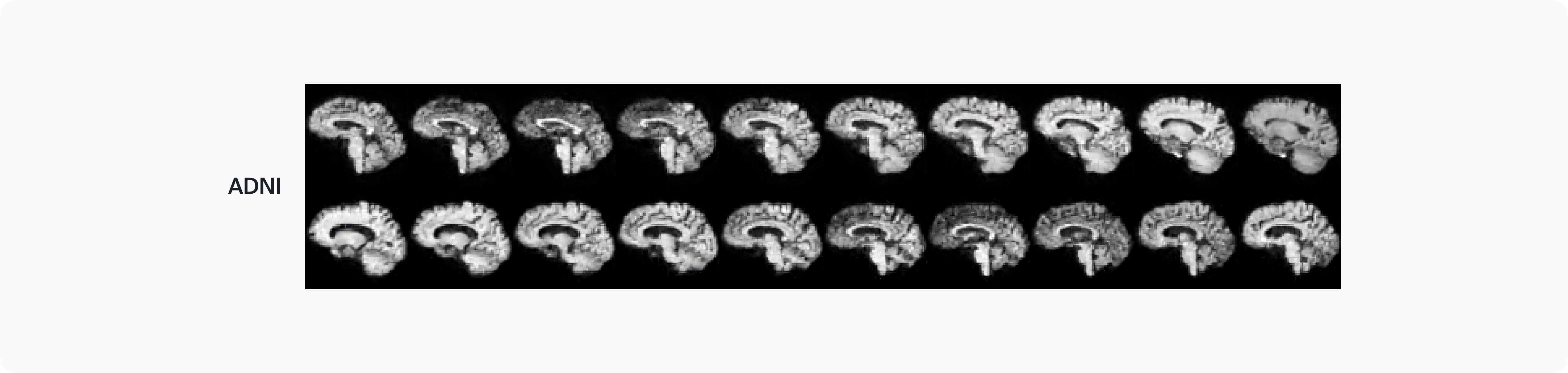

Medical DDPM-generated synthetic images of brain MRI scans. (Source: Nature)

Through parameterizing conditional distributions over variables within a probabilistic graphical model framework, DPMs incorporate diffusion processes like random walks or Markov chains to facilitate the propagation of uncertainty and information flow.

However, DDPMs represent just one instantiation of diffusion models, and they have unique characteristics. Because sampling has to traverse the entire Markov chain, generating new samples can be slower than with alternative generative models such as Generative Adversarial Networks (GANs).

Recognizing this limitation, extensive research endeavors have been undertaken to mitigate this challenge. One notable solution is Denoising Diffusion Implicit Models (DDIM), where the traditional Markov chain is replaced with a non-Markovian process, resulting in expedited sampling.

Church samples from 100 step DDPM and 100 step DDIM. (Source: Denoising Diffusion Implicit Models)

This innovation addresses the computational bottleneck associated with DDPMs, offering a more efficient approach to generative modeling.
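A rough sketch of how DDIM-style sampling saves time: instead of visiting every step of the chain, it visits an evenly spaced subset and uses a deterministic update (the "eta = 0" variant). The schedule, the even spacing, and the `oracle` noise predictor for a single-value toy dataset are all assumptions made for illustration; a trained network would take the oracle's place.

```python
import math
import random

def make_alpha_bars(num_steps, beta_start=1e-4, beta_end=0.02):
    """Cumulative signal share under an assumed linear noise schedule."""
    a_bars, running = [], 1.0
    for i in range(num_steps):
        beta = beta_start + i * (beta_end - beta_start) / (num_steps - 1)
        running *= 1.0 - beta
        a_bars.append(running)
    return a_bars

def ddim_sample(predict_noise, size, a_bars, num_sampling_steps, seed=0):
    """Deterministic sampling over a subset of steps; returns (sample, steps used)."""
    rng = random.Random(seed)
    stride = max(1, len(a_bars) // num_sampling_steps)
    steps = list(range(len(a_bars) - 1, -1, -stride))   # most to least noisy
    x = [rng.gauss(0.0, 1.0) for _ in range(size)]
    for i, t in enumerate(steps):
        a_t = a_bars[t]
        a_prev = a_bars[steps[i + 1]] if i + 1 < len(steps) else 1.0
        eps = predict_noise(x, t)
        # Estimate the clean sample, then move it to the earlier noise level.
        x0_hat = [(v - math.sqrt(1.0 - a_t) * e) / math.sqrt(a_t)
                  for v, e in zip(x, eps)]
        x = [math.sqrt(a_prev) * c + math.sqrt(1.0 - a_prev) * e
             for c, e in zip(x0_hat, eps)]
    return x, len(steps)

TARGET = 0.7                                  # toy dataset: every value is 0.7
a_bars = make_alpha_bars(1000)

def oracle(x_t, t):
    """Exact noise for the toy dataset (stand-in for a trained network)."""
    scale = math.sqrt(1.0 - a_bars[t])
    return [(v - math.sqrt(a_bars[t]) * TARGET) / scale for v in x_t]

generated, steps_used = ddim_sample(oracle, size=4, a_bars=a_bars,
                                    num_sampling_steps=50)
print(f"{steps_used} network calls instead of {len(a_bars)}:", generated)
```

The number of network calls drops from the full chain length to the chosen number of sampling steps, which is the source of the speed-up. With a real model, using fewer steps typically trades some sample quality for that speed.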

Noise-Conditioned Score-Based Generative Models (SGMs)

Noise-conditioned Score-Based Generative Models (SGMs) emerge as a pioneering approach, seamlessly blending randomness with real data to refine and enhance images. Guided by a "score" tool and a "contrast" guide, SGMs prioritize real data over created samples, making them invaluable for image crafting and modification tasks.

1024 x 1024 samples generated from score-based models. (Source: Yang Song’s blog)

These models shine particularly in crafting lifelike and high quality images, with a special knack for generating realistic portraits of familiar faces. Their applications extend beyond the entertainment industry, finding significant utility in enriching healthcare datasets, often constrained by stringent regulations and industry norms.

Noise-conditioned SGMs generate new samples from a given data distribution by leveraging a score function, which estimates the gradient of the log density of the target distribution. Trained on available data points, this score function guides the generation of new data points from the data distribution, offering a versatile data augmentation and synthesis tool.
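To illustrate how a score function can drive sampling, the sketch below uses Langevin dynamics, a standard companion to score-based models: follow the score toward regions of high density while injecting a little noise at every step. For simplicity the score is written analytically for a hypothetical Gaussian target; in an actual SGM a neural network trained on data would supply it, and the step size and step count here are arbitrary choices.

```python
import math
import random

MU, SIGMA = 3.0, 1.0      # hypothetical target distribution: N(3, 1)

def score(x):
    """Gradient of the log density of N(MU, SIGMA^2) with respect to x."""
    return -(x - MU) / SIGMA ** 2

def langevin_sample(score_fn, rng, steps=200, step_size=0.05):
    """Unadjusted Langevin dynamics: drift along the score plus random noise."""
    x = rng.gauss(0.0, 1.0)                       # arbitrary starting point
    for _ in range(steps):
        noise = rng.gauss(0.0, 1.0)
        x = x + 0.5 * step_size * score_fn(x) + math.sqrt(step_size) * noise
    return x

rng = random.Random(0)
samples = [langevin_sample(score, rng) for _ in range(1000)]
mean = sum(samples) / len(samples)
var = sum((s - mean) ** 2 for s in samples) / len(samples)
print(f"sample mean={mean:.2f}, variance={var:.2f} (target: {MU}, {SIGMA ** 2})")
```

Although the chains start far from the target, the samples end up distributed roughly like it, using nothing but the score.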

Stochastic Differential Equations

Stochastic Differential Equations (SDEs) offer a mathematical framework to describe changes in random processes over time. Widely employed in physics and financial markets, SDEs provide invaluable insights into the dynamics of systems significantly influenced by random factors.

In finance, SDEs play a crucial role in modeling and predicting asset prices as they can consider the market's volatility and uncertainty. By accurately modeling fluctuations, SDEs prove instrumental in calculating financial derivatives such as futures contracts.
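As a small illustration of an SDE in the financial setting, the sketch below simulates one price path with the Euler-Maruyama scheme applied to geometric Brownian motion, a textbook model for asset prices. The drift, volatility, starting price, and the choice of 252 trading days are hypothetical values picked for the example.

```python
import math
import random

def simulate_gbm(s0, mu, sigma, horizon, num_steps, rng):
    """Euler-Maruyama path for dS = mu * S * dt + sigma * S * dW."""
    dt = horizon / num_steps
    path = [s0]
    for _ in range(num_steps):
        dw = rng.gauss(0.0, math.sqrt(dt))        # Brownian increment
        s = path[-1]
        path.append(s + mu * s * dt + sigma * s * dw)
    return path

rng = random.Random(0)
path = simulate_gbm(s0=100.0, mu=0.05, sigma=0.2, horizon=1.0,
                    num_steps=252, rng=rng)
print(f"simulated price after one year: {path[-1]:.2f}")
```

The `mu` term is the predictable trend and the `sigma` term is the random shock. Pricing a derivative typically means simulating many such paths and averaging the resulting payoffs.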

Moreover, SDEs extend their utility to various machine-learning tasks. For instance, in image processing, SDEs are employed to model the evolution of image features over time. They effectively facilitate video prediction or motion estimation. In natural language processing, SDEs serve as a foundation for modeling the temporal dynamics of linguistic structures. They enable tasks like sentiment analysis and text generation.

Despite their complexity, SDEs offer a robust framework for capturing the intricate interplay between random processes and time evolution.

Alternative Categorization

Another approach to classifying diffusion models is based on how a phenomenon spreads across time and space rather than on the models’ mathematical frameworks. In this case, we can split them into the following categories.

Continuous Diffusion Models: These models operate on the principle of gradual change and are perfect to process data evolving in an uninterrupted manner. The rate of the phenomenon's propagation, often exemplified by the addition and removal of noise in images, is described by a continuous function.

Discrete Diffusion Models: These models assume that the phenomenon undergoes changes from one state to another in separate steps within specific time intervals. These models work well for tasks with categorical data, including text generation.

Hybrid Diffusion Models: These models combine the flexibility of continuous diffusion with the granularity of discrete diffusion, providing comprehensive insights into phenomena with intricate dynamics.

Diffusion Transformers

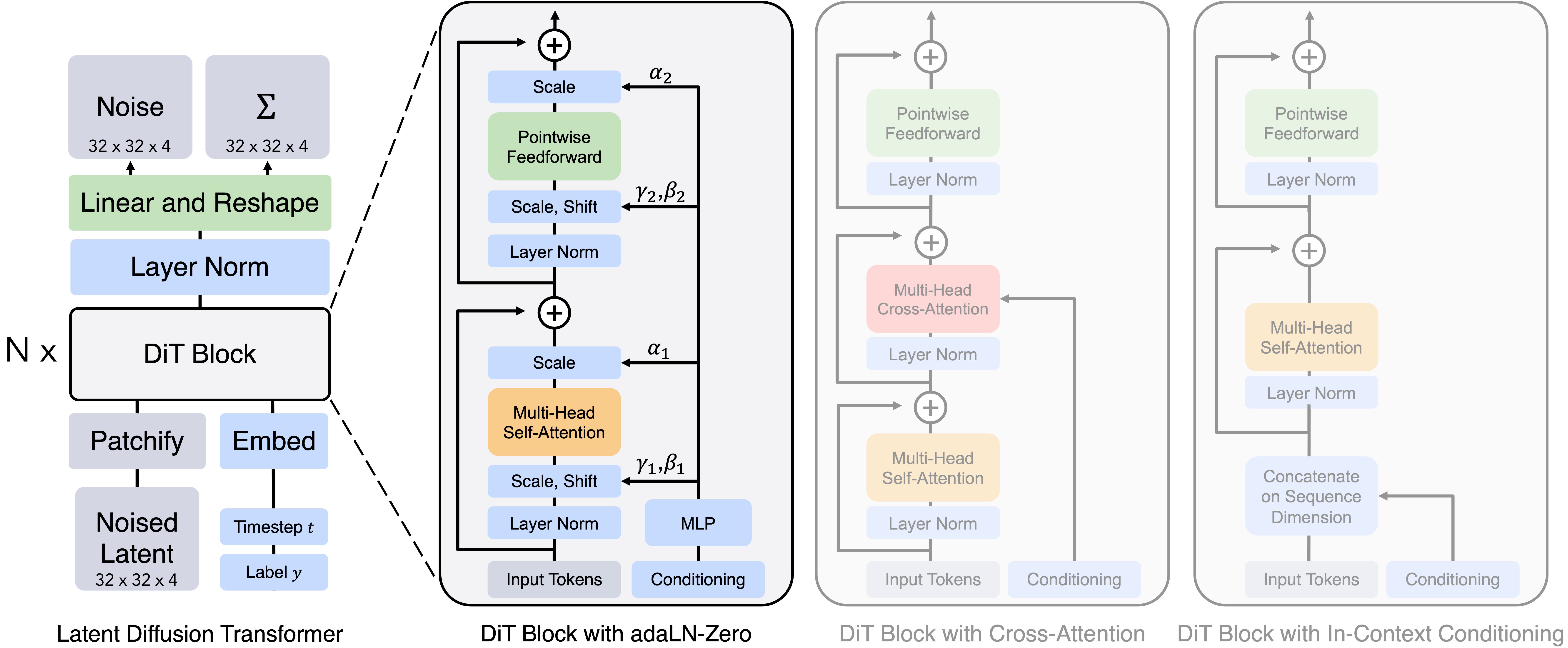

Researchers from prominent scientific institutions and tech giants constantly search for optimized approaches to diffusion models. One of the recent breakthroughs in AI, powering projects like OpenAI's Sora and Stability AI's Stable Diffusion 3.0, is the combination of diffusion and transformer architectures.

Saining Xie and William Peebles suggested the idea back in 2022. Employing transformers makes it possible to replace the traditionally complex U-Net architecture with a simpler one and to parallelize training efficiently.

The scheme from the original article Scalable Diffusion Models with Transformers by William Peebles and Saining Xie. (Source: William Peebles’s website)

Diffusion transformers promise superior speed, performance, and scalability, paving the way for innovative applications across diverse domains. As quoted by TechCrunch, Xie admits that the current process of training diffusion transformers potentially introduces some inefficiencies and performance loss, but believes this can be addressed over the long horizon.

Some Famous Diffusion Models and Services

In 2022, the landscape of diffusion models in machine learning underwent a transformative shift with the release of groundbreaking platforms such as Midjourney, DALL·E 2 by OpenAI, Imagen by Google, and Stable Diffusion by Stability AI. These advancements marked significant milestones in the evolution of diffusion models. However, it's worth noting that several systems had been introduced even earlier, and the emergence of new applications continues to reshape the field dynamically.

In this article, we will mention only some models to give the overall idea of the phenomenon’s scale.

Stable Diffusion: Released in 2022, Stable Diffusion is a text-to-image model built on deep learning principles rooted in diffusion techniques, marking its entry into the ongoing AI expansion. Developed primarily to generate high quality images based on text descriptions, it also finds application in tasks like inpainting, outpainting, and text-guided image-to-image translations.

This innovative model was crafted through collaboration between researchers from the CompVis Group at Ludwig Maximilian University of Munich and Runway, supported by computational resources from Stability AI and training data sourced from non-profit organizations.

Like other latent diffusion models, it operates as a deep generative artificial neural network, boasting open-sourced code and model weights, enabling its deployment on consumer hardware equipped with a modest GPU of at least 4 GB VRAM. This accessibility represents a notable departure from previous proprietary text-to-image models like DALL-E and Midjourney, which were solely accessible through cloud services.

DALL-E: DALL·E, DALL·E 2, and DALL·E 3 represent a series of text-to-image models developed by OpenAI. These models have undergone significant evolution and refinement over time, with DALL·E 3 marking the latest advancement in this progression. Its release into platforms like ChatGPT for ChatGPT Plus and ChatGPT Enterprise customers underscores its integration into a variety of applications beyond traditional AI spheres.

Initially unveiled in a blog post by OpenAI in January 2021, DALL·E leverages a modified version of the GPT-3 model to generate images, introducing a novel approach to content creation. Subsequent iterations, such as DALL·E 2, aim to enhance image realism and resolution, signifying a continuous quest for improved performance and capability.

The technological underpinnings of DALL·E involve a multimodal implementation of the GPT-3 model, with 12 billion parameters, designed to bridge the gap between text and image modalities seamlessly. Leveraging text–image pairs sourced from the Internet for training, DALL·E's model intricately "swaps text for pixels," facilitating the translation of textual prompts into cohesive visual representations.

Furthermore, its integration with CLIP (Contrastive Language-Image Pre-training) underscores a holistic approach to language and image understanding, ensuring the relevance and coherence of generated visual outputs.

Midjourney: Midjourney also offers a service that generates image data based on natural language prompts, and it has become emblematic of the ongoing AI boom, reflecting a surge in innovation within the field.

The Midjourney platform has been in open beta since July 12, 2022, facilitating access to its image generation capabilities. Using Discord bot commands, users can create artwork by providing prompts, underscoring Midjourney's user-friendly approach to content creation.

The platform's versatility is evident in its diverse applications, which range from rapid artistic prototyping to facilitating brainstorming sessions in advertising and aiding architects in generating mood boards for project visualization.

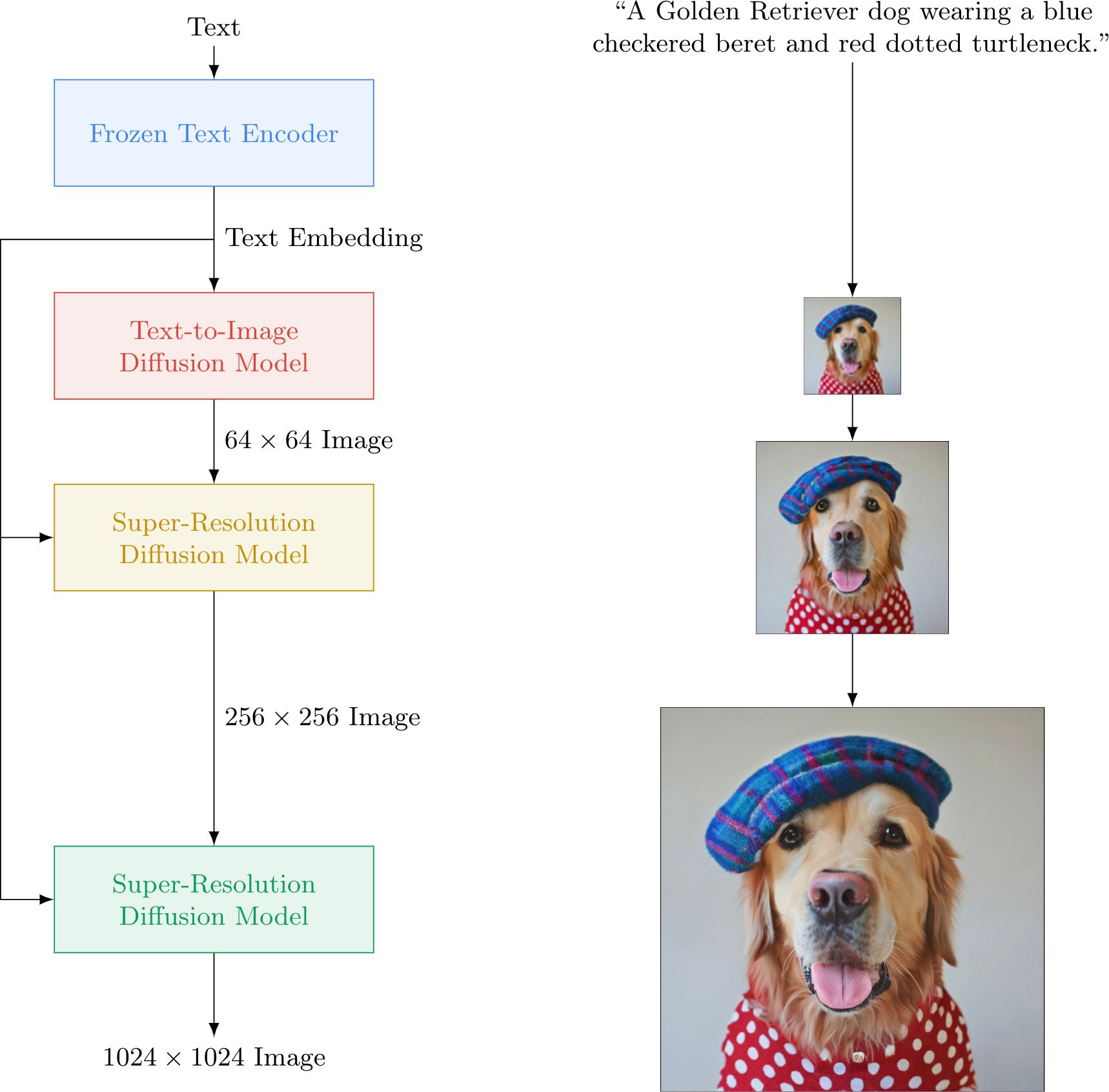

Imagen: Google's Imagen leverages the capabilities of large transformer language models and the strength of diffusion models in image generation. This allows the platform to achieve unprecedented fidelity and alignment between text prompts and generated images.

Imagen uses a large frozen T5-XXL encoder to encode the input text into embeddings. A conditional diffusion model maps the text embedding into a 64×64 image. Then, a super-resolution diffusion model upsamples the image. (Source: Google)

With Imagen 2, Google introduces a sophisticated advancement in text-to-image technology, offering high-quality, photorealistic outputs closely aligned with user prompts. This updated model, released in December 2023, builds on the success of its predecessor and can generate images that reflect natural distributions from the training data rather than predetermined styles.

Imagen 2's integration into various Google platforms, including Gemini, Search Generative Experience, and Google Labs' ImageFX, signifies a step forward in empowering users to explore creative possibilities effortlessly.

The deployment of Imagen 2 extends beyond conventional applications, with Google Arts and Culture utilizing the technology in their Cultural Icons experiment. This initiative enables users to engage with cultural content and enhance their knowledge through immersive experiences facilitated by Google AI. Moreover, developers and enterprises can leverage the Imagen API in Google Cloud Vertex AI, opening avenues for integrating advanced image generation capabilities into various applications and services.

Generative AI by iStock: Generative AI by iStock, unveiled by Getty Images in January 2024, offers designers and businesses an accessible and legally sound solution for creating visuals.

Leveraging NVIDIA Picasso, a platform for custom AI models, Generative AI by iStock taps into Getty Images' extensive library of licensed and proprietary data to produce high-quality images in response to simple text prompts. This service ensures legal protection and usage rights for the generated image data.

By training bespoke AI models tailored to their vast catalog of licensed images and videos, Getty Images has created a service that empowers users to streamline their design workflows.

Stable Audio: Stable Audio, developed by Harmonai, the audio research lab of Stability AI, represents a significant advancement in text-controlled audio generation. Trained on an extensive dataset of 19,500 hours of audio data, Stable Audio leverages a U-Net-based diffusion model to generate high-quality audio in real time, using just a single NVIDIA A100 GPU.

The release of Stable Audio in 2023 came amidst a growing interest in generative AI for music production. While previous efforts such as OpenAI's MuseNet and Google's MusicLM focused on generating music sequences or audio tokens, Stable Audio represents one of the first diffusion process-based approaches to music generation.

Building on the success of Harmonai's earlier project, Dance Diffusion, Stable Audio employs a pre-trained model called CLAP to map text prompts into an embedding space shared with musical features, facilitating the generation of diverse audio outputs.

Harmonai has announced plans to release open-source models based on Stable Audio and code to train custom models.

CyberRealistic: CyberRealistic stands out as a diffusion model tailored to produce remarkably lifelike data across diverse domains. With its proficiency in generating images, text, and audio that closely resemble real-world characteristics, CyberRealistic finds utility across a spectrum of applications ranging from immersive simulations to content generation.

RealisticVision: Realistic Vision is another diffusion model renowned for its capacity to produce authentic and top-tier visual content. This model finds extensive application in various tasks, including image synthesis, style transfer, and elevating the realism of computer-generated graphics.

ChilloutMix: ChilloutMix is a diffusion model acknowledged for its capacity to create calming and peaceful content. Whether it's serene images, ambient sounds, or tranquil text, this model plays a role in crafting soothing and stress-relieving experiences.

SDXL: SDXL (Stable Diffusion XL) is a larger version of Stable Diffusion from Stability AI, distinguished by its ability to generate high-resolution content. It is particularly well-suited for large-scale image generation, data augmentation, and creating high-fidelity simulations.

Anything V5: Anything V5 is a versatile diffusion model that fully embodies its name. Capable of producing an array of content types, including images, text, and audio, its versatility and adaptability render it an ideal option for an extensive array of generative tasks.

AbsoluteReality: AbsoluteReality is a diffusion model celebrated for its capability to generate content that blurs the boundaries between reality and simulation. Whether utilized for virtual reality encounters or crafting hyper-realistic data, this model leads the way in achieving unparalleled realism.

DreamShaper: DreamShaper is a diffusion model distinguished by its proficiency in crafting inventive and imaginative content. It is frequently employed in artistic pursuits, empowering artists and designers to manifest their dreams and visions across various media forms.

ModelShoot: ModelShoot, a Dreambooth model trained with a VAE on a diverse set of photographs of people, is designed to excel in capturing full to medium-body shots with a focus on cool clothing and a fashion-shoot aesthetic.

Business Cases across Industries

Diffusion models have emerged as powerful tools across many industries, revolutionizing processes and driving innovation. From high-quality video and text-to-image generation to market and weather forecasting, these models are reshaping the landscape of artificial intelligence. Here, we will explore only several examples of practical use cases to give an idea of their capabilities.

Entertainment Industry

Entertainment is the most apparent sphere for applying diffusion models. Their unprecedented capabilities revolutionize image, video, and sound production processes, reshaping the entire industry. AI-generated content is widely used in media and advertisements and plays a crucial role in concept creation across various specific domains.

In video production, diffusion models are employed to enhance visual effects and post-production editing. For example, Adobe After Effects has long incorporated diffusion-based algorithms to generate lifelike animations.

Sora, a generative AI system previewed by OpenAI in February 2024, creates photorealistic clips based on natural language inputs. It transcends the boundaries of traditional filmmaking and promises unprecedented opportunities to professionals and amateurs.

Video: https://media.wired.com/clips/65cd609a1b47a15ce1b4001e/360p/pass/tokyo.mp4

OpenAI claims Sora will allow its users to make longer clips than its competitors do, up to one minute. (Source: OpenAI via Wired)

The technology driving Sora is an adaptation of the technology behind DALL-E 3. OpenAI describes Sora as a diffusion transformer, essentially a denoising latent diffusion model with a single Transformer functioning as the denoiser. Besides the impressive visual quality, Sora demonstrates a nuanced understanding of cinematic language and an unusual ability for convincing storytelling.

Additionally, diffusion models are utilized in video upscaling and restoration, where they can enhance the resolution and quality of video footage, making it suitable for high-definition displays and streaming platforms.

Moreover, diffusion models play a crucial role in sound production and music composition. These models can analyze audio data to generate realistic instrument sounds and music compositions, facilitating the creation of original music tracks and sound effects for films, video games, and multimedia projects.

Video: https://twitter.com/i/status/1758347012909474137

A Minecraft digital world simulation generated by Sora. (Source: Twitter)

Diffusion models are utilized to create immersive and interactive experiences in virtual reality (VR) and augmented reality (AR) applications. These models can generate realistic 3D environments, textures, and animations, enhancing the realism and immersion of VR and AR content. For example, Unreal Engine incorporates diffusion-based algorithms to render lifelike graphics and visual effects in VR and AR applications, enabling developers to create immersive virtual worlds and interactive experiences.

Despite the groundbreaking potential of generative AI technologies like Sora, their adoption in the movie industry has not been without controversy. The Hollywood strike of 2023 underscored concerns about the impact of AI on the rights, credits, and compensation of human workers. The strike culminated in a tentative agreement that sought to establish safeguards against the undue replacement or marginalization of human creatives by AI-generated content.

Healthcare

Medical Imaging

Machine learning diffusion models enhance medical imaging by improving images' quality and resolution. They can produce high-resolution images from scans of lower quality, thereby supporting precise diagnosis and facilitating treatment planning. Such models play a vital role in helping healthcare professionals visualize intricate details and identify abnormalities in medical images with heightened clarity.

Diffusion models can perform some of the tasks that previously required the involvement of an expert team. In 2022, Boah Kim and colleagues proposed a diffusion adversarial representation learning (DARL) model for self-supervised vessel segmentation to assist in diagnosing vascular diseases. It can help physicians in an area where supervised learning models require large labeled datasets that are challenging to collect, particularly because of confusing backgrounds.

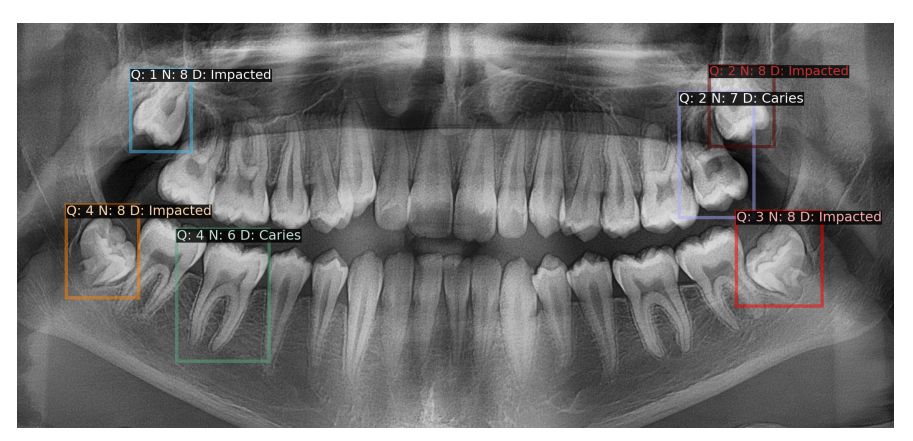

Diffusion models are dominant among generative AI systems aimed at medical anomaly detection. In recent years, many researchers have built their own models for specific medical areas, each suggesting a different approach to model training. Despite this variety, the models generally demonstrate impressive results when analyzing X-rays or MRIs.

The HierarchicalDet model displays bounding boxes around unhealthy teeth. (Source: Diffusion-Based Hierarchical Multi-Label Object Detection to Analyze Panoramic Dental X-rays)

Besides that, synthetic data created by generative AI systems promise to be helpful for medical statistics and research. Diffusion models' ability to generate samples of unprecedented likeness to the original information allows them to preserve all the essential data properties. At the same time, their output can be used for further research without compromising actual patients' privacy.

Drug Discovery

Scientists use diffusion models to model molecular structures and predict how molecules interact within biological systems, offering a comprehensive view of potential drug behavior. By accurately predicting molecular interactions, researchers can expedite the discovery of promising drug candidates.

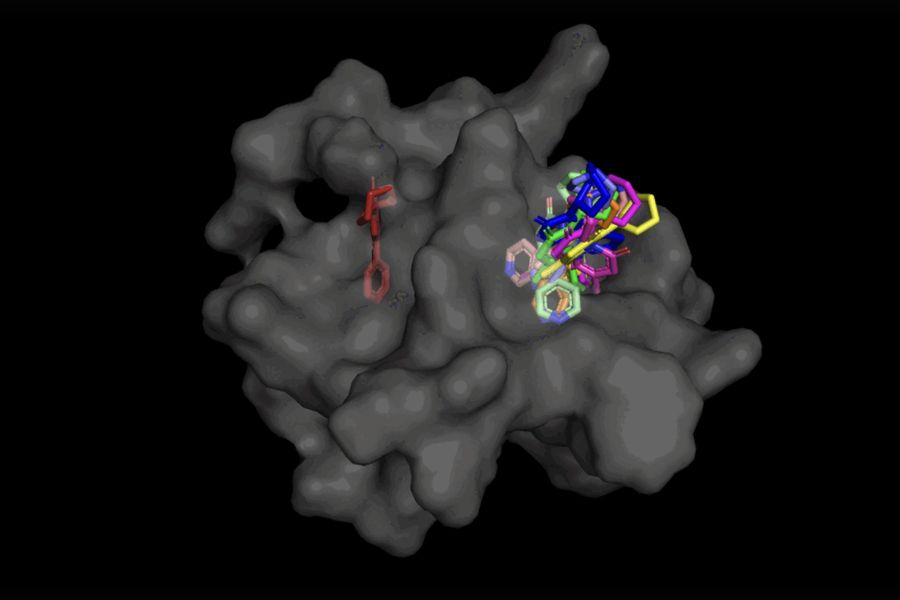

In 2023, a group of MIT researchers proposed a molecular docking model named DiffDock, representing a novel methodology in computational drug design. By leveraging diffusion generative models, DiffDock facilitates the identification of multiple binding sites on proteins, offering unprecedented insights into molecular interactions crucial for drug efficacy.

DiffDock generates a series of potential poses for protein-ligand binding. (Source: MIT News)

Traditionally, drug discovery relies on laborious and expensive experimental procedures, taking years to identify potential drug candidates and elucidate their mechanisms of action. Diffusion models promise to streamline this process by virtually screening numerous proteins in a fraction of the time.

Still, any AI-based research depends heavily on how the original data was collected and prepared, which is why speeding up drug discovery raises reasonable concerns in professional communities. At the same time, when data is scarce, diffusion models may deliver better results and help chart the path for further investigation.

Diffusion models also aid in virtual screening, where vast databases of compounds are assessed for their potential to bind to specific drug targets. This computational approach significantly reduces the time and costs associated with bringing new medications to market.

Autonomous Vehicles

Diffusion models may become a critical component of autonomous vehicles' decision-making systems. These models assimilate data from an array of sensors, including LiDAR, cameras, and radar, to create a dynamic representation of the vehicle's surroundings.

In January 2024, a group of scientists from Tongji University introduced DDM-Lag, a Diffusion Decision Model augmented with a Lagrangian-based safety approach. They framed decision-making in driving as a Constrained Markov Decision Process (CMDP) and built an Actor-Critic framework with the diffusion model employed as the actor.
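
To give a feel for what a Lagrangian-based safety constraint does, here is a minimal sketch of primal-dual optimization on a toy problem. It is not the DDM-Lag algorithm: the one-dimensional "speed" action, the quadratic reward, the cost limit, and all function names are hypothetical stand-ins chosen for illustration. The agent prefers a speed of 30 but must keep its cost (here, the speed itself) at or below 20, and a multiplier grows whenever that limit is violated.

```python
def reward_gradient(speed, target_speed=30.0):
    """Gradient of the toy reward -(speed - target_speed)^2, which peaks at the target."""
    return -2.0 * (speed - target_speed)


def primal_dual_ascent(cost_limit=20.0, steps=2000, lr_speed=0.05, lr_multiplier=0.05):
    """Maximize the toy reward subject to cost(speed) = speed <= cost_limit."""
    speed, multiplier = 0.0, 0.0
    for _ in range(steps):
        # Primal step: ascend the Lagrangian
        #   reward(speed) - multiplier * (speed - cost_limit)
        speed += lr_speed * (reward_gradient(speed) - multiplier)
        # Dual step: raise the multiplier while the constraint is violated,
        # lower it otherwise, and never let it drop below zero.
        multiplier = max(0.0, multiplier + lr_multiplier * (speed - cost_limit))
    return speed, multiplier


speed, multiplier = primal_dual_ascent()
print(f"speed={speed:.2f}, multiplier={multiplier:.2f}")
```

In this toy setting the speed settles at the cost limit rather than at the preferred target, which is the behavior a safety constraint is meant to enforce. In a CMDP, the same idea would apply to expected cumulative reward and cost rather than to a single number.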

Utilizing probabilistic modeling, diffusion models facilitate vital functions such as path planning, object detection, and collision avoidance. By predicting the future positions and behaviors of objects in real-time, autonomous vehicles can navigate safely through complex traffic scenarios, adhering to traffic rules and ensuring passenger safety.

Finance

Diffusion models offer advanced techniques for analyzing complex financial data and making informed decisions. Simulating the dynamics of financial assets enables the prediction of price movements and portfolio optimization. By incorporating deep learning algorithms, diffusion models can capture intricate patterns within financial time series data, providing traders and investors with valuable insights into market trends.

Diffusion models can analyze vast amounts of market data in real time, identifying trading opportunities and executing deals with minimal human intervention. Additionally, diffusion ML models are helpful for risk management strategies, where they can assess volatility.

Generative models are also employed to address the challenge of analyzing confidential data. Strict privacy regulations limit the opportunity to share microdata like fund holdings, hindering collaborative research efforts. However, the capability of diffusion models to generate lifelike data distributions offers a solution, enabling scientists and practitioners to analyze trends without compromising individual financial information.

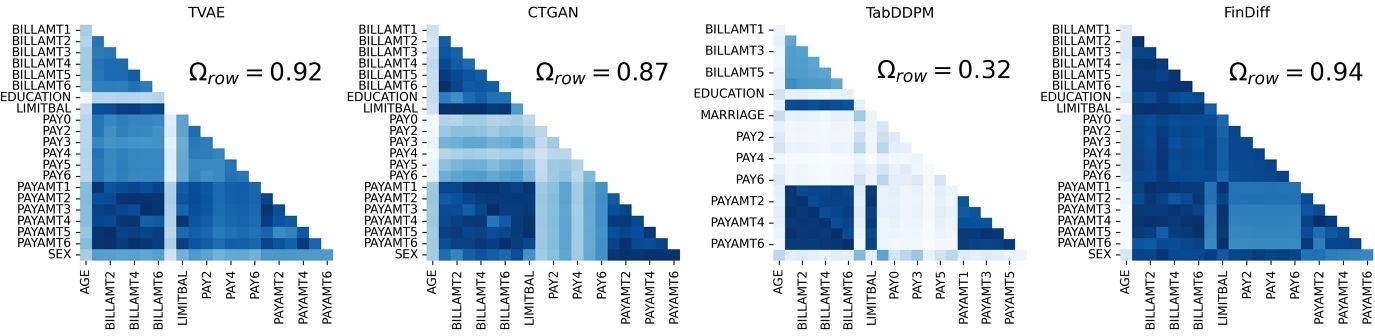

In November 2023, Timur Sattarov and colleagues suggested Financial Tabular Diffusion (FinDiff), a diffusion model for generating realistic financial tabular data. The model is tailored to provide researchers and financial sector specialists with information suitable for scenario modeling, stress tests, or fraud detection.

Correlations between the Credit Default dataset and its synthetic versions generated by different models. A more intense color indicates a higher correlation, illustrating FinDiff's effectiveness. (Source: Proceedings of the Fourth ACM International Conference on AI in Finance)

Transportation

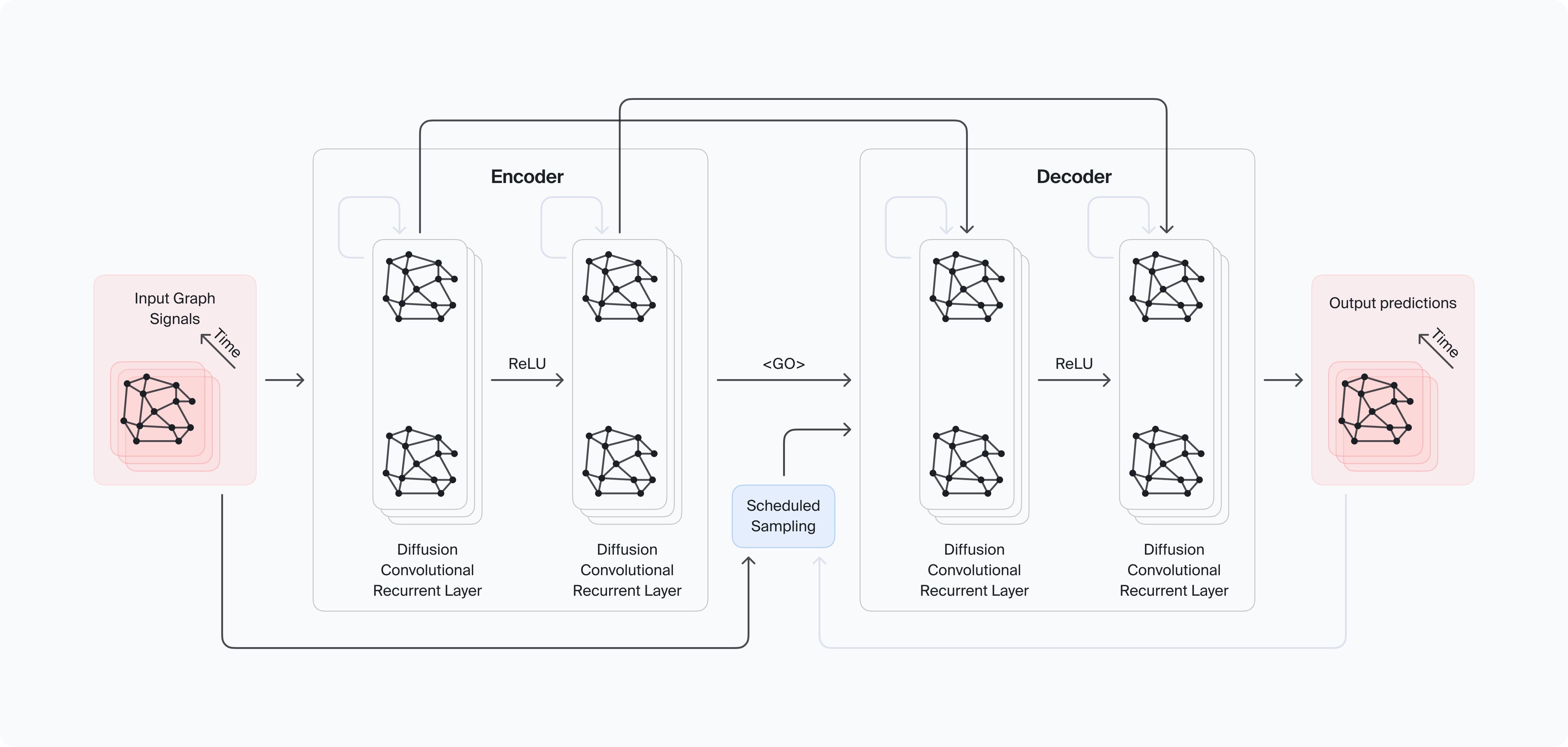

Diffusion machine learning models are applied to analyze transportation flows, offering powerful tools for understanding and optimizing complex traffic patterns. The diffusion process helps capture the dynamic interactions and propagation of traffic within transportation networks.

Diffusion models prove instrumental in traffic forecasting. Researchers and transportation agencies can employ them to analyze historical traffic data and predict future traffic conditions, including congestion levels, travel times, and traffic volumes.

The architecture for a diffusion convolutional recurrent neural network for bus passenger flow prediction. (Source: MDPI)

Additionally, diffusion models are helpful for anomaly detection, identifying abnormal traffic patterns such as accidents or road closures. This allows timely responses and facilitates work on mitigation strategies to minimize disruptions, enhance transportation system resilience, and sustain urban mobility.

Neuroscience

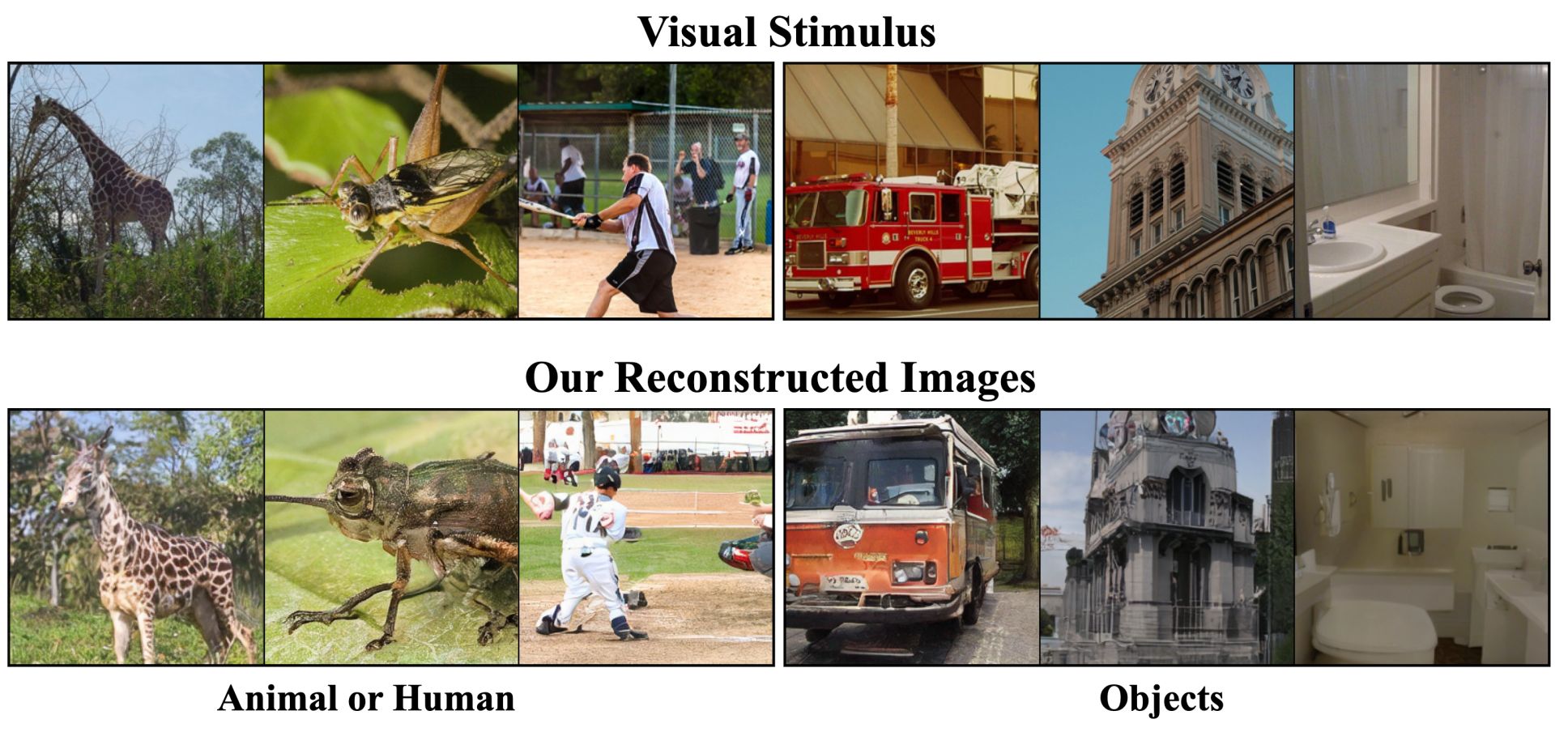

Diffusion models can analyze large-scale neuroimaging data, such as functional magnetic resonance imaging (fMRI) and diffusion tensor imaging (DTI), to uncover hidden structures in brain connectivity networks. Simulations of signal propagation and neuron interaction contribute much to our understanding of complex brain dynamics and neural processes.

Specific diffusion models can map the interactions between different brain regions and identify key functional networks involved in various cognitive functions and behaviors. This brings scientists closer to brain decoding: reconstructing the images a person saw, or even dreamt of, from their brain activity.

Brain decoding with DDPMs, from Chen et al. (Source: Xcorr)

Generating such brain-conditioned samples has an important practical application, giving a chance to enhance communication with locked-in patients.

Additionally, the diffusion process can be used for neuroimaging data synthesis and augmentation, generating synthetic brain images and data to enhance the training and validation of neuroimaging algorithms and diagnostic tools.

Fashion

Brands have started experimenting with diffusion models to come up with novel design patterns for apparel, tapping into the model’s ability to generate unique and aesthetically pleasing visuals.

In January 2024, a group of scientists from Shanghai University of Science and Technology suggested an innovative design method using a shared multi-stage diffusion model. This approach encompasses high-level design concepts (like business style or clothes for a party) and low-level, measurable clothing attributes (like sleeve length or collar type) in a hierarchical structure.

The authors of the HieraFashDiff model categorized the input text into different levels by criteria used by professional clothing designers, allowing the latter to add low-level attributes after high-level prompts.

HieraFashDiff demonstrates better performance in comparison with other, non-specific models. (Source: Hierarchical Fashion Design with Multi-stage Diffusion Models)

Another example of fashion-oriented diffusion model research is a 2023 paper in which a group of scientists from Hong Kong Polytechnic University suggested a new style-guided diffusion model. Their SGDiff overcomes some issues faced by designers using well-known image generation apps. It also incorporates supplementary style guidance, substantially reducing training costs.

Energy Sector

Machine learning diffusion models optimize power grid operations by predicting demand and mapping energy distribution. They allow researchers and engineers to analyze historical energy consumption data and weather patterns to accurately forecast future demand, enabling utilities to allocate resources and prevent potential grid failures. They contribute to the energy infrastructure's reliability and stability, ensuring consumers' uninterrupted power supply.

Diffusion models are well suited to Non-intrusive Load Monitoring (NILM), which enables detailed analysis of household energy consumption without requiring individual metering. In 2023, Ruichen Sun and colleagues suggested DiffNILM, a novel energy disaggregation framework. Based on diffusion probabilistic models, it can distinguish the power consumption patterns of individual appliances from the aggregated power signal.

The workflow of the DiffNILM experiment. (Source: National Library of Medicine)

How to Train Your Own Model

Training a diffusion model means repeatedly adding Gaussian noise to training samples and teaching a neural network to reverse that corruption step by step, so that the model gradually refines its understanding of the data's patterns. This process is pivotal for various applications, from image denoising to natural language generation.
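
As a minimal sketch of what a single training step works with, the pure-Python example below adds Gaussian noise to a toy sample and computes the noise-prediction loss used in denoising diffusion probabilistic models. The linear noise schedule and its endpoint values follow the DDPM paper; the four-value "image", the function names, and the zero-valued stand-in for the network's prediction are illustrative assumptions, not part of any particular library.

```python
import math
import random


def linear_beta_schedule(num_steps, beta_start=1e-4, beta_end=0.02):
    """Per-step noise variances, growing linearly from beta_start to beta_end."""
    step = (beta_end - beta_start) / (num_steps - 1)
    return [beta_start + i * step for i in range(num_steps)]


def cumulative_alphas(betas):
    """alpha_bar[t] is the product of (1 - beta) over all steps up to t."""
    alpha_bars, running = [], 1.0
    for beta in betas:
        running *= 1.0 - beta
        alpha_bars.append(running)
    return alpha_bars


def add_noise(x0, t, alpha_bars, rng):
    """Jump straight to noise level t; return the noisy sample and the noise used."""
    noise = [rng.gauss(0.0, 1.0) for _ in x0]
    signal_scale = math.sqrt(alpha_bars[t])
    noise_scale = math.sqrt(1.0 - alpha_bars[t])
    xt = [signal_scale * x + noise_scale * e for x, e in zip(x0, noise)]
    return xt, noise


def denoising_loss(predicted_noise, true_noise):
    """Mean squared error between the predicted and the actual noise."""
    return sum((p - n) ** 2 for p, n in zip(predicted_noise, true_noise)) / len(true_noise)


rng = random.Random(0)
betas = linear_beta_schedule(1000)
alpha_bars = cumulative_alphas(betas)

x0 = [0.5, -0.2, 0.9, 0.1]  # a toy "image" of four pixel values
xt, noise = add_noise(x0, t=500, alpha_bars=alpha_bars, rng=rng)

# A real model would predict the noise from (xt, t); a zero guess stands in here.
loss = denoising_loss([0.0] * len(x0), noise)
```

During real training, the zero guess is replaced by a neural network's output, and the loss is minimized over many samples and randomly chosen noise levels.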

Getting Your Hardware Ready: Training large diffusion models demands substantial computing power, which must be considered before the start of a project.

Preparing a Suitable Dataset: The quality and diversity of the dataset significantly impact the efficacy of the trained diffusion model. Curating or collecting datasets from reputable sources and ensuring high resolution and ample sample size is imperative. Then, the project team must preprocess the data to facilitate efficient model consumption.

Fine-Tuning Hyperparameters: The learning rate and the number of training steps are pivotal to how well the model learns. The natural approach is to experiment with various settings to find the optimal configuration for the specific task and hardware resources. Continuous evaluation of model outputs has proven effective across multiple domains.
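
The hyperparameter step can be sketched as a small grid search. The example below is a deliberately tiny illustration, assuming a one-weight "denoiser" trained with stochastic gradient descent at a single fixed noise level; the function name, the candidate learning rates, and the step counts are hypothetical. A real project would run the same loop around a full training job and a proper validation metric.

```python
import itertools
import random


def train_toy_denoiser(learning_rate, num_steps, seed=0):
    """Fit one weight w so that w * x_t approximates the noise mixed into x_0.

    Returns the mean squared error on freshly sampled validation data.
    """
    rng = random.Random(seed)
    signal_scale, noise_scale = 0.6, 0.8  # fixed noise level; 0.6**2 + 0.8**2 == 1

    def sample():
        x0, eps = rng.gauss(0.0, 1.0), rng.gauss(0.0, 1.0)
        return signal_scale * x0 + noise_scale * eps, eps

    w = 0.0
    for _ in range(num_steps):
        xt, eps = sample()
        grad = 2.0 * (w * xt - eps) * xt  # derivative of (w * xt - eps)^2 w.r.t. w
        w -= learning_rate * grad

    validation = [sample() for _ in range(2000)]
    return sum((w * xt - eps) ** 2 for xt, eps in validation) / len(validation)


learning_rates = [1e-3, 1e-2, 1e-1]
step_counts = [100, 1000]

# Evaluate every combination and keep the one with the lowest validation error.
results = {
    (lr, steps): train_toy_denoiser(lr, steps)
    for lr, steps in itertools.product(learning_rates, step_counts)
}
best_config = min(results, key=results.get)
```

Even in this toy setting, a learning rate that is too small for the available number of steps leaves the model undertrained, which is why the two settings are usually tuned together.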

Unlocking the diffusion model's full potential requires tailoring a project's path according to its specific goals. A particular model’s efficiency is crucial and must be constantly checked and reconsidered.

Anwaar Ulhaq and colleagues cite striking figures on the resource consumption of large generative models, including language models. According to them, OpenAI trained the GPT-3 model on 45 terabytes of data, and Nvidia trained the final version of MegatronLM, a language model comparable to but smaller than GPT-3, using 512 V100 GPUs for nine days. That means training MegatronLM required nearly as much energy as three houses would use in a year.
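
The comparison with household consumption can be checked with a back-of-the-envelope calculation. The GPU count and training duration come from the figures above. The power draw of roughly 250 W per V100 and the annual household consumption of about 10,000 kWh are assumptions made here for illustration, and the estimate ignores cooling and other data-center overhead.

```python
NUM_GPUS = 512
TRAINING_DAYS = 9
GPU_POWER_KW = 0.25              # assumption: about 250 W per V100
HOUSEHOLD_KWH_PER_YEAR = 10_000  # assumption: rough annual use of one home

gpu_hours = NUM_GPUS * TRAINING_DAYS * 24
energy_kwh = gpu_hours * GPU_POWER_KW
household_years = energy_kwh / HOUSEHOLD_KWH_PER_YEAR

print(f"{gpu_hours:,} GPU-hours, {energy_kwh:,.0f} kWh, "
      f"about {household_years:.1f} household-years")
# prints: 110,592 GPU-hours, 27,648 kWh, about 2.8 household-years
```

Under these assumptions, the result lands close to the "three houses" figure, and the same arithmetic can be reused to budget the energy cost of a planned training run.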

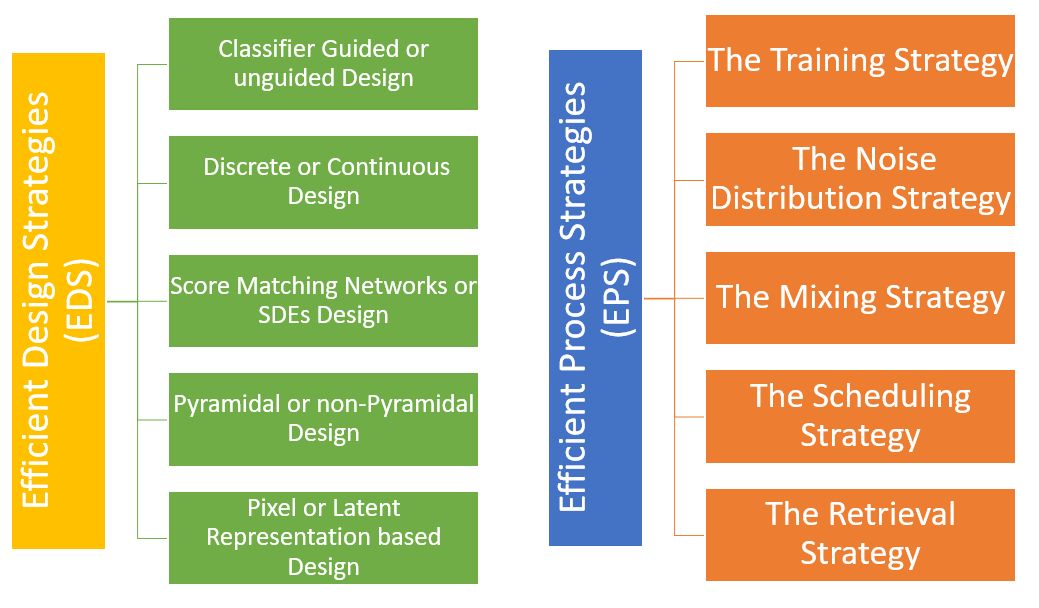

Dr. Ulhaq and colleagues divide efficiency strategies for diffusion models into two categories: Efficient Design Strategies (EDS) and Efficient Process Strategies (EPS). (Source: Efficient Diffusion Models for Vision: A Survey)

The training of Stable Diffusion, the most effective of the widely hyped diffusion models, required energy equivalent to burning nearly 7,000 kg of coal.

Final Thoughts

Diffusion models have emerged as a revolutionary advancement in artificial intelligence, captivating researchers, practitioners, and curious individuals with their ability to generate synthetic data closely resembling real-world samples. From image creation to data denoising and beyond, these models offer a reliable framework for addressing complex challenges across various domains.

One of the primary advantages of diffusion models is their ability to produce high-quality outputs that faithfully mirror their training data. Unlike other methodologies, such as Generative Adversarial Networks (GANs), diffusion models offer stability in training, ensuring reliable performance.

Diffusion models demonstrate scalability, making them suitable for handling high-dimensional data. Moreover, they support confidentiality by generating synthetic data without exposing the original information.

As the costs associated with training diffusion models go down, they become increasingly accessible. However, the widespread adoption of diffusion models also presents some specific challenges. These include interpretability issues, resource consumption, and limitations in handling long-term dependencies.

Nonetheless, with ongoing research and innovation, diffusion models show real promise for addressing real-world problems.