Apartment, flat, or crib? Toloka helps train a voice assistant to tell close synonyms apart

What happens when synonyms are synonyms... until they aren't? One of our clients, the developer of a well-known voice assistant, came to us with a synonym project. Looking back, we'd like to explain why the voice assistant needed to tell similar words apart and how we structured the task for Tolokers.

How it went down

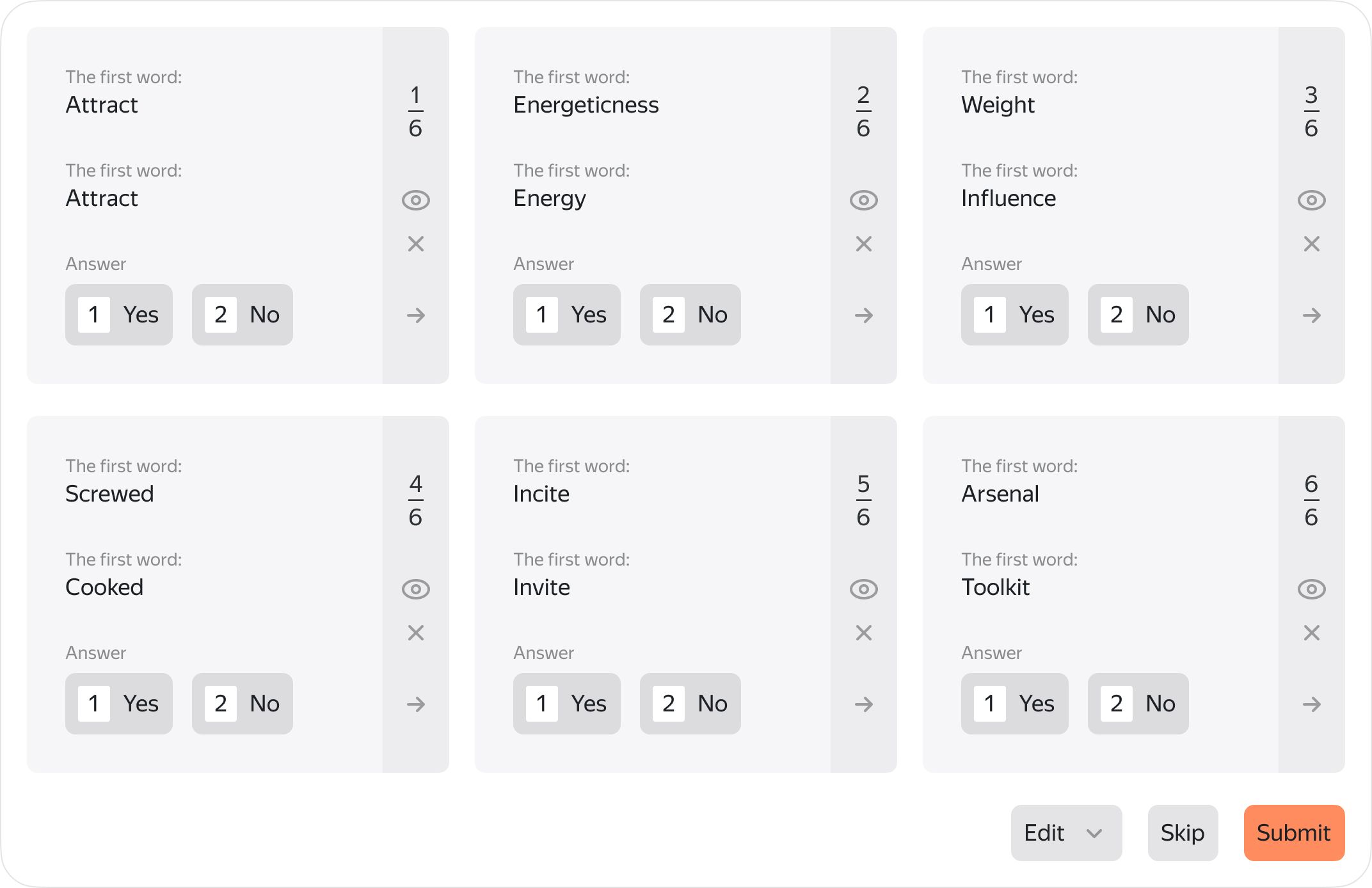

The client asked our team of Crowd Solution Architects to set up and manage the entire project from start to finish. Their analysts handed us a dataset of 372,000 word pairs to be marked as synonymous or non-synonymous. For Tolokers, a task like that is a piece of cake. Here's what it looked like:

For each pair of words, Tolokers needed to mark Yes if they thought the pair was synonymous or No if they didn't. We used an overlap of 5 to optimize quality on this task (each pair of words was shown to five different people and the results were aggregated).
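The post doesn't say which aggregation method was used to combine the five answers. As a minimal sketch, assuming the simplest option, a majority vote, the step could look like this. The vote data and function name are made up for illustration.

```python
from collections import Counter

# Made-up raw responses: (word_a, word_b) -> five Yes/No answers,
# one from each Toloker who saw the pair (overlap of 5).
responses = {
    ("pail", "bucket"): ["Yes", "Yes", "Yes", "Yes", "Yes"],
    ("twain", "two"): ["Yes", "No", "No", "Yes", "No"],
}

def majority_label(answers: list[str]) -> str:
    """Return the answer most Tolokers gave.

    With an odd overlap (5) and two possible answers, a tie can't happen.
    """
    return Counter(answers).most_common(1)[0][0]

for pair, answers in responses.items():
    print(pair, majority_label(answers))
```

An odd overlap is convenient for binary questions precisely because it rules out ties.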

A quick look through our old school textbooks told us that two words are synonyms if they are:

Close in meaning.

Different in their spelling and pronunciation.

The same part of speech.

The instructions were ready, we had examples for the tutorial and a strict test, and a sample set of 1,000 pairs was primed for markup so the client could check our quality level. Back then, we naively assumed the task was simple and straightforward.

Looks great, just do it all over again

When we shipped the results of the sample markup off to the client, it turned out that we had our work cut out for us. For instance, we'd marked red and crimson as synonymous. The client disagreed. From a purely linguistic standpoint (and according to a quick internet search), we were right: the two words refer to very similar shades, which means they're close in meaning. But the client then filled us in on a little detail: while we had taken a big-picture view and looked for similarities, we were supposed to be finding exact synonyms. Being in the same ballpark wasn't enough; pairs counted as synonymous only if they could be used interchangeably in a variety of contexts. Think pail and bucket, or sick and ill.

That narrowed down the task: find the pairs that are exact synonyms. "Okay, now it's going to be smooth sailing," we thought once more. But it turned out that even complete similarity in meaning isn't enough to guarantee interchangeability. For example, two words can come from different periods in the language's history: twain and two, say. Languages are constantly evolving: new contexts for words appear, some words fade into the past, and others are introduced. While twain and two are synonymous, they can rarely be used interchangeably.

The problem is that languages are living, breathing organisms. Finding exact synonyms is a challenge. Even when words appear to have identical meanings, they might drift away from each other over time as stylistic differences beget definitional differences.

Houston, what do we do now?

The problem couldn't have been more foundational. We went back to the client and asked what business need the markup was meant to serve. As it turned out, they were building a tool that writes rules for parsing intents in user queries, which may contain synonyms. When a user says, "Call mommy," the voice assistant is supposed to know that they're talking about their mother. If they ask what color their new "ride" is, they mean their car.
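The client's rule-writing tool isn't described in detail, so the following is only a rough, hypothetical illustration of why confirmed synonym pairs matter for intent parsing. It maps each word of a query to a canonical form before comparing it with an intent's keywords. The synonym table, intent names, and functions are all invented for the example.

```python
# Hypothetical synonym table built from confirmed pairs: variant -> canonical form.
SYNONYMS = {
    "mommy": "mother",
    "mom": "mother",
    "ride": "car",
}

# Hypothetical intents, each defined by the canonical keywords it requires.
INTENTS = {
    "call_contact": {"call"},
    "car_info": {"car"},
}

def normalize(query: str) -> list[str]:
    """Lowercase, strip surrounding punctuation, and swap known synonyms for canonical forms."""
    words = [w.strip(".,!?\"'").lower() for w in query.split()]
    return [SYNONYMS.get(w, w) for w in words]

def match_intents(query: str) -> list[str]:
    """Return every intent whose keywords all appear in the normalized query."""
    tokens = set(normalize(query))
    return [name for name, keywords in INTENTS.items() if keywords <= tokens]

print(normalize("Call mommy"))
print(match_intents("What color is my new ride?"))
```

A table like this only works if every entry is truly interchangeable: adding a pair such as red/crimson would silently change what the user asked for.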

Once we understood the business logic, we knew what the markup needed to look like. We weren't looking for exact linguistic synonyms. We needed to pick out the synonyms that users perceive as exact matches: in other words, word pairs that are interchangeable in most contexts. Tolokers had a new definition to work with. For our project, two words were synonymous if they were:

The same part of speech.

Different in their spelling and pronunciation.

Not just similar in meaning, but interchangeable in most contexts.
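Two of these three criteria can be checked without human judgment; the third, interchangeability, is exactly what Tolokers were there for. Here is a minimal sketch of the mechanical part, assuming each pair comes with part-of-speech tags (the post doesn't say the dataset included them). Pronunciation is approximated by spelling, which is a simplification: homophones like pail and pale would pass this check.

```python
from dataclasses import dataclass

@dataclass
class WordPair:
    first: str
    second: str
    first_pos: str   # assumed part-of-speech tag, e.g. "NOUN"
    second_pos: str

def passes_mechanical_criteria(pair: WordPair) -> bool:
    """Check the criteria that don't need human judgment.

    Only spelling is compared; catching identical pronunciation would
    require a pronunciation dictionary, which this sketch doesn't use.
    """
    same_pos = pair.first_pos == pair.second_pos
    different_spelling = pair.first.lower() != pair.second.lower()
    return same_pos and different_spelling

print(passes_mechanical_criteria(WordPair("sick", "ill", "ADJ", "ADJ")))
print(passes_mechanical_criteria(WordPair("sick", "illness", "ADJ", "NOUN")))
```

A pair that passes is only a candidate; whether the words are interchangeable in most contexts still has to be judged by people.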

To make things simpler for Tolokers, we came up with a rule: If you can think of multiple contexts where these words can stand in for each other without changing the meaning, they're synonymous.

The test for Tolokers featured clear-cut examples without any ambiguity, while the control tasks used to filter out cheaters included a few word pairs that were written and pronounced identically, as well as some that were different parts of speech. The threshold for entry was set very high: the task called for strong linguistic intuition, so we needed Tolokers who had it.
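Toloka provides its own quality-control tools, and the sketch below does not use their API. It only illustrates the idea behind control tasks: the control pairs have a known correct answer (No, since identical words and different parts of speech can't be synonyms under the project's definition), and Tolokers whose accuracy on them falls below a cutoff are filtered out. The control pairs, the 0.9 cutoff, and the function names are hypothetical; the post only says the bar was set very high.

```python
# Hypothetical control pairs with known answers.
CONTROL_ANSWERS = {
    ("bucket", "bucket"): "No",   # written and pronounced identically
    ("sick", "illness"): "No",    # adjective vs. noun
}

MIN_CONTROL_ACCURACY = 0.9  # hypothetical cutoff

def control_accuracy(worker_answers: dict[tuple[str, str], str]) -> float:
    """Share of control pairs this Toloker answered correctly.

    A Toloker who hasn't seen any control pairs yet gets 0.0,
    so they aren't trusted until they've been checked.
    """
    checked = [pair for pair in CONTROL_ANSWERS if pair in worker_answers]
    if not checked:
        return 0.0
    correct = sum(worker_answers[p] == CONTROL_ANSWERS[p] for p in checked)
    return correct / len(checked)

def trusted_workers(answers_by_worker: dict[str, dict[tuple[str, str], str]]) -> list[str]:
    """Keep only Tolokers whose control accuracy meets the cutoff."""
    return [
        worker
        for worker, answers in answers_by_worker.items()
        if control_accuracy(answers) >= MIN_CONTROL_ACCURACY
    ]
```

Because the correct answers to these control pairs follow from the definition itself, they catch both inattentive clicking and misunderstanding of the instructions.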

Bringing it home

With the markup complete, we submitted it to the client. The data hasn't reached production yet, but we can already draw some conclusions.

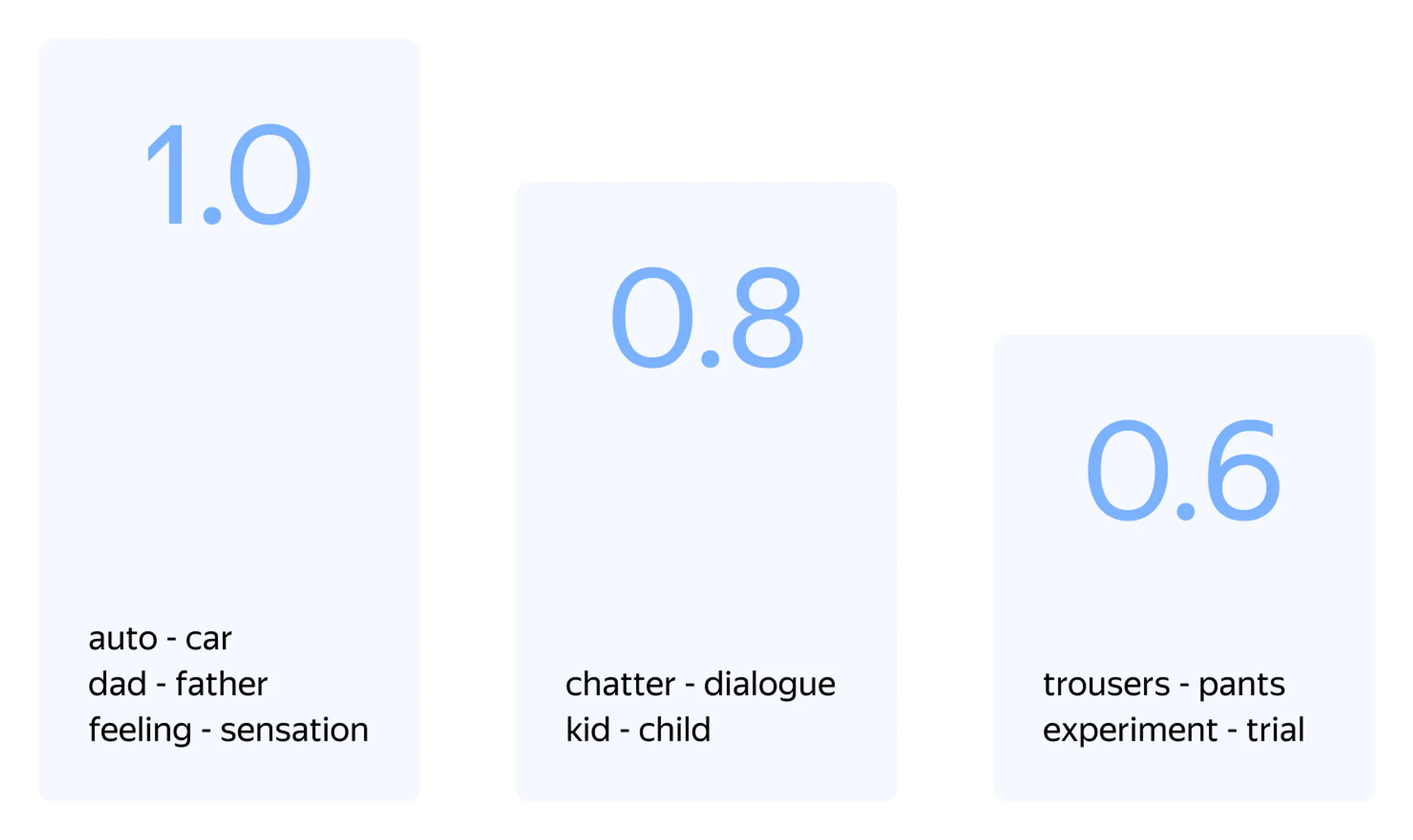

This is how we rated synonyms based on the results from Tolokers:

The numbers in the image show how consistent Tolokers were in their assessments for each word pair. Each pair was shown to five Tolokers.

1.0 - All five people thought the two words were synonymous.

0.8 - Four of the five thought the two words were synonymous.

0.6 - Three of the five thought the two words were synonymous. Here, we have to suspect the words are not synonymous.
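Each score is simply the share of the five Tolokers who answered Yes. A minimal sketch of that computation follows. The cutoff at 0.8 is an assumption drawn from the scale above (1.0 and 0.8 read as synonyms, 0.6 is already suspect); how the client actually thresholds the scores isn't stated.

```python
def consistency_score(answers: list[str]) -> float:
    """Share of Tolokers who answered Yes, e.g. 4 of 5 -> 0.8."""
    return answers.count("Yes") / len(answers)

def verdict(score: float) -> str:
    # Assumed cutoff: 1.0 and 0.8 count as synonyms, anything lower is suspect.
    return "synonymous" if score >= 0.8 else "likely not synonymous"

votes = ["Yes", "Yes", "Yes", "No", "No"]
print(consistency_score(votes), verdict(consistency_score(votes)))
```

Note that a plain majority vote would accept a 3-of-5 pair; the stricter cutoff reflects the caution about 0.6 scores.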

The client walked away happy with our quality level. For our part, we confirmed the importance of starting the project with clear instructions that explain how the results will be used. To achieve high-quality results in fewer iterations, this step is just as essential as adding control tasks and overlap.