When to implement RLAIF instead of RLHF for building your LLM?

Two approaches to sourcing feedback have been widely adopted in RL-based language model training: Reinforcement Learning from AI Feedback (RLAIF) and Reinforcement Learning from Human Feedback (RLHF). The choice between the two can significantly affect the performance, scalability, and ethical considerations involved in building language models. In this article, we examine the considerations in deciding whether to use RLAIF instead of RLHF to build a language model (LM).

Reinforcement learning as one of the types of ML

Reinforcement learning (RL) gets its name from the fact that the model learns from reinforcement provided by the environment. Apart from RL, there are supervised and unsupervised learning. One of the fundamental criteria in machine learning for determining which type of learning is employed is whether labeled data is involved. Supervised learning utilizes only labeled data, while unsupervised learning relies on unlabeled data, and the model must find regularities on its own. Such algorithms are passive, as they do not interact with the environment and receive ready-made datasets, whether labeled or not.

RL models employ label-free data and are active since there is a continuous interaction with the environment, which provides the model with training datasets. The experience gained by the system or agent is its training dataset.

In that respect, reinforcement learning is similar to the way toddlers learn to interact with the world and determine the best approach to handling objects around them through experience. The AI model is rewarded for doing the right thing, and it aims to maximize that reward. Likewise, a baby, having licked a lollipop, realizes that it is sweet and is eager to get more.

Reward signal as a driver of policy formation

AI models depend on reward signals to understand how accurate their behavior is. This signal is a scalar value that the agent receives from the environment after taking an action. It indicates the immediate benefit or desirability of the action taken by the agent in the current state. Through trial and error, the agent learns to associate actions with the rewards they yield in different states. Over time, the agent aims to discover a policy that maximizes its cumulative reward.
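To make "cumulative reward" concrete, here is a minimal sketch of how a sequence of scalar rewards can be summed into a single return. The discount factor `gamma` is an assumption added for illustration (it is a common convention, not something this article prescribes); with `gamma=1.0` the function reduces to a plain sum.

```python
def discounted_return(rewards, gamma=0.99):
    """Cumulative reward for one episode.

    rewards: scalar rewards in the order the agent received them.
    gamma:   hypothetical discount factor; 1.0 means a plain sum.
    """
    total = 0.0
    # Walk backwards so each step computes: total = reward + gamma * future
    for reward in reversed(rewards):
        total = reward + gamma * total
    return total


# Toy episode: the agent is rewarded only on the last step.
episode_rewards = [0.0, 0.0, 1.0]
print(discounted_return(episode_rewards, gamma=1.0))
print(discounted_return(episode_rewards, gamma=0.5))
```

With a discount below 1, the same late reward contributes less to the return, which is one way to express a preference for earlier rewards.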

A state in RL represents a specific configuration or situation in which the agent finds itself within the environment at any given time. It encapsulates all the relevant information the agent needs to make decisions. The state can include various factors such as the position of the agent, the state of the environment, relevant variables, sensory inputs, or any other pertinent information.

A policy in RL defines the agent's strategy or behavior for selecting actions based on the current state. The goal of the RL agent is often to learn an optimal policy, which maximizes the expected cumulative reward over time. Optimal policies can vary depending on the specific RL problem and the agent's objectives.
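As an illustration of a policy as a mapping from states to actions, the sketch below picks an action from a table of estimated values. The table `q_values`, the state and action names, and the epsilon-greedy rule are all assumptions chosen for the example; real agents, and LLMs in particular, represent policies very differently.

```python
import random


def epsilon_greedy(q_values, state, epsilon=0.1, rng=random):
    """Select an action for `state` from a table of estimated values.

    q_values: dict mapping state -> {action: estimated cumulative reward}.
    epsilon:  probability of exploring with a random action.
    """
    actions = list(q_values[state])
    if rng.random() < epsilon:
        # Explore: try something other than the current best guess.
        return rng.choice(actions)
    # Exploit: take the action with the highest estimated reward.
    return max(actions, key=lambda action: q_values[state][action])


# Hypothetical value table echoing the lollipop example above.
q_values = {"lollipop_in_reach": {"lick": 1.0, "ignore": 0.0}}
print(epsilon_greedy(q_values, "lollipop_in_reach", epsilon=0.0))
```

Setting `epsilon` to zero gives a purely greedy policy; a small positive value lets the agent keep gathering experience about actions it currently rates lower.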

What is RLHF?

Reinforcement learning from human feedback (or human preferences) is a subtype of standard RL. In traditional RL, the agent learns from environmental feedback (rewards or penalties) on its own. In RLHF, the agent receives feedback from humans instead of, or in addition to, environmental rewards, and this feedback can be more informative or nuanced than the response from the environment alone.

In RLHF, humans provide feedback to the RL agent in the form of evaluations, preferences, or demonstrations. Human feedback can also take other forms, such as explicit comments on the agent's actions expressed in natural language. Such feedback helps the RL agent learn to make better decisions by reinforcing actions that lead to positive outcomes and discouraging actions that lead to negative outcomes, based on human judgment.

The RLHF pipeline involves a so-called reward model, also referred to as the preference model, which evaluates the model's actions and assigns them a score. This separate AI algorithm learns from people's assessments, also known as human preference data. A reward or preference model is meant to stand in for a human in the RLHF pipeline. Therefore, the response received from such a model should ideally be indistinguishable from the feedback of real human evaluators.

This preference model is designed to make it easier to scale human feedback. Instead of having a human evaluate each LLM response, a preference model is generated that automates and replaces the subsequent collection of human feedback with the collection of feedback from the preference model. Since human preferences are collected from more than one person, the resulting model is a sort of average of all feedback data received.
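The idea that the preference model reflects "a sort of average" of many people's feedback can be sketched as follows. The `"A"`/`"B"` vote format and the function name are assumptions made for this example, not a description of any particular annotation pipeline.

```python
from collections import Counter


def aggregate_preferences(votes):
    """Combine several annotators' votes on one pair of responses.

    votes: list of "A" or "B", one entry per annotator.
    Returns the fraction of annotators who preferred response A.
    """
    counts = Counter(votes)
    return counts["A"] / len(votes)


# Four hypothetical annotators compare the same two responses.
votes = ["A", "A", "B", "A"]
print(aggregate_preferences(votes))
```

A preference model trained on many such aggregated judgments learns the consensus of the annotator pool rather than the taste of any single person.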

Based on generated text, a reward model can predict how well that text meets a human's expectations. RLHF is what helps AI models become more human-like.

How RLHF works

Selection of a pre-trained model

To implement RLHF in model customization, ML engineers start by selecting a pre-trained model, since training a so-called "blank" algorithm from scratch takes more time and resources. Since an LLM is already capable, is aware of many concepts, and has a broad understanding of language as well as other basic tasks, the subsequent alignment of the model will occur faster.

Such LLMs are also called foundation models because they constitute the base for building more narrowly specialized models. That is, in order to obtain specialization, the LLM must undergo supervised fine-tuning.

Supervised fine-tuning of a pre-trained model

Supervised fine-tuning of a pre-trained model demands a relatively small set of labeled data, which makes this process considerably easier. Such a golden set collected by human annotators allows the language model to understand the language better and respond to queries more efficiently.

Reward model creation

At this stage, the reward model is trained. In order to create it, human annotators need to evaluate the large language model's responses, ranking them from worst to best. This human preference dataset is then used to train a reward model. As we discussed above, the reward model evaluates the performance of the language model. The feedback or evaluations given by the reward model push RLHF models to generate more precise outputs.
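One common way to turn annotator rankings into a training signal is to split each ranking into pairs and penalize the reward model whenever it scores the lower-ranked response higher. The sketch below assumes a Bradley-Terry style pairwise loss, which this article does not specify; the function names and the best-first ordering of the input are hypothetical.

```python
import math
from itertools import combinations


def ranking_to_pairs(ranked_responses):
    """Turn one ranking (ordered best-first) into (chosen, rejected) pairs."""
    return list(combinations(ranked_responses, 2))


def pairwise_loss(score_chosen, score_rejected):
    """Assumed Bradley-Terry style loss: -log(sigmoid(chosen - rejected)).

    Small when the reward model scores the chosen response higher.
    """
    diff = score_chosen - score_rejected
    return math.log1p(math.exp(-diff))


pairs = ranking_to_pairs(["best answer", "okay answer", "worst answer"])
print(pairs)
# Loss shrinks as the reward model separates the pair in the right direction.
print(pairwise_loss(2.0, 0.0), pairwise_loss(0.0, 2.0))
```

A ranking of three responses yields three comparison pairs, so ranking several responses per prompt gives more training examples than a single A-versus-B judgment.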

Fine-tuning through the reward model

The agent interacts with the environment, taking actions and observing the resulting states and rewards. However, unlike traditional reinforcement learning, where the reward signal alone guides learning, RLHF incorporates human feedback in the form of rewards given by a reward model.

During the reinforcement learning phase, the LLM essentially goes through fine-tuning again, but this time with the help of feedback from the reward model, whose scores are derived from the human preference dataset. RLHF often uses an RL algorithm called Proximal Policy Optimization (PPO) to improve the policy of the model.
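For readers curious about what PPO optimizes, here is a minimal sketch of its clipped surrogate objective for a single action. The variable names and the clipping range of 0.2 are assumptions for illustration; production RLHF implementations work on batches of tokens and usually add further terms (for example a penalty for drifting too far from the original model) that are not shown here.

```python
import math


def ppo_clipped_objective(logp_new, logp_old, advantage, clip_eps=0.2):
    """Clipped surrogate objective for one action (to be maximized).

    logp_new / logp_old: log-probability of the action under the updated
                         and the previous policy.
    advantage:           how much better the action was than expected,
                         e.g. derived from the reward model's score.
    clip_eps:            hypothetical clipping range.
    """
    ratio = math.exp(logp_new - logp_old)
    clipped_ratio = max(1.0 - clip_eps, min(1.0 + clip_eps, ratio))
    # Taking the minimum removes the incentive for overly large updates.
    return min(ratio * advantage, clipped_ratio * advantage)


# The new policy doubles the probability of a well-rewarded action,
# but the objective only credits it up to the clipping limit.
print(ppo_clipped_objective(math.log(0.4), math.log(0.2), advantage=1.0))
```

The clipping is what makes the optimization "proximal": each update is encouraged to stay close to the previous policy.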

Ethical and Safety Ramifications of RLHF

Reinforcement Learning from Human Feedback is a great way to teach AI systems in a way that aligns them with human values and preferences. However, it has some disadvantages that cannot be fixed completely. That's why we need to have extra safety measures in place to deal with them and share what we learn with others to keep improving.

RLHF is supposed to make LLMs act in ways that match people's expectations, but it's tricky to ensure that AI truly understands our values and beliefs. To mitigate the risks, AI developers need to design RLHF models in a way that respects different, even conflicting, moral beliefs and cultural differences.

RLHF can also be misused by people to spread false information or reinforce societal biases. For instance, malicious actors could provide biased or false feedback to an RLHF-trained AI, leading it to produce content that is misleading or outright untrue. This misuse of RLHF technology contributes to the proliferation of misinformation across various online platforms.

We need rules and systems to make sure RLHF is used responsibly. It is essential to establish clear guidelines and regulations governing the development, deployment, and use of RLHF applications to mitigate the risks associated with misinformation and societal harm. This includes measures such as verifying the authenticity of human feedback, implementing safeguards against biased training data, and monitoring the output of RLHF systems for signs of misinformation or prejudice.

By providing guidance, humans can steer the learning process and ensure that AI systems achieve human-level performance while behaving ethically and responsibly. One of the ways to do this is to implement expert feedback in the process of RLHF model development.

Implementing multiple quality control methods, similar to those utilized on the Toloka platform, can enhance the effectiveness of human efforts in addressing the ethical implications of RLHF, particularly those related to misinformation and societal biases.

RLHF with domain experts

To create a specialized large language model in domains such as physics, healthcare, programming, and others, leveraging domain experts for data collection is crucial. However, accessing a large number of experts simultaneously can be challenging and expensive. Services like Toloka cater to this need, as they aggregate diverse experts and handle payments and communications efficiently.

The primary goal of organizing data collection in this manner is to ensure the acquisition of high-quality data. It's important to exclude any unreliable or ethically questionable content, as misinformation and biases can result from using such data for training Large Language Models (LLMs) via Reinforcement Learning from Human Feedback (RLHF). Consequently, joining the domain expert team involves a rigorous selection process, including multiple tests and qualification checks.

By implementing a blend of quality control strategies, Toloka mitigates the risk of incorporating untrustworthy or ethically questionable data into RLHF training datasets. Toloka has developed its own set of quality assurance methods to maintain the integrity of the training process. These measures ensure that LLMs trained through RLHF are honest, truthful and helpful.

Toloka's quality control methods

Toloka employs multiple quality control methods to ensure the accuracy and reliability of provided data. Here's an overview of these methods:

Post-verification. Once domain experts finish their assignments, their work is cross-assessed by other experts, who manually review the quality of completed tasks. Post-verification maintains the integrity and reliability of the results obtained from the experts, as it helps to identify and correct any errors or inconsistencies in the submitted work. This validation process helps to keep the accuracy of the results high;

Careful selection, testing, and training of experts. Toloka selects experts based on their skills, education, and experience in the relevant field of expertise. Once domain experts are selected, they undergo testing to assess their knowledge and abilities in a specific area. They are then trained on the specifics of working on the Toloka platform, writing techniques, quality requirements, and other aspects of their work;

Platform-wide antifraud system. Toloka has an integrated real-time anti-fraud system at every level of the platform to detect and prevent cheating or fraudulent behavior. This system ensures that participants are genuine individuals.

Toloka ensures that data contributed by experts meets high ethical standards and can be relied upon for training RLHF models. This accountability instills confidence in the ethical integrity of RLHF technology and encourages responsible use and development within the AI community. Get in touch with the Toloka team to get high-quality data for your project.

What is RLAIF?

In RLHF, humans do not interact directly with the LLM during training; rather, it is the preference model trained on human feedback that gives a human-like assessment of the LLM's actions. But such a process isn't fully automated, since the training of the reward or preference model still requires collecting human preferences.

RLAIF (reinforcement learning from AI feedback) provides an alternative approach to training RL agents that complements traditional methods such as RLHF. Reinforcement Learning from AI Feedback uses feedback from AI models to automate training and improve the capabilities of other AI models. It is very similar to RLHF, but here the AI is assisted by another AI instead of a human.

According to the paper “RLAIF: Scaling Reinforcement Learning from Human Feedback with AI Feedback”, RLAIF achieves comparable performance to RLHF across tasks such as harmless dialogue generation, helpful dialogue generation, and text summarization, as rated by human evaluators.

RLAIF is seen as a solution to RLHF's challenges with scalability and resource constraints. This is because the data acquisition process is automated, unlike in RLHF, where people are engaged in data acquisition. However, this automated process is also controlled. A special so-called constitution is created to govern the kind of data that AI can generate. The overall training process for RLAIF models is similar to RLHF, but it involves some additional stages.

How does RLAIF work?

The preference model in RLAIF is trained on feedback from a separate model called the feedback model, unlike RLHF, where human annotators provide a dataset. This separate model is also referred to as a Constitutional AI because it is guided by a constitution, a relatively short set of rules for an AI to follow to make itself harmless, helpful, and honest. Such a feedback model may be represented by another LLM.

The large language model that is meant to be trained, also referred to as the response model, generates two responses to the prompt. The feedback model then evaluates these two responses, based on which numerical preference scores are produced.

This iterative process creates an AI-generated dataset comprising prompts and two responses with scores from the AI, i.e. feedback model. This AI feedback dataset is similar to the one obtained by collecting human preferences in RLHF.
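The loop described above can be sketched as a small data-collection routine. Everything here is illustrative: `PreferenceRecord`, `build_ai_feedback_dataset`, and the two toy stand-in functions are hypothetical names, and the toy feedback rule (longer answers score higher) only exists so the example runs without a real model.

```python
from dataclasses import dataclass


@dataclass
class PreferenceRecord:
    prompt: str
    response_a: str
    response_b: str
    score_a: float  # feedback model's preference for response A
    score_b: float  # feedback model's preference for response B


def build_ai_feedback_dataset(prompts, generate_pair, score_pair):
    """Collect one record per prompt.

    generate_pair: stands in for the response model (returns two responses).
    score_pair:    stands in for the feedback model (returns two scores).
    """
    dataset = []
    for prompt in prompts:
        response_a, response_b = generate_pair(prompt)
        score_a, score_b = score_pair(prompt, response_a, response_b)
        dataset.append(
            PreferenceRecord(prompt, response_a, response_b, score_a, score_b)
        )
    return dataset


def toy_generate_pair(prompt):
    # Placeholder for sampling two responses from an LLM.
    return f"{prompt} (short answer)", f"{prompt} (longer, more detailed answer)"


def toy_score_pair(prompt, response_a, response_b):
    # Placeholder for an LLM judge guided by a constitution.
    total = len(response_a) + len(response_b)
    return len(response_a) / total, len(response_b) / total


dataset = build_ai_feedback_dataset(
    ["How do plants grow?"], toy_generate_pair, toy_score_pair
)
print(dataset[0])
```

In a real pipeline both stand-ins would be calls to language models, but the shape of the resulting records stays the same: a prompt, two responses, and a score for each.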

Like in RLHF, this AI-generated data is employed to train preference models that help the LLM give better and safer responses. However, unlike human feedback, preferences here are determined by the constitution that humans develop to regulate feedback model responses.

Step-by-step RLAIF training

Revision and critique phase

The response model is a pre-trained LLM that has been fine-tuned on AI-generated training data: prompts paired with so-called revised responses produced through the revision process. Because harmful content is removed during revision, these responses are deemed safe.

Revision starts with feeding a separate helpful RLHF model with provocative prompts, the responses to which are then critiqued by the same RLHF model. The critique phase includes the random use of constitutional principles instilled into the RLHF pipeline by humans.

The RLHF model is indeed helpful, since it is smart enough to generate a useful response to a very large number of prompts. However, its responses will not always be harmless. For example, such a model may provide assistance or information on how to engage in illegal activities, such as hacking, fraud, or other criminal acts.

After critiquing its own response, the RLHF model is asked to revise the original response. Several iterations of this critique-revision process take place to arrive at the final revision of the response. From such responses, a harmless corpus of data is assembled for fine-tuning the response model.
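The critique-revision loop can be outlined in a few lines. This is a sketch under stated assumptions: `respond`, `critique`, and `revise` are hypothetical callables standing in for prompts sent to the helpful RLHF model, and the number of rounds is arbitrary.

```python
import random


def critique_and_revise(prompt, respond, critique, revise, principles,
                        n_rounds=2, rng=random):
    """Produce one (prompt, revised response) pair for supervised fine-tuning.

    respond, critique, revise: hypothetical wrappers around the same
                               helpful model, each using a different
                               instruction.
    principles:                the constitution, as a list of rules.
    """
    response = respond(prompt)
    for _ in range(n_rounds):
        # A constitutional principle is drawn at random for each round.
        principle = rng.choice(principles)
        feedback = critique(prompt, response, principle)
        response = revise(prompt, response, feedback)
    return prompt, response


# Toy stand-ins so the sketch runs without a model.
example = critique_and_revise(
    "provocative prompt",
    respond=lambda p: "draft",
    critique=lambda p, r, rule: f"check against: {rule}",
    revise=lambda p, r, fb: r + " [revised]",
    principles=["Avoid helping with illegal activities."],
)
print(example)
```

Running this over many provocative prompts yields the corpus of prompts and final revisions that the next step uses for fine-tuning.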

Supervised Learning for Constitutional AI

At this point, the response model does not exist yet. In the previous step, a dataset for fine-tuning was built to train it. After this step, which the authors of the paper “Constitutional AI: Harmlessness from AI Feedback” call Supervised Learning for Constitutional AI (SL-CAI), the pre-trained language model will evolve into an SL-CAI model (response model).

Fine-tuning with revised responses improves the outputs of the future response model, which will be used to train the preference model. This SL-CAI (or response) model is also the model that the final RL stage trains further to produce the final RLAIF model.

Harmless AI feedback dataset generation

The previously mentioned feedback model evaluates responses to harmful queries given by the fine-tuned SL-CAI model, also referred to as the response model. It uses the constitution to decide which response is better, so the resulting AI feedback dataset, which contains these evaluations, will favor harmless responses.

To figure out which answer is preferred, the model calculates the log-probability for each answer. This gives us a number indicating how likely each answer is based on the information provided in the question.

After calculating these log probabilities, the system normalizes them. Normalizing means adjusting the numbers so they're easier to compare. This ensures that the probabilities for both answers add up to 1, making them easier to work with.
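As a worked example of this normalization, the sketch below converts two log-probabilities into two preference probabilities that sum to 1 (a two-way softmax). The function name and the sample values are assumptions; subtracting the maximum first is a standard numerical-stability trick and does not change the result.

```python
import math


def normalize_logprobs(logp_a, logp_b):
    """Turn two log-probabilities into preferences that sum to 1."""
    # Shift by the maximum so the exponentials cannot overflow.
    shift = max(logp_a, logp_b)
    weight_a = math.exp(logp_a - shift)
    weight_b = math.exp(logp_b - shift)
    total = weight_a + weight_b
    return weight_a / total, weight_b / total


# Hypothetical log-probabilities the feedback model assigns to
# "response A is better" and "response B is better".
pref_a, pref_b = normalize_logprobs(-0.4, -1.5)
print(pref_a, pref_b, pref_a + pref_b)
```

If the feedback model considers both answers equally likely, each one receives a normalized preference of 0.5.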

Now, we have a set of preference data. In this dataset, we include the prompt, the possible completions, and the normalized probabilities for each answer. This dataset helps AI make decisions about which answer is the best choice given the question.

The desired outcomes in the set of preference data are continuous scalars, which means they are numbers that can take any value within a certain range. Specifically, in this case, these numbers fall between 0 and 1. This range is different from RLHF, where the desired outcomes are explicitly defined, meaning they are clearly identified as "better" or "worse" based on human feedback.

Preference model training

Before aligning large language models via reinforcement learning from AI feedback we must first train the preference model on the dataset obtained in the previous step. A trained preference model can now assign preference scores to any input.
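Because the AI feedback labels are probabilities between 0 and 1 rather than hard "better" or "worse" labels, one plausible training objective is a cross-entropy loss with soft targets. The sketch below is an assumption about how such a loss could look, not the exact objective used in any particular paper; `score_a` and `score_b` are the preference model's raw scores for the two responses.

```python
import math


def soft_preference_loss(score_a, score_b, target_a):
    """Cross-entropy between predicted and AI-labeled preference.

    score_a, score_b: preference model scores for responses A and B.
    target_a:         normalized probability (0..1) from the feedback
                      model that response A is the better one.
    """
    # Predicted probability that A is preferred over B.
    p_a = 1.0 / (1.0 + math.exp(-(score_a - score_b)))
    eps = 1e-12  # guards against log(0)
    return -(target_a * math.log(p_a + eps)
             + (1.0 - target_a) * math.log(1.0 - p_a + eps))


# The feedback model was 90% in favor of response A.
print(soft_preference_loss(2.0, 0.0, target_a=0.9))  # prediction agrees
print(soft_preference_loss(0.0, 2.0, target_a=0.9))  # prediction disagrees
```

Setting `target_a` to exactly 0 or 1 recovers the hard-label case, so the same loss covers both human-style and AI-style preference data.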

Final RL stage

The preference model helps to train the SL-CAI model during the reinforcement learning process to obtain the final RLAIF model. A final AI assistant or LLM that is trained through RLAIF uses outputs of a preference model built using the AI feedback from another LLM or RLHF model to generate better responses.

RLAIF Ethical Limitations

AI models can be inaccurate, lack some information, or use the wrong data. AI-generated feedback can also be inaccurate or biased. LLMs may lack contextual understanding or may provide feedback based on incomplete or incorrect data. They may also inherit inconsistencies from the original training data, which would be reflected in the feedback they provide for the preference model training.

If the feedback model used in RLAIF inherits biases or inconsistencies from the original training data, it may inadvertently amplify these biases in the feedback process. This may reinforce discriminatory practices or perpetuate societal biases, which can potentially cause unethical outcomes.

Unlike RLHF, where adjustments can be made by refining the human feedback mechanism, RLAIF is more difficult to quickly adjust in case of inconsistent or inappropriate datasets. For this purpose, the feedback model itself (represented by another LLM or RLHF model) has to be reconfigured or replaced. This process can be time-consuming and resource-intensive, making it less flexible in responding to evolving data or feedback quality issues.

RLAIF involves AI systems making decisions based on feedback generated by other AI systems, with limited human oversight. This raises concerns about accountability and transparency in decision-making processes. Without adequate mechanisms for human intervention or review, RLAIF systems may produce results that are difficult to interpret or justify.

As we already know, a constitution sets the guidelines that govern the feedback model in Reinforcement Learning from AI Feedback (RLAIF) systems to prevent harmful or unethical outputs. However, RLAIF systems based on complex neural network architectures may lack interpretability. This makes it difficult to understand how decisions are made and whether they align with the ethical guidelines outlined in the constitution.

Even if ethical principles are clearly defined and integrated into a feedback model, enforcing them can be challenging, especially in systems involving multiple agents. Ethical considerations and societal norms are not static and evolve over time in response to the changes in human values, technological advances, and cultural changes. To keep the constitution relevant and adaptable to changing conditions, it demands sustained monitoring, updating, and engagement with the concerned parties.

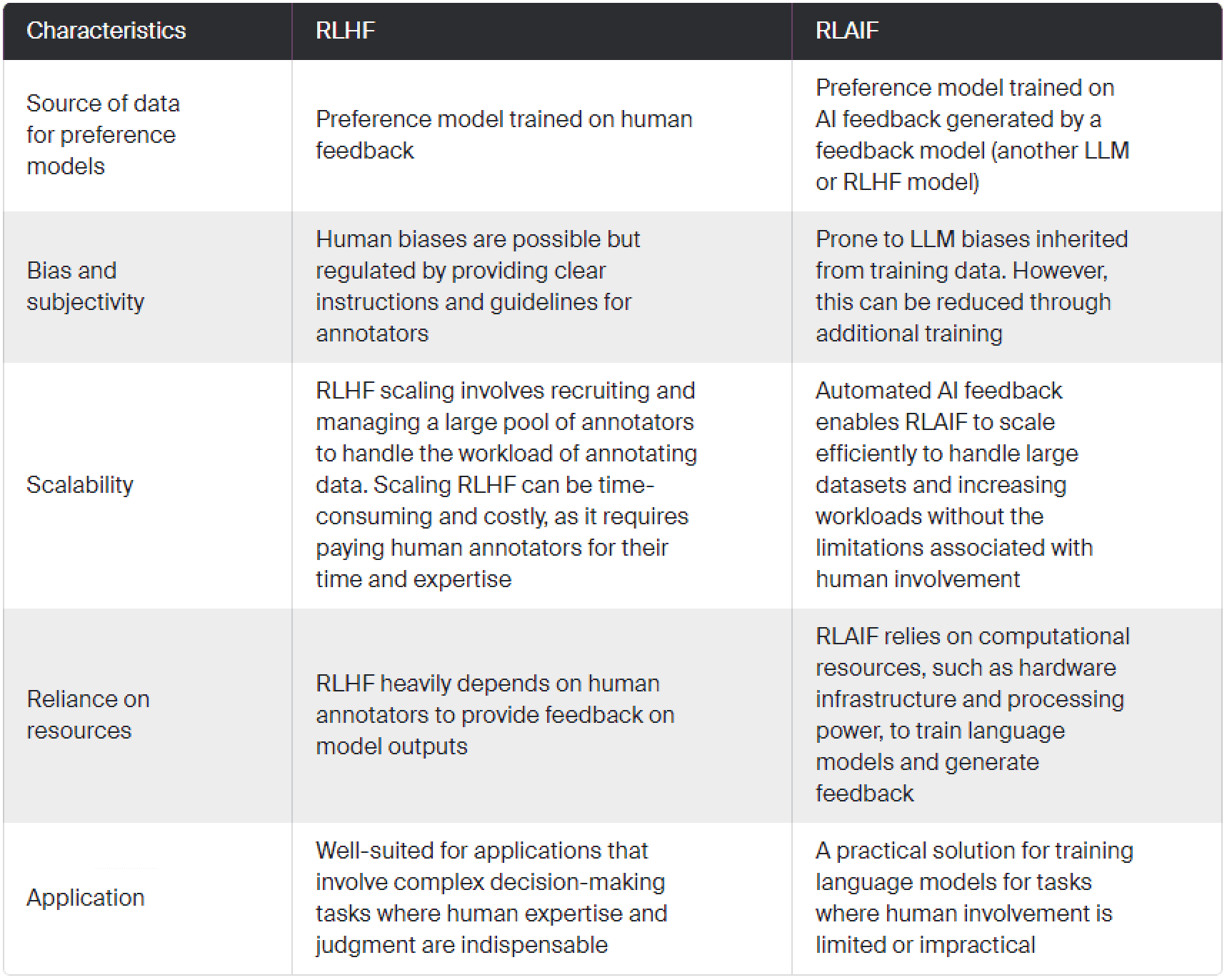

What are the differences between RLHF and RLAIF?

The idea of using a set of rules, i.e., a constitution, in generating AI feedback is the major differentiating factor between RLHF and RLAIF. In RLAIF, the preference model is built on the basis of data from the feedback model (another LLM, for example), while in RLHF the role of the feedback model is performed by people, whose feedback serves as the foundation of the reward or preference model. That is, the fundamental difference between these two approaches is the change in the method of creating training data for the preference model.

You need a tremendous amount of human preferences to train large language models through reinforcement learning. Naturally, RLHF's reliance on massive human feedback datasets for training leads to a time-consuming and expensive collection of human annotations and training. At Toloka we customize data collection pipelines to provide businesses the data they need for their specific project. Learn about our approach to sourcing high-quality data for RLHF.

RLAIF, on the other hand, uses an off-the-shelf LLM to generate preferences instead of relying on human annotators. This means that instead of collecting human annotations directly, RLAIF leverages the capabilities of the LLM to provide feedback, thereby bypassing the need for large-scale human input.

However, RLAIF still involves human input in setting up the training process and defining evaluation criteria but relies less on direct human annotation for generating preferences.

Benefits and disadvantages of both approaches

Benefits of RLAIF

RLAIF can potentially reduce costs by automating the feedback generation process, eliminating the need for extensive human annotation;

Leveraging AI-generated feedback allows for the efficient generation of large datasets, enabling scalability to handle complex tasks and larger models;

RLAIF can accelerate the training process by automating feedback generation, leading to faster iterations and model improvements.

Disadvantages of RLAIF

AI-generated feedback may lack the depth and nuance of human judgments, potentially leading to less accurate annotations;

Since people are minimally involved in the process, RLAIF may raise accountability and transparency concerns related to the decision-making process;

RLAIF relies on pre-trained large language models that may inherit biases present in the training data, leading to biased feedback generation;

The final result depends heavily on the feedback model used, which has to be trained well enough to generate well-calibrated and accurate preference labels;

RLAIF can introduce additional complexity compared to RLHF, since another AI system is involved in generating preference scores or evaluations of the model's outputs.

Benefits of RLHF

Human annotators can provide nuanced and contextually rich feedback that captures subtle aspects of language understanding and generation;

Human feedback can ensure high-quality annotations, particularly for complex tasks where human judgment is crucial;

Human-provided feedback is often more interpretable, allowing researchers to understand why certain responses are preferred over others.

Disadvantages of RLHF

Collecting and annotating large datasets of human preferences can be time-consuming, labor-intensive, and expensive;

Access to a diverse pool of human annotators with the necessary expertise may be limited, particularly for specialized domains or languages.

When to use RLAIF instead of RLHF?

Reinforcement Learning from AI Feedback and Reinforcement Learning from Human Feedback are two distinct approaches to training reinforcement learning (RL) agents, each with its own set of advantages and considerations. Deciding which one to choose depends on various factors, including the nature of the target task, the availability of human feedback, and the desired performance of the RL agent.

RLAIF can be a suitable alternative to RLHF in certain cases where human involvement is limited or where the benefits of automated feedback generation outweigh the reliance on human annotators. Here are some situations where RLAIF may be preferred over RLHF:

Cost Constraints. RLAIF can be advantageous when there are budgetary constraints or limitations on resources for collecting and annotating large datasets of human preferences. By automating the feedback generation process, RLAIF reduces the costs associated with human annotation;

Scalability. RLAIF offers scalability advantages compared to RLHF, particularly when handling large-scale datasets or complex tasks. Automated feedback generation using off-the-shelf language models allows for efficient processing of vast amounts of data;

Time Sensitivity. RLAIF can accelerate the training process by eliminating the need for extensive human involvement in providing feedback. This allows for faster iterations and model improvements.

When RLHF is a must and you cannot go with RLAIF?

Reinforcement Learning from Human Feedback tends to be a more appropriate approach in scenarios where human judgment and experience are paramount. It works best for tasks that require subtle insights and complex reasoning where human expertise is critical, such as medical diagnosis, legal reasoning, and creative writing.

RLHF's human oversight helps ensure that models are trained responsibly and in accordance with ethical principles in domains where ethical concerns and cultural sensitivity are of utmost importance, such as health care, law enforcement, and social services.

RLHF is favored in applications involving substantial risks or potential consequences of wrong decisions. For instance, self-driving cars, healthcare recommendations, and financial advice require reliable and valid feedback from human experts.

RLHF allows the collection of individualized feedback from annotators with domain-specific expertise. This is useful for AI applications where the availability of training data is limited or where datasets should be highly specialized and diversified.

RLHF vs RLAIF: Key Characteristics Enabling a Choice in Favour of One of the Approaches

RLHF and RLAIF use cases in NLP

Reinforcement Learning from AI Feedback and Reinforcement Learning from Human Feedback have diverse applications in Natural Language Processing (NLP) and language model development. Here are some prominent use cases of RLHF and RLAIF in NLP.

Text Summarization. RLHF can be used to improve text summarization systems by using human judgments of summaries as feedback. Human preferences can help the model understand which summaries are informative, concise, and well-written. As previously mentioned, RLAIF also achieves comparable performance to RLHF on the summarization task;

Dialogue Systems. RLAIF can improve dialogue systems by learning from automated evaluations or user interactions with the system. AI-generated feedback can guide the model to generate more engaging, contextually relevant, and coherent responses. Human feedback in RLHF also can help the model generate more precise and informative responses to user queries. By learning from human judgments about the relevance and accuracy of answers, RLHF can improve question-answering systems;

Content Generation. RLAIF can improve content generation tasks, such as generating stories or composing poetry, by learning from AI-generated feedback about the quality and creativity of the generated content. AI-generated feedback can help the model produce more interesting and varied output.

AI systems help generate content at scale and refine it based on feedback, while humans provide domain expertise, creativity, and subjective judgment. RLHF and RLAIF encourage humans and AI systems to collaborate on content creation tasks, leveraging the strengths of both.

Correction of grammar. RLHF can be applied to grammar correction systems by learning from the corrections that humans make to grammatically incorrect sentences. Human feedback can guide the model to be more effective in the detection and correction of grammar errors.

In RLAIF for grammar correction, the AI system evaluates sentences based on pre-defined grammar rules and linguistic patterns in order to identify grammatical errors and provide feedback on possible corrections. Both approaches work towards providing users with more accurate and contextually appropriate corrections.

Machine Translation. By learning from human corrections or preferences on the translated text, RLHF can improve machine translation systems. Human feedback can guide the model to produce translations that are more accurate, fluent, and contextually appropriate. Through iterative learning from AI-generated feedback in RLAIF, the machine translation system continuously improves. It refines its translation policies and becomes more accurate and fluent over time.

Other uses

Beyond NLP and LLM training, Reinforcement Learning from Human Feedback and Reinforcement Learning from Artificial Intelligence Feedback apply to a variety of domains:

Autonomous Vehicles. In autonomous vehicle development, RLHF can be used to improve driving behavior by learning from human preferences on driving performance and safety. Human evaluators guide the reinforcement learning agent to make safer and more efficient driving decisions by providing feedback on the vehicle's actions in different scenarios. RLAIF can also improve autonomous vehicle control systems by learning from AI-generated feedback.

Robotics. During RLAIF, a robot tasked with moving objects from one place to another can receive AI-generated feedback on its performance, guiding it to improve its manipulation skills and optimize its movements. Robots can adapt and optimize their strategies over time by receiving feedback from AI systems on the actions they take.

In RLHF for robotics, humans can provide feedback to robots on task performance, guiding them to learn complex tasks more efficiently. Humans can evaluate the robot's actions in tasks such as grasping objects, navigating environments, or interacting with humans. For instance, in industrial settings, workers can provide feedback to robotic arms on the accuracy and precision of movements during assembly tasks, leading to improved performance and reliability.

Gaming. RLAIF enables the development of game-playing agents that can learn to play games at a high level by receiving feedback on their performance. They can compete against each other or human players, continuously improving their strategies through reinforcement learning. RLHF can help game-playing agents learn to adapt their behavior and strategies based on human evaluators' feedback. Agents receive feedback on their actions and decisions, which allows them to refine their behavior to better match human expectations and preferences.

Healthcare. RL algorithms can be integrated into clinical decision support systems to help healthcare professionals make optimal decisions regarding diagnosis, choice of treatment, and patient care management. RLAIF and RLHF can improve the accuracy and relevance of recommendations by utilizing AI-generated feedback or human expertise.

RL algorithms may be taught to analyze medical images (e.g. MRI, CT scans) for disease detection, segmentation, and classification. RLAIF and RLHF approaches can be used to improve image interpretation algorithms based on feedback from AI systems or human experts.

Concerns related to RLAIF use in high-risk domains

We have pointed out that RLAIF can be applied in demanding domains such as healthcare and self-driving car training. By demanding, we mean spheres of application that are directly related to people's safety and health preservation. However, AI-generated feedback may contain errors or uncertainties leading to incorrect predictions or recommendations. In healthcare as well as autonomous car development, such errors could have serious consequences for patient safety and well-being.

Conclusion

Reinforcement learning from AI feedback implies that the RL agent interacts with an environment and receives feedback from an AI model rather than from a human, as is the case in RLHF. RLAIF offers cost-efficiency, faster data generation and training iterations, and scalability, especially when human expertise is limited or costly to obtain at scale. However, it also poses challenges related to bias, errors, and a lack of the depth and nuance found in human judgments.

On the other hand, RLHF provides valuable domain expertise, interpretability, and adaptability benefits but may face limitations in scalability and consistency. The final choice between RLAIF and RLHF has to be made according to the specific requirements and constraints of the AI application. In some cases, it may even be better to find a balance between utilizing AI capabilities and human insight to achieve optimal performance in reinforcement learning tasks.