Datasets

High-quality datasets are the foundation for training AI models and LLMs. Our expertly curated datasets are built for precision and reliability, supporting effective fine-tuning and stronger model performance. These datasets are available for purchase.

Tau-bench Dataset Extension

Benchmark agent reasoning, planning, and tool use in complex B2E workflows.

University-level Math Reasoning Dataset

This dataset is designed to develop models' complex reasoning and problem-solving skills in STEM subjects.

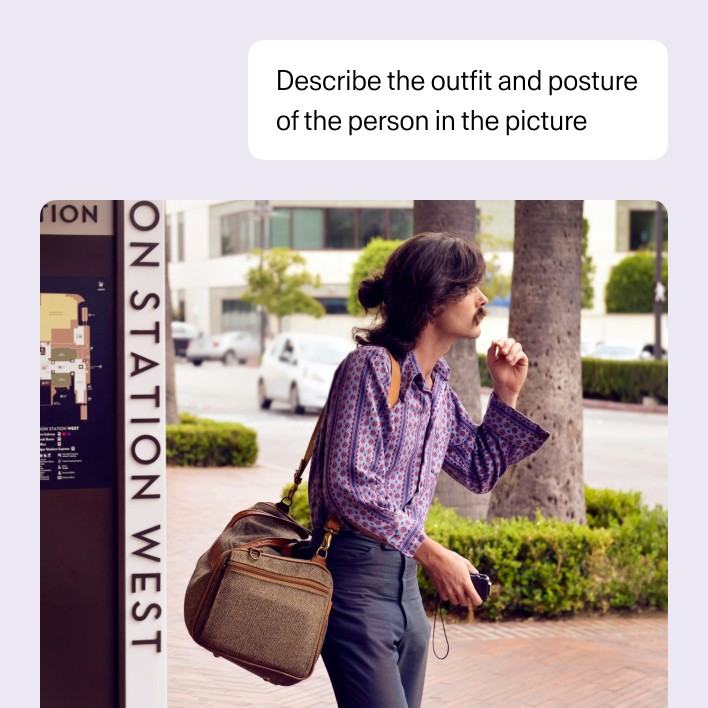

Multimodal Conversations Dataset

This dataset is designed to enhance image understanding, reasoning, and visual analysis in vision-language models (VLMs).
