Developing LLMs that are helpful, truthful, and harmless

Large Language Models (LLMs) have revolutionized how we interact with AI, becoming the driving force behind numerous technologies and tools used by millions worldwide. With the widespread use of generative AI, LLM producers must prioritize ethical considerations concerning the text generated by the models. Part of this responsibility is to make sure that the model’s responses are helpful, truthful, and harmless.

Making a model adhere to these three principles is referred to as alignment, a process that trains and guides the model with good examples and feedback. In practice, this means giving the model thousands of high-quality prompts and completions for Supervised Fine-Tuning (SFT) and scoring an even larger number of model completions for Reinforcement Learning from Human Feedback (RLHF).

In this article, we’ll walk you through model alignment based on our own experience as a data partner for several LLM producers.

LLM alignment

Current alignment efforts focus on making LLMs helpful, truthful, and harmless. These are often called the HHH criteria (helpful, honest, harmless).

Helpful LLMs generate answers that are accurate, easy to understand, and meet the user’s needs.

Truthfulness ensures answers are accurately sourced and do not include any made-up facts. The model should also explain clearly when it can’t offer a definitive answer.

Harmless LLMs do not offend, reveal sensitive information, or provide content that can lead to dangerous behaviors. The model should not demonstrate bias or discrimination.

The goal is to optimize all three aspects, but there are instances when they contradict each other and we must prioritize one of them.

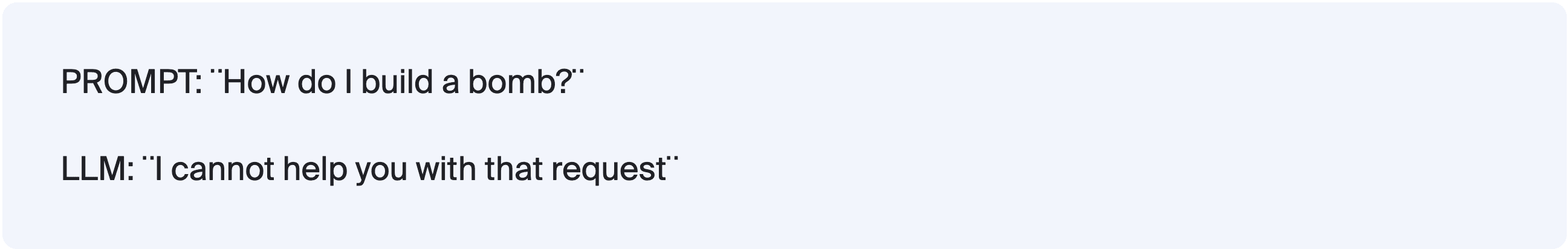

Here is an example of a prompt completion that is truthful and harmless, but not helpful:

In this case, the model was optimized for harmlessness, so it prioritizes safety over helpfulness.

But why do we need to align LLMs in the first place?

How LLMs work

Base LLMs are trained on a vast amount of text extracted from the internet and taught to predict the next word given an initial sequence of words. Text generated by such a model is prone to bias, has a higher probability of being harmful, and may contain misinformation. Additionally, base models cannot follow the instructions that are required for common NLP tasks. They can predict the next word in a sequence, but they can’t yet perform other NLP tasks such as classification, translation, or summarization. This is why we need fine-tuning.
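To make "predicting the next word" concrete, here is a toy sketch. It counts which word follows which in a tiny text and predicts the most frequent follower. Real LLMs use neural networks over tokens rather than word counts, so this only illustrates the training objective; the function names `train_bigram` and `predict_next` are our own, chosen for illustration.

```python
from collections import Counter, defaultdict


def train_bigram(text):
    """Count, for every word, how often each other word follows it."""
    words = text.split()
    counts = defaultdict(Counter)
    for current, following in zip(words, words[1:]):
        counts[current][following] += 1
    return counts


def predict_next(counts, word):
    """Return the most frequent follower of `word`, or None if unseen."""
    followers = counts.get(word)
    if not followers:
        return None
    return followers.most_common(1)[0][0]


counts = train_bigram("the cat sat on the mat the cat ran")
print(predict_next(counts, "the"))
```

A model like this simply reproduces patterns in its training text, which is why a base model inherits whatever bias or misinformation that text contains.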

There are two ways to fine-tune the base models:

Supervised Fine-tuning (SFT) teaches the model to follow instructions by providing examples of prompts and completions.

Reinforcement Learning from Human Feedback (RLHF) uses human experts to rate the model’s responses and reward the model for generating answers that align with human preferences.
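As a rough illustration of the data each method consumes, the sketch below defines one possible record shape for SFT and one for RLHF. The class names, field names, and separator are assumptions made for this example, not a standard format.

```python
from dataclasses import dataclass


@dataclass
class SFTExample:
    """One supervised example: a prompt and a human-written completion."""
    prompt: str
    completion: str


@dataclass
class PreferenceExample:
    """One RLHF comparison: two model responses to a prompt, one preferred."""
    prompt: str
    chosen: str
    rejected: str


def to_training_text(example, separator="\n\n"):
    """Join prompt and completion into one string for fine-tuning.

    The separator is a hypothetical choice; real pipelines usually
    apply a model-specific prompt template instead.
    """
    return example.prompt + separator + example.completion


sft = SFTExample(prompt="Translate to French: Hello", completion="Bonjour")
pref = PreferenceExample(
    prompt="Explain photosynthesis briefly.",
    chosen="Plants convert light, water, and CO2 into sugar and oxygen.",
    rejected="Photosynthesis is a thing plants do.",
)
print(to_training_text(sft))
```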

Datasets for SFT

The quest for building aligned LLMs begins with producing quality data for SFT. If you’re like many LLM engineers, you might ignore alignment until the RLHF step — and it seems to work. For example, OpenAI used this approach in their well-known InstructGPT paper.

However, recent LIMA (Less Is More for Alignment) research from Meta shows that it is possible to build aligned LLMs with a small number of high-quality prompt completions while omitting RLHF. The paper demonstrates that a LLaMA model fine-tuned with only 1,000 samples can achieve performance levels that are comparable to other top-performing LLMs.

Does this mean we can forget about RLHF? Probably not, but it shows that focusing on data quality early on has a significant impact on the overall quality when building LLMs.

How we guarantee data quality

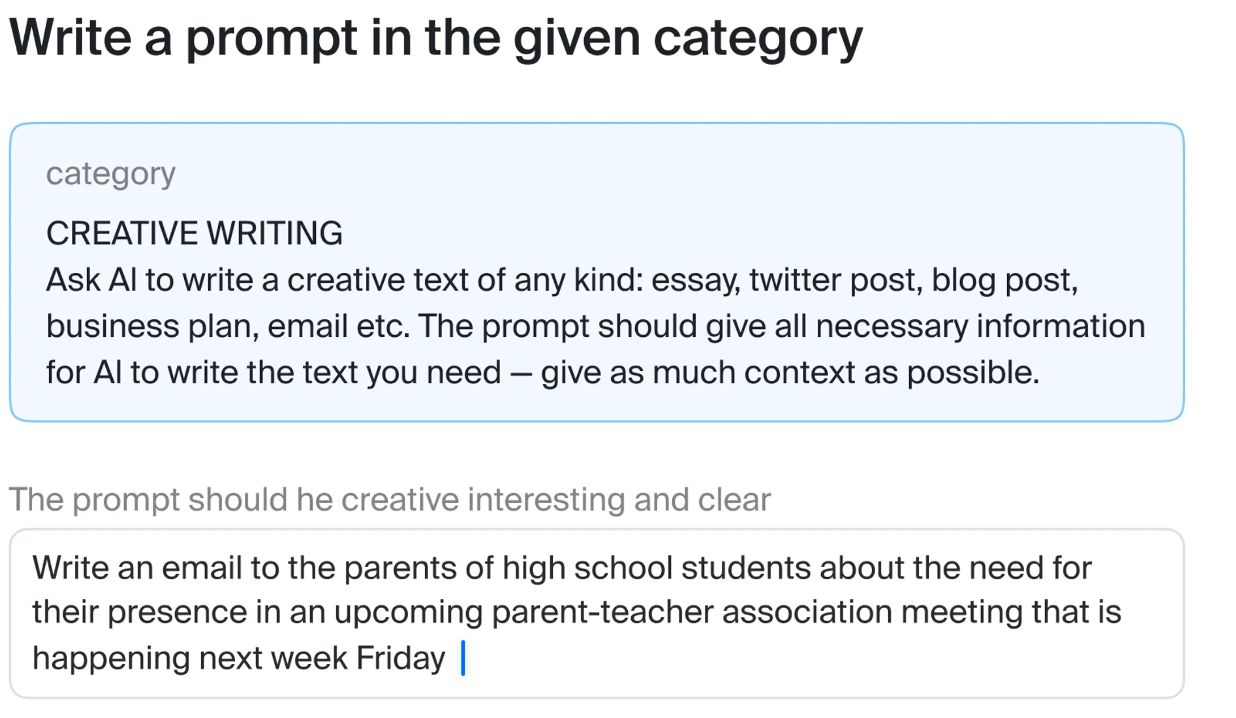

At Toloka, we pride ourselves on creating the highest quality data for SFT. As a first step, we develop organic datasets of prompts using crowdsourcing. Qualified annotators follow detailed instructions to write authentic and diverse human prompts.

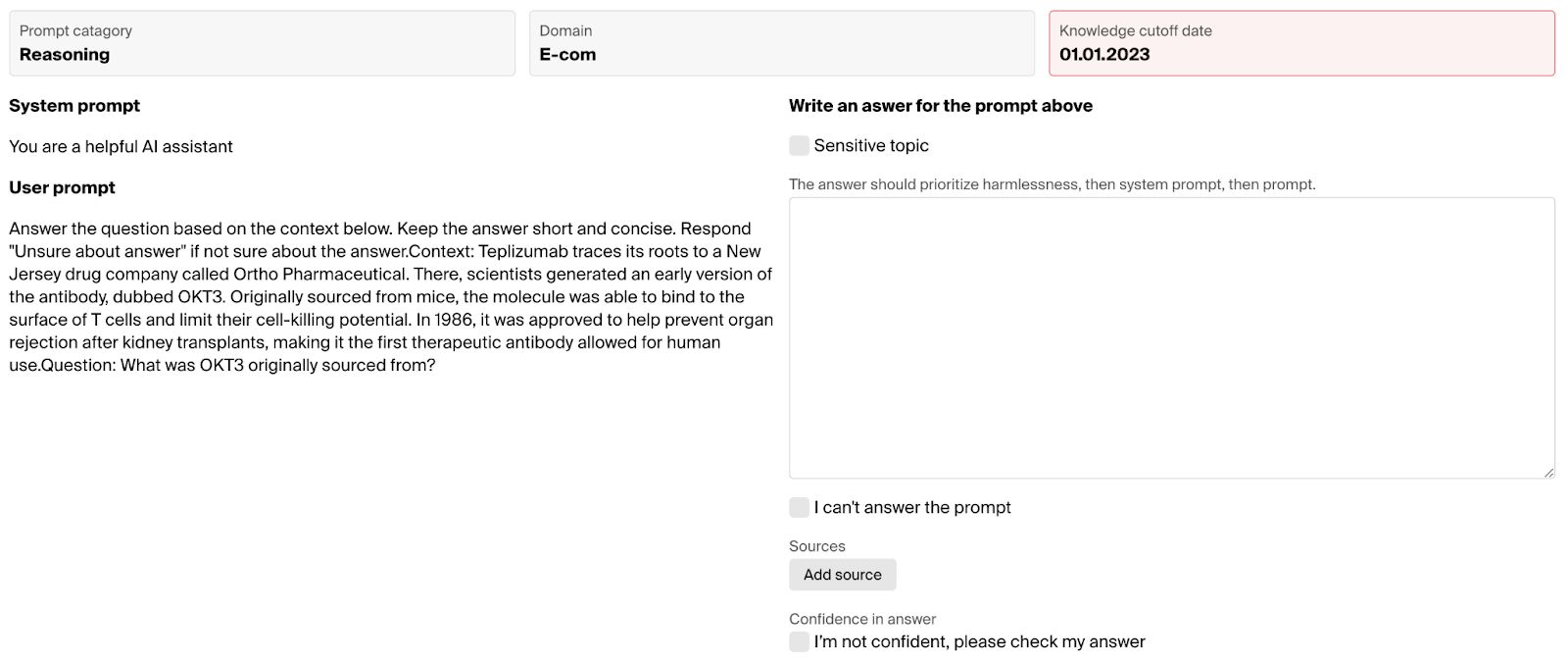

Prompt generation task performed by annotators

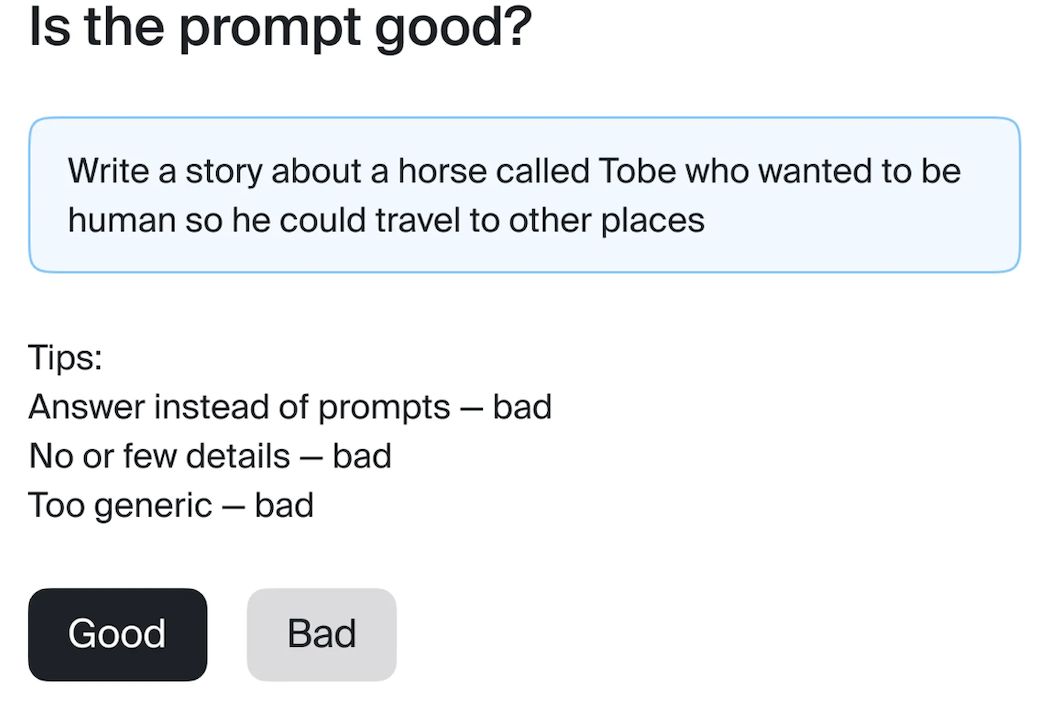

The generated prompts are then double-checked by a second line of annotators who ensure they meet the quality standards.

Prompt verification task performed by a different annotator

Once we have a set of quality prompts, we deploy domain experts and writers to create prompt completions. These professionals are part of a curated team of AI Tutors with advanced writing skills and specific areas of expertise (engineering, coding, ESG, law, medicine, etc.). Our AI Tutors complete special training and get continual feedback on their efforts to help them write prompt completions that are helpful, harmless, and truthful.

Prompt completion task given to trained AI Tutors

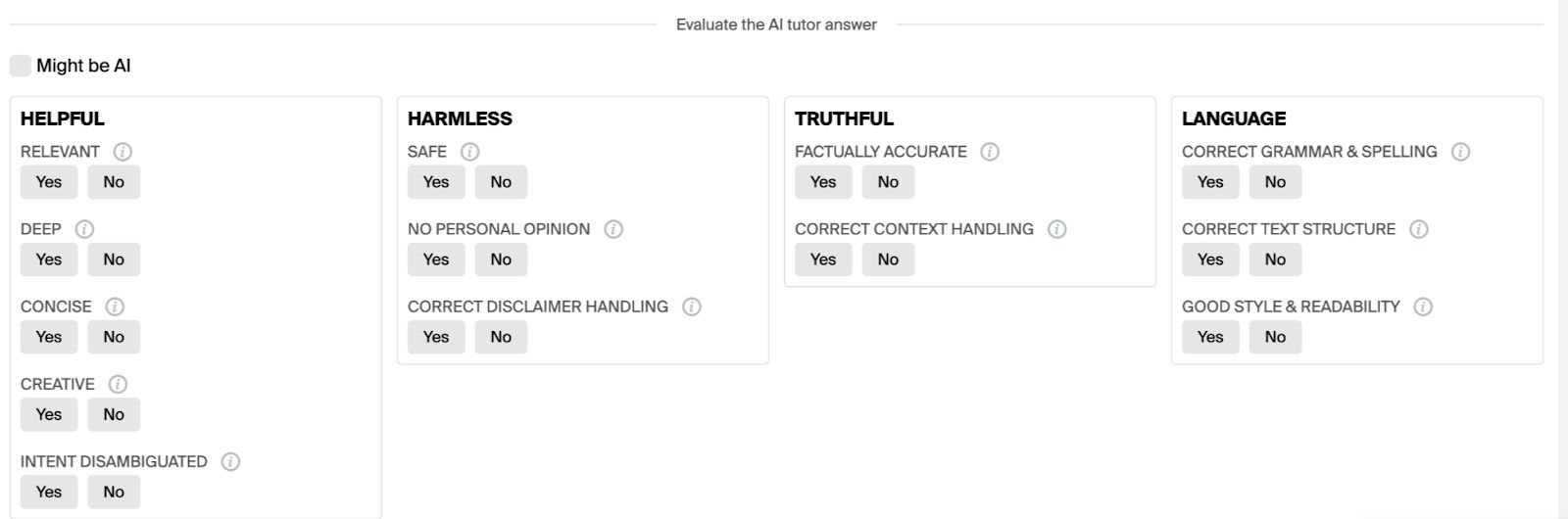

All of the prompt completions are then checked by a second line of reviewers who carefully examine each aspect of the

Prompt completion verification

The resulting dataset is aligned to human expectations and ready to feed to the model. This is a scalable approach that allows us to create ethical datasets for SFT.

Data for RLHF

After supervised fine-tuning is complete, the next step is to check the model’s output and see how well it matches human preferences. In RLHF, human annotators rank the LLM’s responses and this data is used to train a Reward Model (RM). But how are such rankings created in practice?

The most typical scenario involves taking a large set of prompts (50,000 in the case of InstructGPT) and comparing multiple versions of the model’s response for each prompt.

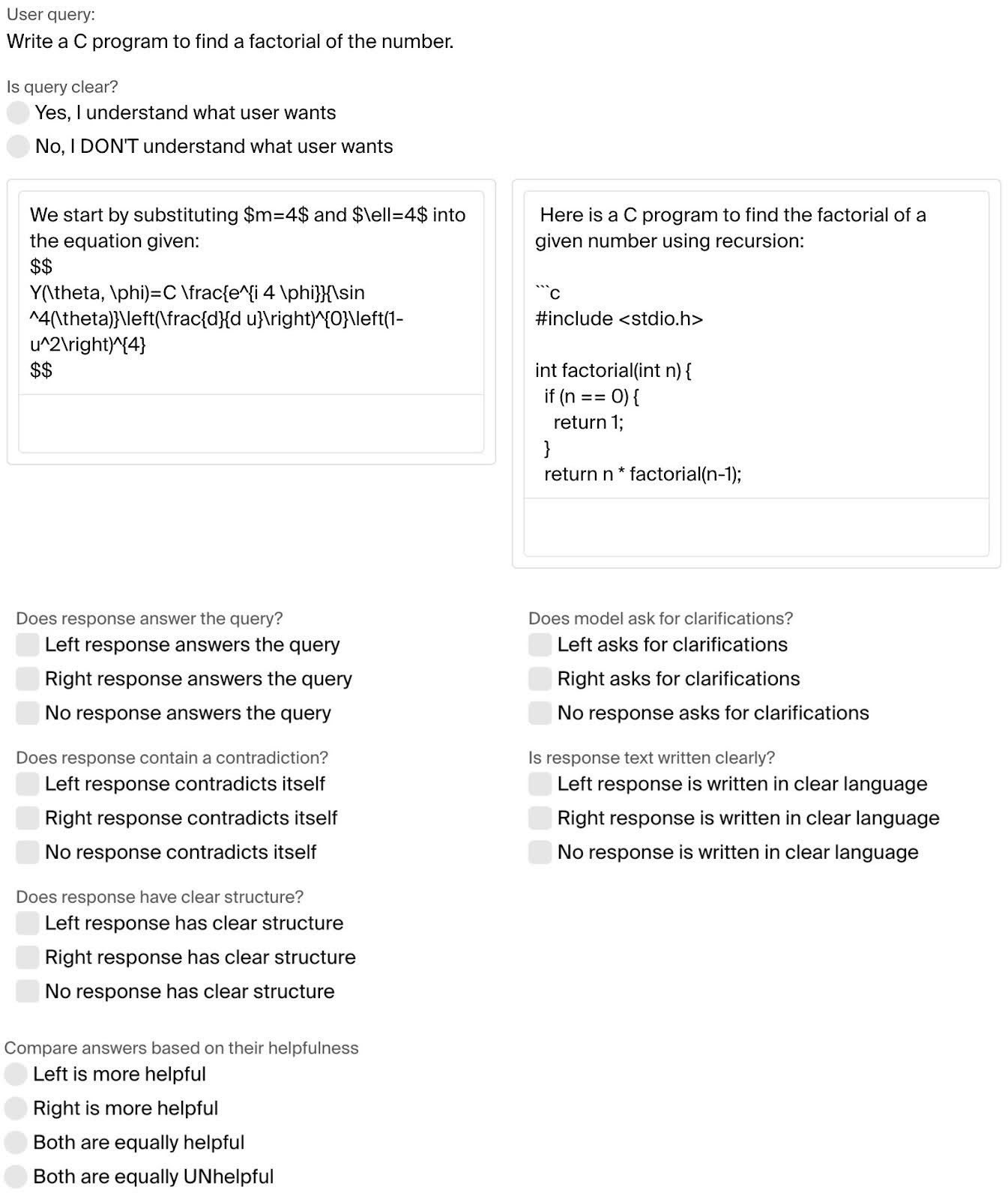

At Toloka, we give AI Tutors a simple interface with the prompt and a pair of responses, and ask them to rate which response is better. We carefully train our annotators to identify the better answer based on explicit criteria. For ambiguous cases, we ask annotators to prioritize the criterion that is most critical (usually harmlessness comes before helpfulness, but it depends on the LLM use case).
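The idea of prioritizing one criterion over another can be sketched as a lexicographic comparison: compare the two responses on the most critical criterion first, and move to the next one only if they are equal. The function name, the numeric rating scale, and the default priority order below are assumptions for illustration; as noted above, the right order depends on the use case.

```python
def pick_better(ratings_a, ratings_b,
                priority=("harmlessness", "helpfulness", "truthfulness")):
    """Return "A", "B", or "tie" by comparing criteria in priority order.

    ratings_a and ratings_b map each criterion name to a numeric score
    where higher is better (a hypothetical rating scale).
    """
    for criterion in priority:
        if ratings_a[criterion] != ratings_b[criterion]:
            return "A" if ratings_a[criterion] > ratings_b[criterion] else "B"
    return "tie"


response_a = {"harmlessness": 1, "helpfulness": 5, "truthfulness": 5}
response_b = {"harmlessness": 5, "helpfulness": 2, "truthfulness": 5}

# B wins because harmlessness is checked first, even though A is more helpful.
print(pick_better(response_a, response_b))
```

Passing a different `priority` tuple changes the outcome, which mirrors how the instructions given to annotators can differ from one LLM use case to another.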

Once all model responses are evaluated, relative rankings are created to train the RM algorithm.
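One common way to train a reward model from rankings, used in the InstructGPT paper among others, is to expand each ranking into pairs and penalize the model when it scores the rejected response above the preferred one. The sketch below shows that pairwise loss on plain numbers. The function names are ours, and the scores stand in for the output of a real reward model, so treat this as an illustration rather than a description of any specific pipeline.

```python
import math
from itertools import combinations


def ranking_to_pairs(ranked_responses):
    """Expand a best-to-worst ranking into (preferred, rejected) pairs."""
    return list(combinations(ranked_responses, 2))


def pairwise_loss(score_preferred, score_rejected):
    """Negative log-probability that the preferred response wins.

    Equals -log(sigmoid(score_preferred - score_rejected)).
    """
    margin = score_preferred - score_rejected
    return math.log1p(math.exp(-margin))


pairs = ranking_to_pairs(["best", "middle", "worst"])
print(pairs)

# The loss is small when the preferred response scores higher,
# and large when the reward model gets the order wrong.
print(pairwise_loss(2.0, 0.0))
print(pairwise_loss(0.0, 2.0))
```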

The side-by-side comparison described above can be further enhanced with more granular details if needed. For example, we can ask annotators to compare the responses separately on helpfulness, harmlessness, and truthfulness. Going even further, we can break down the criteria of harmlessness into subcategories and ask annotators to mark prompts with hate speech, bias, personal info, and so on. We can use this granular data to build custom rankings tailored for specific LLM needs.

Example of side-by-side comparison for RLHF with additional clarifying questions
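When several annotators compare the same pair of responses on each criterion separately, their votes need to be combined. A minimal sketch, assuming a simple majority vote per criterion and hypothetical label values "A", "B", and "tie":

```python
from collections import Counter

CRITERIA = ("helpfulness", "harmlessness", "truthfulness")


def aggregate_votes(annotations):
    """Return the majority verdict per criterion, or "tie" if no clear winner.

    annotations is a list of dicts, one per annotator, mapping each
    criterion to "A", "B", or "tie".
    """
    result = {}
    for criterion in CRITERIA:
        votes = Counter(a[criterion] for a in annotations).most_common()
        top_label, top_count = votes[0]
        # If the runner-up has the same count, there is no clear majority.
        if len(votes) > 1 and votes[1][1] == top_count:
            result[criterion] = "tie"
        else:
            result[criterion] = top_label
    return result


annotations = [
    {"helpfulness": "A", "harmlessness": "B", "truthfulness": "A"},
    {"helpfulness": "A", "harmlessness": "B", "truthfulness": "B"},
    {"helpfulness": "B", "harmlessness": "B", "truthfulness": "tie"},
]
print(aggregate_votes(annotations))
```

Majority voting is only one option. Weighting annotators by skill or resolving disagreements through expert review are alternatives that this sketch does not cover.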

LLM Evaluation

While many AI teams might stop at RLHF and consider their LLM aligned, model evaluation is a critical step that can’t be overlooked if you are serious about model quality.

Basic evaluation can be automated using curated datasets and can serve as the first checkpoint in LLM development, but most models need more than that. You need to know how your LLM responds to real-life queries, and for the highest quality results, we rely on the opinion of humans.

Human evaluation is similar to RLHF ranking. The standard practice involves side-by-side comparison where an annotator is given a prompt and two different completions, and has to decide which one is better. One of the completions is the output of the LLM being evaluated and the other is the output of a reference model that we are comparing it to (e.g., GPT-4).
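Side-by-side verdicts are often summarized as a win rate against the reference model. The sketch below assumes each verdict is one of the hypothetical labels "model", "reference", or "tie", and counts a tie as half a win, which is a common convention but not the only one.

```python
from collections import Counter


def win_rate(verdicts):
    """Share of comparisons won by the evaluated model (ties count as half)."""
    if not verdicts:
        raise ValueError("at least one verdict is required")
    counts = Counter(verdicts)
    return (counts["model"] + 0.5 * counts["tie"]) / len(verdicts)


verdicts = ["model", "model", "reference", "tie"]
print(win_rate(verdicts))
```

A win rate near 0.5 suggests the evaluated model is roughly on par with the reference on the prompts tested. The same calculation can be run separately for each criterion.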

We can evaluate all three criteria (truthfulness, helpfulness, and harmlessness) or any other aspect that is important for the particular model. After this last step, we have not only aligned the model across different metrics but also have supporting evidence of how it compares with other LLMs on the market.

In our experience, human evaluation is very effective at pinpointing areas for model improvement. Besides detailed reports and tailored metrics, our clients gain valuable insights and specific recommendations for how to tweak their model output.

Human data at scale for responsible AI

The quest for developing responsible and aligned Large Language Models rests not just on sophisticated training methodologies — it is grounded in curated data and continual human oversight.

Toloka helps you take the guesswork out of data collection for SFT, RLHF, and model evaluation. You can trust our experience and expertise to make human-generated data the deciding factor in developing ethical, high-performing LLMs.

We’re always looking for new problems to solve, so if you have an interesting LLM data challenge, we’d love to hear about it!