Model Context Protocol: How MCP bridges AI and the real world

AI applications can write code, draft copy, and plan projects — but they often can’t access the data or tools required to put those plans into action. Integrating models with live databases and services usually means fragile, custom connections that break as soon as something changes.

The Model Context Protocol, or MCP, proposes a fix: a universal way for AI agents to interface with real-world applications, so that they can act on requests rather than only respond to them.

The integration challenge in AI

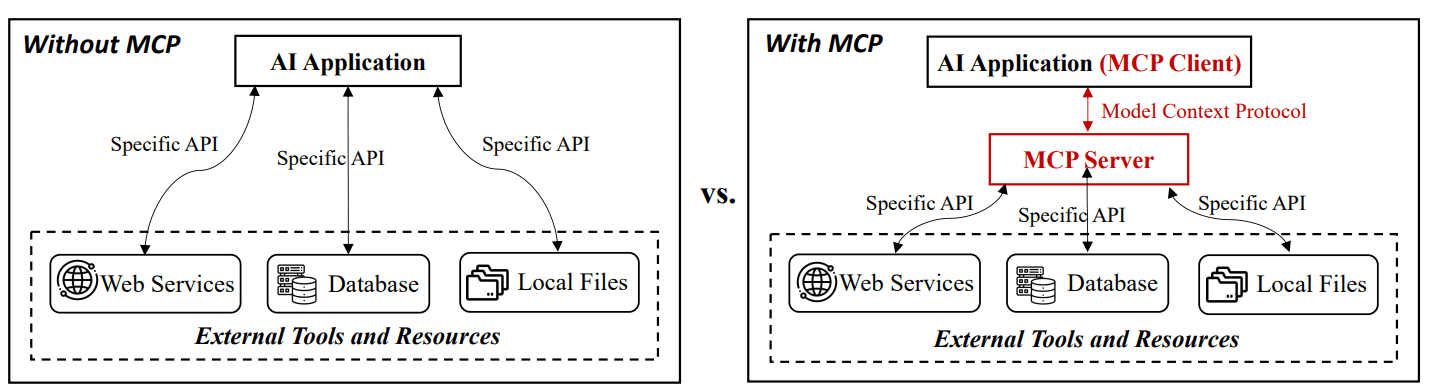

Connecting AI to business systems is rarely a smooth process. Every deployment needs something different — a database, a workspace, an API. Each connection must be built by hand and maintained whenever one side changes. This “N×M problem” — N models times M tools — quickly turns integration into a full-time job.
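To make the arithmetic behind the N×M problem concrete, here is a small sketch. It assumes the usual reading of the problem: without a shared protocol every model needs its own connector to every tool, while with one, each model and each tool needs a single adapter. The function name and the sample numbers are illustrative only.

```python
def connectors_needed(n_models, m_tools):
    """Return (point-to-point connectors, adapters with a shared protocol)."""
    point_to_point = n_models * m_tools   # every model wired to every tool
    shared_protocol = n_models + m_tools  # one adapter per model, one per tool
    return point_to_point, shared_protocol


for n, m in [(3, 5), (10, 40)]:
    direct, shared = connectors_needed(n, m)
    print(f"{n} models x {m} tools: {direct} custom connectors vs {shared} adapters")
```

The gap widens quickly: the first case needs 15 connectors versus 8 adapters, the second 400 versus 50.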

Even when AI applications work flawlessly in testing, most still can’t tap live dashboards or transaction logs once deployed. They generate insights but can’t execute them — trapped at the edge of the systems they’re meant to improve.

The Model Context Protocol (MCP) was designed to fix that by defining a common language between AI agents and external systems.

A schematic comparison showing direct tool integrations versus a unified MCP client–server interface. Source: Model Context Protocol (MCP): Landscape, Security Threats, and Future Research Directions

Background and rationale

Introduced by Anthropic in 2024, MCP addresses a growing systems problem: every model interacts with different tools in its own way. Companies built custom integrations to let AI access data or perform tasks — a pattern that couldn’t scale. MCP proposed a single, standardized way for AI to communicate with external resources.

How MCP works

MCP defines a shared communication layer between AI clients and servers. Servers host data sources or functions; clients discover and invoke them through one structured interface. This removes the need for one-off integrations and makes connections predictable across tools and environments.

As an open protocol, MCP enables developers to build integrations once and reuse them widely, transforming tool access from a bespoke engineering task into a core part of the platform layer itself.

Why MCP matters

With MCP, enterprise AI systems can expose standardized servers that AI agents call through a single interface. This eliminates redundant connectors, simplifies scaling, and makes deployment easier to manage.

MCP also brings operational data within reach. Instead of relying on static training data, AI applications can query internal systems, execute predefined functions, and trigger workflows safely within enterprise environments.

The USB-C for AI

A universal USB-C port replaced dozens of proprietary connectors, creating a single standardized way to connect hardware. MCP aims to do the same for software: instead of building a custom integration against each API or SDK, AI applications connect to tools and data sources through one standardized protocol layer.

This shift makes interoperability practical — enabling modular systems where new tools can plug in as easily as hardware connects through a port.

Inside the MCP architecture: The role of the MCP host

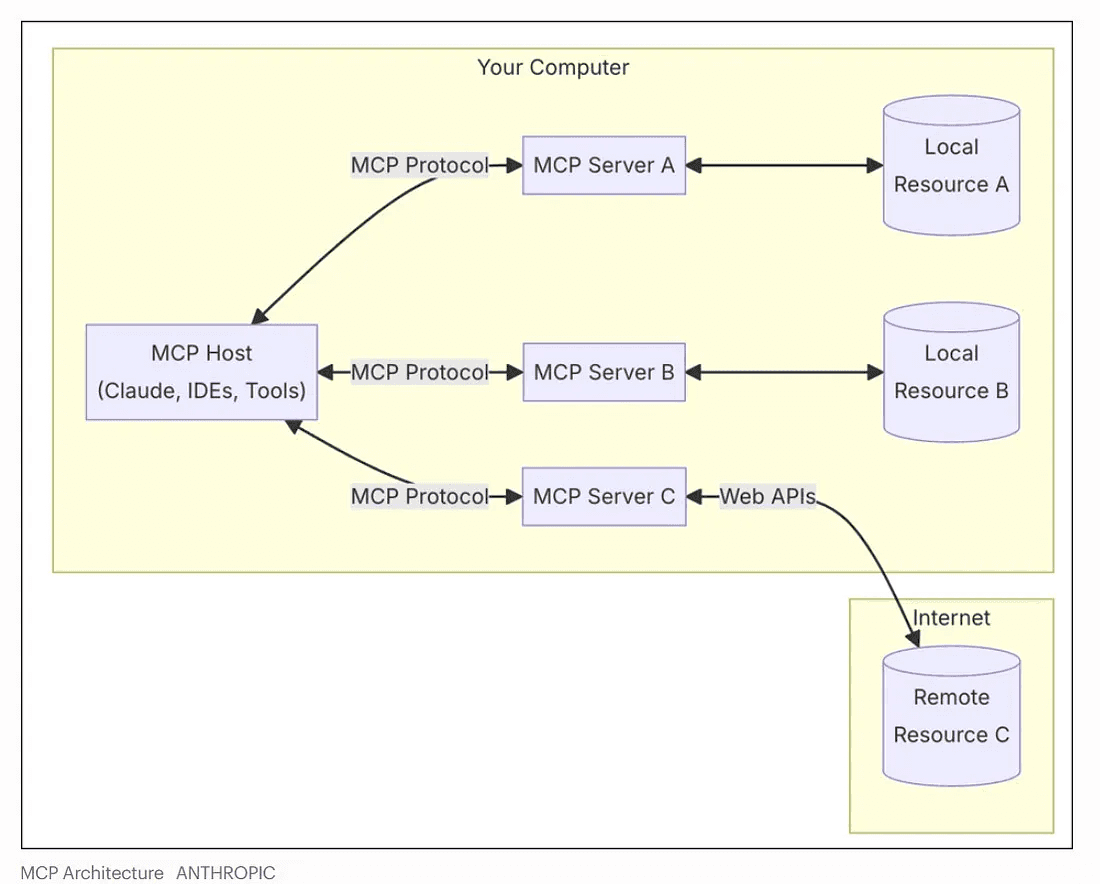

At the core of the Model Context Protocol (MCP) architecture is a simple principle: separate what an AI model knows from what it can do. The host — an environment such as Claude, an IDE, or another AI-driven application — orchestrates communication but doesn’t store business data or run external services.

Instead, it connects to MCP servers that link to local databases, external data sources, or remote APIs. The protocol defines how data and actions move between these components. Models stay focused on reasoning, while servers handle execution, which lets AI assistants operate safely and modularly across connected environments.

The MCP host communicates with multiple MCP servers through the MCP protocol, each exposing access to local or remote resources via a standardized interface. Source: Forbes, citing Anthropic Model Context Protocol documentation

This setup scales cleanly: one host can connect to many servers, and each server can serve multiple hosts or platforms that support MCP. Servers enforce access control and tool permissions for the resources they expose, while the host coordinates connections, which reduces risk and improves auditability.

Core elements: MCP client and MCP servers

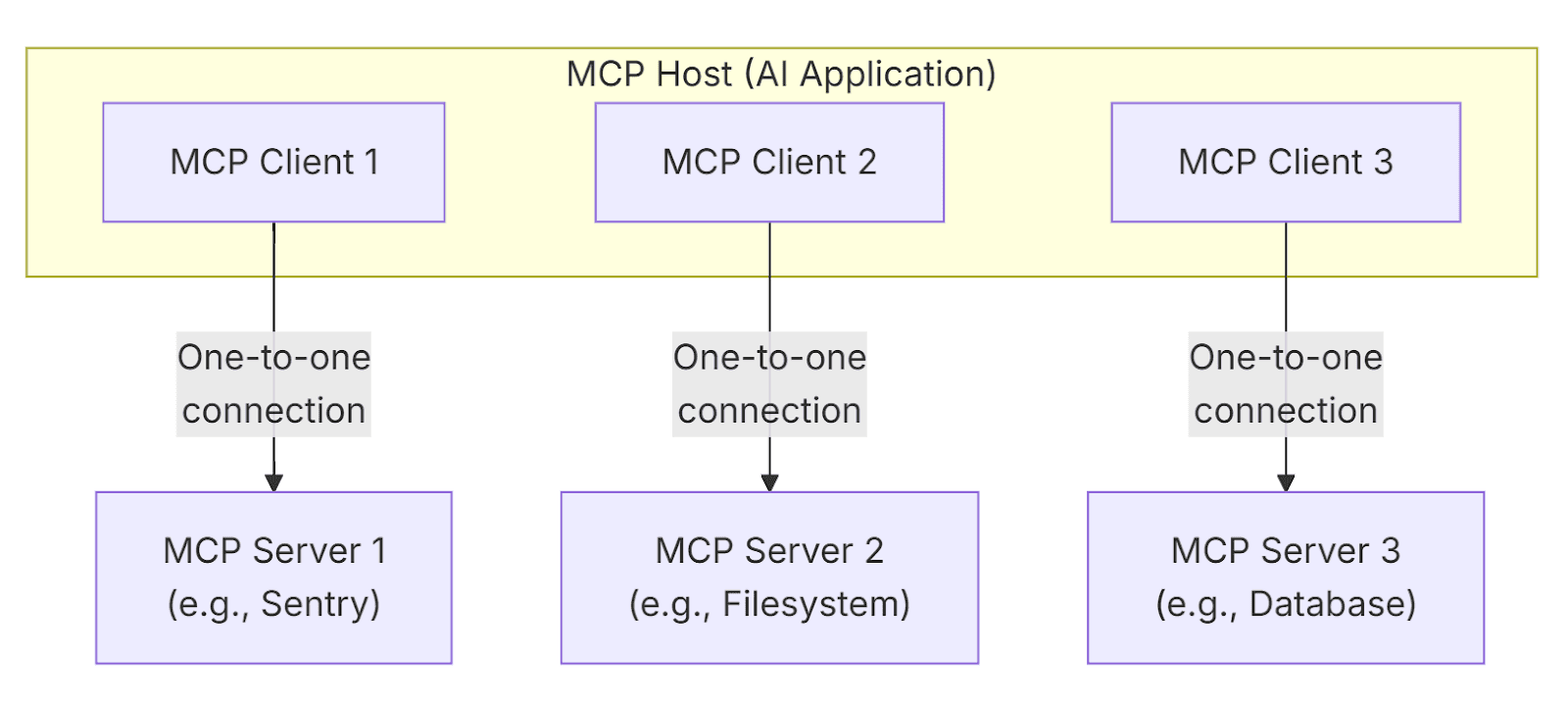

MCP defines two primary roles:

MCP client: the connector inside an AI application (the host) that maintains a dedicated connection to one server and sends requests on the application’s behalf.

MCP server: a program exposing data, tools, or functions that the client can call through the protocol.

Together they form a client–server architecture where the AI agent issues standardized requests and the server responds with structured results.

The MCP host manages multiple MCP clients, each maintaining a one-to-one connection with a corresponding MCP server. Source: Anthropic Model Context Protocol documentation
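The relationship in the figure, one host holding several clients that each talk to exactly one server, can be modeled in a few lines. This is a toy in-process sketch, not the MCP SDK: the `Host`, `Client`, and `Server` classes and the `read_file` and `list_events` tools are hypothetical names chosen for illustration, and real clients talk to servers over a transport rather than calling functions directly.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List


@dataclass
class Server:
    name: str
    tools: Dict[str, Callable[..., str]]  # tool name -> handler


@dataclass
class Client:
    server: Server  # each client is bound to exactly one server

    def list_tools(self) -> List[str]:
        return sorted(self.server.tools)

    def call_tool(self, tool: str, **arguments) -> str:
        return self.server.tools[tool](**arguments)


@dataclass
class Host:
    clients: Dict[str, Client] = field(default_factory=dict)

    def connect(self, server: Server) -> Client:
        # The host creates a new client for every server it connects to.
        client = Client(server)
        self.clients[server.name] = client
        return client

    def available_tools(self) -> Dict[str, List[str]]:
        return {name: client.list_tools() for name, client in self.clients.items()}


host = Host()
host.connect(Server("files", {"read_file": lambda path: f"contents of {path}"}))
host.connect(Server("calendar", {"list_events": lambda day: f"no events on {day}"}))

print(host.available_tools())
print(host.clients["files"].call_tool("read_file", path="notes.txt"))
```

Keeping one client per server means a failing or misbehaving server affects only its own connection.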

Discovery, function calling, and the MCP protocol

The MCP specification defines mechanisms for discovery, capability negotiation, and function invocation. Each server advertises its available functions and data sources, describing each function’s inputs with a structured schema. Clients introspect these capabilities and determine which to call, removing the need for hard-coded integrations.
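On the wire, discovery and invocation are JSON-RPC 2.0 messages. The sketch below builds a discovery request and a call request. The method names `tools/list` and `tools/call` and the `inputSchema` field follow the published specification; the `make_request` helper, the `query_table` tool, and its schema are hypothetical, and the response is written out by hand rather than received from a real server.

```python
import json
from itertools import count

_ids = count(1)  # JSON-RPC requests carry an id so responses can be matched


def make_request(method, params=None):
    """Build a JSON-RPC 2.0 request as a plain dict."""
    message = {"jsonrpc": "2.0", "id": next(_ids), "method": method}
    if params is not None:
        message["params"] = params
    return message


# 1. Discovery: ask the server which tools it offers.
list_request = make_request("tools/list")

# 2. A hand-written example of what a server might answer.
list_response = {
    "jsonrpc": "2.0",
    "id": list_request["id"],
    "result": {
        "tools": [
            {
                "name": "query_table",
                "description": "Return rows from a named table.",
                "inputSchema": {
                    "type": "object",
                    "properties": {"table": {"type": "string"}},
                    "required": ["table"],
                },
            }
        ]
    },
}

# 3. Invocation: call a discovered tool by name with arguments.
tool = list_response["result"]["tools"][0]
call_request = make_request(
    "tools/call", {"name": tool["name"], "arguments": {"table": "orders"}}
)

print(json.dumps(call_request, indent=2))
```

Because the tool name and argument schema come from the server's own answer, the client needs no tool-specific code compiled in.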

Example: How AI agents use MCP to act on external data

An AI assistant, or any AI app, might query a PostgreSQL database and post updates to Slack. Using MCP, the assistant discovers a database server, invokes a tool such as query_table(), receives structured data, and then calls post_message() on a Slack server to send the summary, all through a single protocol. (The tool names here are illustrative; each server defines its own.)
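The workflow above can be simulated end to end without a database or a Slack workspace. In this sketch, `FakeServer` is an in-memory stand-in that answers `tools/list` and `tools/call`; the sample rows, the `#ops` channel, and the helper names are all assumptions made for illustration, and nothing here touches PostgreSQL, Slack, or a real MCP transport.

```python
import json

ORDERS = [
    {"id": 1, "status": "shipped"},
    {"id": 2, "status": "pending"},
    {"id": 3, "status": "shipped"},
]
SENT = []  # messages "posted" by the fake chat server


def query_table(table):
    return json.dumps(ORDERS if table == "orders" else [])


def post_message(channel, text):
    SENT.append((channel, text))
    return "ok"


class FakeServer:
    """In-memory stand-in for an MCP server."""

    def __init__(self, name, tools):
        self.name = name
        self._tools = tools  # tool name -> handler

    def handle(self, method, params=None):
        if method == "tools/list":
            return {"tools": [{"name": name} for name in self._tools]}
        if method == "tools/call":
            text = self._tools[params["name"]](**params["arguments"])
            return {"content": [{"type": "text", "text": text}], "isError": False}
        raise ValueError(f"unsupported method: {method}")


def find_server(servers, tool_name):
    """Discover which server offers a tool instead of hard-coding it."""
    for server in servers:
        names = {tool["name"] for tool in server.handle("tools/list")["tools"]}
        if tool_name in names:
            return server
    raise LookupError(f"no server offers {tool_name}")


def run_workflow(servers):
    db = find_server(servers, "query_table")
    result = db.handle(
        "tools/call", {"name": "query_table", "arguments": {"table": "orders"}}
    )
    rows = json.loads(result["content"][0]["text"])
    shipped = sum(1 for row in rows if row["status"] == "shipped")
    summary = f"{shipped} of {len(rows)} orders shipped"

    chat = find_server(servers, "post_message")
    chat.handle(
        "tools/call",
        {"name": "post_message", "arguments": {"channel": "#ops", "text": summary}},
    )
    return summary


servers = [
    FakeServer("database", {"query_table": query_table}),
    FakeServer("chat", {"post_message": post_message}),
]
print(run_workflow(servers))
```

The same two request types drive both servers, which is the point of the example: the agent's code does not change when a new kind of system is added.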

Benefits of the MCP ecosystem

MCP streamlines integration across AI applications and enterprise ecosystems, reducing maintenance overhead and improving scalability.

It enables real-time context and grounding, giving models access to current, authoritative data sources rather than static snapshots, which can reduce hallucinations and increase trust.

Each MCP server is self-contained, promoting modularity and interoperability among AI tools. Together, these qualities provide a foundation for more capable, actionable AI agents that reason within the AI model and act safely across connected systems.

Use cases & early adoption

Originally developed by Anthropic, the Model Context Protocol (MCP) is now supported across a range of developer and enterprise tools. Popular MCP server implementations, such as the GitHub MCP server, connect data, communication, and automation systems through one protocol.

Early partners such as Replit, Sourcegraph, and Zed use MCP to standardize how their AI environments access and control external tools — making AI agents more capable and immediately actionable.

Example of a real-world MCP integration. Apify exposes its web automation and scraping tools through MCP servers, allowing AI agents to orchestrate data collection and reporting workflows through a standardized protocol. Source: How to use MCP with Apify Actors

In enterprise settings, MCP enables secure internal data access and task automation, connecting AI assistants and AI-powered tools across systems while reducing the need for bespoke integrations.

Challenges, risks, and limitations

While the Model Context Protocol (MCP) simplifies integration, it introduces new security, maintenance, and performance risks that organizations must address. A compromised agent could issue unsafe calls, so each server must enforce strict permissions and validation.
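One way a server can guard against unsafe calls is to check every argument against the tool's declared schema before executing anything. The sketch below is a deliberately small validator covering only required keys, unknown keys, three primitive types, and `enum`; it is not a full JSON Schema implementation, and the `query_table` schema is a hypothetical example.

```python
TYPE_MAP = {"string": str, "integer": int, "boolean": bool}

# Hypothetical schema for a query tool: only two tables may be read.
QUERY_TABLE_SCHEMA = {
    "type": "object",
    "properties": {
        "table": {"type": "string", "enum": ["orders", "customers"]},
        "limit": {"type": "integer"},
    },
    "required": ["table"],
}


def validate_arguments(schema, arguments):
    """Return a list of problems; an empty list means the call is accepted."""
    problems = []
    properties = schema.get("properties", {})

    for key in schema.get("required", []):
        if key not in arguments:
            problems.append(f"missing required argument: {key}")

    for key, value in arguments.items():
        if key not in properties:
            problems.append(f"unexpected argument: {key}")
            continue
        spec = properties[key]
        expected = TYPE_MAP[spec["type"]]
        # bool is a subclass of int in Python, so reject it for integer fields.
        is_bool_as_int = expected is int and isinstance(value, bool)
        if not isinstance(value, expected) or is_bool_as_int:
            problems.append(f"argument {key} must be of type {spec['type']}")
        elif "enum" in spec and value not in spec["enum"]:
            problems.append(f"argument {key} is not an allowed value")

    return problems


print(validate_arguments(QUERY_TABLE_SCHEMA, {"table": "orders", "limit": 10}))
print(validate_arguments(QUERY_TABLE_SCHEMA, {"table": "orders; DROP TABLE orders"}))
```

Rejecting unknown arguments and restricting values to an allow-list keeps a compromised or confused agent from smuggling input past the tool's intended surface.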

Adding more servers requires monitoring and regular updates. Poorly maintained endpoints can expose vulnerabilities or disrupt workflows.

Latency can arise when agents depend on multiple servers for context or execution. Efficient caching and asynchronous design help maintain responsiveness.
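A minimal sketch of both ideas, using only the standard library: requests to several servers run concurrently with `asyncio.gather`, and a simple dictionary caches results so repeated lookups skip the round trip. The server names and delays are made up, `asyncio.sleep` stands in for network latency, and the cache has no expiry, which a real deployment would need.

```python
import asyncio

CALLS = []   # records each simulated round trip, for illustration
_cache = {}  # naive in-memory cache with no expiry


async def fetch_context(server, delay):
    """Stand-in for a slow request to one server."""
    CALLS.append(server)
    await asyncio.sleep(delay)
    return f"context from {server}"


async def cached_fetch(server, delay):
    if server not in _cache:
        _cache[server] = await fetch_context(server, delay)
    return _cache[server]


async def gather_context(servers):
    # gather runs the requests concurrently and returns results in input order.
    return await asyncio.gather(
        *(cached_fetch(name, delay) for name, delay in servers.items())
    )


SERVERS = {"database": 0.05, "chat": 0.05, "files": 0.05}

first = asyncio.run(gather_context(SERVERS))   # three simulated round trips
second = asyncio.run(gather_context(SERVERS))  # answered from the cache

print(first)
print(len(CALLS))
```

With concurrent requests the total wait is roughly that of the slowest server rather than the sum of all of them, and the second pass makes no new calls at all.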

Version mismatches or inconsistent schemas may cause failed calls. In addition, many legacy systems can’t easily be wrapped as MCP servers and require hybrid solutions.
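Version mismatches are easier to handle when they are detected at connection time rather than on the first failed call. The sketch below follows the negotiation rule as the specification describes it: the client requests a version, the server echoes it if supported or otherwise offers one it does support, and the client disconnects if it cannot use the offer. The function names are hypothetical; the date-style version strings match the format MCP revisions use.

```python
def negotiate_version(requested, server_supported):
    """Server side: echo the requested version if supported, else offer the latest."""
    if requested in server_supported:
        return requested
    # Versions are YYYY-MM-DD strings, so max() picks the most recent one.
    return max(server_supported)


def client_accepts(offered, client_supported):
    """Client side: continue only if the offered version is one it can speak."""
    return offered in client_supported


server_versions = ["2024-11-05", "2025-03-26"]
client_versions = ["2025-03-26", "2025-06-18"]

offer = negotiate_version("2025-06-18", server_versions)
print(offer, client_accepts(offer, client_versions))
```

Here the server cannot speak the requested revision, offers its latest instead, and the client accepts because that revision is on its own list.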

Evaluating MCP with Toloka

Reliable MCP integrations require rigorous evaluation. To ensure that AI agents interact with tools safely and effectively, testing must cover diverse, real-world tasks and include high-quality human feedback.

Toloka enables this through scalable, human-in-the-loop evaluation pipelines that measure how well AI agents perform when using MCP servers. Through its agent data platform, developers can assess:

Understanding of tool instructions — how accurately the agent interprets function descriptions and capabilities.

Action correctness and efficiency — whether calls to MCP servers are properly formatted, safe, and yield expected results.

Robustness — how consistently the agent performs under varied prompts, contexts, or edge cases.

Scenario-based evaluation verifies that MCP-enabled AI assistants retrieve correct data, execute commands safely, and handle errors gracefully. Toloka also provides domain-specific feedback, enabling realistic benchmarking of model behavior before deployment.
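To give a feel for how such criteria could be turned into numbers, here is a small scoring sketch. It is not Toloka's platform or its metrics: the `ToolCall` type, the `score_trace` and `pass_rate` functions, and the scenario are assumptions made for illustration. Correctness is the share of expected calls the agent made with matching arguments, extra calls are a rough efficiency signal, and the pass rate across several runs of one scenario stands in for robustness. Call order is not checked.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ToolCall:
    name: str
    arguments: tuple  # sorted (key, value) pairs so calls compare by value


def call(name, **arguments):
    return ToolCall(name, tuple(sorted(arguments.items())))


def score_trace(expected, actual):
    """Compare one recorded run against the calls the scenario expects."""
    matched = sum(1 for c in expected if c in actual)
    return {
        "correctness": matched / len(expected),
        "extra_calls": max(0, len(actual) - len(expected)),
    }


def pass_rate(expected, traces):
    """Share of runs in which every expected call was made."""
    passed = sum(
        1 for trace in traces if score_trace(expected, trace)["correctness"] == 1.0
    )
    return passed / len(traces)


EXPECTED = [call("query_table", table="orders"), call("post_message", channel="#ops")]

TRACES = [
    list(EXPECTED),                                    # clean run
    EXPECTED + [call("query_table", table="orders")],  # correct but redundant
    [call("query_table", table="customers"), call("post_message", channel="#ops")],
    [call("query_table", table="orders")],             # never posted the result
]

for trace in TRACES:
    print(score_trace(EXPECTED, trace))
print(pass_rate(EXPECTED, TRACES))
```

Automated scores like these catch formatting and coverage errors; judging whether a call was safe or appropriate in context is where human review comes in.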

Best practices and recommendations

Implement least-privilege access control so AI agents perform only necessary actions.

Regularly audit MCP servers and outputs to detect unsafe calls or data leaks early.

Use static analysis and safety-scanning tools to identify vulnerabilities before deployment.

Adopt incremental deployment — start with read-only access and expand as reliability improves.

Contribute to the open MCP ecosystem, sharing best practices to strengthen security and interoperability.

The future of MCP: Building connected, responsible AI

The Model Context Protocol (MCP) is emerging as a foundation for AI interoperability, making systems more connected and capable while bridging reasoning with real-world action. Its success depends on balancing rapid innovation with responsible design — ensuring every integration is secure, auditable, and human-aligned.

As new AI models and LLM applications continue to evolve, the entire community — from research teams to enterprise developers — has a role in advancing MCP as a shared infrastructure for standardizing context and delivering more relevant responses from connected systems.

Future research directions include improving security threat detection, refining schema negotiation, and extending the protocol to AI-powered IDEs and other tools that depend on consistent MCP implementations.

Developers and organizations are encouraged to experiment, contribute, and evaluate MCP implementations responsibly — shaping an open, interoperable future where AI tools can think, act, and collaborate safely across systems and development environments.
