Self-driving car technology: a case study

The Yandex Self-Driving Group has been working on cutting-edge technologies for self-driving cars since 2017. But how does Toloka help keep them in the race to develop autonomous vehicles? In this post we'll talk about how the vehicles learn to see the world around them — what kind of data is collected, how it is processed, what algorithms are used, and the role Toloka plays.

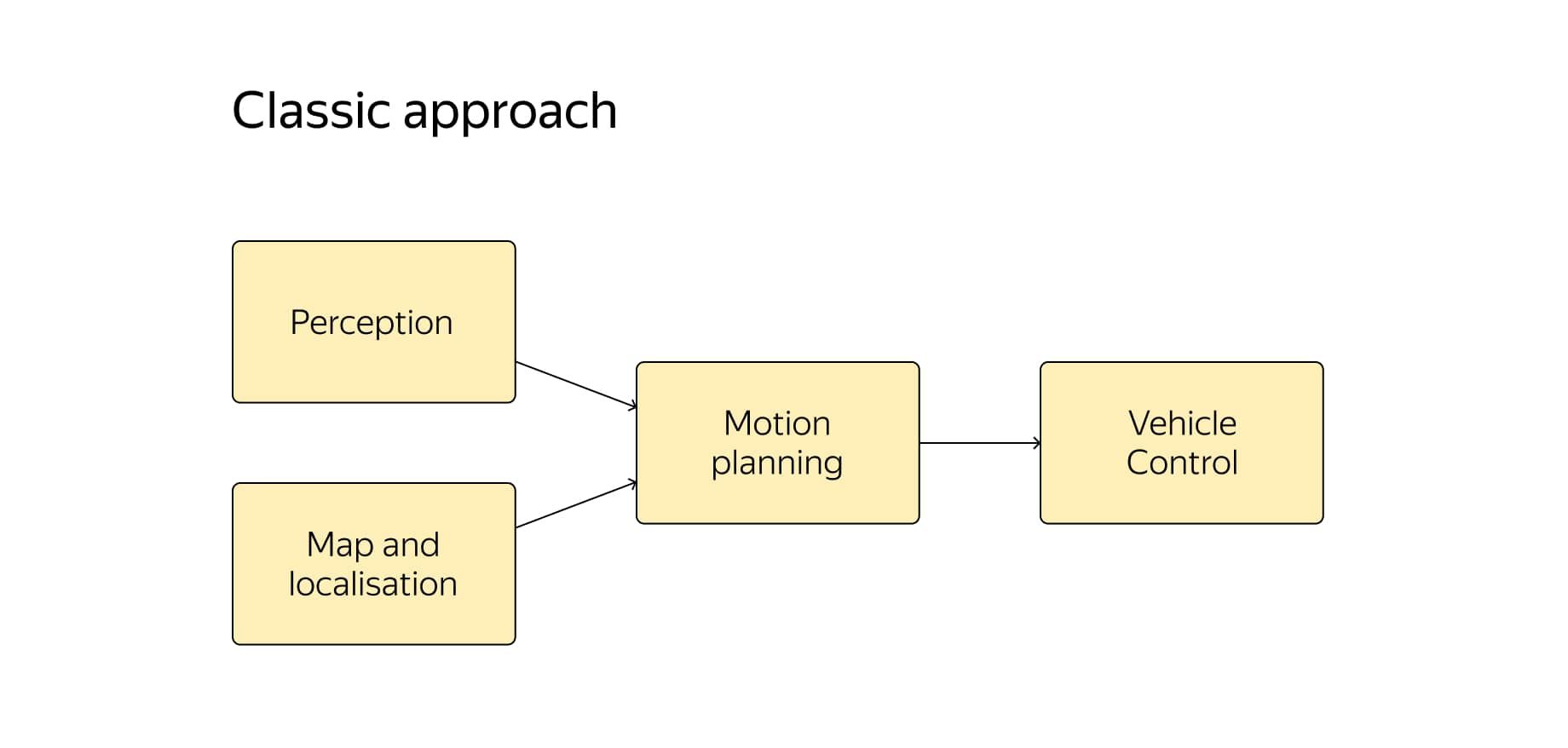

Here's a diagram showing what's "under the hood" of self-driving cars:

Classic approach

First there's the perception component, which is responsible for detecting what's around the car. Then there are maps and the localization component, which determines exactly where the vehicle is located in the world. Information from these two components is fed into the motion planning component, which decides where to go and what path to take based on current conditions. The motion planning component then passes the path to the vehicle control component, which sets the steering angle needed to follow the path and compensates for the physics of the vehicle. Vehicle control is mostly about physics.
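To make the data flow between these components concrete, here is a minimal Python sketch of one control cycle. All names (`perception`, `localization`, `motion_planning`, `vehicle_control`, `drive_step`) and the toy logic inside them are hypothetical assumptions for illustration, not Yandex's implementation. Each stage only shows what it consumes and what it produces.

```python
import math
from dataclasses import dataclass


@dataclass
class Obstacle:
    x: float  # meters ahead of the car
    y: float  # meters to the left of the car


@dataclass
class Pose:
    x: float
    y: float
    heading: float  # radians


def perception(frame: dict) -> list:
    """Detect objects around the car (toy version: obstacles arrive pre-detected)."""
    return [Obstacle(x, y) for x, y in frame["obstacles"]]


def localization(frame: dict) -> Pose:
    """Estimate where the car is (toy version: trust a single position fix)."""
    return Pose(*frame["position_fix"])


def motion_planning(obstacles: list, pose: Pose) -> list:
    """Return waypoints (x, y) in the car frame; swerve left if the lane ahead is blocked.

    A real planner would use the pose to look up the map; this toy ignores it.
    """
    blocked = any(0.0 < o.x < 10.0 and abs(o.y) < 1.5 for o in obstacles)
    lateral = 2.0 if blocked else 0.0
    return [(d, lateral) for d in (5.0, 10.0, 15.0)]


def vehicle_control(waypoints: list) -> float:
    """Steering angle (radians) that points the car at the first waypoint."""
    x, y = waypoints[0]
    return math.atan2(y, x)


def drive_step(frame: dict) -> float:
    """One cycle: perception + localization -> planning -> control."""
    obstacles = perception(frame)
    pose = localization(frame)
    path = motion_planning(obstacles, pose)
    return vehicle_control(path)
```

Keeping each stage behind a narrow interface like this makes it possible to swap out one component, say a better detector, without touching the others.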

In this post, we'll focus on the perception component and related data analysis. The other components are also extremely important, but the better a car is able to recognize the world around it, the easier it is to do the rest.

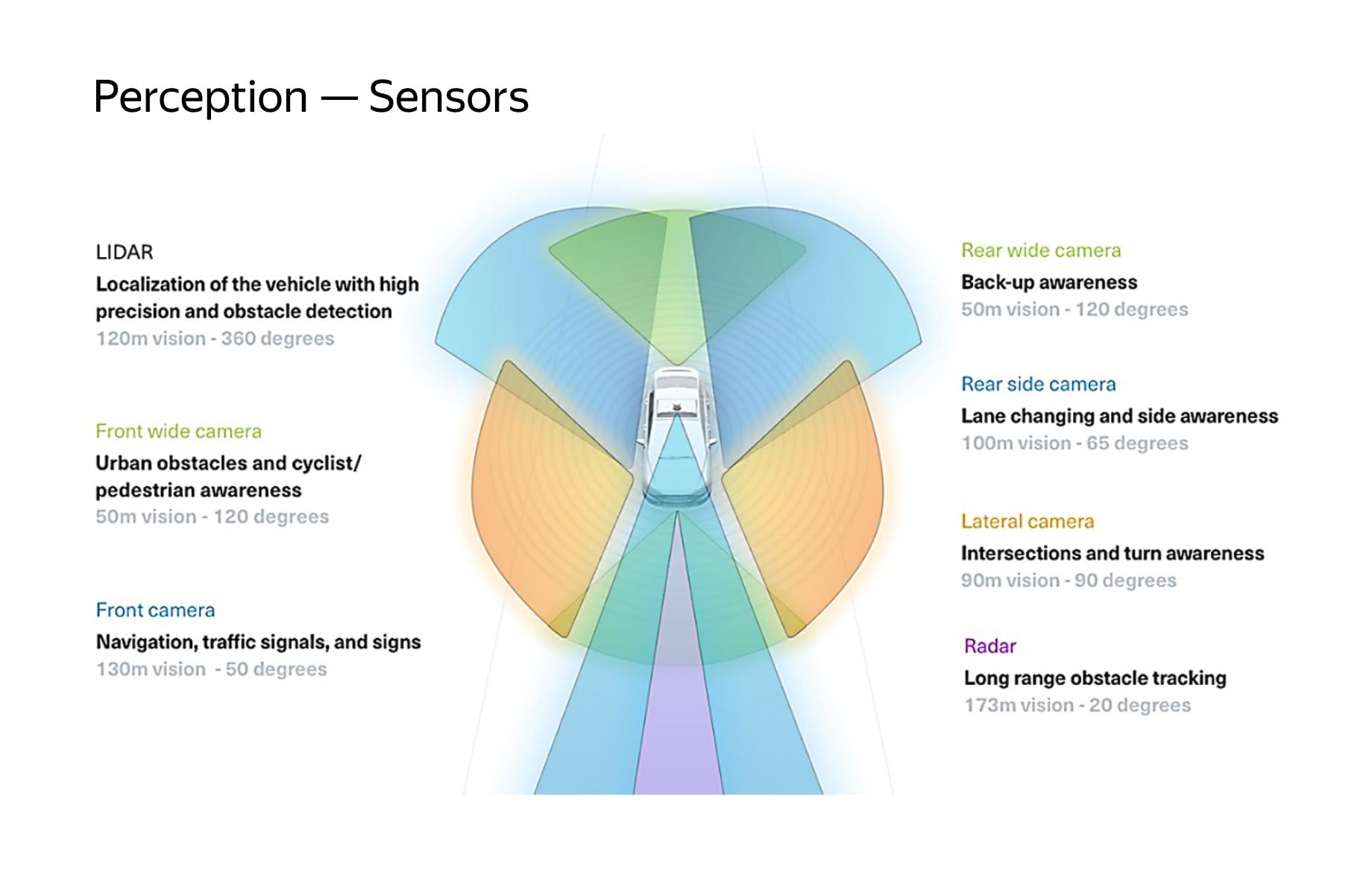

So how does the perception component work? First, we need to understand what data it receives. There are many sensors built into the car, but the most widely used ones are cameras, radars, and lidars.

Perception - Sensors

A radar is a production sensor that is widely used in adaptive cruise control systems. It determines an object's position from its distance and angle relative to the car. This sensor works very well on metal objects, like cars, but not so well on pedestrians. A distinctive feature of radar is that it measures both position and speed: the Doppler effect is used to determine radial velocity. Cameras, in turn, provide standard video input.
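The Doppler relationship behind the radial-velocity measurement is simple: for a radar that transmits and receives from the same location, the measured frequency shift is `f_d = 2 * v_r / λ`, where `λ` is the carrier wavelength. The sketch below assumes a 77 GHz carrier, a common automotive radar band. The function names are illustrative, and a real radar estimates the shift through signal processing rather than receiving it as a ready-made number.

```python
SPEED_OF_LIGHT = 299_792_458.0  # m/s


def doppler_shift(radial_velocity_mps: float, carrier_hz: float = 77e9) -> float:
    """Frequency shift (Hz) produced by a target moving at the given radial velocity.

    Positive velocity means the target is approaching the radar.
    The 77 GHz default carrier is an assumption.
    """
    wavelength = SPEED_OF_LIGHT / carrier_hz
    return 2.0 * radial_velocity_mps / wavelength


def radial_velocity(doppler_shift_hz: float, carrier_hz: float = 77e9) -> float:
    """Invert the relationship: radial velocity (m/s) from a measured shift (Hz)."""
    wavelength = SPEED_OF_LIGHT / carrier_hz
    return doppler_shift_hz * wavelength / 2.0
```

At 77 GHz, a target closing at 10 m/s produces a shift of roughly 5.1 kHz. Note that only the radial component of velocity is observable this way; motion across the radar's line of sight produces no shift.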

Cameras

Lidar

Lidar is more interesting. If you've ever renovated a home, you might be familiar with laser rangefinders that measure the distance to a wall. Inside such a rangefinder is a timer that measures how long it takes light to travel to the wall and back, and this time is used to calculate the distance. The actual physics is more complex, but the point is that a lidar contains many laser rangefinders stacked vertically. As the lidar spins, the rangefinders scan the space around it.
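The rangefinder principle reduces to one formula, `distance = c * t / 2`, because the light covers the distance twice. A spinning lidar then turns each range reading into a 3D point using the known elevation angle of that rangefinder and the current rotation (azimuth) angle. A minimal Python sketch, assuming an idealized sensor with no timing offsets or calibration corrections; the function names are hypothetical:

```python
import math

SPEED_OF_LIGHT = 299_792_458.0  # m/s


def time_of_flight_to_range(round_trip_s: float) -> float:
    """Distance to the target: light travels there and back, so halve the path."""
    return SPEED_OF_LIGHT * round_trip_s / 2.0


def to_cartesian(range_m: float, azimuth_rad: float, elevation_rad: float) -> tuple:
    """Convert one range reading to (x, y, z) in the sensor frame."""
    horizontal = range_m * math.cos(elevation_rad)
    return (
        horizontal * math.cos(azimuth_rad),
        horizontal * math.sin(azimuth_rad),
        range_m * math.sin(elevation_rad),
    )


def fire_column(round_trip_times: list, elevations_rad: list, azimuth_rad: float) -> list:
    """One firing of the vertical stack of rangefinders at the current rotation angle."""
    return [
        to_cartesian(time_of_flight_to_range(t), azimuth_rad, elevation)
        for t, elevation in zip(round_trip_times, elevations_rad)
    ]
```

Calling `fire_column` for every azimuth step of one full rotation yields the point cloud for that sweep.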

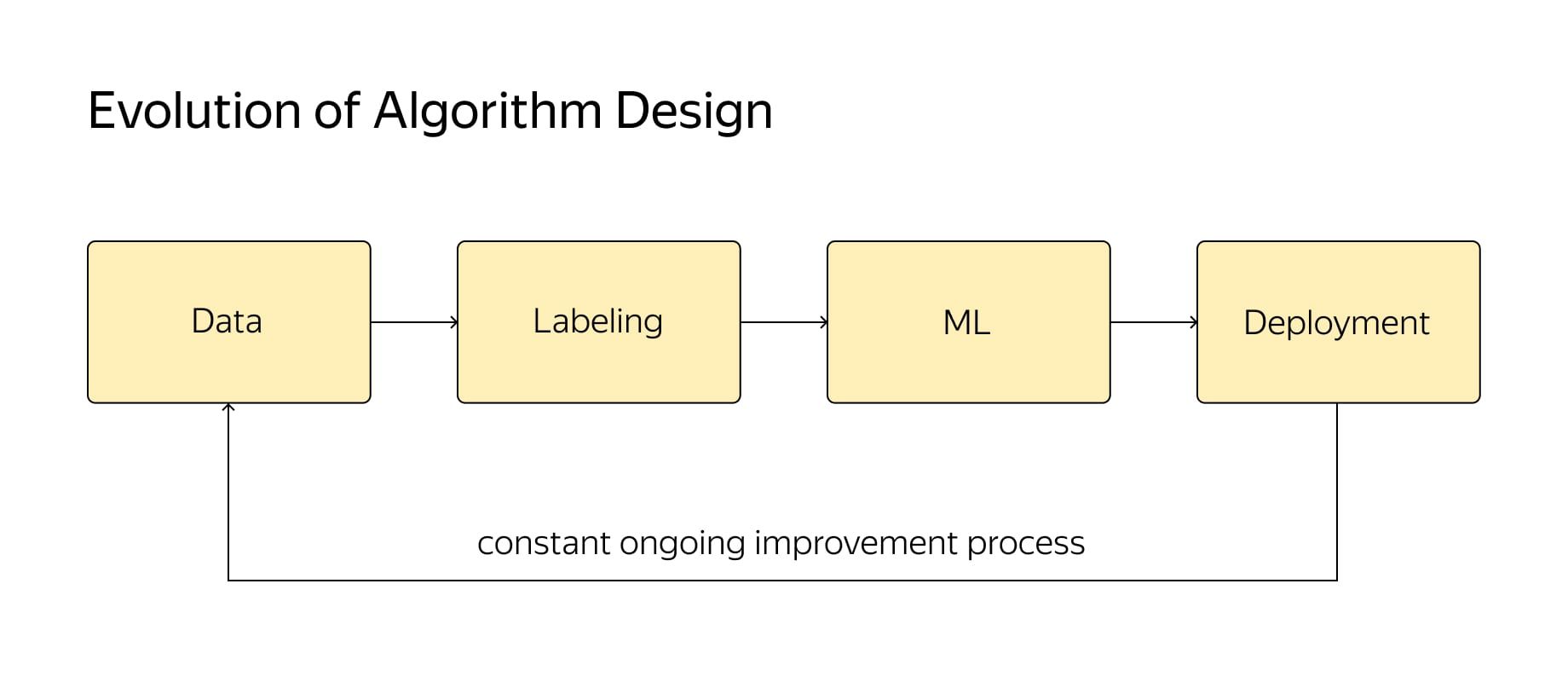

Evolution of Algorithm Design

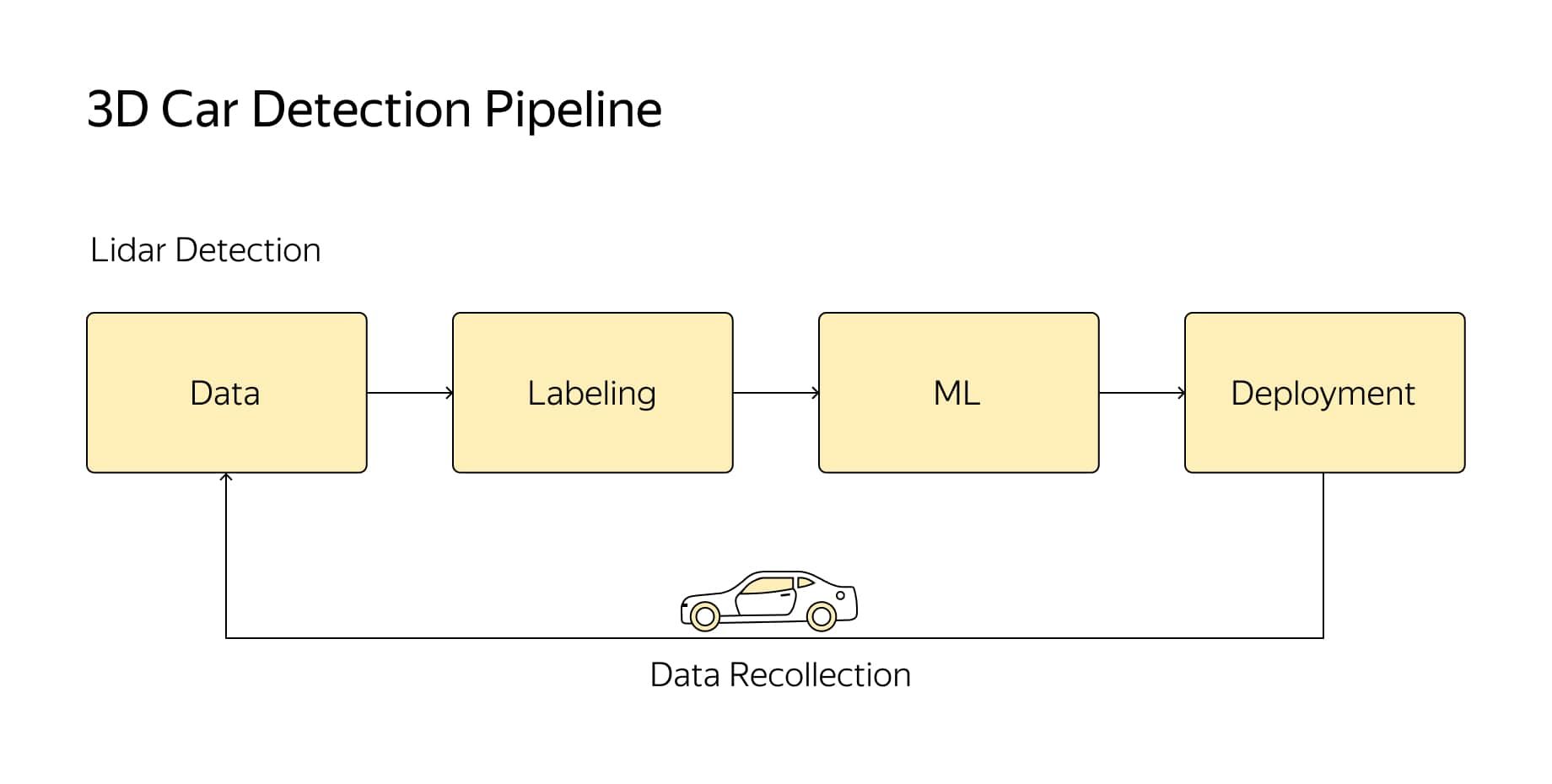

The vehicle has multiple sensors, and each of them generates data. This paves the way to a classic pipeline for training machine learning algorithms.

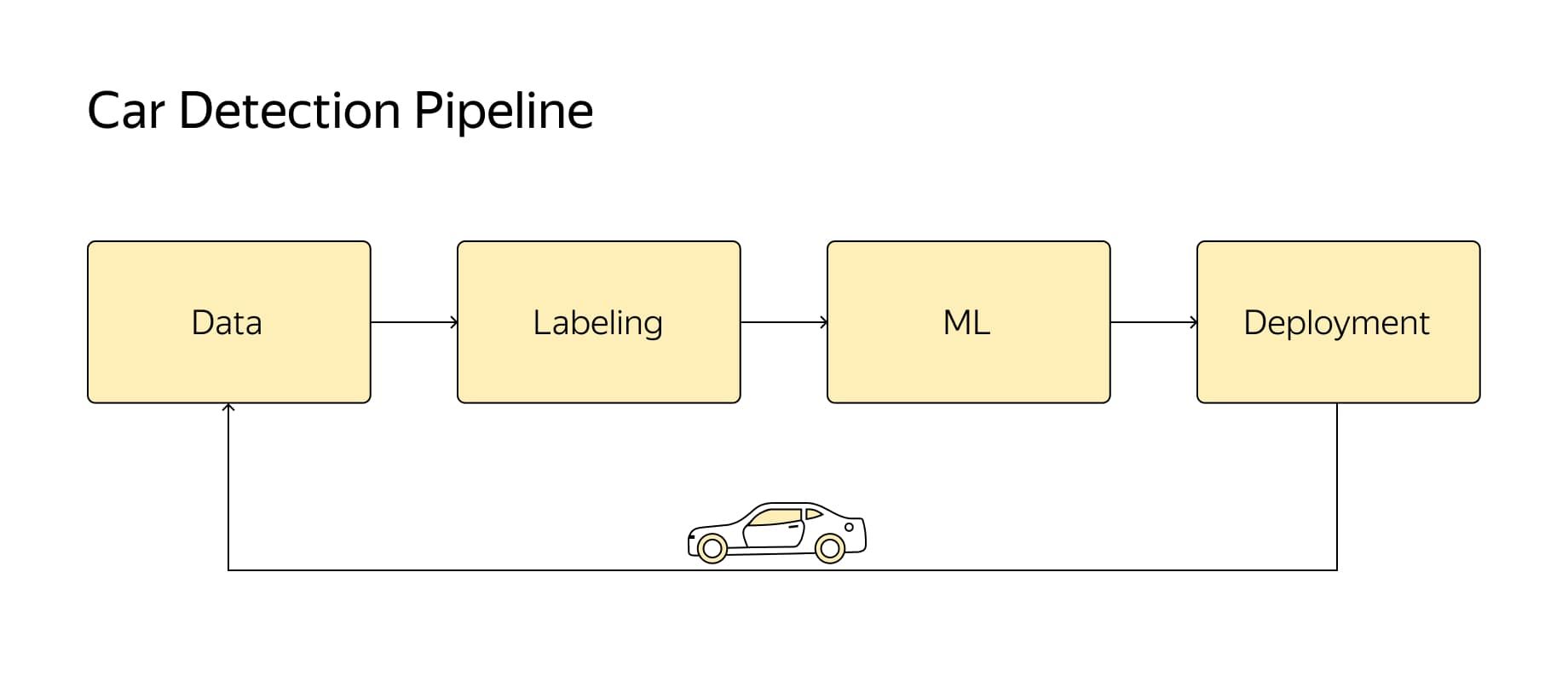

Car Detection Pipeline

Data must be collected from vehicles, uploaded to the cloud, and sent out for labeling. The labeled data is used to train the model. Once the model is deployed on the fleet, more data is collected, and the process repeats over and over. The results should reach the cars as quickly as possible, so every step of this loop needs to be fast.
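The collect, label, train, deploy loop can be sketched as follows. Every function here is a hypothetical stand-in (driving, crowd labeling, and training are reduced to placeholders), so only the structure of the loop remains: new data is labeled, added to a growing dataset, and each retrained model goes back to the fleet.

```python
from dataclasses import dataclass, field


@dataclass
class DataLoop:
    model_version: int = 0
    labeled_dataset: list = field(default_factory=list)


def collect_frames(loop: DataLoop, n: int) -> list:
    """Stand-in for driving the fleet and uploading sensor logs to the cloud."""
    return [f"frame-model{loop.model_version}-{i}" for i in range(n)]


def send_for_labeling(frames: list) -> list:
    """Stand-in for crowd labeling: pairs each frame with a placeholder label."""
    return [(frame, "bounding boxes") for frame in frames]


def train_model(dataset: list) -> dict:
    """Stand-in for training; just records how much labeled data was used."""
    return {"trained_on": len(dataset)}


def run_iteration(loop: DataLoop, frames_per_iteration: int = 100) -> dict:
    frames = collect_frames(loop, frames_per_iteration)     # 1. collect and upload
    loop.labeled_dataset.extend(send_for_labeling(frames))  # 2. label
    model = train_model(loop.labeled_dataset)               # 3. train on everything so far
    loop.model_version += 1                                 # 4. deploy to the fleet
    return model
```

In this framing, the speed of the whole system is bounded by its slowest step, which is why labeling throughput matters as much as training.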

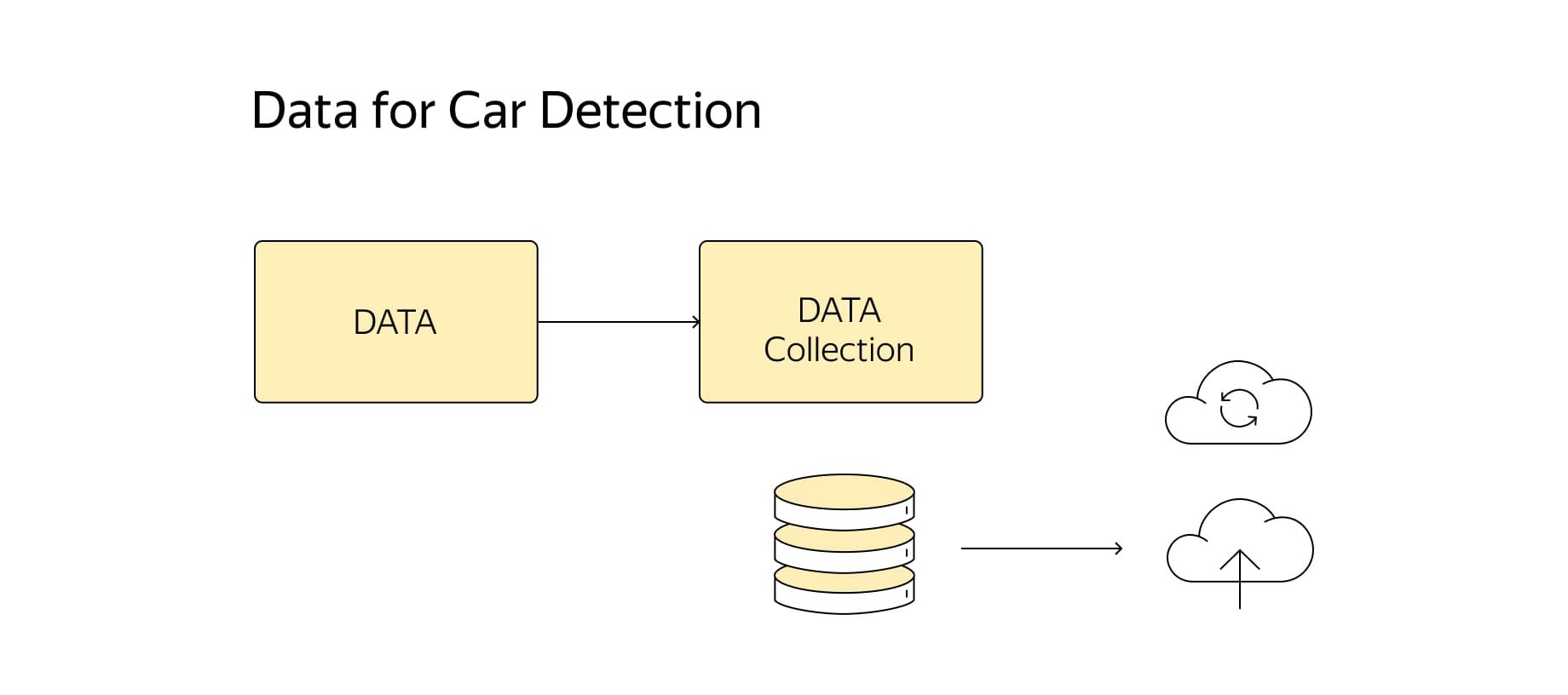

Data for Car Detection

Once the data is collected and uploaded to the cloud, speed is of the essence in data labeling.

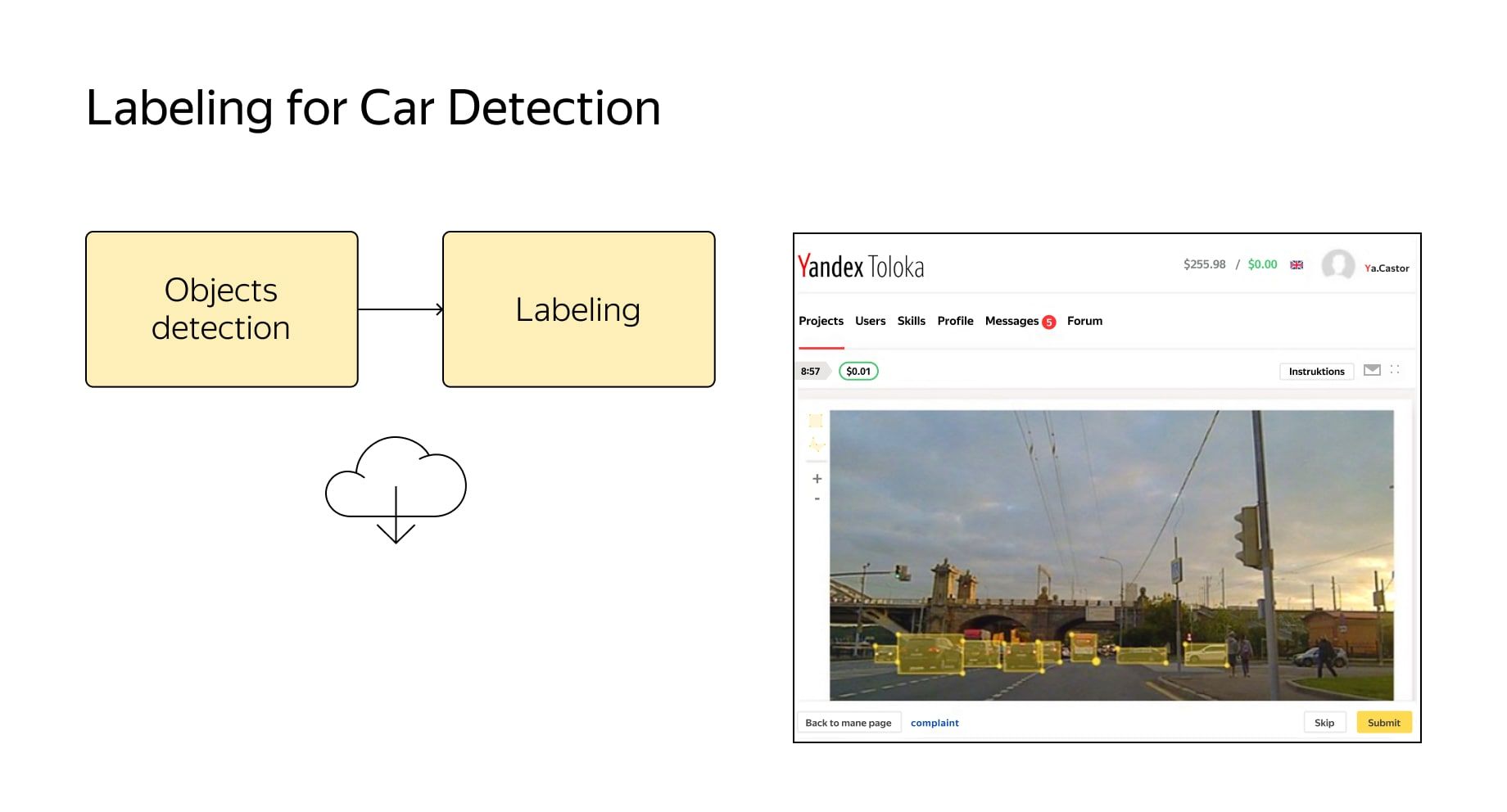

Labeling for Car Detection

The Yandex development team turns to Toloka to prepare the training data. Tolokers can label large datasets quickly and cheaply. For vehicle detectors, tolokers are given a simple web interface in which they draw bounding boxes around objects in images, which is fast and easy.
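Because crowd labels vary from person to person, one common way to check whether two tolokers drew the same box is intersection over union (IoU): the overlap area divided by the combined area. This is an assumed quality-control technique, not necessarily the one used in this project, and the 0.7 agreement threshold is an arbitrary illustrative value.

```python
def iou(a: tuple, b: tuple) -> float:
    """Intersection over union of two boxes given as (x_min, y_min, x_max, y_max)."""
    inter_w = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    inter_h = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    intersection = inter_w * inter_h
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - intersection
    return intersection / union if union > 0 else 0.0


def boxes_agree(a: tuple, b: tuple, threshold: float = 0.7) -> bool:
    """Hypothetical agreement rule: two annotators match if their boxes overlap enough."""
    return iou(a, b) >= threshold
```

Boxes that disagree could then be sent to additional annotators instead of going straight into the training set.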

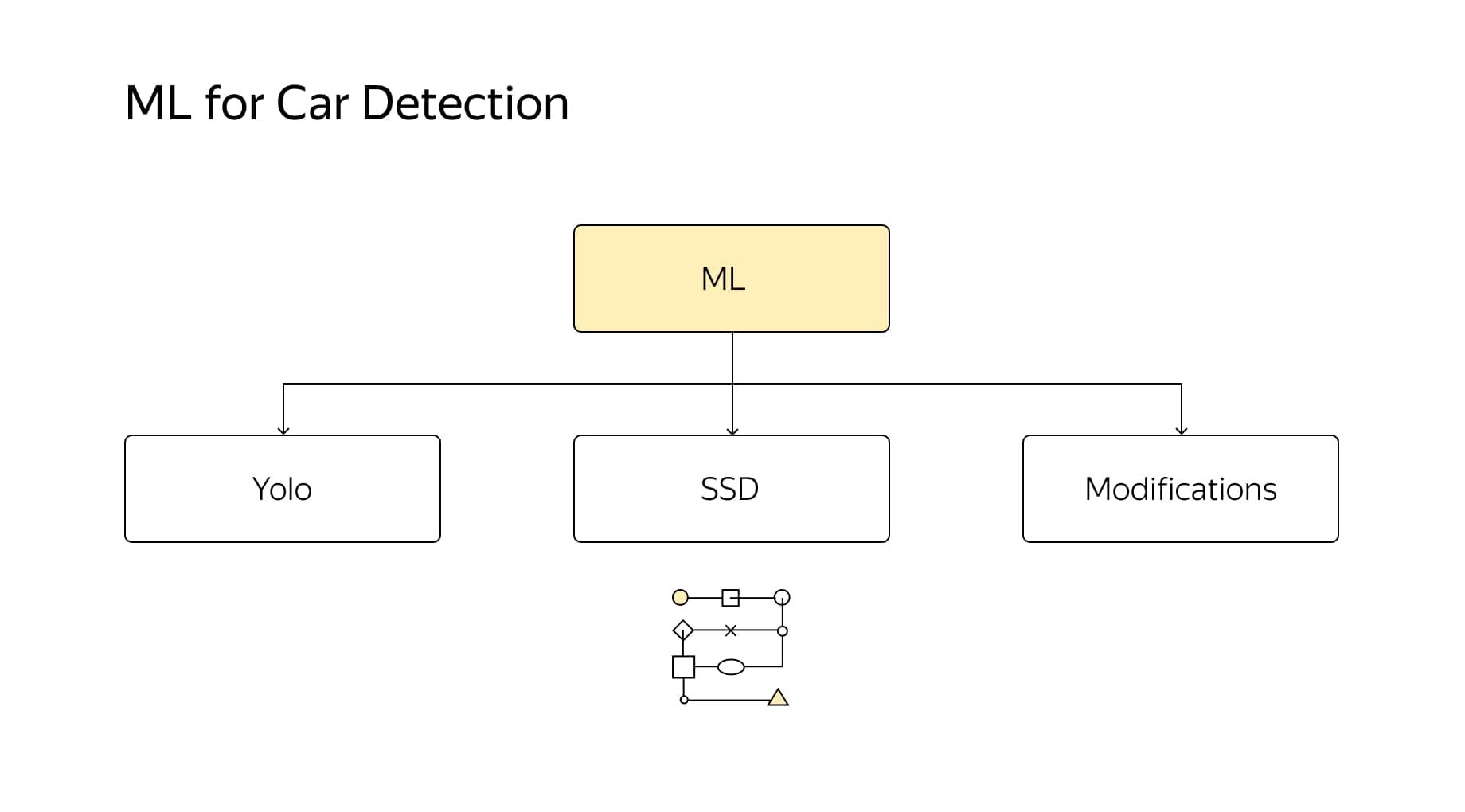

ML for Car Detection

The next step is to choose the machine learning method. There are many fast object detection methods available, such as SSD, YOLO, and their modifications.
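Detectors such as SSD and YOLO typically produce many overlapping candidate boxes for the same object and clean them up with non-maximum suppression (NMS): keep the highest-scoring box, drop any remaining box that overlaps it too much, and repeat. A minimal greedy NMS sketch in Python; the 0.5 IoU threshold is a common default, chosen here as an assumption:

```python
def iou(a: tuple, b: tuple) -> float:
    """Intersection over union of two boxes given as (x_min, y_min, x_max, y_max)."""
    inter_w = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    inter_h = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    intersection = inter_w * inter_h
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - intersection
    return intersection / union if union > 0 else 0.0


def non_max_suppression(boxes: list, scores: list, iou_threshold: float = 0.5) -> list:
    """Greedy NMS: return indices of kept boxes, highest score first."""
    order = sorted(range(len(boxes)), key=lambda i: scores[i], reverse=True)
    kept = []
    for i in order:
        # Keep a box only if it does not overlap too much with any box already kept.
        if all(iou(boxes[i], boxes[k]) < iou_threshold for k in kept):
            kept.append(i)
    return kept
```

This quadratic version is fine for a handful of boxes; production inference engines usually provide an optimized NMS implementation.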

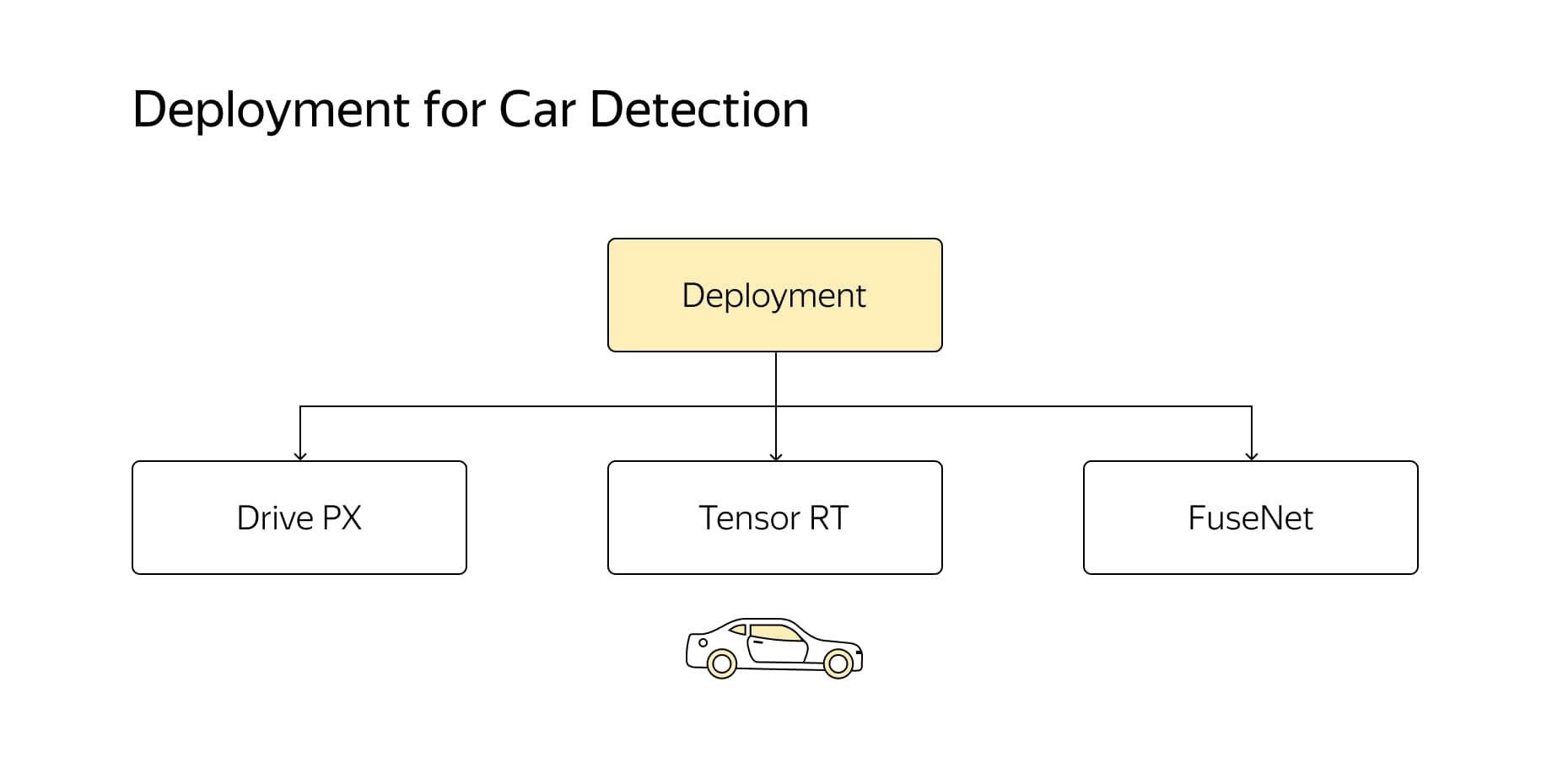

Deployment for Car Detection

The trained models are then deployed in the vehicles. Covering all 360 degrees takes a lot of cameras, and the system must work very quickly to respond to traffic conditions. Various technologies are used: inference engines like TensorRT, specialized hardware such as Drive PX, and FuseNet. Multiple algorithms operate on a unified backend, so shared computations are run only once. This is a fairly common approach.
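A common way to run several algorithms on many camera streams without repeating work is a multi-task network: one shared backbone extracts features once per image, and lightweight task-specific heads reuse them. The sketch below assumes this design; `SharedBackbone`, `run_all_cameras`, and the toy heads are hypothetical stand-ins, with a call counter to show that the expensive step runs once per image no matter how many heads there are.

```python
class SharedBackbone:
    """Stand-in for a heavy feature extractor; counts how many times it runs."""

    def __init__(self):
        self.calls = 0

    def __call__(self, image):
        self.calls += 1
        # Toy "features": mean brightness of each image row.
        return [sum(row) / len(row) for row in image]


def run_all_cameras(images, backbone, heads):
    """Run the backbone once per camera image and feed its features to every task head."""
    results = []
    for image in images:
        features = backbone(image)  # the expensive part, computed once per image
        results.append({name: head(features) for name, head in heads.items()})
    return results


# Hypothetical lightweight heads that reuse the same features.
HEADS = {
    "cars": lambda f: sum(1 for v in f if v > 0.5),
    "pedestrians": lambda f: sum(1 for v in f if 0.2 < v <= 0.5),
}
```

With four cameras and two heads, the backbone runs four times rather than eight; the saving grows with every task added.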

In addition to vehicles, the system also detects pedestrians and their direction of movement. This algorithm runs in real time on a large number of car cameras.

3D Car Detection Pipeline

Object detection in camera images is essentially a solved problem. There are various algorithms available, as well as lots of competitions and datasets on this topic.

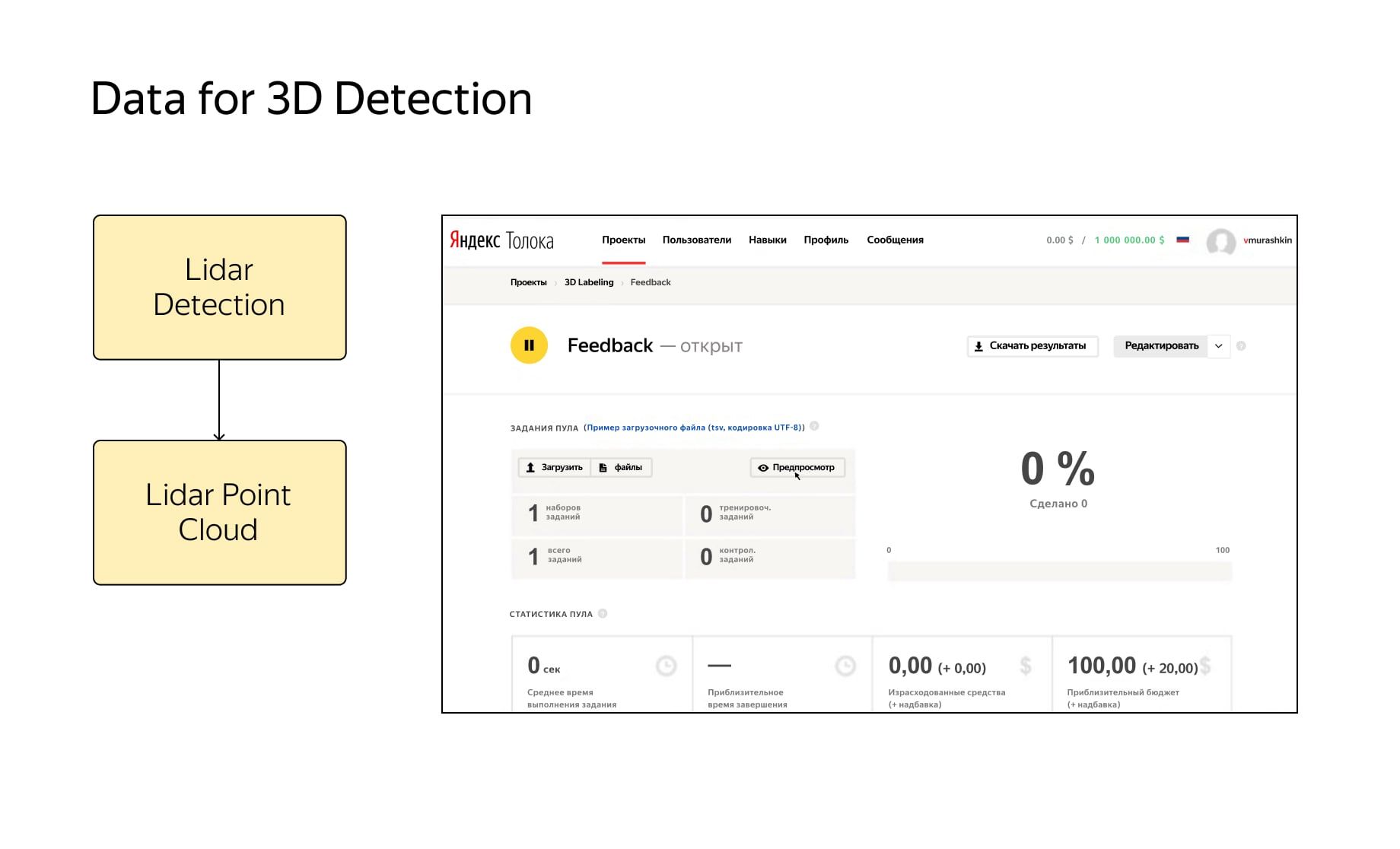

Data for 3D Detection

The situation with lidars is more difficult because there was previously only one relevant dataset available: the KITTI dataset. To get started, Yandex developers had to label new data from scratch, and at this point Toloka came to the rescue again.

Labeling a point cloud is not a trivial procedure. Toloka users are not experts in 3D labeling, so it was a challenge to explain how 3D projections work and how to identify cars in a point cloud, but a good lidar labeling pipeline was established.
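A 3D label is commonly stored as an oriented box: a center, a size, and a yaw angle around the vertical axis. Checking which lidar points fall inside a box is a useful sanity check for point-cloud labels, for example to flag boxes that contain no points at all. This parameterization and the `Box3D` class are assumptions for illustration, not the project's actual labeling format.

```python
import math
from dataclasses import dataclass


@dataclass
class Box3D:
    cx: float      # center, meters
    cy: float
    cz: float
    length: float  # extent along the box's heading
    width: float
    height: float
    yaw: float     # rotation around the vertical axis, radians

    def contains(self, x: float, y: float, z: float) -> bool:
        """True if the point lies inside the oriented box."""
        dx, dy = x - self.cx, y - self.cy
        # Rotate the offset by -yaw so the box axes line up with x and y.
        local_x = math.cos(self.yaw) * dx + math.sin(self.yaw) * dy
        local_y = -math.sin(self.yaw) * dx + math.cos(self.yaw) * dy
        return (
            abs(local_x) <= self.length / 2
            and abs(local_y) <= self.width / 2
            and abs(z - self.cz) <= self.height / 2
        )


def count_points_inside(box: Box3D, points: list) -> int:
    """Number of (x, y, z) points that fall inside the box."""
    return sum(1 for x, y, z in points if box.contains(x, y, z))
```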

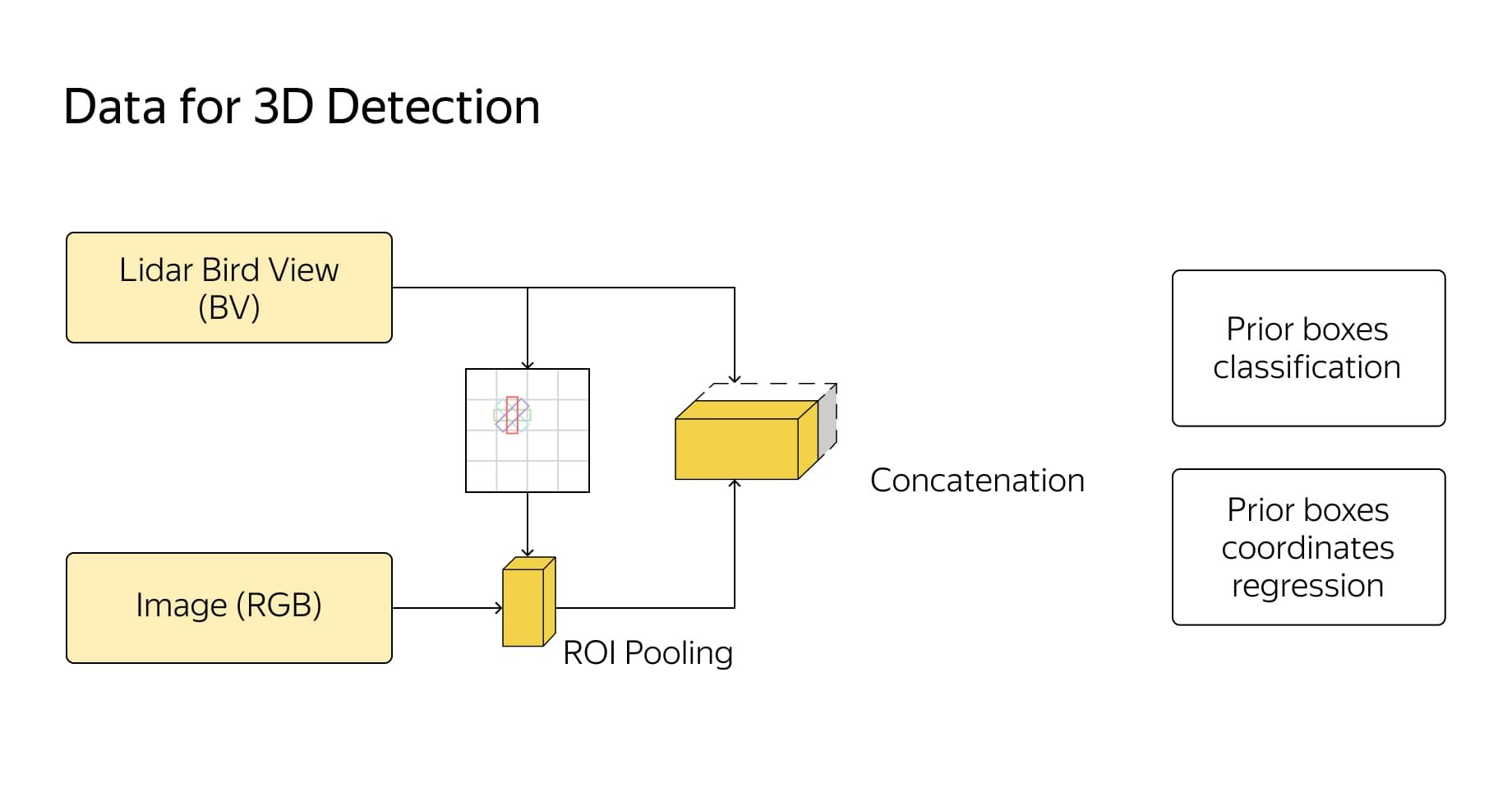

The next challenge is to feed point clouds, which are sets of 3D coordinates around the car, into neural networks that are optimized for detection. Yandex experimented with an approach in which the points are projected onto a bird's-eye view, and the projection is divided into cells. If a cell contains at least one point, it is considered occupied.
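The occupancy step can be sketched as a grid over the ground plane. The 40 m by 40 m area and 0.5 m cell size below are arbitrary illustrative values, and `bev_occupancy` is a hypothetical name; points outside the area are simply ignored.

```python
def bev_occupancy(points, x_range=(0.0, 40.0), y_range=(-20.0, 20.0), cell_size=0.5):
    """Bird's-eye-view occupancy grid: grid[i][j] == 1 if any point falls in cell (i, j)."""
    nx = int(round((x_range[1] - x_range[0]) / cell_size))
    ny = int(round((y_range[1] - y_range[0]) / cell_size))
    grid = [[0] * ny for _ in range(nx)]
    for x, y, _z in points:  # height is ignored in this projection
        if not (x_range[0] <= x < x_range[1] and y_range[0] <= y < y_range[1]):
            continue  # outside the area of interest
        i = min(int((x - x_range[0]) // cell_size), nx - 1)
        j = min(int((y - y_range[0]) // cell_size), ny - 1)
        grid[i][j] = 1
    return grid
```

The result is a 2D array that a convolutional network can consume much like a single-channel image.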

Data for 3D Detection

Vertical slices can be added as well: if the resulting cube contains at least one point, it can be assigned some characteristic value. For example, describing each cube by its topmost point works well. The slices are then fed into a neural network, much like the channels of an image. There are 14 input channels, and they are handled the same way as in SSD, plus an input signal from a network trained for detection. An image is fed into the network, which is trained end-to-end. The network outputs the 3D boxes along with their classes and positions.
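Adding vertical slices turns the grid into a stack of channels, one per height band, with each cube (voxel) described by its topmost point. The sketch below assumes four slices between -2 m and 2 m and stores the height of the topmost point above the bottom of that range, with 0.0 for empty voxels (so a point lying exactly on the bottom plane is indistinguishable from an empty voxel). How the 14 input channels mentioned above were actually composed is not specified, so the slice count here is arbitrary, and `bev_height_slices` is a hypothetical name.

```python
def bev_height_slices(points, x_range=(0.0, 40.0), y_range=(-20.0, 20.0),
                      z_range=(-2.0, 2.0), cell_size=0.5, num_slices=4):
    """Return channels[k][i][j]: height of the topmost point in voxel (i, j, k), or 0.0 if empty."""
    nx = int(round((x_range[1] - x_range[0]) / cell_size))
    ny = int(round((y_range[1] - y_range[0]) / cell_size))
    slice_height = (z_range[1] - z_range[0]) / num_slices
    channels = [[[0.0] * ny for _ in range(nx)] for _ in range(num_slices)]
    for x, y, z in points:
        if not (x_range[0] <= x < x_range[1]
                and y_range[0] <= y < y_range[1]
                and z_range[0] <= z < z_range[1]):
            continue  # outside the volume of interest
        i = min(int((x - x_range[0]) // cell_size), nx - 1)
        j = min(int((y - y_range[0]) // cell_size), ny - 1)
        k = min(int((z - z_range[0]) // slice_height), num_slices - 1)
        # Keep only the topmost point seen so far in this voxel.
        channels[k][i][j] = max(channels[k][i][j], z - z_range[0])
    return channels
```

Stacking the slices gives a `num_slices`-channel tensor, which is what lets a standard image detector such as SSD process lidar data without architectural changes.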

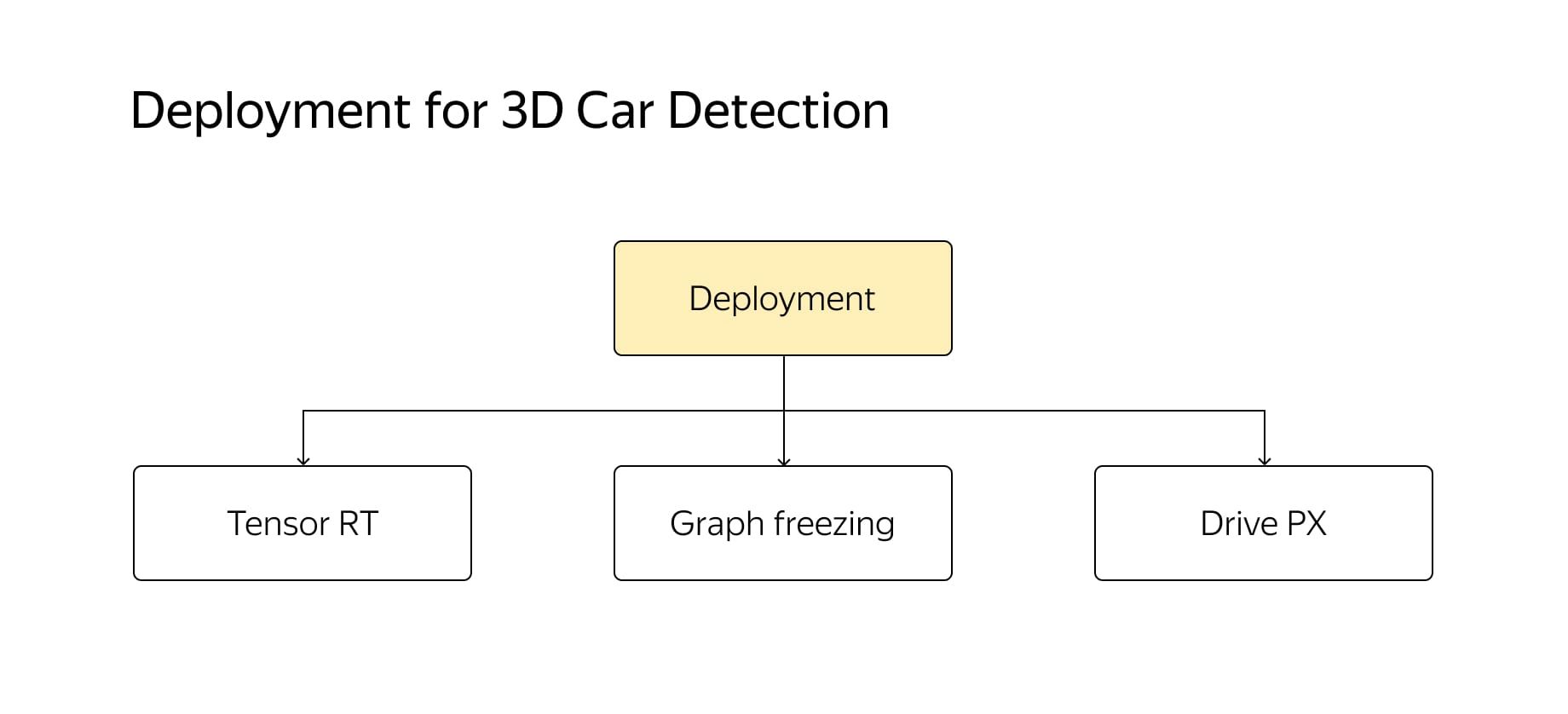

Deployment for 3D Car Detection

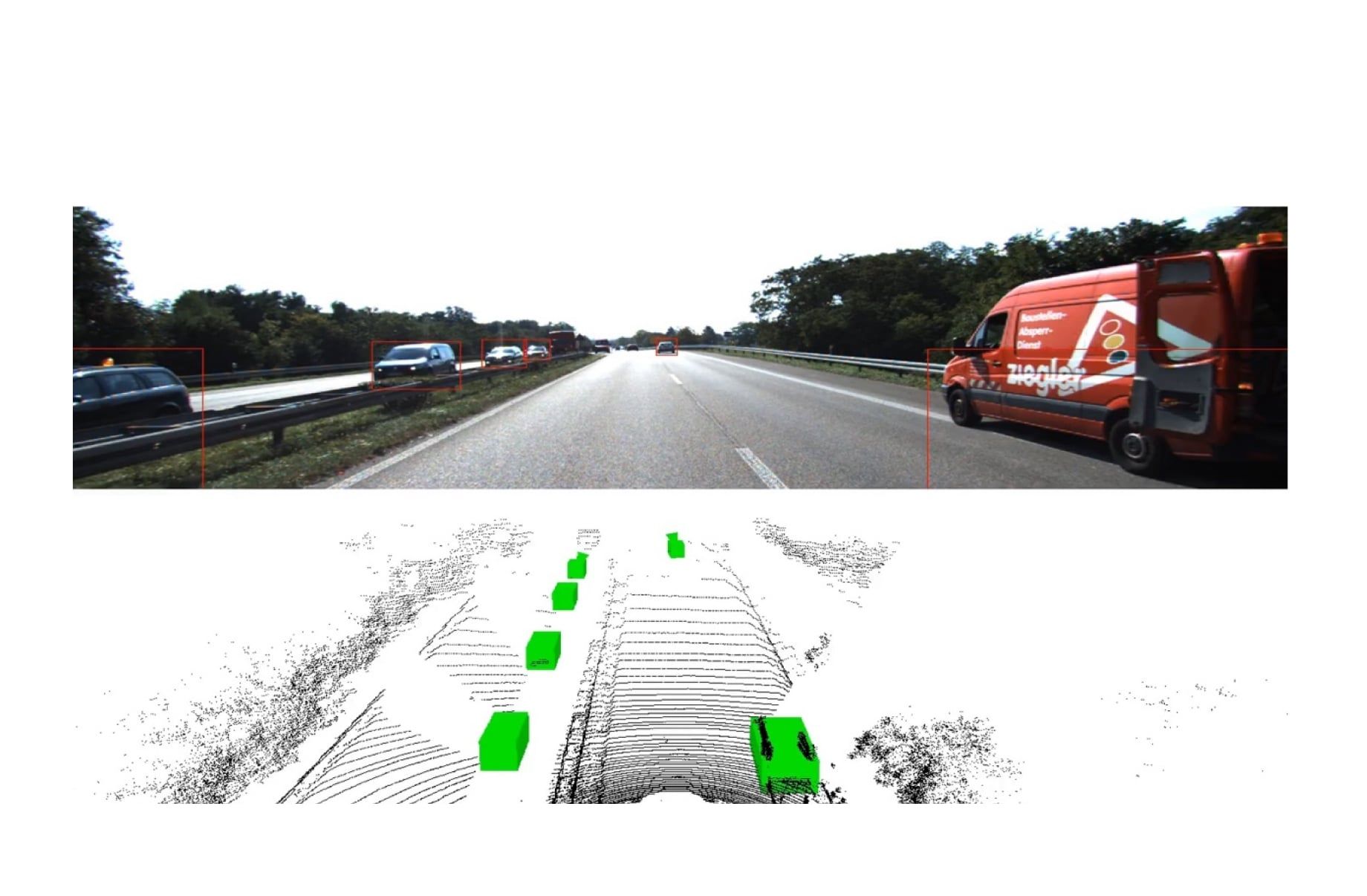

The next step is redeployment in the car system. Here's an example of how it works (this example comes from training, but the model also works in real tests).

Redeployment

Cars are marked with green boxes.

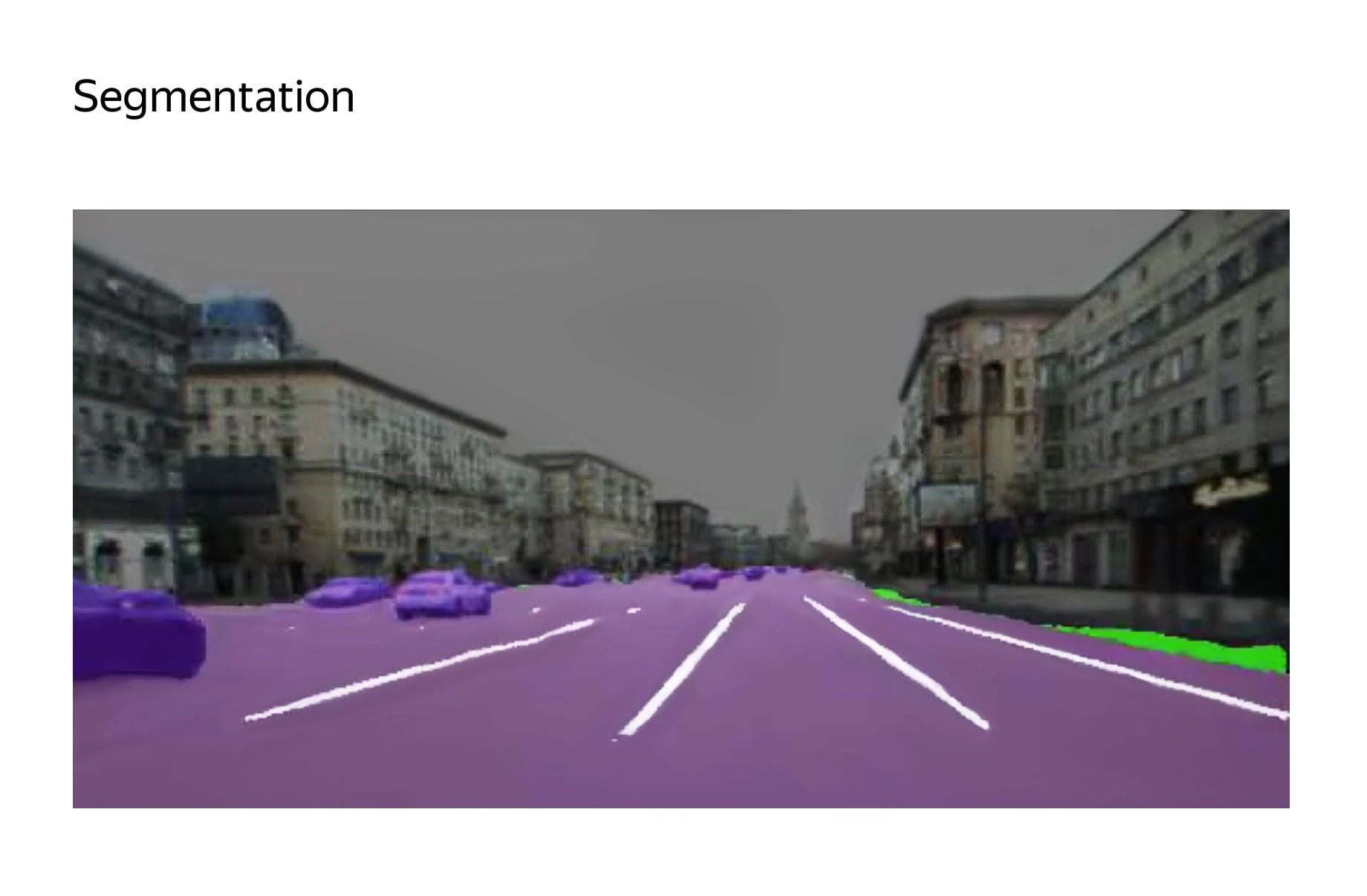

Segmentation

Segmentation is another approach that can be used to identify what is in the image and where it is located. Segmentation assigns a class to every pixel. This image shows a road with labeled segments: the edges of the road are marked in green, and the cars are marked in purple.

Segmentation has a disadvantage when its output is fed into motion planning: everything blends together. For instance, if cars are parked close to one another, they form one big purple block, and it's hard to tell how many cars there actually are. This leads to another problem, instance segmentation, which involves separating individual objects of the same class from one another. Yandex has been working on this, too.
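The merging problem is easy to reproduce. Semantic segmentation picks the highest-scoring class for each pixel; if you then count connected regions of "car" pixels, two cars that touch become a single region. A minimal Python sketch, assuming class id 2 means "car" (a hypothetical convention) and 4-connectivity:

```python
from collections import deque

CAR = 2  # hypothetical class id for "car"


def argmax_classes(scores):
    """Semantic segmentation output: pick the highest-scoring class for every pixel."""
    return [[max(range(len(px)), key=px.__getitem__) for px in row] for row in scores]


def count_regions(mask, cls):
    """Count 4-connected regions of pixels labeled `cls`."""
    h, w = len(mask), len(mask[0])
    seen = [[False] * w for _ in range(h)]
    regions = 0
    for r in range(h):
        for c in range(w):
            if mask[r][c] != cls or seen[r][c]:
                continue
            regions += 1  # found a new region; flood-fill it
            seen[r][c] = True
            queue = deque([(r, c)])
            while queue:
                y, x = queue.popleft()
                for ny, nx in ((y + 1, x), (y - 1, x), (y, x + 1), (y, x - 1)):
                    if 0 <= ny < h and 0 <= nx < w and not seen[ny][nx] and mask[ny][nx] == cls:
                        seen[ny][nx] = True
                        queue.append((ny, nx))
    return regions
```

Two parked cars that touch in the mask are counted as one region. Instance segmentation avoids this ambiguity by predicting a separate mask for each object rather than one mask per class.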

Results

Watch the video of a self-driving car on Moscow streets after a heavy snowfall. The Yandex team tries to experiment with as many different approaches and hypotheses as possible. The goal is not to create the world's best object detection. That's important, but developing new sensors and new approaches comes first. The challenge is to test and implement these innovations as quickly as possible while dealing with whatever obstacles crop up, whether problems with deployment in cars or bottlenecks in data labeling. Toloka resolves the latter easily and successfully.