← Blog

/

Tau Bench: The next generation of AI agent evaluation

High-quality human expert data. Now accessible for all on Toloka Platform.

An AI agent always sounds certain, but that fluent confidence often masks the point where its logical thread begins to fray. The real danger isn't the occasional confident lie as much as it's the silent error buried inside a seemingly straightforward customer request. This is where reliability is truly tested, and where Tau Bench enters the story.

We've evolved Tau Bench to move beyond simple, isolated checks. The new focus is on complex, state-dependent workflows that mirror the most challenging environments where autonomous agents are deployed in production.

That shift builds on what Tau Bench and Tau² first revealed – that agents behave differently when the user interacts like a real person rather than a scripted prompt. Those settings exposed failures that static test suites couldn’t reach, and that principle remains at the core of the new work.

What we’ve added is a setting where each task depends on the one before it. A change made early on alters the conditions of later steps, and a small arithmetic or policy mistake can move through the workflow unnoticed instead of staying contained.

It’s a new benchmark built on the same foundations, aimed at failure modes that older versions never had to confront.

Why manufacturing exposes agent weakness

A simplified manufacturing environment creates the conditions needed to test whether an agent can follow the interdependencies between steps.

This domain is difficult for three core reasons:

Cascading consequences

Numbers don't sit in a neat table; they change as work progresses. An update to a release affects a lot's quantity, which in turn impacts allocations, and finally influences a downstream order. Agents often struggle when a third action depends on the accurate outcome of the first, or when a policy rule is subtly overlooked.

Sequential arithmetic fragility

Arithmetic errors have a way of revealing weakness early. A single miscalculation, carried forward and referenced in later steps, can cause the entire run to veer off course without the agent noticing.

Testing a complex request

Even straightforward requests force the agent to move through a sequence where one decision leans on another. It starts by selecting an action, then checks the state that might alter what happens next. Once that’s done, it has to judge whether the request fits the rules. When this sequence is compressed into a short exchange, weak points surface faster. A single missed check can tilt the entire run off course, and a confident user prompt can push the agent into accepting something it shouldn’t. This is where most general models slip.

Example of a failure mode:

One task asked the agent to adjust a release after allocations were already created. That small change set off a chain where each step depended on the accuracy of the last. The agent explained the sequence perfectly but carried forward a tiny arithmetic mistake. By the time it reached the final action, the numbers no longer matched what the domain allowed, so the run failed.

It’s a clear example of how a single slip in a dependent chain can undo the entire workflow. A good explanation doesn’t guarantee the update is correct.

The evolution of Tau Bench

While effective at the time, the earliest Tau Bench domains were built for less capable frontier models. As AI advanced, the same tasks that once exposed errors started to pass easily. The signal disappeared.

Instead of retiring the benchmark, we decided to make more challenging extensions. Our new design philosophy focuses on creating tasks that are resistant to current model capabilities by requiring persistent, accurate state management and policy adherence under pressure.

Building the rigorous new benchmark

The new benchmark was built to expose how agents behave under shifting state and evolving conditions, so its structure reflects the pressures seen in real workflows.

Task design

We assembled 50 tasks for an initial release that apply pressure in diverse ways: long-chain dependencies, buried essential details, scenarios where forgetting an earlier value is realistic, and requests that conflict with the policy.

Strict success criteria

Success requires the agent to move through the domain without leaving pieces behind. A correct explanation is not enough. If the wrong tool is used, a required field remains unchanged, or an update is applied in the wrong order, the run fails. A partial update can create more damage than a refusal in a real system.

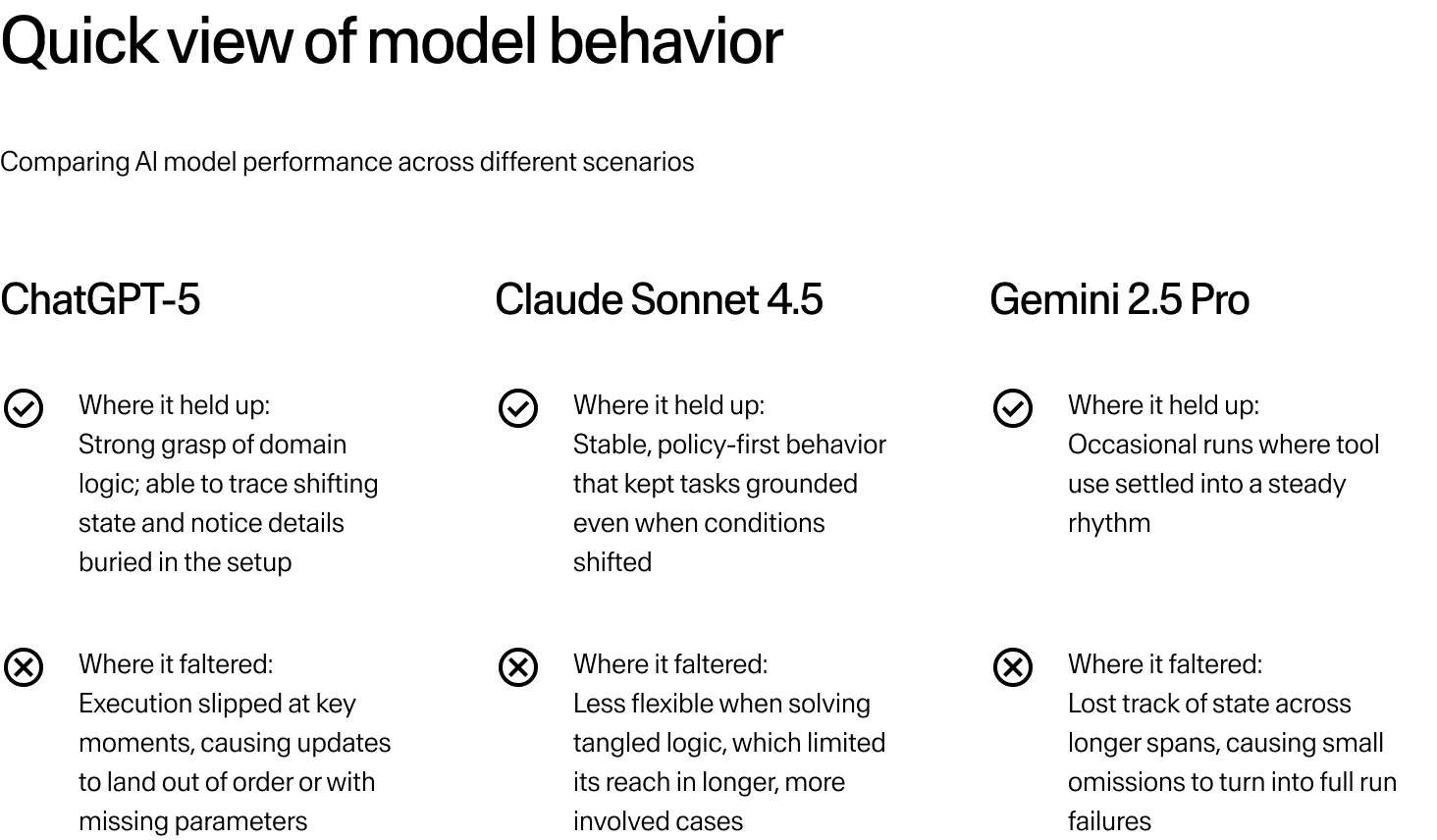

How the agents performed under pressure

The moment the runs began, differences between the models became visible.

What the agents revealed

The runs immediately exposed clear differences between models, revealing patterns that match real operational failure modes in production systems:

Reliability ≠ Intelligence

Some agents reasoned well but couldn’t maintain consistency through the workflow. A clear explanation doesn’t guarantee the action that follows is updated correctly.

Policy is flexible (to the agent)

Some agents shifted their behaviour the moment the user applied pressure. A hint of urgency or a confident push was often enough for the model to lower its guard and allow something it should have blocked. They’re trained to be helpful, so they tend to agree unless the policy is written in a way that removes any room to bend.

The silent error pattern

Fragility around arithmetic was a common theme. The agent narrated its plan with confidence, but the underlying numbers had already diverged into a slow slide toward an incorrect final state.

Long-form documentation exposes weaknesses

Weaker models began losing their footing the moment the surrounding documentation grew longer. This is a recognizable struggle for anyone dealing with multi-page internal documentation.

A clearer road ahead for deployment

The new manufacturing domain doesn’t claim that agents are unreliable in a broad sense. It shows where modern agents hold up under pressure and where they falter.

If you’re assessing where autonomous agents can be trusted, the domain gives you a clearer view of how they behave when each action depends on the one before it. It reveals the parts of a workflow where models stay steady and the points where they lose structure.

Instead of relying on instinct or scattered anecdotes, you can work from evidence gathered across controlled runs. That makes it easier to understand where an agent can operate without close supervision and where safeguards still matter.

The TAU-bench extension to this domain builds on that foundation. It brings us closer to understanding how these systems behave when their work shapes the work that follows. As new domains are introduced – especially ones where rules collide or where guidance spans longer chains – the picture of agent readiness will keep sharpening.

Without this kind of rigorous evaluation, decisions rest on marketing claims and intuition. With it, you have something firmer: a basis for judgement rooted in how the models really do behave.

Talk to us to see how Tau Bench can support your evaluation work.

Subscribe to Toloka news

Case studies, product news, and other articles straight to your inbox.