Decomposition

Break your task down into steps that are clear enough for anyone to handle.

Decomposition means breaking a task down by replacing one large problem with a series of smaller, separate problems that are easier to solve and can be completed by different annotators. It is the first step to take when planning a new crowdsourcing project.

Benefits of decomposition

- Makes it easier to review completed tasks

- Reduces the number of errors

- Lowers the entry barrier for new annotators, so more people can handle the task

- Saves money: you spend less on the project overall

How does it save money?

It may seem counterintuitive, but splitting one task into several smaller ones helps lower the total project cost. This happens because smaller tasks are easier to complete without mistakes, so we don't need to re-evaluate them as often as complex ones.

|  | Cost | Tasks for re-evaluation | Active annotators |
|---|---|---|---|
| One complex task | 100% | 15-25% | 600 |
| Several smaller tasks | 70% | 10-15% | 11,000 |

How do I know that a task needs to be decomposed?

If your task offers a choice of 3-5 answers and the instructions fit on one page without scrolling, then most likely your task doesn't need to be decomposed. In all other cases, you should probably try to break down the task. You can also discover when you need to decompose by running short experiments. If your task is taken up very slowly or all the Tolokers are filtered out due to low skill levels, and you don't see any problems with your control tasks, you can assume that the task is too complex.
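The rule of thumb above can be written down as a small checklist. This is a minimal sketch under stated assumptions: `TaskDesign`, `PilotRun` and their fields are hypothetical names invented for illustration, not part of any Toloka API, and the sketch reads the "3-5 answers" guideline as an upper bound (so binary tasks also count as simple).

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class TaskDesign:
    """Hypothetical summary of a task design (field names are illustrative)."""
    answer_options: int              # how many answers a Toloker chooses from
    instructions_fit_one_page: bool  # readable without scrolling


@dataclass
class PilotRun:
    """Hypothetical signals from a short experimental run."""
    taken_up_slowly: bool
    all_filtered_by_skill: bool
    control_tasks_ok: bool  # control tasks were checked and look correct


def probably_simple(design: TaskDesign) -> bool:
    # Assumption: "3-5 answers" is treated as "at most 5 answers".
    return design.answer_options <= 5 and design.instructions_fit_one_page


def pilot_suggests_too_complex(run: PilotRun) -> bool:
    # Slow uptake or everyone filtered out, while the control tasks are fine.
    slow_or_filtered = run.taken_up_slowly or run.all_filtered_by_skill
    return slow_or_filtered and run.control_tasks_ok


def should_decompose(design: TaskDesign, run: Optional[PilotRun] = None) -> bool:
    """Experiment results, when available, override the design heuristic."""
    if run is not None and pilot_suggests_too_complex(run):
        return True
    return not probably_simple(design)
```

For example, `should_decompose(TaskDesign(12, True))` returns `True` because twelve options exceed the guideline, while a four-option task with one-page instructions passes unless a pilot run shows slow uptake with healthy control tasks.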

Ways to decompose a task

Decomposing a complex challenge

If your task is to answer a complex question, try dividing it into a series of simple ones that are easy to answer and independent of each other. For example, instead of asking whether a tech support specialist gave a “good” or “bad” answer, ask if the response was detailed, friendly and grammatically correct.
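The tech support example can be sketched as one small task per independent sub-question. This is an illustrative sketch only: the task dictionaries and `make_tasks` are hypothetical, and the article does not say how to combine the answers, so `overall_quality` uses an assumed "all criteria met" rule that you could replace with a weighted score.

```python
from typing import Dict, List

# The vague question "Was this answer good or bad?" replaced by simple,
# independent yes/no questions (taken from the example in the text).
SUB_QUESTIONS: List[str] = [
    "Is the response detailed?",
    "Is the response friendly?",
    "Is the response grammatically correct?",
]


def make_tasks(response_id: str) -> List[Dict[str, str]]:
    """Create one small task per sub-question for a single support response.

    The questions are independent, so each task can go to a different annotator.
    """
    return [{"response_id": response_id, "question": q} for q in SUB_QUESTIONS]


def overall_quality(answers: Dict[str, bool]) -> str:
    """Hypothetical combination rule: "good" only if every criterion is met."""
    missing = [q for q in SUB_QUESTIONS if q not in answers]
    if missing:
        raise ValueError(f"no answer yet for: {missing}")
    return "good" if all(answers[q] for q in SUB_QUESTIONS) else "bad"
```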

Decomposing a multi-task

If your task involves a series of questions or actions, try presenting them one at a time. If you have a set of pictures and you need to outline traffic lights on them, first ask if the picture contains a traffic light and then (if yes) ask to outline it. Best practice here is to use two different projects for collecting data.
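The two-project setup for traffic lights can be sketched as a filter between projects. This is a minimal sketch assuming tasks are plain dictionaries and that project 1 results arrive as an image-to-answer mapping; the function names are hypothetical and do not correspond to a real Toloka API.

```python
from typing import Dict, List


def detection_tasks(image_urls: List[str]) -> List[Dict[str, str]]:
    """Project 1 (hypothetical): only ask whether a traffic light is present."""
    return [
        {"image": url, "question": "Does the picture contain a traffic light?"}
        for url in image_urls
    ]


def outlining_tasks(detection_results: Dict[str, bool]) -> List[Dict[str, str]]:
    """Project 2 (hypothetical): ask for outlines only on images that
    project 1 marked as containing a traffic light."""
    return [
        {"image": url, "instruction": "Outline every traffic light."}
        for url, has_light in detection_results.items()
        if has_light
    ]
```

With this design, images without traffic lights never reach the outlining project, so annotators there only see pictures where there is something to outline.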

Decomposing a multitude of options

Sometimes there's only one question that needs to be answered, but there are too many possible answers and it's difficult to remember all the rules about them. If there are more than 10 options to choose from, we recommend grouping them thematically, so that the Toloker first chooses a general theme and then chooses within a limited variety of answers. Best practice here is to support the successive classification with a clean interface that displays only the necessary options.
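Successive classification can be sketched as a two-level mapping from themes to options. The taxonomy below is a hypothetical example (twelve product categories grouped into three themes); only the "more than 10 options" threshold comes from the text.

```python
from typing import Dict, List

# Hypothetical taxonomy: 12 flat options grouped into 3 themes.
THEMES: Dict[str, List[str]] = {
    "Clothing": ["Dresses", "Jackets", "Shoes", "Hats"],
    "Electronics": ["Phones", "Laptops", "Headphones", "Cameras"],
    "Home": ["Furniture", "Lighting", "Kitchenware", "Bedding"],
}

MAX_FLAT_OPTIONS = 10  # threshold from the text: "more than 10 options"


def needs_grouping(options: List[str]) -> bool:
    return len(options) > MAX_FLAT_OPTIONS


def step_one_options() -> List[str]:
    """First screen: the Toloker picks only a general theme."""
    return list(THEMES)


def step_two_options(theme: str) -> List[str]:
    """Second screen: show only the options inside the chosen theme."""
    return THEMES[theme]
```

Each screen now shows at most four choices, which is the "clean interface that displays only the necessary options" the paragraph recommends.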

Decomposing a crowdsourcing project itself

Collecting crowd data involves more than just setting up a task for people to do. You also need quality control mechanisms to keep the results reliable. If the best control method is human evaluation, try adding a post-verification project where Tolokers check tasks completed by other Tolokers.
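A post-verification project produces yes/no verdicts about other Tolokers' work, which then have to be turned into accept or reject decisions. The sketch below assumes a hypothetical policy (wait for at least three verdicts, accept on a strict majority of "correct"); the article does not prescribe the number of verifiers or the decision rule.

```python
from collections import Counter
from typing import Dict, List


def verify(verdicts: List[bool], min_votes: int = 3) -> str:
    """Decide on one completed task from verifiers' yes/no verdicts.

    Hypothetical policy: wait for `min_votes` verdicts, then accept only if a
    strict majority says the work is correct (ties are rejected).
    """
    if len(verdicts) < min_votes:
        return "pending"
    votes = Counter(verdicts)
    return "accepted" if votes[True] > votes[False] else "rejected"


def review_all(results: Dict[str, List[bool]]) -> Dict[str, str]:
    """Map each assignment ID to a decision from the verification project."""
    return {assignment_id: verify(v) for assignment_id, v in results.items()}
```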

Examples of real-life decomposition techniques

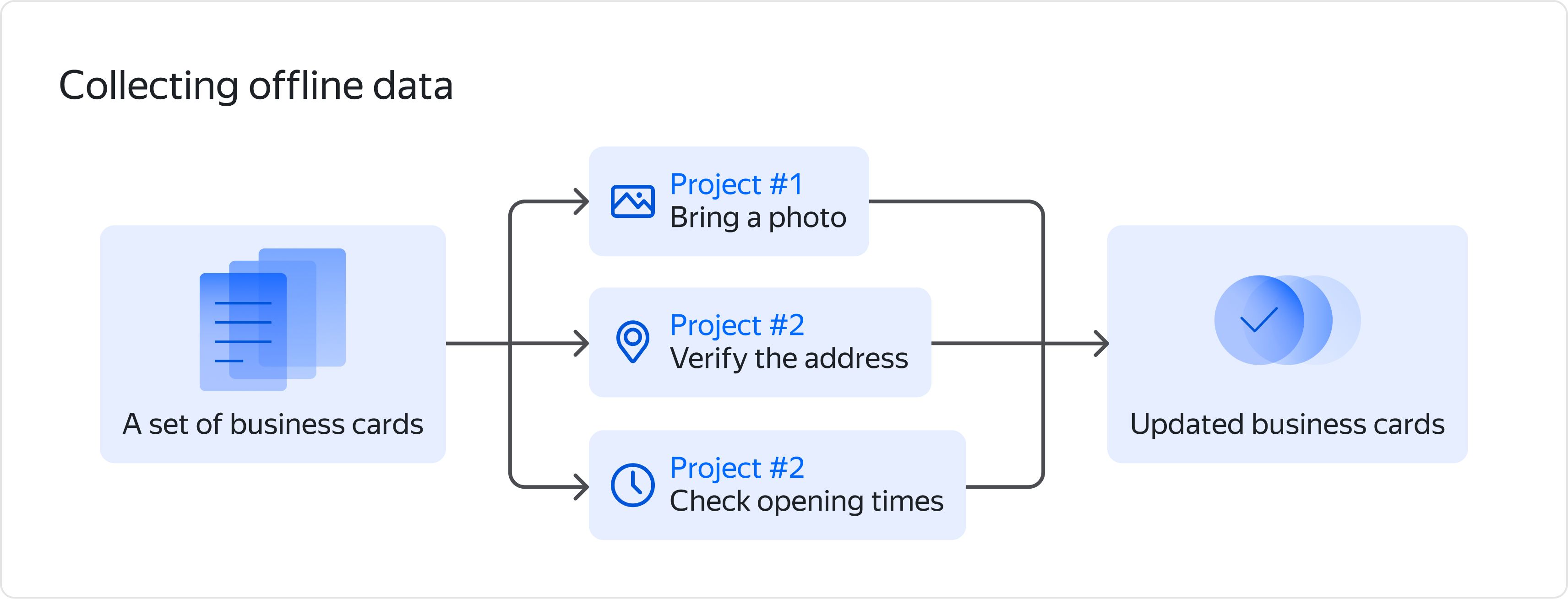

Let's say your task is to regularly update information about local businesses to keep an up-to-date list. You give Tolokers an offline task to find a particular business, check the address and opening hours, and provide a photo. After the task is complete, you find out that some answers are only partly correct: some Tolokers didn't provide a quality photo, while others got the opening hours wrong. How can you clean up this data? Do you need to pay the people who were only partly correct? Where do you get the extra budget to re-label the objects with missing data? This task can be decomposed into three independent projects, each collecting one simple piece of information, so that Tolokers don't get confused by multitasking:

- An entrance photo

- Address

- Opening hours

This allows you to use simple quality control mechanisms, choose Tolokers who are better at each individual task, and save money on relabeling incorrect data.
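The business example can be sketched as splitting one multi-part record into three field-level tasks, each accepted or rejected on its own. This is an illustrative sketch: `FieldTask`, the field names and the `accepted` flag are hypothetical and stand in for whatever quality control each of the three projects uses.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional

FIELDS = ["entrance_photo", "address", "opening_hours"]  # one project per field


@dataclass
class FieldTask:
    business_id: str
    field: str
    answer: Optional[str] = None
    accepted: Optional[bool] = None  # set by that field's own quality control


def split(business_id: str) -> List[FieldTask]:
    """Turn one multi-part offline task into three independent tasks."""
    return [FieldTask(business_id, f) for f in FIELDS]


def to_relabel(tasks: List[FieldTask]) -> List[FieldTask]:
    """Only the parts that failed quality control go back for relabeling."""
    return [t for t in tasks if t.accepted is False]


def merge(tasks: List[FieldTask]) -> Dict[str, Dict[str, str]]:
    """Assemble the accepted answers into one record per business."""
    records: Dict[str, Dict[str, str]] = {}
    for t in tasks:
        if t.accepted and t.answer is not None:
            records.setdefault(t.business_id, {})[t.field] = t.answer
    return records
```

If only the opening hours are wrong, `to_relabel` returns just that one task, so the correct photo and address are kept and only one small task is paid for again.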

Decomposition and…

When we say that decomposition is the key, we mean it. Here's how decomposition is connected to other components of crowdsourcing:

- Instructions

A well-decomposed task is easily explained using simple instructions. Learn more about clear instructions.

- Pricing

The simpler a task is, the quicker it can be submitted, and the cheaper it is. Learn more about pricing strategies.

- Interface

Each single step of a decomposed task should be supported by a clean and simple task interface, with no unnecessary elements. Learn more about good interfaces.

- Quality control

A set of simple tasks is easy to check with basic quality control methods, such as majority vote or golden sets. Learn more about quality control.
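The two basic methods named above can be sketched in a few lines. This is a minimal sketch, not Toloka's built-in implementation: `majority_vote` picks the most frequent label (ties go to the label seen first, which is how `Counter.most_common` orders equal counts), and `golden_accuracy` scores a Toloker against control tasks with known answers. The function names and data shapes are assumptions.

```python
from collections import Counter
from typing import Dict, List, Optional


def majority_vote(labels: List[str]) -> str:
    """Aggregate overlapping answers to one task by picking the most common label."""
    return Counter(labels).most_common(1)[0][0]


def golden_accuracy(answers: Dict[str, str], golden: Dict[str, str]) -> Optional[float]:
    """Share of golden-set (control) tasks a Toloker answered correctly.

    Returns None when the Toloker has not answered any control task yet.
    """
    checked = [task_id for task_id in golden if task_id in answers]
    if not checked:
        return None
    correct = sum(answers[t] == golden[t] for t in checked)
    return correct / len(checked)
```

Both checks work only because each decomposed task has a short, unambiguous answer; a free-form multi-part answer could not be compared label by label like this.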