Stir but do not mix: Crafting Your Own Synthetic Data Pipeline for SFT

Introduction

Welcome to the digital kitchen of AI development! Today, we're rolling up our sleeves to “cook” a synthetic data pipeline, perfect for Supervised Fine-Tuning (SFT). Just as in any culinary adventure, the right ingredients, techniques, and a pinch of inspiration are essential. Let’s get started!

Ingredients

- A hearty batch of source data (raw texts relevant to your domain, sourced from Hugging Face, Kaggle, or the web)
- A collection of text slicing tools (for chopping long texts into manageable paragraphs)
- A filter for refining your data stew (to keep only the most relevant chunks)
- An open-source LLM for profiling and seasoning. We prefer Mixtral 8x7B, but you can pick one to your taste
- Web queries crafted from profiles to fetch fresh data for our digital kitchen
- Some LLM-based creativity serum to generate diverse prompts
- Tools for textual transformation and deduplication

Cooking Instructions

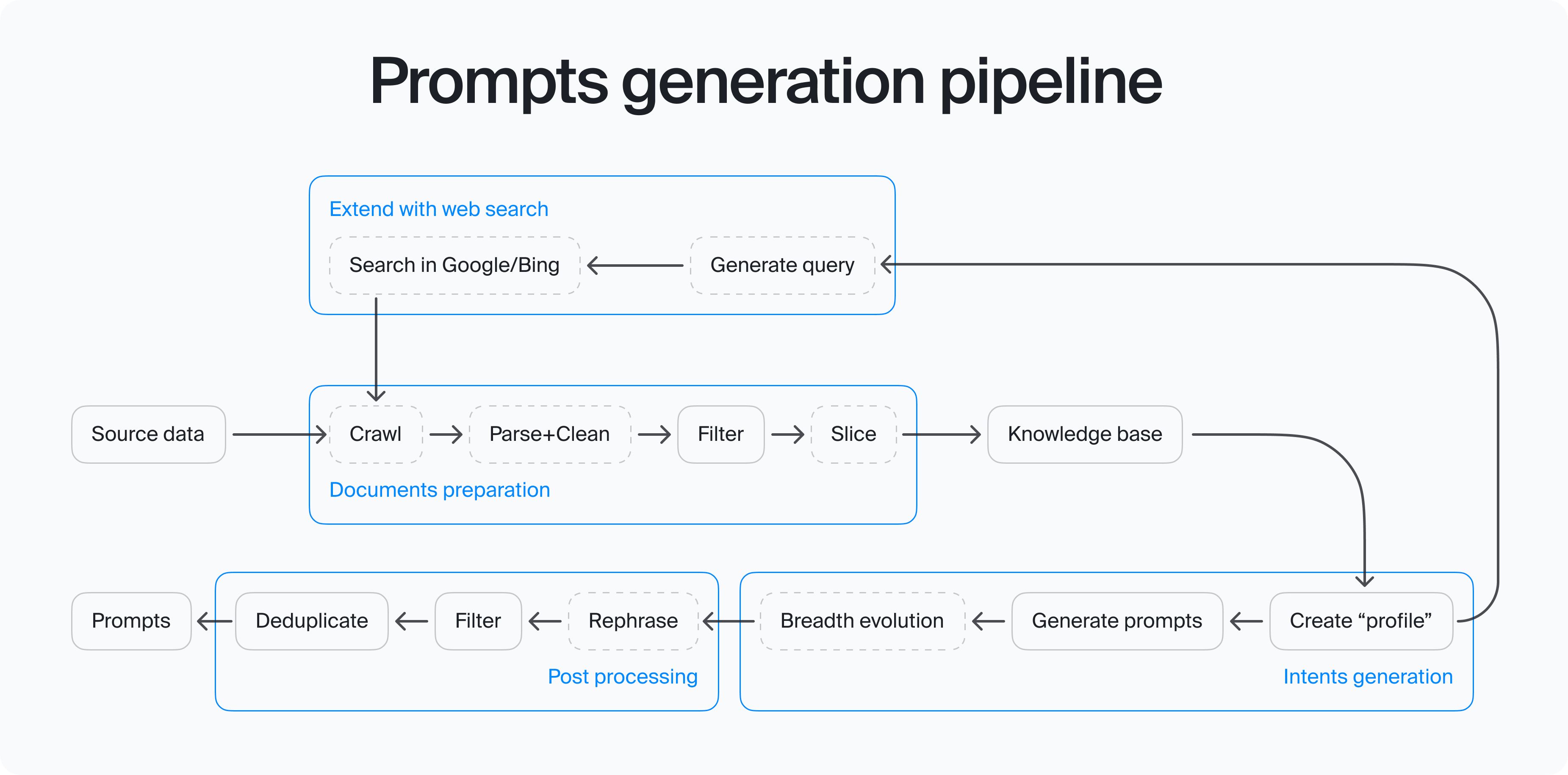

Step 1: Gather Your Ingredients

Start by collecting a set of raw texts that correspond to your domain of interest. These can be open-source datasets already published online or the results of web scraping.

Step 2: Chop It Up

Slice your long texts into smaller, more digestible paragraphs. Think of this as prepping your vegetables for a stir-fry: you want chunks that are long enough to be meaningful but short enough to fit several into the context window of the LLM you intend to use later.
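
As a rough illustration of the chopping step, here is a minimal sketch that packs paragraphs into chunks under a word budget. The function name `chunk_text` and the `max_words` limit are assumptions made for this example; a real pipeline would more likely count tokens with the tokenizer of the chosen LLM.

```python
def chunk_text(text: str, max_words: int = 200) -> list[str]:
    """Greedily pack paragraphs into chunks of at most max_words words."""
    chunks: list[str] = []
    current: list[str] = []
    current_len = 0
    for para in text.split("\n\n"):
        words = para.split()
        if not words:
            continue  # skip empty paragraphs
        # A paragraph longer than the budget is cut into word windows.
        pieces = [words[i:i + max_words] for i in range(0, len(words), max_words)]
        for piece in pieces:
            # Start a new chunk when this piece would overflow the current one.
            if current and current_len + len(piece) > max_words:
                chunks.append(" ".join(current))
                current, current_len = [], 0
            current.extend(piece)
            current_len += len(piece)
    if current:
        chunks.append(" ".join(current))
    return chunks


if __name__ == "__main__":
    sample = "a b c\n\nd e\n\nf g h i"
    for chunk in chunk_text(sample, max_words=5):
        print(chunk)
```

Keeping paragraphs whole where possible tends to preserve meaning better than cutting at a fixed character offset, which is why the sketch only splits inside a paragraph when it has to.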

Step 3: Sift Through Your Chunks

Use your filtering tool to sift through the text chunks, keeping only those that are relevant to your specific needs, such as domain and subdomains.
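
The recipe does not prescribe a particular filtering tool, so the sketch below uses a deliberately simple stand-in: it scores each chunk by the share of domain keywords it contains. The names `relevance_score`, `filter_chunks`, and the `min_score` threshold are hypothetical; a trained classifier or an LLM-based judge could take their place.

```python
import re


def relevance_score(chunk: str, domain_terms: set[str]) -> float:
    """Fraction of (single-word, lowercase) domain terms found in the chunk."""
    if not domain_terms:
        return 0.0
    tokens = set(re.findall(r"[a-z0-9]+", chunk.lower()))
    return len(tokens & domain_terms) / len(domain_terms)


def filter_chunks(chunks: list[str], domain_terms: set[str],
                  min_score: float = 0.2) -> list[str]:
    """Keep only the chunks whose keyword score reaches min_score."""
    terms = {t.lower() for t in domain_terms}
    return [c for c in chunks if relevance_score(c, terms) >= min_score]


if __name__ == "__main__":
    terms = {"loan", "interest", "mortgage", "credit", "bank"}
    chunks = [
        "The bank raised the interest rate on every loan.",
        "Stir the soup slowly.",
    ]
    print(filter_chunks(chunks, terms))
```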

Step 4: Profile Seasoning

Create a “profile” for each document using your LLM. This profile is like a structured summary, identifying key flavors such as subdomain, main topic, and use case.
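
One possible way to build such a profile is to ask the LLM for a small JSON object and validate the reply. In the sketch below, the prompt wording, the field names, and the `llm` callable (any function that takes a prompt string and returns the model's text) are all assumptions for illustration, not a specific library API.

```python
import json
from typing import Callable, Optional

PROFILE_FIELDS = ("subdomain", "main_topic", "use_case")

PROFILE_TEMPLATE = (
    "Read the text and return a JSON object with the keys "
    "subdomain, main_topic and use_case.\n\nText:\n{text}\n\nJSON:"
)


def build_profile(text: str, llm: Callable[[str], str]) -> Optional[dict]:
    """Ask the LLM for a profile; return None if the reply is unusable."""
    reply = llm(PROFILE_TEMPLATE.format(text=text))
    # Models often wrap JSON in extra words, so cut out the outermost braces.
    start, end = reply.find("{"), reply.rfind("}")
    if start == -1 or end <= start:
        return None
    try:
        data = json.loads(reply[start:end + 1])
    except json.JSONDecodeError:
        return None
    if not isinstance(data, dict) or any(not data.get(k) for k in PROFILE_FIELDS):
        return None
    return {k: str(data[k]).strip() for k in PROFILE_FIELDS}


if __name__ == "__main__":
    def fake_llm(prompt: str) -> str:  # stand-in for a real model call
        return ('Sure! {"subdomain": "retail banking", '
                '"main_topic": "mortgages", "use_case": "customer support"}')

    print(build_profile("Some text about mortgages.", fake_llm))
```

Returning `None` for malformed replies makes it easy to retry or drop a document instead of letting a broken profile leak into later steps.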

Step 5: Fresh from the Web

Take an LLM of your choice and use the profiles you’ve created to generate further web queries. Collect the most relevant pages from the web, then clean and parse these new ingredients before mixing them back into your source data. Repeat these five steps until you have enough data. In our projects, we usually need 10 to 20 thousand prompts per domain, so keep that number in mind.
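
The "repeat until you have enough" loop can be sketched as below. Everything here is a hypothetical skeleton: `make_queries` stands for chunking, filtering, profiling, and LLM query generation for one document, and `fetch_pages` stands for searching the web and cleaning the results. Both are passed in as plain callables so the loop itself stays independent of any search or LLM provider.

```python
from typing import Callable, Iterable


def expand_corpus(
    seed_docs: list[str],
    make_queries: Callable[[str], list[str]],
    fetch_pages: Callable[[str], Iterable[str]],
    target_docs: int,
    max_rounds: int = 5,
) -> list[str]:
    """Grow the corpus round by round until target_docs is reached."""
    corpus = list(dict.fromkeys(seed_docs))  # drop exact duplicates, keep order
    seen = set(corpus)
    frontier = list(corpus)  # documents that have not produced queries yet
    for _ in range(max_rounds):
        if len(corpus) >= target_docs or not frontier:
            break
        new_docs = []
        for doc in frontier:
            for query in make_queries(doc):
                for page in fetch_pages(query):
                    if page not in seen:  # mix in only unseen pages
                        seen.add(page)
                        new_docs.append(page)
        corpus.extend(new_docs)
        frontier = new_docs
    return corpus
```

The `max_rounds` cap is a safety net for this sketch: without it, a domain with few relevant pages could keep the loop searching without ever reaching the target.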

Step 6: Prompt Generation

Use the profiles above and cook up a variety of prompts with your LLM.

Step 7: In-Breadth Flavor Testing

Evolve your prompts in breadth with the LLM, generating multiple similar prompts across different topics and use cases to fully explore the flavor palette. Prompt diversity is key to the success of the whole pipeline: you want your SFT dataset to cover a variety of use cases within a given domain. Toloka implements various automated and manual checks to guarantee that the domain is covered broadly.
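
In-breadth evolution can be approximated with a single instruction to the LLM plus some cleanup of its reply. The template text, the function name `evolve_in_breadth`, and the `llm` callable are assumptions for this sketch; the exact wording of the instruction is something to experiment with.

```python
import re
from typing import Callable

EVOLVE_TEMPLATE = (
    "Here is a user prompt from the domain '{domain}':\n{prompt}\n\n"
    "Write {n} new prompts from the same domain that cover different "
    "topics or use cases. Return one prompt per line, numbered."
)


def evolve_in_breadth(prompt: str, domain: str,
                      llm: Callable[[str], str], n: int = 3) -> list[str]:
    """Ask the LLM for n sibling prompts and parse them from its reply."""
    reply = llm(EVOLVE_TEMPLATE.format(domain=domain, prompt=prompt, n=n))
    variants: list[str] = []
    for line in reply.splitlines():
        # Strip list markers such as "1.", "2)", "-" or "*".
        cleaned = re.sub(r"^\s*(?:\d+[.)]|[-*])\s*", "", line).strip()
        # Skip blanks, echoes of the seed prompt, and repeated variants.
        if cleaned and cleaned != prompt and cleaned not in variants:
            variants.append(cleaned)
    return variants[:n]
```

The same pattern, with a template that asks for a rephrasing instead of a new topic, would serve for adding lexical variety.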

Step 8: Add Lexical Spice

Once again, use your LLM to rephrase your prompts to add lexical diversity, ensuring a rich, complex taste that avoids bland repetition.

Step 9: Quality Control

Filter out any bad prompts—those that are irrelevant, meaningless, or just plain rubbish. This step is crucial for maintaining the quality of your dish. Toloka has a variety of quality control tools in place and we apply relevant tools at every step in this process.
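
A few cheap rule-based checks can catch the most obvious rubbish before any heavier review. The sketch below is only an assumed first pass: the function name `is_usable_prompt` and all thresholds are made up for illustration, and relevance checks would still need an LLM judge or human review on top.

```python
def is_usable_prompt(prompt: str, min_words: int = 4, max_words: int = 300) -> bool:
    """Heuristic sanity check: length, share of letters, and repetition."""
    words = prompt.split()
    if not words or not (min_words <= len(words) <= max_words):
        return False
    # Mostly symbols or digits usually means parsing debris.
    letters = sum(ch.isalpha() for ch in prompt)
    if letters / len(prompt) < 0.6:
        return False
    # Very low vocabulary variety points to a degenerate loop.
    unique_ratio = len({w.lower() for w in words}) / len(words)
    if unique_ratio < 0.5:
        return False
    return True


if __name__ == "__main__":
    candidates = [
        "How do I compare fixed and variable mortgage rates?",
        "test test test test test",
        "??? 123 ### 456 !!!",
        "Hi",
    ]
    print([p for p in candidates if is_usable_prompt(p)])
```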

Step 10: Avoid Clumping

Finally, transform your text into embeddings and filter out any closely similar samples. This ensures each prompt in your collection remains distinct and covers a relevant area of the domain.
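
Here is a minimal sketch of the de-clumping step, assuming the embeddings have already been computed by some embedding model of your choice. It keeps a prompt only if its cosine similarity to every prompt kept so far stays below a threshold. The 0.9 threshold and the function names are assumptions to tune, not recommended values.

```python
import math
from typing import Sequence


def cosine(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine similarity of two vectors; 0.0 if either one is all zeros."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def deduplicate(prompts: Sequence[str],
                embeddings: Sequence[Sequence[float]],
                threshold: float = 0.9) -> list[str]:
    """Greedy filter: drop a prompt if it is too close to one already kept."""
    kept_idx: list[int] = []
    for i, vec in enumerate(embeddings):
        if all(cosine(vec, embeddings[j]) < threshold for j in kept_idx):
            kept_idx.append(i)
    return [prompts[i] for i in kept_idx]


if __name__ == "__main__":
    prompts = ["prompt A", "prompt A, reworded", "prompt B"]
    vectors = [[1.0, 0.0], [0.99, 0.1], [0.0, 1.0]]  # toy 2-D embeddings
    print(deduplicate(prompts, vectors))
```

This pairwise comparison is quadratic in the number of prompts, so for collections in the tens of thousands it may be worth swapping in a nearest-neighbor index.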

Our prompt starter is ready. Now we need to add a pipeline of hearty answers to it. Parts of the prompt-collection recipe can be reused here.

Step 1: Synthetic answers

You can use the same LLM that you used for prompt generation to produce a shortlist of synthetic answers.

Step 2: Web-condiments

To improve the quality of your answers, you need depth. Generating several queries per prompt and saving several top search results for each significantly extends the range of your answers.

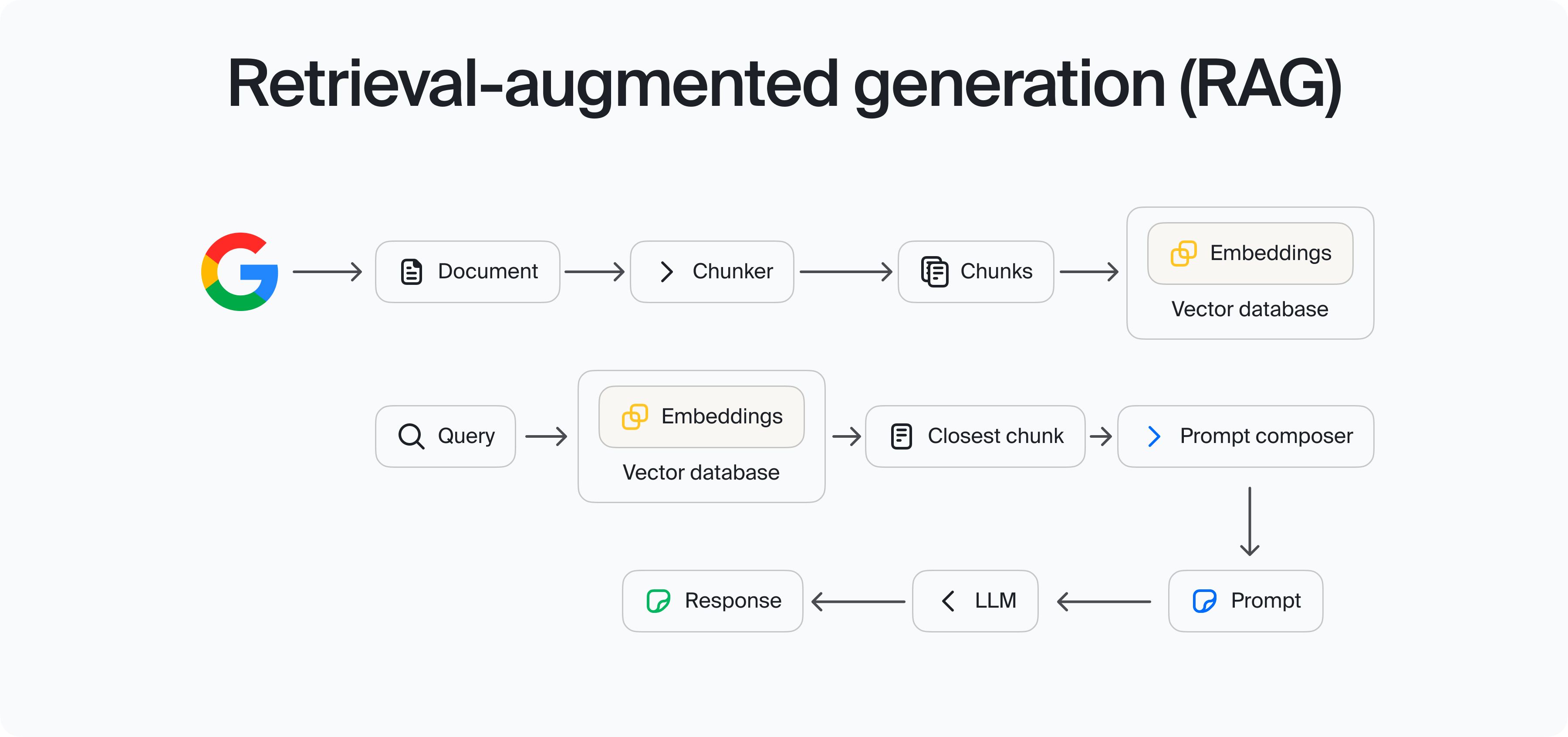

Step 3: Chop-n-RAG

As in the second step of the prompt generation pipeline, we split the relevant web pages into chunks. Now you can search for the chunks that are most relevant to a given prompt and add them to the model context, similar to the RAG approach. This extra knowledge adds domain-specific information, reduces hallucinations, and improves overall answer accuracy.
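
The retrieval part can be sketched as "score every chunk against the prompt, keep the top few, and paste them into the context". To stay self-contained, the example below scores with plain token overlap (Jaccard similarity) as a stand-in for embedding search. The function names and the wording of the final prompt are assumptions for illustration.

```python
import re


def tokenize(text: str) -> set[str]:
    """Lowercase alphanumeric tokens as a set."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def top_k_chunks(prompt: str, chunks: list[str], k: int = 3) -> list[str]:
    """Return up to k chunks with the highest token overlap with the prompt."""
    query = tokenize(prompt)
    scored = []
    for chunk in chunks:
        tokens = tokenize(chunk)
        union = query | tokens
        score = len(query & tokens) / len(union) if union else 0.0
        scored.append((score, chunk))
    # sorted() is stable, so ties keep their original order.
    ranked = sorted(scored, key=lambda pair: pair[0], reverse=True)
    return [chunk for score, chunk in ranked[:k] if score > 0]


def build_answer_prompt(prompt: str, context_chunks: list[str]) -> str:
    """Assemble the retrieved chunks and the question into one LLM prompt."""
    context = "\n\n".join(f"[{i}] {c}" for i, c in enumerate(context_chunks, 1))
    return (
        "Use the context to answer the question.\n\n"
        f"Context:\n{context}\n\nQuestion: {prompt}\nAnswer:"
    )


if __name__ == "__main__":
    chunks = [
        "Fixed mortgage rates stay the same for the whole term.",
        "Sourdough needs a long fermentation.",
        "Variable mortgage rates follow the central bank rate.",
    ]
    question = "How do fixed mortgage rates work?"
    print(build_answer_prompt(question, top_k_chunks(question, chunks, k=2)))
```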

Step 4: Fine-tuning with connoisseurs

If you are not satisfied with your synthetic answers, you can bring in expert knowledge and evaluate the answers on various scales such as style, correspondence to the domain, and context relevance. Once you have your evaluations, you can fine-tune the model to the domain and further increase the quality of your synthetic answers.
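
One simple way to turn expert evaluations into fine-tuning data is to keep only the answers that score well on every scale. The sketch below assumes a 1 to 5 rating scale, a particular record layout, and two thresholds. All of these are hypothetical choices for illustration, not a description of how Toloka selects its data.

```python
from statistics import mean


def select_for_finetuning(rated_answers: list[dict],
                          min_mean: float = 4.0,
                          min_any: int = 3) -> list[dict]:
    """Keep answers with a high average score and no weak individual scale."""
    selected = []
    for item in rated_answers:
        scores = list(item["scores"].values())
        if scores and mean(scores) >= min_mean and min(scores) >= min_any:
            selected.append(item)
    return selected


if __name__ == "__main__":
    rated = [
        {"prompt": "p1", "answer": "a1",
         "scores": {"style": 5, "domain": 4, "context_relevance": 4}},
        {"prompt": "p2", "answer": "a2",
         "scores": {"style": 5, "domain": 5, "context_relevance": 2}},
    ]
    for item in select_for_finetuning(rated):
        print(item["prompt"], item["answer"])
```

Requiring a minimum on each scale, and not only on the average, keeps an answer with excellent style from slipping through when it fails on relevance.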

Congratulations, you’ve just prepared a synthetic data pipeline for SFT! Just like in cooking, the key to success in AI development is in carefully selecting your ingredients, applying the right techniques, and not being afraid to experiment. Tune it while it’s hot, and bon appétit in the world of AI!
