Best Stable Diffusion prompts and how to find them

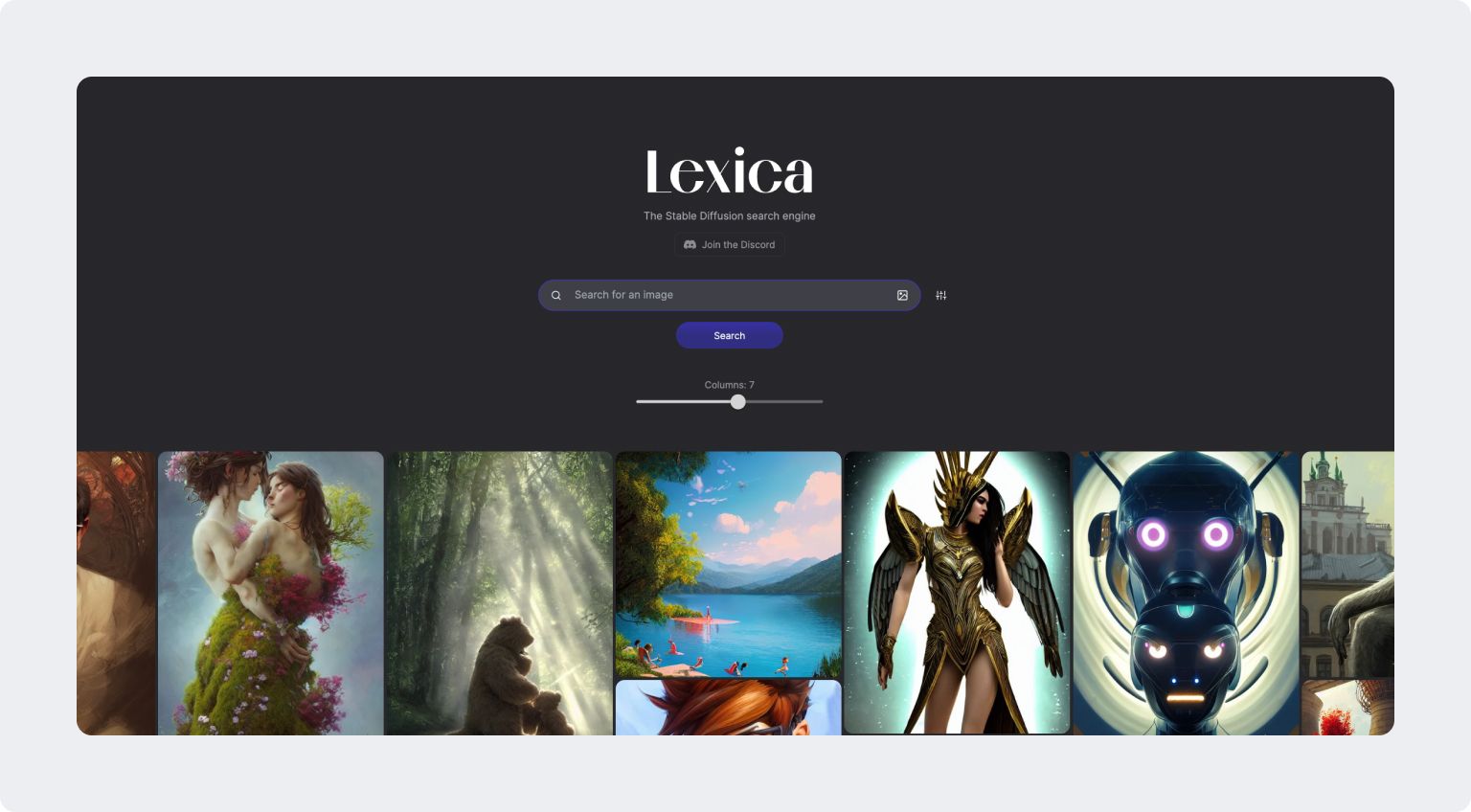

Recently, a new generation of generative AI models such as DALL-E 2 and Stable Diffusion has inundated the machine learning community with high-quality AI art. For some cool examples, just browse the lexica.art website.

The most exciting thing about these models is how easy they are to access. OpenAI has now publicly released the DALL-E 2 API, and Stable Diffusion is open source and small enough to run in Google Colab or even on a personal laptop.

However, generating high-quality images takes some prompt engineering. There are even dedicated cookbooks that show how to construct a prompt correctly. For instance, many tricks exploit specific properties of the LAION-5B/OpenAI CLIP datasets: adding keywords such as “4k” or “trending on artstation” to a prompt tends to produce better images. Such keywords do work, but it is often counterintuitive which ones work best and how to combine them to get exciting pictures. Most users just search for keywords that improve a single prompt and share their findings on Reddit and Discord servers.

In this post, I’m going to share the Toloka research team’s experience with automatic prompt engineering with human feedback. In short, we developed an approach that runs a genetic optimization over sets of keywords for Stable Diffusion: real human annotators compare pairs of images generated with different keywords, and the algorithm optimizes the keywords to match their preferences.

Why it looks like web search quality evaluation

To provide a better understanding of our approach, let’s consider the following example. Imagine we want to build a really good website generator that produces websites that are ranked in top positions in Google for most of the queries (don’t do this in real life — it’s just search spam). This means that our site generator needs to produce websites that are more relevant (or just better) than other websites that try to appear on the search result page for the same queries.

This problem is quite similar to the one we want to solve with image generators. We have some image descriptions in mind (concepts) and we want to produce the best images showing these concepts. We might think of image descriptions as queries and our images as websites. In other words, we want to find keywords that rank generated images in top positions if we sort them by user preference.

Why is this analogy relevant? Because there are established methods for evaluating website relevancy, and we can apply them to solve our problem.

Evaluation

In search relevance evaluation we collect some buckets of queries for testing the quality of the search results. In our case, it means we need to find some concepts that are representative enough with different setups, orientations, styles, etc.

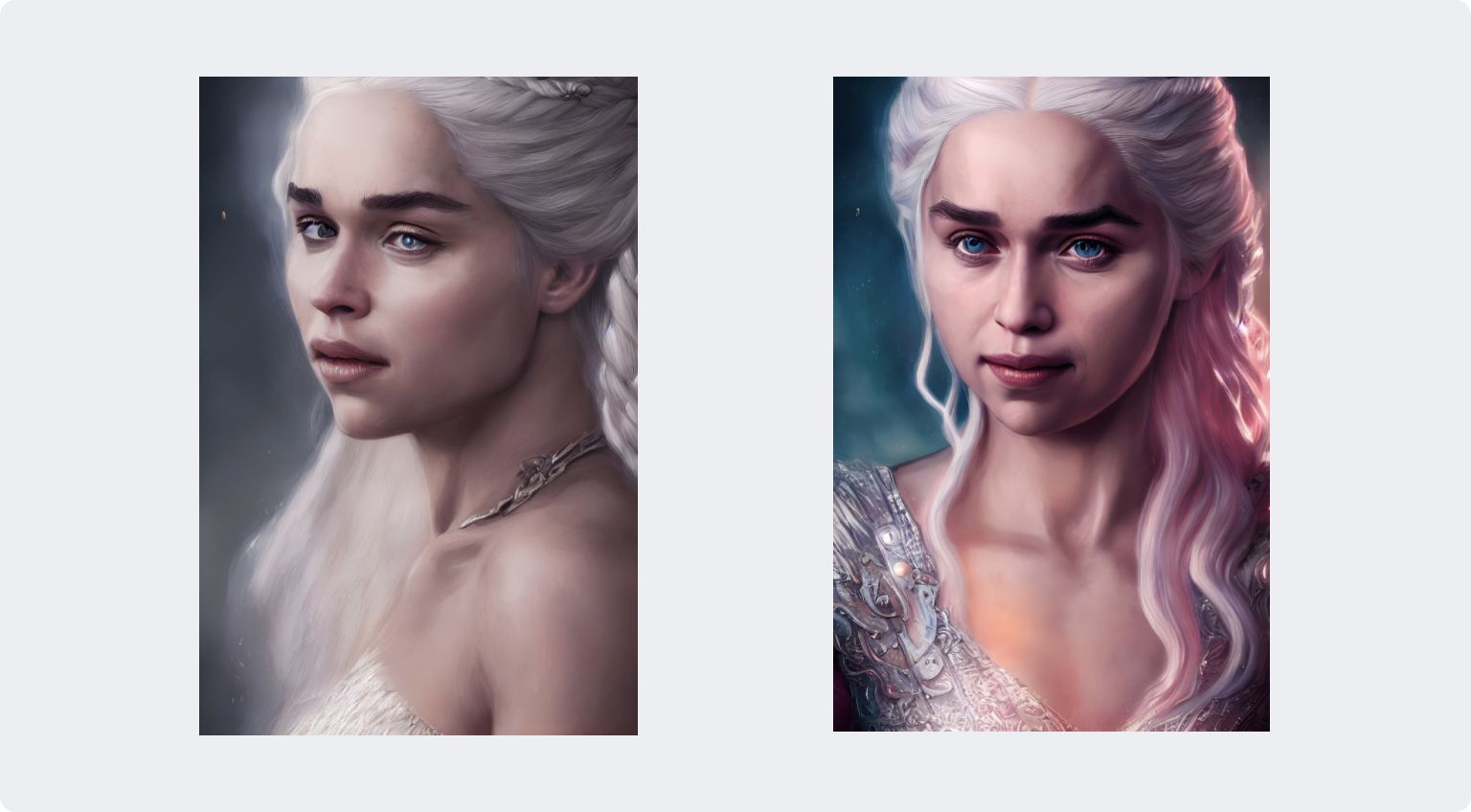

We decided to browse lexica.art, Stable Diffusion Discord, and Reddit to find concepts that real users feed into Stable Diffusion. We’ve divided them into six categories: portraits, buildings, animals, interiors, landscapes, and other. For each category, we took 10 image descriptions for training and 2 for testing (to stay within our annotation budget). For example, “A portrait painting of Daenerys Targaryen queen” goes into the portrait category.

Now let’s move on to how we evaluate different combinations of keywords. Assume we want to know which one of “4k 8k”, “trending on artstation, colorful background”, and “4k unreal engine” is the best.

We appended the keywords to image descriptions, generating four images using each set of keywords. Then, for each image description, we ran pairwise comparisons on the Toloka crowdsourcing platform: real humans viewed two sets of four images and chose the better one.
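The setup above can be sketched in a few lines of Python. This is only an illustration: the comma separator and the helper names `build_prompt` and `comparison_pairs` are assumptions, not taken from our actual code.

```python
from itertools import combinations


def build_prompt(description, keywords):
    """Append a keyword set to an image description.

    Assumption: keywords are joined with commas; the post does not
    specify the exact separator.
    """
    if not keywords:
        return description
    return description + ", " + ", ".join(keywords)


def comparison_pairs(keyword_sets):
    """Return every unordered pair of keyword-set indices to compare."""
    return list(combinations(range(len(keyword_sets)), 2))


keyword_sets = [
    ["4k", "8k"],
    ["trending on artstation", "colorful background"],
    ["4k", "unreal engine"],
]
description = "A portrait painting of Daenerys Targaryen queen"
prompts = [build_prompt(description, ks) for ks in keyword_sets]
pairs = comparison_pairs(keyword_sets)  # each pair becomes one annotation task
```

With three keyword sets this yields three pairs per image description, and each pair is shown to annotators as two sets of four images.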

After we collected the results of the comparisons, we needed to rank the keyword sets for each image description. We order them from worst to best, so that a higher position means a better keyword set and the position is the quantity we maximize.

To do so, we used the Bradley-Terry model as implemented in Crowd-Kit. One might think of it as something like the Elo rating in chess: it turns pairwise outcomes into a score for each item. Once we have a ranked list for every image description, we take each keyword combination’s positions in these lists and average them. As a result, we have a single number for each keyword combination, and we can use it as a quality metric.
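To make the metric concrete, here is a minimal pure-Python sketch of Bradley-Terry scoring followed by rank averaging. It is not the Crowd-Kit implementation: it uses the standard minorization-maximization update, and the function names, the `(winner, loser)` input format, and the iteration count are assumptions made for illustration.

```python
from collections import defaultdict


def bradley_terry(comparisons, n_iter=100):
    """Estimate Bradley-Terry scores from a list of (winner, loser) pairs."""
    items = sorted({x for pair in comparisons for x in pair})
    if not items:
        return {}
    wins = defaultdict(int)
    pair_counts = defaultdict(int)
    for winner, loser in comparisons:
        wins[winner] += 1
        pair_counts[frozenset((winner, loser))] += 1

    scores = {item: 1.0 for item in items}
    for _ in range(n_iter):
        updated = {}
        for i in items:
            denom = 0.0
            for j in items:
                n_ij = pair_counts.get(frozenset((i, j)), 0) if i != j else 0
                if n_ij:
                    denom += n_ij / (scores[i] + scores[j])
            updated[i] = wins[i] / denom if denom else scores[i]
        total = sum(updated.values())
        scores = {item: s / total for item, s in updated.items()}  # normalize
    return scores


def mean_rank(scores_per_description):
    """Average each candidate's position (0 = worst) across descriptions."""
    positions = defaultdict(list)
    for scores in scores_per_description:
        ordered = sorted(scores, key=scores.get)  # worst to best
        for position, candidate in enumerate(ordered):
            positions[candidate].append(position)
    return {c: sum(p) / len(p) for c, p in positions.items()}


# Toy annotations for one image description: A usually beats B, B usually beats C.
votes = [("A", "B")] * 3 + [("B", "A")] + [("A", "C")] * 2 + [("B", "C")] * 2 + [("C", "B")]
scores = bradley_terry(votes)
metric = mean_rank([scores])
```

In the toy data, `A` ends up with the highest score and therefore the highest position; with many image descriptions, `mean_rank` yields the single number per keyword combination described above.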

Genetic algorithm

So far we have discussed only how to evaluate keywords, not how to find the best ones. We now know how to obtain a quality metric value for any keyword combination. The next goal is to maximize it over different keywords. This is a combinatorial optimization problem, so a straightforward solution is to use a genetic algorithm.

Let’s take the top 100 keywords from Stable Diffusion Dreambot queries. Each keyword combination then can be described as a bit-mask of length 100. Ones stand for keywords included in the combination and zeros for ones that are not. So, we want to find a bit-mask that has the highest quality metric value.
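As a small illustration of this encoding, the sketch below converts between keyword lists and bit-masks. The five-word vocabulary and the helper names are assumptions for the example; the real vocabulary has 100 keywords.

```python
def keywords_to_mask(keywords, vocabulary):
    """Encode a keyword combination as a list of 0/1 bits over the vocabulary."""
    chosen = set(keywords)
    return [1 if keyword in chosen else 0 for keyword in vocabulary]


def mask_to_keywords(mask, vocabulary):
    """Decode a bit-mask back into the keywords it selects."""
    return [keyword for bit, keyword in zip(mask, vocabulary) if bit]


# Hypothetical tiny vocabulary standing in for the top 100 keywords.
vocabulary = ["4k", "8k", "concept art", "octane render", "trending on artstation"]
mask = keywords_to_mask(["concept art", "4k"], vocabulary)
decoded = mask_to_keywords(mask, vocabulary)
```

Keeping the vocabulary order fixed means that equal masks always describe the same keyword combination, which makes cross-over and mutation simple list operations.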

First, we take a bit-mask that stands for the 15 most popular keywords and one that contains only zeros. This will be our initial population. Then, we evaluate the quality of the corresponding keywords with pairwise comparisons. After that, we perform a cross-over: we take these two samples and two random integers a and b, and swap the segments of the bit-masks between positions a and b. Finally, we apply a mutation: each bit is flipped with probability 0.01.

As a result, we get a new candidate that is evaluated and added to the population. We repeat these steps multiple times, choosing the two best samples in the population for the cross-over. After 56 iterations, the best set of keywords is “cinematic, colorful background, concept art, dramatic lighting, high detail, highly detailed, hyper realistic, intricate, intricate sharp details, octane render, smooth, studio lighting, trending on artstation”.
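The loop described above can be sketched as follows. Several details are assumptions made for illustration: the child keeps the first parent's bits outside the segment [a, b) and takes the second parent's bits inside it, and `fitness` is a hypothetical stand-in for the human-based quality metric, which in the real experiment comes from pairwise comparisons rather than from a fixed function.

```python
import random


def crossover(parent_a, parent_b, rng):
    """Build a child from parent_a with the segment [a, b) taken from parent_b."""
    a, b = sorted(rng.sample(range(len(parent_a) + 1), 2))
    return parent_a[:a] + parent_b[a:b] + parent_a[b:]


def mutate(mask, rng, rate=0.01):
    """Flip each bit independently with probability `rate`."""
    return [bit ^ 1 if rng.random() < rate else bit for bit in mask]


def evolve(population, fitness, n_iterations, rng):
    """Repeatedly cross the two best masks, mutate the child, and add it."""
    population = [list(mask) for mask in population]
    for _ in range(n_iterations):
        best, second = sorted(population, key=fitness, reverse=True)[:2]
        population.append(mutate(crossover(best, second, rng), rng))
    return max(population, key=fitness)


rng = random.Random(0)
n_keywords = 100
top_15 = [1] * 15 + [0] * (n_keywords - 15)  # 15 most popular keywords
empty = [0] * n_keywords                     # no keywords at all
# Toy fitness (number of selected keywords), NOT the human preference metric.
best_mask = evolve([top_15, empty], fitness=sum, n_iterations=56, rng=rng)
```

In the actual experiment, every new candidate has to be scored by annotators before the next iteration, so each step of this loop is far more expensive than the toy fitness suggests.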

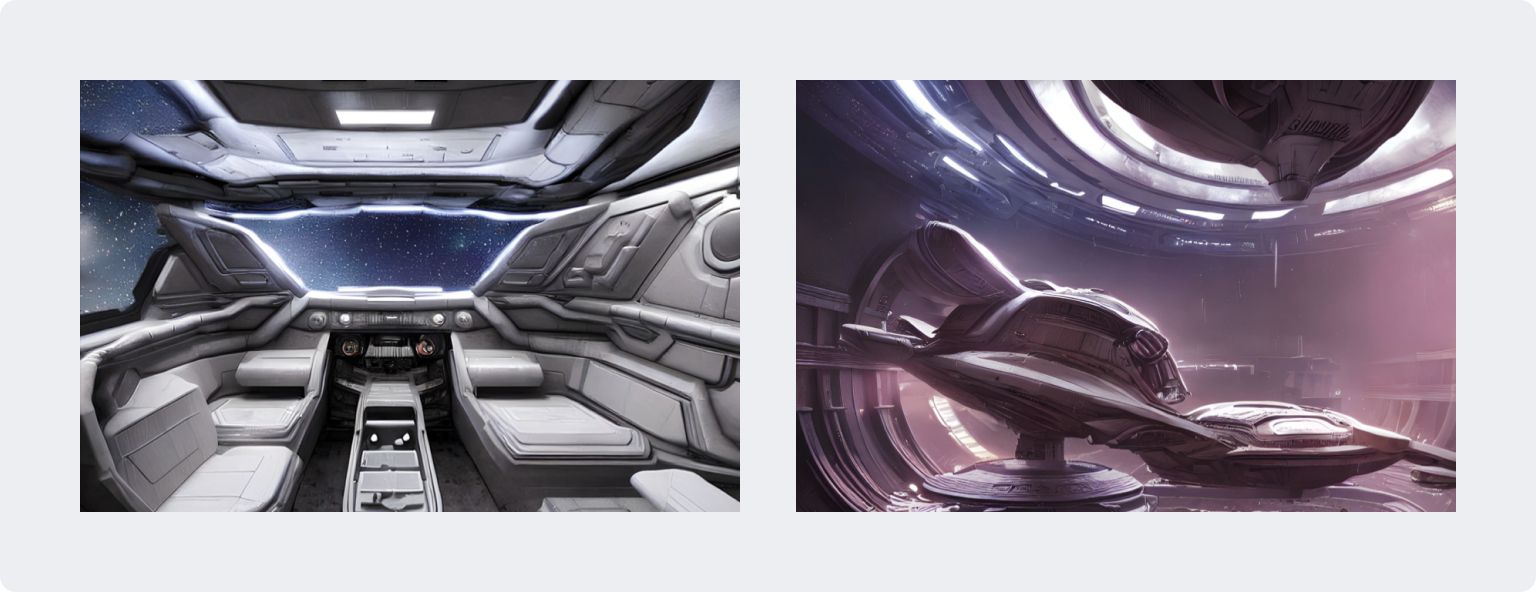

Here you can see the differences between images produced with these keywords and no keywords at all (images are cherry-picked):

Conclusion

Our approach has several limitations. First, genetic algorithms tend to get stuck in local maxima. Second, we need to run the algorithm for more iterations and use more candidate keywords to achieve higher quality. Finally, our approach may not apply to some specific image descriptions, and there might be keywords that work significantly better only on, for example, portraits.

Nevertheless, we share our code and data to allow everyone to continue our experiment.

We believe that human-in-the-loop approaches might significantly advance generative models through better alignment, higher-quality generations, and better performance on specific tasks such as following instructions.

See our paper Best Prompts for Stable Diffusion and How to Find Them if you want to dive deeper into the details.
